The Global Infrastructure of AWS: Powering the Cloud Revolution

Jasai Hansda

In this blog post, we'll explore the foundational elements of AWS's global presence: Regions, Availability Zones, Local Zones, and Edge Locations.

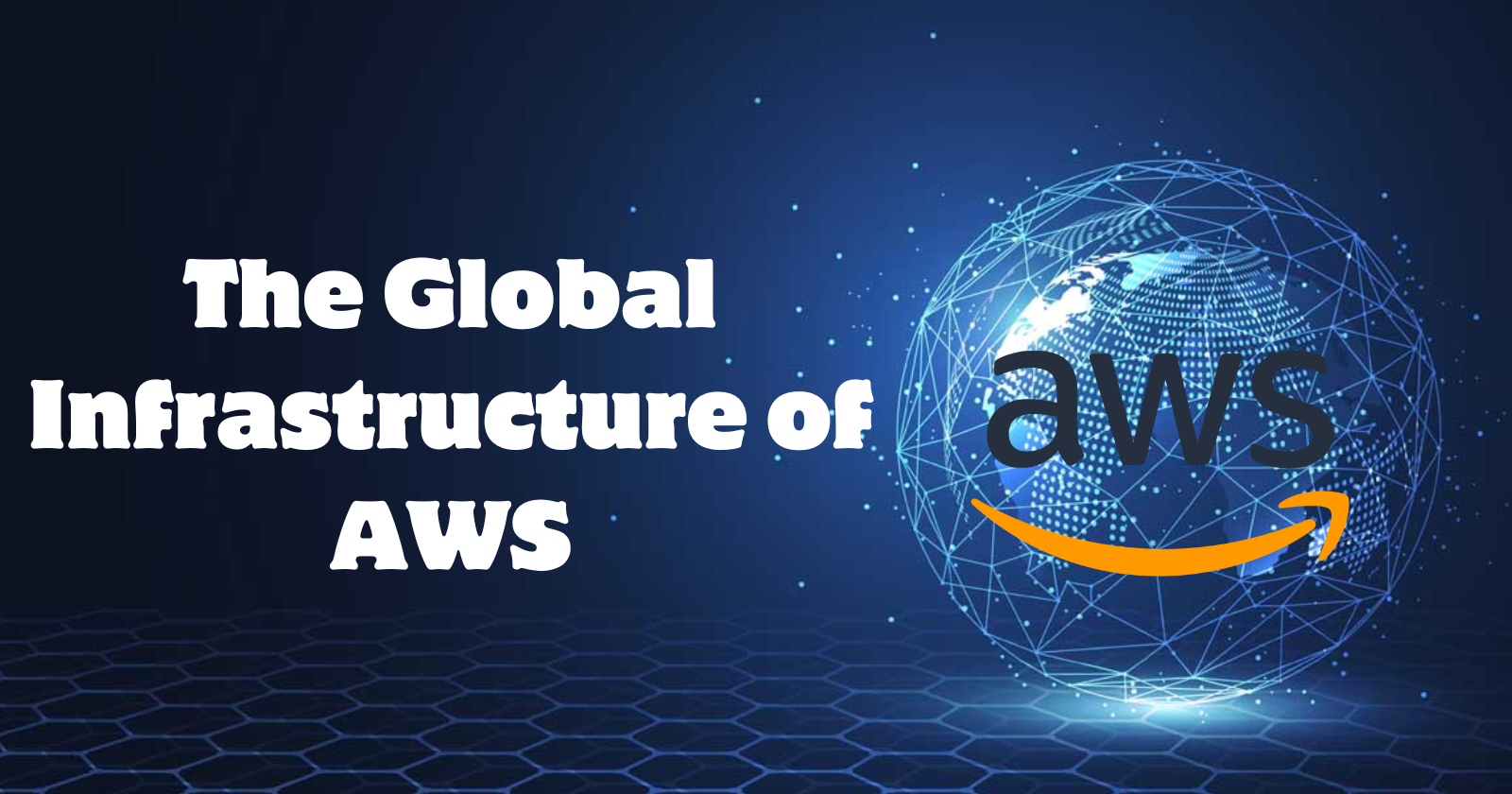

Amazon Web Services (AWS) has revolutionized the way businesses deploy and manage their IT infrastructure. As a global leader in cloud computing, AWS offers a robust and expansive global infrastructure designed to deliver high availability, security, and performance.

AWS Regions: The Foundation of AWS's Global Presence

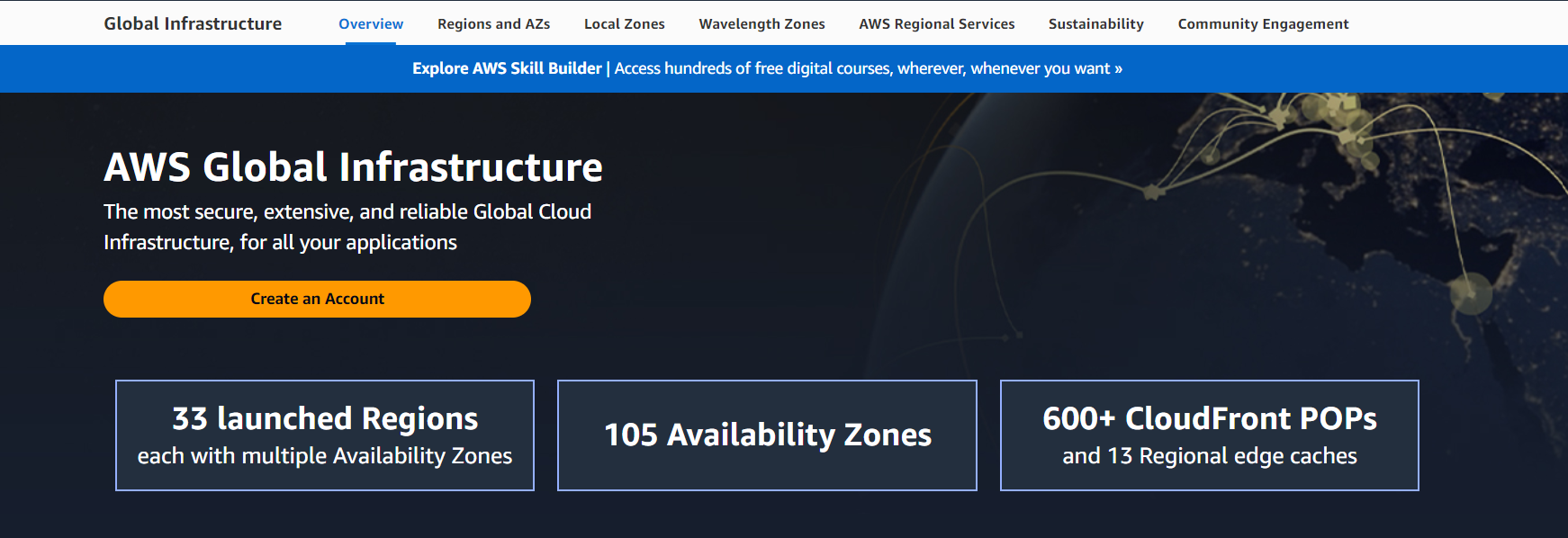

AWS Regions are the primary building blocks of AWS's global infrastructure. Rather than imagining a Region as one giant data center, think of it as a separate geographic area (for example, Mumbai or Northern Virginia) that contains multiple isolated Availability Zones, each made up of one or more data centers. Each Region is largely self-contained and offers a broad set of AWS services like compute, storage, databases, and more. As of May 2024, AWS operates 33 geographic Regions worldwide, with more in the pipeline.

Key Characteristics of AWS Regions:

Geographical Distribution: AWS Regions are distinct areas around the world that let you deploy applications closer to your audience, reducing latency and improving user experience.

Isolation and Security: Each Region is isolated from the other Regions, so an issue in one Region does not spread to the others. This isolation also lets you keep data within the Region you choose, which helps you meet specific data residency and compliance requirements.

Data Sovereignty: Regions allow businesses to comply with data sovereignty laws by choosing a specific geographic location to store and process data.

High Availability and Redundancy: Each Region is further divided into Availability Zones (AZs). Think of an AZ as one or more data centers within a Region with its own independent power, cooling, and networking, so spreading workloads across AZs improves fault tolerance and resilience.

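Broad Set of AWS Services: Regions offer a wide range of AWS services, such as compute, storage, databases, networking, and analytics, so you can typically build and deploy your entire application infrastructure within one Region. Service availability does vary by Region, however (newer Regions often launch with a subset), so confirm that the services you need are offered in the Region you pick.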

Scalability: Regions are designed to be highly scalable, allowing you to easily adjust your resources (compute, storage, etc.) up or down based on your application's needs.

Cost Considerations: AWS service pricing can vary between Regions, reflecting differences in local operating costs. Tools such as the AWS Pricing Calculator can help you estimate expenses for a given Region before deployment.

Choosing the Right Region:

The optimal Region for your application depends on several factors:

Latency: For applications sensitive to response times, like real-time gaming or financial trading, choose a Region closest to your target audience.

Data Residency: For compliance reasons, you might need to choose a Region that aligns with specific data storage regulations.

Disaster Recovery: For robust disaster recovery strategies, consider deploying your application across multiple Regions.
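To make these three factors concrete, here is a minimal Python sketch of a Region shortlist: it first drops any Region that violates a data-residency constraint, then ranks the rest by measured latency, treating the runner-up as a disaster-recovery candidate. The `RegionCandidate` type, the `pick_regions` helper, and the latency figures are hypothetical illustrations. The Region codes `ap-south-1` (Mumbai), `ap-south-2` (Hyderabad), `ap-southeast-1` (Singapore), and `us-east-1` (N. Virginia) are real, but in practice you would measure latency from your own users.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass(frozen=True)
class RegionCandidate:
    """Hypothetical record describing one candidate AWS Region."""
    code: str            # Region code, e.g. "ap-south-1"
    latency_ms: float    # measured round-trip time from your users (illustrative)
    jurisdiction: str    # country whose rules govern data stored there, e.g. "IN"


def pick_regions(candidates: List[RegionCandidate],
                 allowed_jurisdictions: Set[str],
                 count: int = 2) -> List[str]:
    """Hypothetical helper: return up to `count` Region codes, primary first, then DR.

    Data residency is a hard filter; latency only ranks what remains.
    """
    compliant = [c for c in candidates if c.jurisdiction in allowed_jurisdictions]
    if not compliant:
        raise ValueError("no Region satisfies the data-residency constraint")
    ranked = sorted(compliant, key=lambda c: c.latency_ms)
    return [c.code for c in ranked[:count]]


if __name__ == "__main__":
    # Illustrative numbers only; measure from your own user base.
    candidates = [
        RegionCandidate("ap-south-1", 18.0, "IN"),
        RegionCandidate("ap-south-2", 25.0, "IN"),
        RegionCandidate("ap-southeast-1", 60.0, "SG"),
        RegionCandidate("us-east-1", 210.0, "US"),
    ]
    # Data must stay in India: primary plus one DR Region, both Indian.
    print(pick_regions(candidates, {"IN"}))  # ['ap-south-1', 'ap-south-2']
```

Treating residency as a filter rather than a weighted score is deliberate: a compliance requirement is not something a few milliseconds of latency should be able to outweigh.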

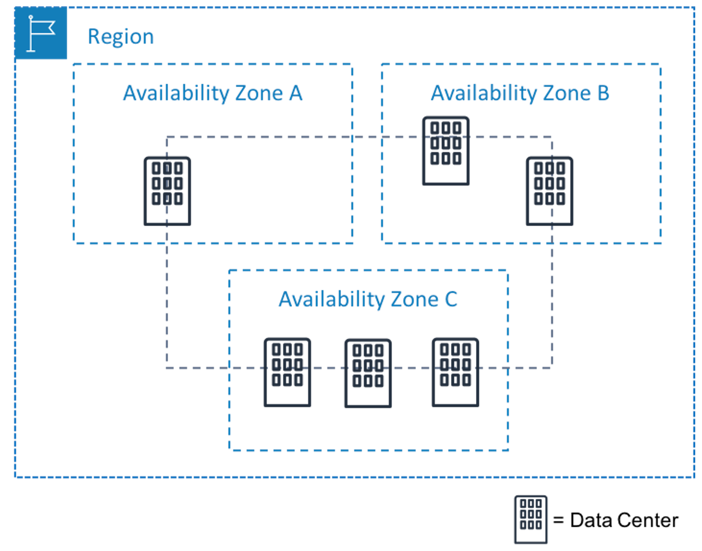

Availability Zones: The Safety Nets Within AWS Regions

Imagine you've built a magnificent castle (your critical application) within the vast kingdom of AWS. While the kingdom itself offers a secure environment, what happens if a fire breaks out in one part of the castle? That's where Availability Zones (AZs) come in: they act as safety nets within an AWS Region.

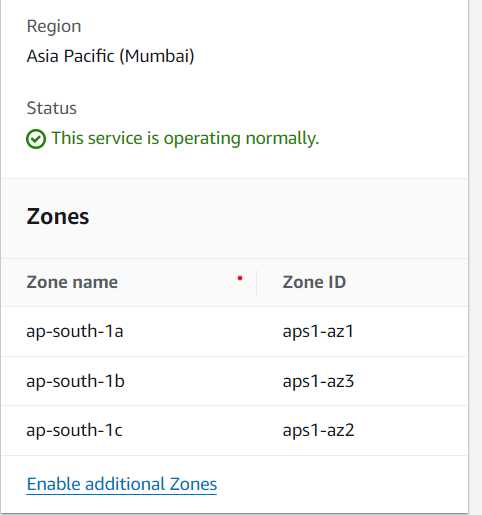

Within each AWS Region, there are multiple Availability Zones (AZs). An Availability Zone consists of one or more discrete data centers, each with redundant power, networking, and connectivity.

Key Characteristics of Availability Zones:

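Physical Separation: Each AZ consists of one or more physically separate data centers with their own power, cooling systems, and network connectivity. The AZs in a Region are separated by a meaningful distance (many kilometers) yet all lie within about 100 km (60 miles) of each other, which minimizes the impact of localized failures on other AZs within the same Region.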

High Availability: By distributing your application across multiple AZs, you achieve redundancy. If the application is architected for it (for example, instances in several AZs behind a load balancer, or a Multi-AZ database), traffic can fail over to a healthy AZ in the same Region when one encounters an issue, minimizing downtime and ensuring service continuity.

Improved Fault Tolerance: AZs enhance fault tolerance by isolating disruptions within a single zone, ensuring your application continues to function in the remaining healthy AZs even if one experiences a hardware failure or localized event.

Reduced Downtime: Compared to relying on a single data center, deploying your application across AZs significantly reduces downtime during disruptions. This translates to a more reliable user experience for your application.

Latency Considerations: AZs within a Region are connected by low-latency, high-bandwidth links, but traffic between AZs is still slightly slower than traffic between instances in the same AZ. This matters most for chatty components that exchange many requests with each other.

Cost Implications: Distributing your application across multiple AZs may cost more than deploying in a single AZ, because you run resources in several locations and data transferred between AZs is generally billed.

Not Completely Isolated: It's important to remember that AZs within a Region are still geographically close, so large-scale natural disasters or regional outages could potentially impact multiple AZs within the same Region.

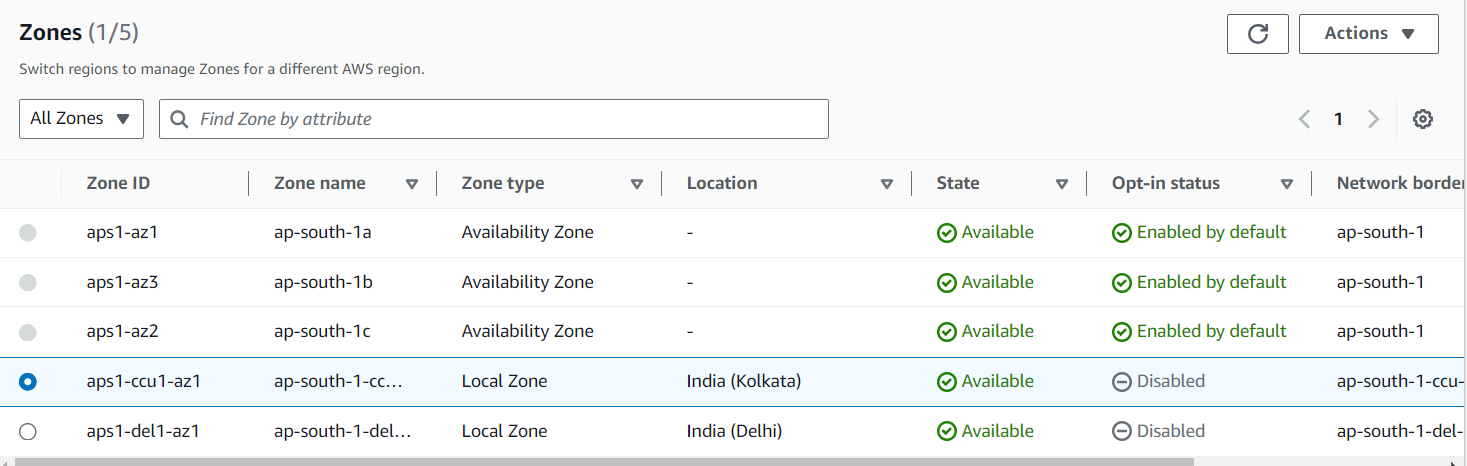

Three Availability Zones in the Mumbai Region
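The availability benefit of spreading across AZs can be estimated with a standard back-of-the-envelope formula: if each AZ is independently available with probability a, a deployment in n AZs stays up as long as at least one AZ is up, giving 1 - (1 - a)^n. The Python sketch below assumes failures are independent and that a single healthy AZ can carry the full load; as noted above, AZs in a Region are not completely isolated, so treat the results as an upper bound. The function names are hypothetical, and the 99% per-AZ figure is purely illustrative, not an AWS SLA number.

```python
MINUTES_PER_YEAR = 365 * 24 * 60


def multi_az_availability(per_az: float, zones: int) -> float:
    """Hypothetical helper: probability that at least one of `zones` AZs is healthy.

    Assumes independent AZ failures and that one AZ can serve all traffic.
    """
    if not 0.0 <= per_az <= 1.0:
        raise ValueError("per_az must be a probability in [0, 1]")
    if zones < 1:
        raise ValueError("zones must be at least 1")
    return 1.0 - (1.0 - per_az) ** zones


def downtime_minutes_per_year(availability: float) -> float:
    """Expected minutes of downtime per 365-day year at a given availability."""
    return (1.0 - availability) * MINUTES_PER_YEAR


if __name__ == "__main__":
    for n in (1, 2, 3):
        a = multi_az_availability(0.99, n)  # illustrative per-AZ availability
        print(f"{n} AZ(s): availability={a:.6f}, "
              f"downtime~{downtime_minutes_per_year(a):.1f} min/year")
```

With these illustrative numbers, one AZ allows roughly 5,256 minutes (about 3.7 days) of downtime a year, two AZs about 53 minutes, and three AZs about half a minute.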

Local Zones: Extending the AWS Cloud Closer to End Users

AWS Local Zones bring AWS services closer to end-users by extending AWS Regions to more locations. They are designed to support latency-sensitive applications that require single-digit millisecond latency.

Key Characteristics of Local Zones:

Ultra-Low Latency: Local Zones place compute, storage, and other select services near major population and industry centers, so applications can reach nearby end users with single-digit millisecond latency. Typical use cases include:

Real-time gaming

Financial transactions

Media processing

Augmented reality/Virtual reality experiences

Extension of a Region: Local Zones are extensions of a parent AWS Region, providing the same security and scalability but with much lower latency for specific workloads.

Limited Service Availability: Unlike Regions that offer a full suite of AWS services, Local Zones provide a more limited selection. Currently, they primarily focus on compute, storage, database, and networking services that benefit most from reduced latency.

Data Residency: Local Zones can be beneficial for adhering to specific data residency requirements. By keeping your data geographically close within a Local Zone, you can potentially address compliance concerns for certain regulations.

Cost Considerations: Utilizing Local Zones may be slightly more expensive than standard Regions due to their specialized low-latency infrastructure and premium locations.

Integration with Parent Region: Local Zones integrate seamlessly with their parent Region, allowing you to manage and access resources using the same familiar AWS tools and APIs.

Not a Replacement for Regions: Local Zones are not a full replacement for traditional AWS Regions and are best for specific ultra-low latency use cases, while Regions are better for general applications due to their broader services and potentially lower costs.

Choosing Local Zones: Use Local Zones when your application needs single-digit millisecond latency to users in a specific metro area; for broader or cost-sensitive deployments, a standard Region is usually the better choice.
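The trade-off in the last two points can be expressed as a small decision rule: prefer a standard Region whenever it meets the latency budget, and fall back to a Local Zone only when the Region cannot. The following Python sketch encodes that rule. The `Placement` type, the `choose_placement` helper, the generic service labels, and all latency numbers are hypothetical. `us-west-2-lax-1a` follows the real naming of the Los Angeles Local Zone attached to the `us-west-2` (Oregon) parent Region, but always confirm which services a given Local Zone actually offers.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, Optional, Set


@dataclass(frozen=True)
class Placement:
    """Hypothetical description of where a latency-sensitive tier could run."""
    name: str                   # Region code or Local Zone name
    latency_ms: float           # expected round trip to your users (illustrative)
    services: FrozenSet[str]    # services confirmed available there (generic labels)
    is_local_zone: bool


def choose_placement(options: Iterable[Placement],
                     latency_budget_ms: float,
                     required_services: Set[str]) -> Optional[Placement]:
    """Hypothetical helper: pick a placement within budget that offers every required service.

    Among eligible options a standard Region is preferred (broader services,
    usually lower cost); within each kind, lower latency wins. None if nothing fits.
    """
    eligible = [
        o for o in options
        if o.latency_ms <= latency_budget_ms and required_services <= o.services
    ]
    if not eligible:
        return None
    # False sorts before True, so Regions come ahead of Local Zones.
    return min(eligible, key=lambda o: (o.is_local_zone, o.latency_ms))


if __name__ == "__main__":
    region = Placement("us-west-2", 30.0,
                       frozenset({"compute", "block-storage", "object-storage", "analytics"}),
                       is_local_zone=False)
    local_zone = Placement("us-west-2-lax-1a", 4.0,
                           frozenset({"compute", "block-storage"}),
                           is_local_zone=True)
    # A real-time game server for Los Angeles players with a 10 ms budget needs the Local Zone...
    print(choose_placement([region, local_zone], 10, {"compute"}).name)
    # ...while a batch job with a relaxed budget stays in the Region.
    print(choose_placement([region, local_zone], 100, {"compute", "analytics"}).name)
```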

Edge Locations: Enhancing Performance with Content Delivery

Edge Locations are part of the AWS content delivery network (CDN) called Amazon CloudFront. They cache copies of content closer to end-users to improve performance and reduce latency.

Key Characteristics of Edge Locations:

Content Delivery Acceleration: Edge Locations act as geographically distributed caches for CloudFront. By storing frequently accessed content (like images, videos, and JavaScript files) closer to end users, they significantly reduce latency and improve delivery speeds, resulting in a faster, more responsive experience for users worldwide.

Global Reach and Scalability: AWS has a vast network of Edge Locations worldwide, ensuring content is cached closer to users and automatically scaling to handle traffic spikes, providing consistent performance even during high usage periods.

Reduced Network Congestion: Serving static content from Edge Locations instead of origin servers greatly reduces the traffic that reaches your origin and shortens the distance requests travel across the internet, which eases congestion and improves overall performance and user experience.

Integration with CloudFront: CloudFront routes each request to the Edge Location that can serve it best, typically the one with the lowest latency for that user. If the requested content is already cached there, it is delivered directly; otherwise CloudFront fetches it from the origin (possibly via a regional edge cache), returns it to the user, and caches it for subsequent requests.

Limited Processing Power: Edge Locations are primarily designed for content caching and delivery. CloudFront can run lightweight request and response logic close to users through CloudFront Functions and Lambda@Edge, but this is not a place to run complex applications.

Security Benefits: Because requests are absorbed by a large, distributed network of Edge Locations rather than hitting your origin servers directly, CloudFront helps mitigate distributed denial-of-service (DDoS) attacks, and AWS Shield Standard is included at no additional charge.

Cost Considerations: While Edge Locations offer significant performance benefits, CloudFront is billed separately (mainly by data transferred out to users and by number of requests), so compare its cost with serving content directly from your origin server.

Choosing Edge Locations: For applications or websites that depend heavily on static content delivery, using CloudFront with Edge Locations is highly recommended, but for those needing complex processing or cost-sensitive deployments, using your origin server directly might be sufficient.
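To illustrate the hit-or-miss behavior described under "Integration with CloudFront", here is a deliberately simplified Python model of a single Edge Location cache with a fixed time-to-live (TTL). It is not how CloudFront is implemented: real TTLs come from your distribution's cache policy and the origin's `Cache-Control` headers, and misses may be served through a regional edge cache. The `EdgeCache` class and the origin function are hypothetical; the injectable clock exists only so the expiry logic can be tested.

```python
import time
from typing import Callable, Dict, Tuple


class EdgeCache:
    """Hypothetical toy model of one edge location: serve hits, fetch misses from origin."""

    def __init__(self, fetch_from_origin: Callable[[str], bytes], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self._fetch = fetch_from_origin
        self._ttl = ttl_seconds
        self._clock = clock
        self._store: Dict[str, Tuple[bytes, float]] = {}  # path -> (body, expires_at)
        self.hits = 0
        self.misses = 0

    def get(self, path: str) -> bytes:
        now = self._clock()
        entry = self._store.get(path)
        if entry is not None and entry[1] > now:
            # Fresh copy at the edge: no trip to the origin.
            self.hits += 1
            return entry[0]
        # Miss or expired entry: fetch from the origin and cache the fresh copy.
        self.misses += 1
        body = self._fetch(path)
        self._store[path] = (body, now + self._ttl)
        return body


if __name__ == "__main__":
    def origin(path: str) -> bytes:
        print(f"origin fetch: {path}")
        return b"<bytes of " + path.encode() + b">"

    edge = EdgeCache(origin, ttl_seconds=60)
    edge.get("/img/logo.png")   # miss: fetched from origin and cached
    edge.get("/img/logo.png")   # hit: served from the edge
    print(f"hits={edge.hits} misses={edge.misses}")
```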

CloudFront: The Speed Demon of Content Delivery on AWS

CloudFront is a powerful content delivery network (CDN) service offered by Amazon Web Services (AWS). It uses a global network of servers strategically placed around the world to accelerate the delivery of your content to users, especially static assets like images, videos, and JavaScript files, though it can also serve dynamic content and APIs.

Here's how CloudFront brings speed to your applications:

Edge Locations: CloudFront leverages a massive network of Edge Locations, which are geographically distributed points of presence. These Edge Locations act as caches, storing frequently accessed content closer to end users.

Reduced Latency: By delivering content from the nearest Edge Location, CloudFront significantly reduces latency (the time it takes for data to travel). This translates to faster loading times and a more responsive experience for users worldwide.

Improved User Experience: Faster loading times keep users engaged and happy. CloudFront helps ensure a smooth and seamless experience for users accessing your website or application.
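The "Reduced Latency" point can be quantified with a simple weighted average. If a fraction h of requests are cache hits served in the user-to-edge round-trip time t_edge, and each miss additionally pays the edge-to-origin fetch time t_origin, the expected response time is h * t_edge + (1 - h) * (t_edge + t_origin) = t_edge + (1 - h) * t_origin. The Python sketch below computes this along with the number of requests that still reach your origin; the function names, hit ratios, and millisecond figures are hypothetical.

```python
def expected_latency_ms(hit_ratio: float, edge_rtt_ms: float, origin_fetch_ms: float) -> float:
    """Hypothetical helper: average response time when `hit_ratio` of requests hit the edge cache.

    hit:  edge_rtt_ms
    miss: edge_rtt_ms + origin_fetch_ms
    """
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be in [0, 1]")
    return edge_rtt_ms + (1.0 - hit_ratio) * origin_fetch_ms


def origin_requests(total_requests: int, hit_ratio: float) -> float:
    """Hypothetical helper: expected number of requests that miss and reach the origin."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be in [0, 1]")
    return total_requests * (1.0 - hit_ratio)


if __name__ == "__main__":
    # Illustrative: 20 ms to the nearest edge, 180 ms extra to reach a distant origin.
    for h in (0.0, 0.5, 0.9, 0.99):
        print(f"hit ratio {h:.2f}: ~{expected_latency_ms(h, 20, 180):.0f} ms average, "
              f"{origin_requests(1_000_000, h):,.0f} of 1,000,000 requests reach the origin")
```

With these numbers, a 90% hit ratio brings the average from 200 ms (nothing cached) down to about 38 ms and cuts origin traffic tenfold, which is why the benefit grows with the hit ratio.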

Is CloudFront Right for You?

CloudFront is an excellent choice for websites and applications that rely heavily on static content delivery. Here are some scenarios where CloudFront shines:

Websites with Global Audiences: If your website has users worldwide, CloudFront can ensure fast loading times regardless of their location.

Media-Rich Applications: Websites or applications that use a lot of images, videos, or other static content can benefit significantly from CloudFront's caching capabilities.

E-commerce Platforms: Fast loading times are crucial for a positive user experience on e-commerce platforms. CloudFront can help keep your product pages loading quickly.

Conclusion

AWS's global infrastructure of Regions, Availability Zones, Local Zones, and Edge Locations underpins all of its cloud services. This setup allows businesses to deploy applications worldwide with high availability, low latency, and compliance with local regulations. As AWS grows, customers can expect more Regions and Edge Locations, further extending the reach of the AWS cloud.

Whether you're a startup aiming to scale quickly or an enterprise seeking to modernize your IT infrastructure, AWS's global infrastructure offers the reliability, performance, and security needed to succeed in today's digital world.

Written by

Jasai Hansda

Software Engineer (2 years) | In-transition to DevOps. Passionate about building and deploying software efficiently. Eager to leverage my development background in the DevOps and cloud computing world. Open to new opportunities!