Simple, Internet-Addressable, Distributed Storage

Kirk Haines

The Background History

Most good stories start at the beginning, and so does this one. However, if you're eager to dive into the code, feel free to scroll down to the Let's Build It section. For everyone else, here's the tale leading up to the code.

The year 2006 was eventful. Italy won the World Cup, Google acquired YouTube for $1.65 billion, and a Northern Bottlenose Whale ended up in the River Thames. Notably, Amazon also released S3.

Before S3, developers faced significant challenges in storing data that was reachable from the internet and protected by access controls. They either had to use something like NFS or undertake a massive engineering project to build their own storage solution. This was a tough problem.

With the release of S3, anyone could create an account and use an API to store any data. Amazon took care of the infrastructure, ensuring data security and availability. This transformed a difficult problem into an easy one. Developers could now focus on building products without worrying about storage issues.

And many did. Netflix uses S3 for its vast library of streaming content. Airbnb stores user-uploaded property content on S3. Pinterest and Dropbox also leverage S3 for user-uploaded content. The list goes on.

Despite S3's utility, innovation in internet-addressable storage didn't stop. In 2015, Protocol Labs released IPFS (InterPlanetary File System), a decentralized file storage solution. Unlike S3's centralized model, IPFS allows anyone to participate in the storage network. Content is addressed by its CID (Content Identifier), which is derived from a cryptographic hash of the content (SHA2-256 by default), so identical content always has the same CID. As long as at least one IPFS node has pinned the content, it can be retrieved. However, IPFS lacks flexible authorization controls: anyone who knows a CID can fetch the content behind it.
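To make content addressing concrete, here is a minimal sketch that computes a CIDv1 for a single raw block of bytes using only Node's built-in crypto module. It hashes the bytes with SHA2-256, prefixes the CID version (1), the multicodec code for raw bytes (0x55) and the multihash header for SHA2-256 (0x12, 0x20), then encodes the result as lowercase base32 with the multibase prefix `b`. The helper names `rawCidV1` and `base32Lower` are hypothetical. Real uploads of files and directories are chunked into UnixFS DAGs, so their CIDs (the `bafy...` ones) will differ from what this produces; the sketch only covers the single-raw-block case.

```javascript
import { createHash } from 'node:crypto';

// RFC 4648 base32 alphabet, lowercase, as used by the multibase prefix "b".
const BASE32_ALPHABET = 'abcdefghijklmnopqrstuvwxyz234567';

// Encode bytes as unpadded lowercase base32, most significant bit first.
function base32Lower(bytes) {
  let out = '';
  let buffer = 0; // holds bits that have not been emitted yet
  let bits = 0;   // how many of those bits are pending
  for (const byte of bytes) {
    buffer = ((buffer << 8) | byte) & 0xffff; // only the low `bits` bits matter
    bits += 8;
    while (bits >= 5) {
      out += BASE32_ALPHABET[(buffer >>> (bits - 5)) & 31];
      bits -= 5;
    }
  }
  if (bits > 0) {
    // Left-align the leftover bits into one final 5-bit group.
    out += BASE32_ALPHABET[(buffer << (5 - bits)) & 31];
  }
  return out;
}

// Hypothetical helper: CIDv1 for one raw block (not a chunked UnixFS file).
function rawCidV1(content) {
  const digest = createHash('sha256').update(content).digest(); // 32 bytes
  const cidBytes = Buffer.concat([
    Buffer.from([0x01, 0x55]), // CID version 1, multicodec 0x55 = raw bytes
    Buffer.from([0x12, 0x20]), // multihash header: 0x12 = sha2-256, 0x20 = 32-byte digest
    digest,
  ]);
  return 'b' + base32Lower(cidBytes); // multibase prefix "b" = base32 lowercase
}

console.log(rawCidV1(Buffer.from('hello world')));
console.log(rawCidV1(Buffer.from('hello world!'))); // one extra byte, unrelated CID
```

Changing a single byte of input produces an unrelated CID, which is what lets any node verify that the bytes it serves match the identifier that was requested.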

The evolution continued. In 2017, Protocol Labs introduced Filecoin, an incentive layer for IPFS. Filecoin turns storage and retrieval into a market: two parties agree on a storage deal, one providing data and the other providing storage services, with value exchanged through the Filecoin token. Filecoin stores billions of gigabytes of data, but it is only practical for large chunks of data, because deals are sealed into 32 GiB or 64 GiB sectors. That makes it a complement to IPFS rather than a replacement for it.

In 2021, Protocol Labs launched web3.storage, combining these elements with UCANs (User Controlled Authorization Networks) to create a compelling storage product for developers. Web3.storage offers APIs in JavaScript and Go, a command-line interface, and a web-based console. It uses IPFS for fast, decentralized access, Filecoin for long-term archival, and UCANs for flexible authorization. This integration hides complexity from developers, allowing them to focus on building.

Let's Build It!

The goal is to create a simple command-line tool that, given an account, space ID, and URL, scrapes the page and stores a copy in the specified space, returning a URL for access. This tutorial uses JavaScript and assumes you have a web3.storage account. If not, follow the Quickstart guide to create one.

The Simple Approach

I'd be remiss not to point out that what we're about to build is more complex than it needs to be if all you want is to copy some web pages and archive them in web3.storage. You can do all of that from the command line.

First, you need a tool that can scrape the content to your local system, and wget is perfect for this. On Ubuntu, you can install it with sudo apt install wget. On a Mac with Homebrew, you can run brew install wget. On Windows, you will need to download a prebuilt wget binary.

To mirror content, run:

```shell
wget --mirror --convert-links --adjust-extension --page-requisites --no-parent https://http.cat
```

This creates a local copy of the https://http.cat site in a local directory named http.cat.

To upload this to web3.storage, use its command-line utility, w3. Follow the Quickstart guide to install it and to create your account, if you have not already done so.

Now run:

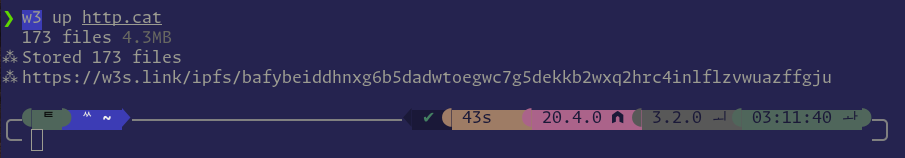

w3 up http.cat

It will look something like this:

The returned URL can be accessed in your browser, displaying the archived site.

The command-line tool is powerful and provides access to a wide variety of capabilities for interacting with web3.storage. However, there is also a fully featured JavaScript API, which allows developers to build applications for anything they might dream of doing on top of this powerful decentralized storage solution. So let's build a tool that can archive a web page in one step. The complete code for the solution can be found at https://github.com/wyhaines/w3s-page-dup.

The Web Page Duplicator

The page scraper portion of this utility is somewhat orthogonal to the overall purpose of this post, so this overview will be kept brief.

To make HTTP requests, we will use axios, a ubiquitous HTTP client for JavaScript.

The fetched page content has to be searched for other required assets -- images, CSS, and JavaScript files -- so we need a tool that can help with this. For this code, that tool is cheerio, which parses HTML and lets us query it with jQuery-style selectors.

Finally, we use files-from-path, which turns a list of filesystem paths into file objects that the web3.storage client can upload.

The Scraper

The scraper implementation shown below can also be viewed on GitHub: https://github.com/wyhaines/w3s-page-dup/blob/main/w3s-page-dup.mjs#L1-L88

```javascript
import axios from 'axios';
// cheerio has no default export in current releases, so import the namespace.
import * as cheerio from 'cheerio';
import path from 'path';
import { promises as fs } from 'fs';
import { create } from '@web3-storage/w3up-client';
import { filesFromPaths } from 'files-from-path';

// The rewritten HTML and all downloaded assets are written to this directory.
const assetBase = path.join(process.cwd(), 'assets');

/**
 * Scrapes a webpage and downloads all its assets (CSS, JS, images).
 * @param {string} url - The URL of the webpage to scrape.
 * @returns {Promise<string[]>} - A promise that resolves to an array of file paths.
 */
export async function scrapePage(url) {
  try {
    const { data } = await axios.get(url);
    const $ = cheerio.load(data);

    // Collect all CSS, JS, and image assets so that they can be downloaded.
    const assets = [];
    $('link[rel="stylesheet"], script[src], img[src]').each((i, element) => {
      const assetUrl = $(element).attr('href') || $(element).attr('src');
      if (assetUrl) {
        // Resolve relative references against the page URL.
        const absoluteUrl = new URL(assetUrl, url).href;
        assets.push({ element, assetUrl: absoluteUrl });
      }
    });

    const assetPaths = await downloadAssets(assets, $);

    // Make sure the directory exists even if the page had no assets.
    await ensureDirectoryExists(assetBase);
    const modifiedHtmlPath = path.join(assetBase, 'index.html');
    await fs.writeFile(modifiedHtmlPath, $.html());

    return [modifiedHtmlPath, ...assetPaths];
  } catch (error) {
    console.error('Error scraping the page:', error);
    return [];
  }
}

/**
 * Downloads assets and saves them locally.
 * @param {Array} assets - An array of asset objects to download.
 * @param {Object} $ - The Cheerio instance.
 * @returns {Promise<string[]>} - A promise that resolves to an array of asset file paths.
 */
export async function downloadAssets(assets, $) {
  const assetPaths = [];
  for (const { element, assetUrl } of assets) {
    try {
      console.log(`Fetching: ${assetUrl}`);
      const response = await axios.get(assetUrl, { responseType: 'arraybuffer' });

      // Name the local copy after the last path segment, ignoring any query string.
      const fileName = path.basename(new URL(assetUrl).pathname);
      const assetPath = path.join(assetBase, fileName);
      await ensureDirectoryExists(path.dirname(assetPath));
      await fs.writeFile(assetPath, response.data);
      assetPaths.push(assetPath);

      // Point the element at the local copy. index.html is saved in the same
      // directory as its assets, so the bare file name is the correct relative path.
      const attribute = element.tagName === 'link' ? 'href' : 'src';
      $(element).attr(attribute, fileName);
    } catch (error) {
      console.error(`Failed to download asset: ${assetUrl}`, error);
    }
  }
  return assetPaths;
}

/**
 * Ensures that a directory exists, creating it if necessary.
 * @param {string} dir - The directory path.
 */
export async function ensureDirectoryExists(dir) {
  await fs.mkdir(dir, { recursive: true });
}

/**
 * Clear the assetBase directory of old files.
 */
export async function clearAssets() {
  try {
    // fs.rmdir's recursive option is deprecated; fs.rm with force also
    // succeeds when the directory does not exist yet.
    await fs.rm(assetBase, { recursive: true, force: true });
    await fs.mkdir(assetBase, { recursive: true });
  } catch (error) {
    console.error('Error clearing assets:', error);
  }
}
```

Within scrapePage, the web page is retrieved, loaded into cheerio, and searched for every link to a stylesheet, script file, or image. The resulting list of asset URLs is passed to downloadAssets, which downloads each one and rewrites the corresponding element to point at the local copy.
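The URL handling in scrapePage and downloadAssets relies on the WHATWG URL class, which Node provides as a global. The sketch below isolates that step as a pure function, using a hypothetical name, localAssetFor: it resolves an asset reference against the page URL, the same way new URL(assetUrl, url) does in scrapePage, and derives a local file name from the URL's path so that query strings such as ?v=2 do not end up in file names.

```javascript
import path from 'node:path';

// Hypothetical helper: resolve an asset reference found in a page
// and pick a local file name for the downloaded copy.
function localAssetFor(reference, pageUrl) {
  // Relative ("img/cat.jpg"), root-relative ("/img/cat.jpg") and protocol-relative
  // ("//cdn.example.com/cat.jpg") references all resolve against the page URL.
  const absolute = new URL(reference, pageUrl);
  // Keep only the last path segment; the query string and fragment are dropped.
  const fileName = path.posix.basename(absolute.pathname);
  return { absoluteUrl: absolute.href, fileName };
}

console.log(localAssetFor('img/cat.jpg?v=2', 'https://http.cat/status/'));
// absoluteUrl: 'https://http.cat/status/img/cat.jpg?v=2', fileName: 'cat.jpg'
console.log(localAssetFor('//cdn.example.com/css/site.css', 'https://http.cat/'));
// absoluteUrl: 'https://cdn.example.com/css/site.css', fileName: 'site.css'
```

Because downloadAssets names each file after the last segment of its URL, two assets with the same name in different directories (say a/logo.png and b/logo.png) would overwrite each other; that is one of the trade-offs of keeping the scraper simple.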

This implementation is naive. It doesn't spider an entire website, and it has no code for many of the more complex cases that show up in real web pages, such as assets referenced from inside stylesheets, responsive images declared with srcset, or resources loaded dynamically by JavaScript. It will suffice for illustration purposes, though, and it works well enough for the HTML that makes up https://http.cat.
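One case the scraper does not handle is assets referenced from inside stylesheets, such as background images and web fonts loaded with url(...). Below is a minimal sketch of how those references could be found, under a hypothetical name, cssAssetUrls. It uses a regular expression rather than a real CSS parser, skips data: URIs, and resolves each reference against the stylesheet's own URL, since CSS url() values are relative to the stylesheet rather than the page. The regex will miss unusual cases such as escaped characters or @import rules written without url().

```javascript
// Hypothetical helper: find url(...) references in a stylesheet and make them absolute.
function cssAssetUrls(cssText, stylesheetUrl) {
  const urls = [];
  // Matches url(foo.png), url('foo.png') and url("foo.png"); group 2 is the reference.
  const pattern = /url\(\s*(['"]?)(.*?)\1\s*\)/g;
  for (const match of cssText.matchAll(pattern)) {
    const reference = match[2].trim();
    // Inline data: URIs are already self-contained, so there is nothing to download.
    if (reference === '' || reference.startsWith('data:')) continue;
    // url() values resolve relative to the stylesheet's location.
    urls.push(new URL(reference, stylesheetUrl).href);
  }
  return urls;
}

const css = `
  body { background: url('img/bg.png') no-repeat; }
  @font-face { font-family: Cat; src: url(../fonts/cat.woff2) format('woff2'); }
  .dot { background: url(data:image/gif;base64,R0lGODlhAQABAAAAACw=); }
`;
console.log(cssAssetUrls(css, 'https://example.com/css/site.css'));
// [ 'https://example.com/css/img/bg.png', 'https://example.com/fonts/cat.woff2' ]
```

The returned URLs could be fed through the same download step as the other assets, with the stylesheet's text rewritten to point at the local copies.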

Once the scraper has finished running, there will be a subdirectory, assets/, inside the current working directory. It contains a localized copy of the web page that was just scraped.

The Command Line

Below is a very simple command-line parser for our tool. It checks that the file is being run directly (so that we can more easily write specs for this code later, if we want to), and then checks that the required information has been passed. If it hasn't, a short usage summary is printed.

Finally, if everything is ready to go, the page is scraped and the results are uploaded.
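If the tool grows beyond three positional arguments, Node's built-in util.parseArgs (available since Node 18.3) can take over some of this checking. The sketch below is one possible alternative, not the code from the repository: the function name parseCommandLine is hypothetical, and it returns an error message instead of calling process.exit so that it can be tested in isolation. It also rejects URLs that are not http or https before any scraping starts.

```javascript
import { parseArgs } from 'node:util';

const USAGE = 'Usage: node w3s-page-dup.mjs <email> <space> <url>';

// Hypothetical helper: validate the three positional arguments.
// Returns { email, space, url } on success, or { error } with a message to print.
function parseCommandLine(args) {
  let positionals;
  try {
    // strict mode (the default) rejects any --flag, since no options are declared.
    ({ positionals } = parseArgs({ args, options: {}, allowPositionals: true }));
  } catch (err) {
    return { error: `${err.message}\n${USAGE}` };
  }

  const [email, space, url] = positionals;
  if (!email) return { error: `Please provide your email address.\n${USAGE}` };
  if (!space) return { error: `Please provide a space key for Web3 Storage.\n${USAGE}` };
  if (!url) return { error: `Please provide the URL of a page to duplicate.\n${USAGE}` };

  // Reject malformed or non-HTTP(S) URLs up front.
  let parsed;
  try {
    parsed = new URL(url);
  } catch {
    return { error: `Not a valid URL: ${url}\n${USAGE}` };
  }
  if (parsed.protocol !== 'http:' && parsed.protocol !== 'https:') {
    return { error: `Only http and https URLs are supported: ${url}\n${USAGE}` };
  }
  return { email, space, url: parsed.href };
}
```

In the script itself, this would be called as parseCommandLine(process.argv.slice(2)), printing error and exiting with a non-zero status whenever it is set.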

https://github.com/wyhaines/w3s-page-dup/blob/main/w3s-page-dup.mjs#L110-L141

```javascript
// pathToFileURL belongs with the other imports at the top of the module.
import { pathToFileURL } from 'url';

/**
 * If the script is run directly, scrape a page and upload it to Web3 Storage.
 */
// pathToFileURL builds the same file:// URL form as import.meta.url, including on Windows.
if (import.meta.url === pathToFileURL(process.argv[1]).href) {
  (async () => {
    const email = process.argv[2]
    const space = process.argv[3]
    const url = process.argv[4]

    if (!email) {
      console.error("Please provide your email address.\nUsage: node w3s-page-dup.mjs my-email@provider.com <space> <url>")
      process.exit(1)
    }

    if (!space) {
      console.error("Please provide a space key for Web3 Storage.\nUsage: node w3s-page-dup.mjs <email> <space> <url>")
      process.exit(1)
    }

    if (!url) {
      console.error("Please provide the URL of a page to duplicate.\nUsage: node w3s-page-dup.mjs <email> <space> <url>")
      process.exit(1)
    }

    const files = await scrapePage(url)
    if (files.length > 0) {
      const web3StorageUrl = await uploadToWeb3Storage(files, email, space)
      if (web3StorageUrl) {
        console.log('Access the copied page at:', web3StorageUrl)
      }
    }
  })()
}
```

The Uploader

Now that there is a copy of the web page, let's see what is involved in uploading it to web3.storage. We will go through it line by line; the full code can be seen on GitHub at https://github.com/wyhaines/w3s-page-dup/blob/main/w3s-page-dup.mjs#L90-L110.

```javascript
export async function uploadToWeb3Storage(files, email, key) {
```

To successfully perform an upload, three things are needed:

- a list of paths for the files to upload
- the email address of the account to use for the upload
- the key of the space to upload the files into

```javascript
  const client = await create()
```

This creates an instance of the web3.storage client.

```javascript
  await client.login(email)
```

Next, log the client into the account identified by the email address given on the command line. You may have to complete the login in your browser by following the link in the confirmation email that web3.storage sends.

```javascript
  await client.setCurrentSpace(`did:key:${key}`)
```

To upload something, the client must be told which space to store the data in. This, too, is provided on the command line, and it should be the ID of a space that has already been created, either in the console or with the w3 command-line tool.
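The code prepends did:key: to whatever is passed on the command line, so it expects only the part of the space ID after that prefix. Space IDs are usually displayed as full did:key:... strings, so a small normalization step could accept either form and avoid accidentally producing did:key:did:key:.... The helper below, toSpaceDid, is a hypothetical addition, not part of the original tool.

```javascript
// Hypothetical helper: accept either "z6Mk..." or "did:key:z6Mk..." and return the full DID.
function toSpaceDid(spaceArg) {
  const value = spaceArg.trim();
  if (value === '') throw new Error('Space ID must not be empty');
  return value.startsWith('did:key:') ? value : `did:key:${value}`;
}

// In the uploader, this would replace the template string:
// await client.setCurrentSpace(toSpaceDid(key))
```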

```javascript
  const filesToUpload = await filesFromPaths(files)
```

This line doesn't use the web3.storage API, but it is essential: filesFromPaths reads each of the given paths and returns a list of file objects that can be passed to the API for upload.

```javascript
  const directoryCid = await client.uploadDirectory(filesToUpload)
```

Here is the heart of the operation. The API provides a couple of different functions for uploading: uploadFile, which uploads a single file, and uploadDirectory, which uploads multiple files.

While the files for the duplicated page could be uploaded either way, there are some semantic differences. When files are uploaded individually, each one ends up with its own CID. If we uploaded our web page archive that way, we would have to rewrite every link inside the HTML to point at the IPFS URL of the corresponding file, and that level of bookkeeping quickly becomes onerous.

The alternative is to upload all of the files at the same time, using uploadDirectory. This approach puts all of the files under a single IPFS directory, which lets the web page use relative links to the assets it requires. For our purposes here, this is much simpler.
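Because uploadDirectory keeps the relative layout of the files, every relative link in index.html has to match the name of a file in the upload. The sketch below, a hypothetical findMissingRefs helper that uses only the WHATWG URL class, checks that before uploading. It assumes the file names are given relative to the root of the uploaded directory, resolves each reference the way a browser would relative to index.html, and reports any that do not correspond to an uploaded file. Links to other sites and data: URIs are ignored. The placeholder origin https://archive.invalid/ is only used for resolving paths and is never contacted.

```javascript
// Placeholder origin used only to resolve relative paths; it is never fetched.
const BASE = 'https://archive.invalid/';

// Hypothetical helper: given the references found in an HTML page and the names of
// the files in the upload (relative to the directory root), list the references
// that would not resolve to any uploaded file.
function findMissingRefs(references, fileNames, pageName = 'index.html') {
  const pageUrl = new URL(pageName, BASE);
  const available = new Set(fileNames.map((name) => new URL(name, BASE).pathname));
  return references.filter((ref) => {
    const target = new URL(ref, pageUrl);
    // Absolute links to other sites (and data: URIs) are not part of the upload.
    if (target.origin !== pageUrl.origin) return false;
    return !available.has(target.pathname);
  });
}

const uploaded = ['index.html', 'style.css', 'cat.jpg'];
const refs = ['style.css', 'assets/cat.jpg', 'https://http.cat/404.jpg', '#top'];
console.log(findMissingRefs(refs, uploaded)); // [ 'assets/cat.jpg' ]
```

Running a check like this against the scraper's output is a cheap way to catch a link that points at a path missing from the uploaded directory before the upload happens.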

Also notice that uploadDirectory returns an IPFS directory CID. When the await on client.uploadDirectory completes, the upload of our archived web page should be finished. The returned CID is then used in the code that follows to build the URL for accessing the new archive.

```javascript
  console.log('Uploaded directory CID:', directoryCid)
  return `https://${directoryCid}.ipfs.dweb.link`
}
```

The archive is addressable through the returned CID.
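The subdomain-style URL returned above works because the CID is a lowercase base32 CIDv1 (the bafy... form): it has to fit in a single case-insensitive DNS label of at most 63 characters. Older CIDv0 identifiers (Qm...) can only be used with path-style gateway URLs. Below is a sketch of a hypothetical gatewayUrls helper that builds both styles. dweb.link is the gateway used in the code above; w3s.link, web3.storage's own gateway, should accept the same URL shapes, but treat that as an assumption to verify.

```javascript
// Hypothetical helper: build gateway URLs for a CID (a string, or an object with toString()).
function gatewayUrls(cid, gatewayHost = 'dweb.link') {
  const id = String(cid);
  const urls = { path: `https://${gatewayHost}/ipfs/${id}` };
  // Subdomain URLs need a DNS-safe label: a lowercase base32 CIDv1 ("b" prefix)
  // that is no longer than 63 characters.
  if (/^b[a-z2-7]+$/.test(id) && id.length <= 63) {
    urls.subdomain = `https://${id}.ipfs.${gatewayHost}`;
  }
  return urls;
}

console.log(gatewayUrls('bafybeigdyrzt5sfp7udm7hu76uh7y26nf3efuylqabf3oclgtqy55fbzdi'));
console.log(gatewayUrls('QmYwAPJzv5CZsnA625s3Xf2nemtYgPpHdWEz79ojWnPbdG', 'w3s.link'));
```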

Conclusion

From the advent of S3 in 2006 to the innovative developments of IPFS, Filecoin, and web3.storage, internet-addressable storage has continually evolved to address the varying needs of developers and users alike. Today, web3.storage provides developers with an easy-to-use decentralized storage solution for their applications, freeing them to focus on building impactful products without worrying deeply about the management or implementation of a storage solution. The result -- files stored on a reliable, distributed network and backed by Filecoin storage deals -- is just a CLI command or API call away.
