Deep Learning Basics: A Clear Overview

Spheron Network

Spheron Network

In the rapidly evolving landscape of technology, one concept stands out as a game-changer: deep learning. This powerful subset of machine learning is revolutionizing the way we approach complex tasks and solve intricate problems. At its core, deep learning is a technique that enables computers to learn from data and mimic human intelligence, much like how we learn from experiences.

Imagine teaching a computer to recognize cats. Instead of providing it with a set of rules to identify whiskers, ears, and tails, you present it with thousands of images of cats. The computer then finds the common patterns and features all by itself, ultimately learning how to identify a cat. This is the essence of deep learning – an approach that empowers machines to discern patterns and make decisions without explicit programming.

Deep learning is built upon the foundation of neural networks, computational models inspired by the human brain. These networks consist of interconnected nodes, or "neurons," that process information and make decisions, much like the different regions of our brain handle specific tasks. However, what sets deep learning apart is the depth of these networks – they have multiple layers between the input and output, allowing them to learn increasingly complex features and make more accurate predictions.

The Evolution of Machine Learning to Deep Learning

What is Machine Learning?

Machine learning is a subset of artificial intelligence (AI) that enables computers to learn from data and make decisions without explicit programming. It encompasses various techniques and algorithms that allow systems to recognize patterns, make predictions, and improve performance over time. You can explore the difference between machine learning and AI in a separate article.

How Deep Learning Differs from Traditional Machine Learning

While machine learning has been transformative, deep learning advances it further by automating many tasks that typically require human expertise. Deep learning is essentially a specialized subset of machine learning, characterized by its use of neural networks with three or more layers. These networks attempt to simulate the human brain’s behavior to "learn" from large amounts of data.

| Criteria | Traditional Machine Learning | Deep Learning |

| Data Size | Generally works well with small to medium-sized datasets. | Requires large amounts of data for training. |

| Feature Engineering | Features must be manually extracted and selected by domain experts. | Can automatically learn features through multiple layers of computation. |

| Model Complexity | Typically has simpler models, e.g., linear regression or decision trees. | Has more complex models, such as neural networks with many hidden layers. |

| Training Time | Shorter training time due to fewer parameters to optimize. | Longer training time due to larger number of parameters and computations. |

| Interpretability | Models are often interpretable and explainable. | Models can be "black boxes," making it difficult to understand how they make predictions. |

| Hardware Requirements | Works on CPUs and requires less memory compared to deep learning. | Performs best on GPUs and TPUs, requiring significant memory resources. |

| Use Cases | Good for simple problems, classification tasks, and structured data. | Ideal for unstructured data (images, audio, video), natural language processing, and high-dimensional data. |

The Importance of Feature Engineering

Feature engineering involves selecting, transforming, or creating the most relevant variables (features) from raw data for use in machine learning models. For example, in building a weather prediction model, the raw data might include temperature, humidity, wind speed, and barometric pressure. Feature engineering would involve determining which variables are most important and transforming them (e.g., converting temperature from Fahrenheit to Celsius) to make them more useful for the model.

In traditional machine learning, feature engineering is a manual and time-consuming process requiring domain expertise. However, one advantage of deep learning is its ability to automatically learn relevant features from raw data, reducing the need for manual intervention.

Why is Deep Learning Important?

Deep learning has become the industry standard for several reasons:

Handling Unstructured Data: Deep learning models can learn from unstructured data, reducing the time and resources needed to standardize datasets.

Handling Large Data: With the introduction of graphics processing units (GPUs), deep learning models can process vast amounts of data at high speed.

High Accuracy: Deep learning models provide the most accurate results in computer vision, natural language processing (NLP), and audio processing.

Pattern Recognition: Unlike most models requiring intervention from machine learning engineers, deep learning models can automatically detect various patterns.

This tutorial dives into the world of deep learning, covering the key concepts needed to start a career in artificial intelligence (AI).

Core Concepts of Deep Learning

Before diving into the intricacies of deep learning algorithms and their applications, it’s essential to understand the foundational concepts that make this technology revolutionary. This section introduces the building blocks of deep learning: neural networks, deep neural networks, and activation functions.

Neural Networks

At the heart of deep learning are neural networks, computational models inspired by the human brain. These networks consist of interconnected nodes, or "neurons," that work together to process information and make decisions. Just as our brain has different regions for different tasks, a neural network has layers designated for specific functions. We have a full guide, What are Neural Networks, covering the essentials in more detail.

Deep Neural Networks

A neural network becomes "deep" based on the number of layers between the input and output. A deep neural network has multiple layers, enabling it to learn more complex features and make more accurate predictions. The "depth" of these networks is what gives deep learning its name and its power to solve intricate problems. Our introduction to deep neural networks tutorial covers the significance of DNNs in deep learning and artificial intelligence.

Activation Functions

In a neural network, activation functions act as decision-makers, determining what information should be passed along to the next layer. These functions add complexity, enabling the network to learn from data and make nuanced decisions.

How Deep Learning Works

Deep learning uses feature extraction to recognize similar features of the same label and employs decision boundaries to determine which features accurately represent each label. For instance, in classifying cats and dogs, deep learning models extract information such as eyes, face, and body shape, dividing them into two classes.

The deep learning model consists of deep neural networks. A simple neural network comprises an input layer, a hidden layer, and an output layer. Deep learning models have multiple hidden layers, with additional layers improving the model’s accuracy.

Simple Neural Network

The input layers contain raw data, which is transferred to the hidden layers’ nodes. The hidden layers classify the data points based on broader target information, and with each subsequent layer, the target value’s scope narrows down to produce accurate assumptions. The output layer uses hidden layer information to select the most probable label, such as accurately predicting a dog’s image rather than a cat’s.

Credit: Gary Marcus

Artificial Intelligence vs. Deep Learning vs. Machine Learning

One frequently asked question is: "Is deep learning artificial intelligence?" The short answer is yes. Deep learning is a subset of machine learning, and machine learning is a subset of AI.

| AI | Machine Learning | Deep Learning | |

| Definition | The simulation of human intelligence in machines that are programmed to think like humans and mimic their actions. | A subset of AI that involves the use of statistical techniques to enable machines to improve with experience. | A subset of ML that uses artificial neural networks with many layers ("deep" structures) for learning representation. |

| Key Concepts | Reasoning, knowledge representation, planning, natural language processing, perception, machine learning. | Supervised learning, unsupervised learning, reinforcement learning, feature engineering, model selection. | Neural networks, activation functions, backpropagation, convolutional neural networks, recurrent neural networks. |

| Examples | Autonomous vehicles, robotic process automation, virtual personal assistants, and recommendation systems. | Fraud detection, credit scoring, spam filtering, image recognition. | Speech recognition, image classification, natural language processing, autonomous driving. |

| Advantages | Can perform tasks that require human-like reasoning or decision making. | Improves over time without explicit programming. | High accuracy in complex pattern recognition tasks. |

| Disadvantages | Requires extensive domain expertise and manual feature engineering. Difficult to interpret results. | Limited by the quality of available data and features. May not generalize well to new domains. | Computationally expensive, requires large amounts of labeled training data, hard to interpret models. |

| Applications | Gaming, finance, healthcare, manufacturing, education, entertainment. | Marketing, fraud detection, customer service, predictive maintenance, cybersecurity. | Computer vision, speech recognition, natural language understanding, medical diagnosis. |

What is Deep Learning Used For?

The world of technology has seen a surge in artificial intelligence applications powered by deep learning models. These applications range from recommending movies on Netflix to managing Amazon warehouses.

1. Computer Vision

Computer vision (CV) is used in self-driving cars to detect objects and avoid collisions. It’s also employed for face recognition, pose estimation, image classification, and anomaly detection.

2. Automatic Speech Recognition

Automatic speech recognition (ASR) is widely used, and it is found in phones that activate the phone by saying "Hey, Google" or "Hi, Siri." ASR is also used for text-to-speech, audio classification, and voice activity detection.

3. Generative AI

Generative AI has become popular, with examples like CryptoPunk NFTs, which use deep learning models to create art collections. OpenAI’s GPT-4 model has revolutionized text generation, enabling the creation of novels or code for data science projects.

4. Translation

Deep learning translation extends beyond language translation, including converting photos to text using OCR or text to images with NVIDIA GauGAN2.

5. Time Series Forecast

Time series forecasting is used for predicting market crashes, stock prices, and weather changes. The financial sector relies on such projections, and deep learning models excel in detecting patterns.

6. Automation

Deep learning automates tasks, such as training robots for warehouse management. Notable applications include playing video games and solving puzzles, with OpenAI’s Dota AI defeating pro team OG, showcasing deep learning’s capabilities.

7. Customer Feedback

Deep learning handles customer feedback and complaints, powering chatbots for seamless customer service.

8. Biomedical

Deep learning has significantly impacted biomedicine, used for cancer detection, stable medicine development, anomaly detection in chest X-rays, and medical equipment assistance.

Deep Learning Models

Different types of deep learning models exist, each with specific functions.

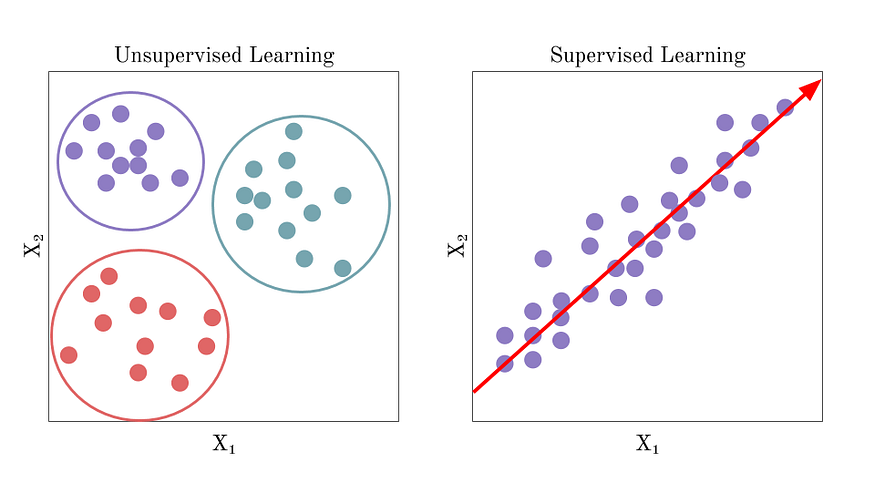

1. Supervised Learning

Supervised learning uses labeled datasets to train models for classification or prediction. The dataset contains features and target labels, allowing the algorithm to learn over time by minimizing the loss between predicted and actual labels. It can be divided into classification and regression problems.

Classification: Algorithms divide datasets into categories based on feature extractions. Popular models include ResNet50 for image classification and BERT for text classification.

Regression: Models learn the relationship between input and output variables to predict outcomes. Regression models are used for predictive analysis, weather forecasting, and stock market performance, with popular models being LSTM and RNN.

2. Unsupervised Learning

Unsupervised learning algorithms identify patterns within unlabeled datasets and create clusters. These models learn hidden patterns without human intervention and are often used in recommendation engines, medical imaging, and market research. The deep-embedded clustering algorithm is a common model.

3. Reinforcement Learning

Reinforcement learning (RL) is a machine learning method where agents learn behaviors from the environment. Agents take actions and receive rewards, learning to achieve goals through trial and error without human intervention. RL is used in automation, robotics, self-driving cars, gaming, and space exploration.

4. Generative Adversarial Networks

Generative adversarial networks (GANs) involve two neural networks that produce synthetic instances of original data. GANs are popular for generating synthetic art, video, music, and texts.

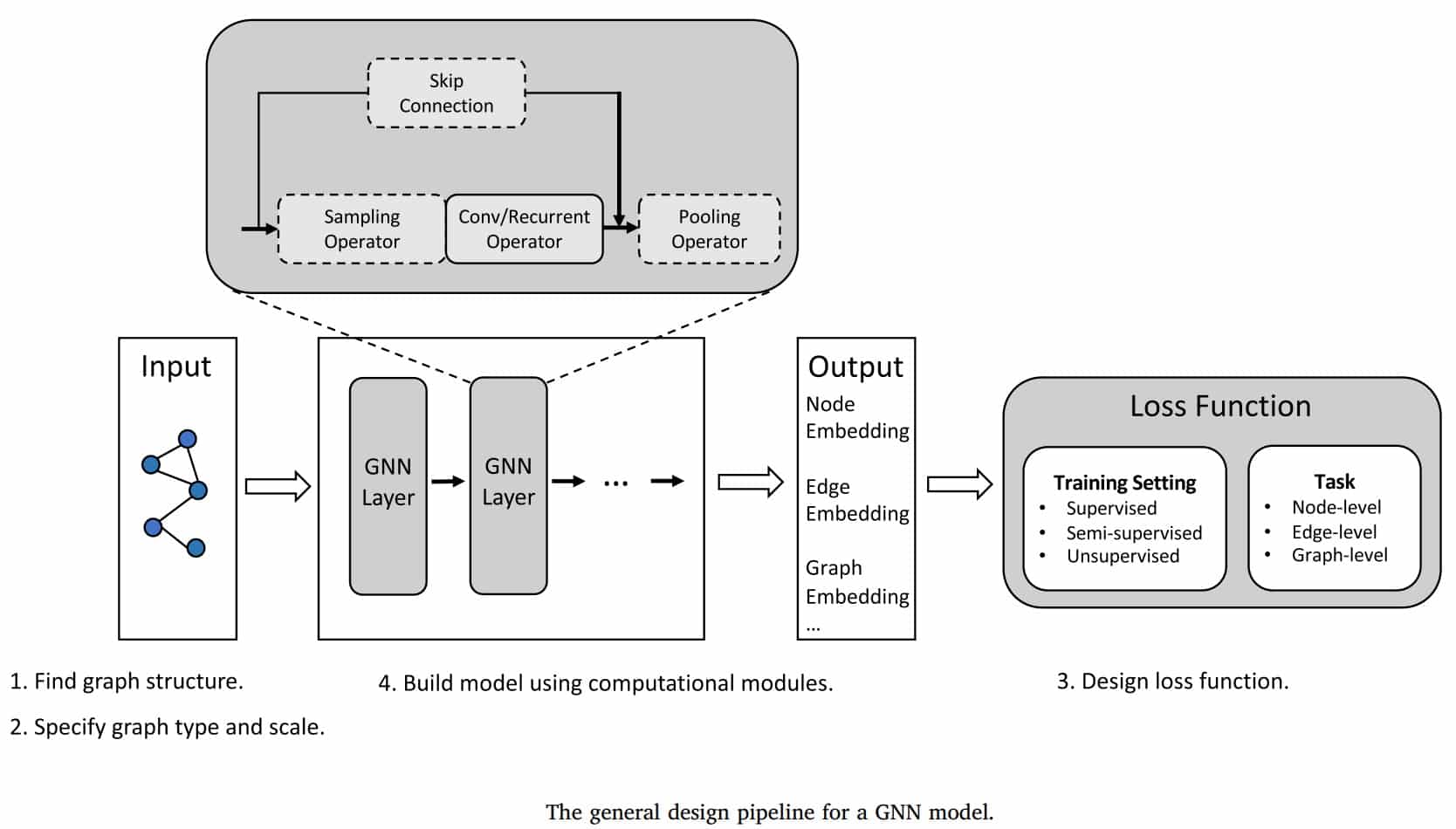

5. Graph Neural Networks

Graph neural networks (GNNs) operate on graph structures, applied in large dataset analysis, recommendation systems, and computer vision. They are used for node classification, link prediction, and clustering.

6. Natural Language Processing

Natural language processing (NLP) uses deep learning to help computers understand human language. NLP applications include translation, summarization, classification, generation, conversation, question answering, feature extraction, sentence similarities, text-to-speech, and optical character recognition.

NLP encompasses several fields, each with distinct applications:

Translation: Converts languages, molecular structures, and mathematical equations.

Summarization: Condenses large text blocks into brief summaries while preserving key information.

Classification: Sorts text into various categories.

Generation: Produces text, such as generating essays from a single prompt.

Conversational: Powers virtual assistants, simulating human conversations and retaining context.

Question Answering: Utilizes Q&A data to answer questions.

Feature Extraction: Detects patterns and extracts information like named entities and parts of speech.

Sentence Similarities: Evaluates similarities between texts.

Text to Speech: Converts text into audible speech.

Automatic Speech Recognition: Converts spoken language into text.

Optical Character Recognition: Extracts text data from images.

To explore these NLP applications, visit Hugging Face Spaces, which hosts a variety of web applications for inspiration.

Deep Learning Concepts

Activation Functions

Activation functions in neural networks determine whether an input should pass through a neuron, adding non-linearity to the model. Without them, a neural network would act as a simple linear regression model. Types of activation functions include:

Tanh

ReLU

Sigmoid

Linear

Softmax

Swish

Loss Function

The loss function measures the difference between actual and predicted values, helping track model performance. Common loss functions are:

Binary cross-entropy

Categorical hinge

Mean squared error

Huber

Sparse categorical cross-entropy

Backpropagation

Backpropagation adjusts weights in a neural network to improve performance by minimizing the loss function.

Stochastic Gradient Descent

Gradient descent optimizes the loss function by adjusting weights to achieve minimal loss. Stochastic gradient descent speeds up this process by dividing data into batches.

The equation below shows how weights are updated using gradient descent.

w = w -Jw

In stochastic gradient descent, samples are divided into batches instead of using the entire dataset to optimize gradient descent. This is useful if you want to achieve minimum loss faster and optimize computational power.

Hyperparameters

Hyperparameters are adjustable parameters that impact model performance and training speed. Key hyperparameters include:

Learning Rate: Determines the step size for each iteration (0.1 to 0.0001).

Batch Size: Number of samples processed at a time.

Number of Epochs: Iterations over the entire dataset, affecting overfitting and underfitting.

Popular Deep Learning Algorithms

Convolutional Neural Networks (CNNs)

CNNs are feed-forward networks ideal for image classification, recognizing patterns, lines, and shapes. They consist of convolutional layers, pooling layers, and fully connected layers.

Recurrent Neural Networks (RNNs)

RNNs are designed for sequential data, where the output of one layer is fed back into the input. This makes them suitable for tasks like text prediction, stock price forecasting, and AI chatbots.

Long Short-term Memory Networks (LSTMs)

LSTMs are advanced RNNs that retain more information over time, solving issues like the vanishing gradient problem. They consist of four interactive layers for processing long sequences of data.

Deep Learning Frameworks

TensorFlow

TensorFlow (TF) is an open-source library for creating deep learning applications. It supports CPUs, GPUs, and TPUs and includes TensorBoard for experiment analysis. TensorFlow integrates with Keras for developing deep neural networks.

Keras

Keras is a Python-based neural network framework that runs on multiple backends like TensorFlow and Theano. It's designed for fast experimentation and easy integration with data science projects.

PyTorch

PyTorch is a popular deep learning framework known for its flexibility and ease of use, favored by academic researchers. It uses tensors for fast numerical computations and supports GPU and TPU acceleration.

Conclusion

This tutorial covered the basics of deep learning, its applications, and how deep neural networks operate. We also explored various deep learning models and popular frameworks, providing a comprehensive introduction to the field.

Subscribe to my newsletter

Read articles from Spheron Network directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Spheron Network

Spheron Network

On-demand DePIN for GPU Compute