Understanding Autoencoders: Unsupervised Representation Learning

Osen Muntu

Autoencoders (AEs) are a type of neural network that is particularly useful for unsupervised learning, where the goal is to find patterns and representations in data that comes without labels. This matters for large datasets, where labeled examples are often scarce or expensive to obtain. An autoencoder learns to compress its input into a lower-dimensional representation and then to reconstruct the input from that representation as accurately as possible.

What are Autoencoders?

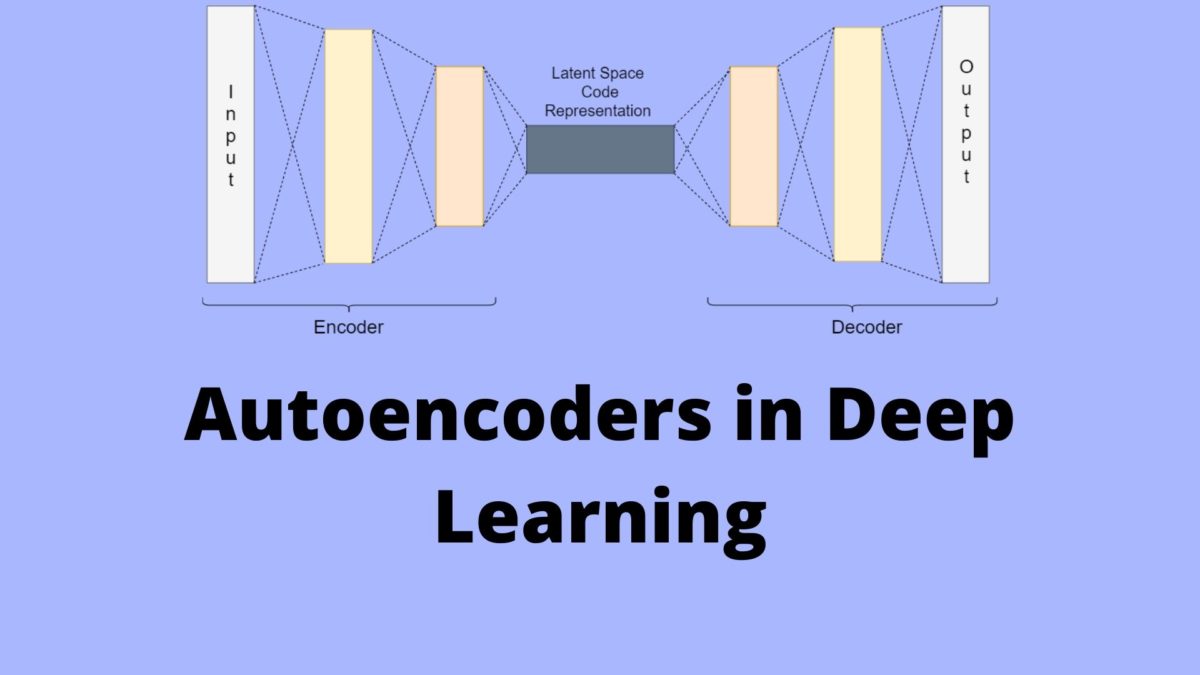

Autoencoders consist of two main parts:

Encoder: This part of the network compresses the input data into a lower-dimensional representation, often called the code or latent representation.

Decoder: This part reconstructs the original data from the compressed representation.

The objective of an autoencoder is to minimize the difference between the input data and the reconstructed data, known as the reconstruction error.
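Written out, with the encoder as a function f and the decoder as g (notation introduced here for illustration), the mean squared reconstruction error for an input x with n values is:

```latex
\hat{x} = g\big(f(x)\big), \qquad
\mathcal{L}(x, \hat{x}) = \frac{1}{n} \sum_{i=1}^{n} \left( x_i - \hat{x}_i \right)^2
```

Training minimizes the average of this loss over the dataset. Mean squared error is one common choice; binary cross-entropy is another when the inputs are scaled to [0, 1].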

How Autoencoders Work

An autoencoder is trained with the input itself as the target: for each example, the network tries to output the same data it received. This involves two main steps:

Encoding: The input data is passed through the encoder, which compresses it into a smaller representation. This is often done using several layers of neurons that progressively reduce the data's dimensionality.

Decoding: The compressed data is then passed through the decoder, which tries to reconstruct the original input. The decoder is often designed as a mirror image of the encoder, with layers that progressively increase the dimensionality back to the original size, although this symmetry is a convention rather than a requirement.

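During training, the autoencoder adjusts its parameters (typically with backpropagation and gradient descent) to minimize the reconstruction error. Because the compressed representation has fewer dimensions than the input, the network cannot simply copy its input through; it is forced to keep the information that matters most for reconstruction, so the code ends up capturing the most important features of the data.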
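To make the encode, decode, and update steps concrete, here is a minimal sketch that trains a tiny autoencoder with plain gradient descent on the mean squared reconstruction error. It rests on simplifying assumptions that are not part of the original article: NumPy instead of TensorFlow, a purely linear encoder and decoder without biases, and synthetic data.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy data: 200 samples in 8 dimensions that lie close to a 2-D subspace
n, d, k = 200, 8, 2
x = rng.normal(size=(n, k)) @ rng.normal(size=(k, d)) + 0.01 * rng.normal(size=(n, d))

# Encoder (d -> k) and decoder (k -> d) weights; linear and bias-free for brevity
w_enc = 0.1 * rng.normal(size=(d, k))
w_dec = 0.1 * rng.normal(size=(k, d))

lr = 0.05
losses = []
for step in range(3000):
    h = x @ w_enc                 # encoding: compress to k values per sample
    x_hat = h @ w_dec             # decoding: expand back to d values
    err = x_hat - x
    losses.append(np.mean(err ** 2))  # reconstruction error (MSE)

    # Backpropagation: gradients of the MSE with respect to each weight matrix
    grad_out = 2 * err / err.size
    grad_dec = h.T @ grad_out
    grad_enc = x.T @ (grad_out @ w_dec.T)

    # Gradient descent step
    w_dec -= lr * grad_dec
    w_enc -= lr * grad_enc

print(f"initial MSE {losses[0]:.4f}, final MSE {losses[-1]:.6f}")
```

Because the toy data really lives near a 2-dimensional subspace, a 2-unit code is enough for the error to fall well below its starting value. A real autoencoder would add nonlinear activations and let a framework such as TensorFlow compute the gradients.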

Variants of Autoencoders

Several variants of autoencoders have been developed to address different needs and enhance performance:

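Stacked Autoencoder (SAE): Several autoencoders are stacked on top of each other, with the hidden representation learned by one autoencoder serving as the input to the next. This lets the network learn progressively more abstract representations. Such stacks have traditionally been trained greedily, one autoencoder at a time, and then fine-tuned end to end.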

Denoising Autoencoder (DAE): Proposed by Pascal Vincent et al. (2008), this type of autoencoder is trained to reconstruct the clean input from a corrupted version of it. This helps the network learn robust features that remain useful even when the input data is noisy.

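Variational Autoencoder (VAE): Instead of mapping each input to a single point, the encoder of a VAE outputs the parameters of a probability distribution over the latent space (the compressed representation). A Kullback-Leibler (KL) divergence term in the loss pushes these distributions toward a chosen prior, typically a standard Gaussian. This makes it possible to generate new data samples similar to the training data by sampling from the prior and decoding the samples.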

Sparse Autoencoder: This type of autoencoder imposes a sparsity constraint on the hidden representation, so that only a few neurons are strongly active for any given input. The constraint is usually added as a penalty term in the loss, and it can lead to more interpretable features and help reduce overfitting.
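The greedy layer-wise recipe for a stacked autoencoder can be sketched in NumPy as follows. This is an illustration under assumptions rather than a reference implementation: `train_autoencoder` is a hypothetical helper (tanh encoder, linear decoder, plain gradient descent), the data is synthetic, and the layer sizes of 16, 8, and 4 are arbitrary.

```python
import numpy as np

def train_autoencoder(x, code_dim, steps=1500, lr=0.05, seed=0):
    """Hypothetical helper: train a one-hidden-layer autoencoder
    (tanh encoder, linear decoder, no biases) with plain gradient descent.
    Returns the trained encoder weights and the final reconstruction MSE."""
    rng = np.random.default_rng(seed)
    d = x.shape[1]
    w_enc = 0.1 * rng.normal(size=(d, code_dim))
    w_dec = 0.1 * rng.normal(size=(code_dim, d))
    for _ in range(steps):
        h = np.tanh(x @ w_enc)                      # encode
        err = h @ w_dec - x                         # decode, compare with input
        grad_out = 2 * err / err.size               # d(MSE)/d(output)
        grad_dec = h.T @ grad_out
        grad_enc = x.T @ ((grad_out @ w_dec.T) * (1 - h ** 2))  # through tanh
        w_dec -= lr * grad_dec
        w_enc -= lr * grad_enc
    mse = np.mean((np.tanh(x @ w_enc) @ w_dec - x) ** 2)
    return w_enc, mse

# Synthetic data: 300 samples, 16 features, scaled to unit standard deviation
rng = np.random.default_rng(1)
x = rng.normal(size=(300, 4)) @ rng.normal(size=(4, 16))
x = x / x.std()

# Stage 1: train on the raw input, keep the 8-dimensional codes
w1, mse1 = train_autoencoder(x, code_dim=8)
h1 = np.tanh(x @ w1)

# Stage 2: train on stage 1's codes (not the raw input), keep 4-dimensional codes
w2, mse2 = train_autoencoder(h1, code_dim=4, seed=1)
h2 = np.tanh(h1 @ w2)

# The stacked encoder is the composition: x -> h1 -> h2
print(h1.shape, h2.shape)  # (300, 8) (300, 4)
```

After this greedy pre-training, the two encoders (and the matching decoders) would typically be joined into one network and fine-tuned end to end.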
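The key change in a denoising autoencoder is the training pair: the encoder sees a corrupted input, while the loss compares the output with the clean one. The NumPy sketch below assumes masking noise (each value zeroed with probability 0.3, a hypothetical choice; additive Gaussian noise is another common option), a linear encoder and decoder, and synthetic low-rank data.

```python
import numpy as np

rng = np.random.default_rng(0)

def corrupt(x, drop_prob, rng):
    """Masking noise: zero out each value independently with probability drop_prob."""
    keep = rng.random(x.shape) >= drop_prob
    return x * keep

# Synthetic clean data: 500 samples in 10 dimensions with rank-3 structure
n, d, k = 500, 10, 3
x_clean = rng.normal(size=(n, k)) @ rng.normal(size=(k, d))

# Linear encoder (d -> k) and decoder (k -> d), no biases
w_enc = 0.1 * rng.normal(size=(d, k))
w_dec = 0.1 * rng.normal(size=(k, d))
lr = 0.05

for step in range(3000):
    x_noisy = corrupt(x_clean, drop_prob=0.3, rng=rng)  # fresh corruption every step
    h = x_noisy @ w_enc                  # encode the CORRUPTED input
    err = h @ w_dec - x_clean            # but compare with the CLEAN input
    grad_out = 2 * err / err.size
    grad_dec = h.T @ grad_out
    grad_enc = x_noisy.T @ (grad_out @ w_dec.T)
    w_dec -= lr * grad_dec
    w_enc -= lr * grad_enc

# Denoise a new corrupted copy and compare errors against the clean data
x_test_noisy = corrupt(x_clean, drop_prob=0.3, rng=rng)
denoised = (x_test_noisy @ w_enc) @ w_dec
noisy_mse = np.mean((x_test_noisy - x_clean) ** 2)
denoised_mse = np.mean((denoised - x_clean) ** 2)
print(f"noisy MSE {noisy_mse:.3f}, denoised MSE {denoised_mse:.3f}")
```

Drawing a fresh corruption at every step means the network never sees the same noisy version twice, so it has to learn the structure of the clean data rather than memorize particular noise patterns.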
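Two pieces distinguish a VAE's training step: sampling the latent code with the reparameterization trick, so that gradients can flow through the sampling, and the KL term that pulls each encoded distribution toward a standard Gaussian prior. Here is a minimal NumPy sketch of both, assuming the encoder outputs a mean `mu` and a log-variance `log_var` per latent dimension (the values below are made up for illustration).

```python
import numpy as np

def sample_latent(mu, log_var, rng):
    """Reparameterization trick: z = mu + sigma * eps, with eps ~ N(0, I)."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps

def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ), summed over latent dimensions."""
    return 0.5 * np.sum(mu ** 2 + np.exp(log_var) - 1.0 - log_var, axis=-1)

rng = np.random.default_rng(0)
mu = np.array([[0.0, 0.0], [1.0, -2.0]])       # batch of 2, latent size 2
log_var = np.array([[0.0, 0.0], [0.5, -0.5]])

z = sample_latent(mu, log_var, rng)    # one latent sample per input
kl = kl_to_standard_normal(mu, log_var)
# The first row already equals the prior N(0, I), so its KL term is zero;
# the second row is penalized for drifting away from it.
```

The full VAE loss for each example is the reconstruction error of the decoded `z` plus this KL term.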
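One common way to impose the sparsity constraint is a KL-divergence penalty that compares each hidden unit's average activation with a small target value; an L1 penalty on the activations is a simpler alternative. A NumPy sketch of both, where the target `rho = 0.05` and the L1 weight are assumed hyperparameters:

```python
import numpy as np

def kl_sparsity_penalty(h, rho=0.05, eps=1e-8):
    """KL-divergence sparsity penalty, summed over hidden units.

    h:   hidden activations in (0, 1), e.g. sigmoid outputs, shape (batch, units)
    rho: target average activation per unit (0.05 is an assumed value)
    """
    rho_hat = np.clip(h.mean(axis=0), eps, 1 - eps)   # average activation of each unit
    return np.sum(rho * np.log(rho / rho_hat)
                  + (1 - rho) * np.log((1 - rho) / (1 - rho_hat)))

def l1_activity_penalty(h, weight=1e-4):
    """A simpler alternative: L1 penalty on activations, averaged over the batch."""
    return weight * np.sum(np.abs(h)) / h.shape[0]

sparse_codes = np.full((100, 32), 0.05)   # every unit averages the target activation
dense_codes = np.full((100, 32), 0.5)     # every unit is half-active on average

print(kl_sparsity_penalty(sparse_codes))  # close to 0
print(kl_sparsity_penalty(dense_codes))   # much larger
```

Either penalty is multiplied by a weighting factor and added to the reconstruction error. In Keras, an L1 activity penalty can also be attached directly to a layer through the `activity_regularizer` argument of `layers.Dense`, for example `activity_regularizer=tf.keras.regularizers.l1(1e-5)` (the weight here is only illustrative).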

Implementing an Autoencoder in TensorFlow

```python
import tensorflow as tf
from tensorflow.keras import layers, models

# Define the dimensions
input_dim = 784     # For example, a flattened 28x28 MNIST image
encoding_dim = 32   # Size of the compressed representation

# Encoder: 784 -> 32
input_layer = layers.Input(shape=(input_dim,))
encoded = layers.Dense(encoding_dim, activation='relu')(input_layer)

# Decoder: 32 -> 784 (keep a handle on the layer so it can be reused below)
decoder_layer = layers.Dense(input_dim, activation='sigmoid')
decoded = decoder_layer(encoded)

# Combine into an autoencoder model
autoencoder = models.Model(input_layer, decoded)

# Standalone encoder and decoder models that share the autoencoder's layers,
# so they use the trained weights after fit()
encoder = models.Model(input_layer, encoded)
encoded_input = layers.Input(shape=(encoding_dim,))
decoder = models.Model(encoded_input, decoder_layer(encoded_input))

# Compile the model with mean squared error as the reconstruction loss
autoencoder.compile(optimizer='adam', loss='mse')

# Display the model architecture
autoencoder.summary()

# Load MNIST (labels are not needed), flatten to 784 values, scale to [0, 1]
(x_train, _), (x_test, _) = tf.keras.datasets.mnist.load_data()
x_train = x_train.reshape((len(x_train), input_dim)).astype('float32') / 255
x_test = x_test.reshape((len(x_test), input_dim)).astype('float32') / 255

# Train the autoencoder: the input is also the target
autoencoder.fit(x_train, x_train, epochs=50, batch_size=256, shuffle=True,
                validation_data=(x_test, x_test))

# Encode and decode some test digits
encoded_imgs = encoder.predict(x_test[:10])   # shape (10, 32)
decoded_imgs = decoder.predict(encoded_imgs)  # shape (10, 784)
```

In this implementation:

Input layer: Takes a vector of size 784 (e.g., a flattened 28x28 MNIST image).

Encoder: Compresses this to a vector of size 32 using a dense layer with ReLU activation.

Decoder: Reconstructs the input from this 32-dimensional vector using a dense layer with sigmoid activation, which keeps the outputs in [0, 1] to match the scaled pixel values.

Training: The autoencoder is trained on the MNIST training images for 50 epochs to minimize the mean squared error between the input and the reconstructed output, with the test images used for validation.
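As a quick check on what `autoencoder.summary()` reports, the parameter count of this model can be worked out by hand: each Dense layer has one weight per input-output pair plus one bias per output unit. The helper name `dense_params` is introduced here for illustration.

```python
def dense_params(n_in, n_out):
    """A Dense layer has an n_in x n_out weight matrix plus n_out biases."""
    return n_in * n_out + n_out

input_dim, encoding_dim = 784, 32
encoder_params = dense_params(input_dim, encoding_dim)   # 784*32 + 32  = 25,120
decoder_params = dense_params(encoding_dim, input_dim)   # 32*784 + 784 = 25,872
total = encoder_params + decoder_params
print(f"{total:,}")  # 50,992
```

So the model has 50,992 trainable parameters, and the 32-value code is 24.5 times smaller than the 784-pixel input.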

Use Cases of Autoencoders

Autoencoders have a wide range of applications:

Dimensionality Reduction: Autoencoders can reduce the dimensionality of data, similar to Principal Component Analysis (PCA). A linear autoencoder trained with mean squared error learns the same subspace as PCA, but with nonlinear activations an autoencoder can capture more complex, nonlinear relationships.

Anomaly Detection: By learning the normal patterns in the data, autoencoders can detect anomalies. Inputs unlike the training data tend to be reconstructed poorly, so a high reconstruction error flags them as suspicious.

Data Denoising: Denoising autoencoders can remove noise from data, making them useful in image and audio processing.

Feature Extraction: Autoencoders can learn a compressed representation of data that can be used as features for other tasks, such as classification.

Image Generation: Variational autoencoders (VAEs) can generate new images by sampling points from the latent space and passing them through the decoder.
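The anomaly detection recipe (score each input by its reconstruction error, then flag scores above a threshold chosen from normal data) can be sketched in NumPy. Assumptions: to keep the example self-contained, a projection onto the top principal component stands in for a trained autoencoder (it is the subspace an optimal linear autoencoder learns), `fit_linear_reconstructor` is a hypothetical helper, the data is synthetic, and the 99th-percentile threshold is an arbitrary choice.

```python
import numpy as np

def fit_linear_reconstructor(x_train, k):
    """Hypothetical stand-in for a trained autoencoder: project onto the
    top-k principal directions and back."""
    mean = x_train.mean(axis=0)
    _, _, vt = np.linalg.svd(x_train - mean, full_matrices=False)
    components = vt[:k]                      # shape (k, d)

    def reconstruct(x):
        codes = (x - mean) @ components.T    # "encode"
        return codes @ components + mean     # "decode"
    return reconstruct

def reconstruction_errors(reconstruct, x):
    """One anomaly score per sample: mean squared reconstruction error."""
    return np.mean((x - reconstruct(x)) ** 2, axis=1)

rng = np.random.default_rng(0)
# Normal data: points near the line y = 2x, with small noise
t = rng.normal(size=(500, 1))
x_train = np.hstack([t, 2 * t]) + 0.05 * rng.normal(size=(500, 2))

reconstruct = fit_linear_reconstructor(x_train, k=1)
threshold = np.percentile(reconstruction_errors(reconstruct, x_train), 99)

x_new = np.array([[1.0, 2.0],     # follows the learned pattern
                  [1.0, -2.0]])   # far off the pattern
is_anomaly = reconstruction_errors(reconstruct, x_new) > threshold
print(is_anomaly.tolist())  # [False, True]
```

With a real autoencoder, `reconstruct` would be the trained model's `predict` method, and the threshold would usually be tuned on held-out data.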

Autoencoders are a powerful tool in the realm of unsupervised learning, capable of learning efficient representations of data and finding applications across various domains. Whether you're looking to reduce dimensionality, detect anomalies, denoise data, extract features, or generate new data, autoencoders offer versatile solutions. With TensorFlow and its Keras API, an autoencoder like the one above takes only a few lines of code to build and train, which makes it easy to experiment with these ideas.

For more in-depth tutorials, practical examples, and the latest updates on machine learning and AI, be sure to follow my blog. Stay tuned for more insightful content to help you master these exciting technologies!

Written by

Osen Muntu

Osen Muntu, AI Engineer and Flutter Developer, passionate about artificial intelligence and mobile app development. With expertise in machine learning, deep learning, natural language processing, computer vision, and Flutter, I dedicate myself to building intelligent systems and creating beautiful, functional apps. Through my blog, I share my knowledge and experiences, offering insights into AI trends, development tips, and tutorials to inspire and educate fellow tech enthusiasts. Let's connect and drive innovation together!