Understanding Neural Networks: A Beginner's Guide

Salilesh Verma

Table of contents

- What is a neural network?

- What are neural networks used for?

- How do neural networks work?

- Forward Propagation:

- Loss Calculation:

- Backward Propagation:

- Iterative Process:

- Simple neural network architecture

- Deep neural network architecture

- Types of neural network

- How to train a neural network

- Deep learning in the context of neural networks

What is a neural network?

A neural network, or artificial neural network, is a type of computing architecture loosely modeled on how the human brain functions, hence the name "neural." Neural networks are made up of a collection of processing units called "nodes." These nodes pass data to each other, much as neurons in a brain pass electrical impulses to each other.

Neural networks are used in machine learning, a category of computer programs that learn from data without explicit instructions. Specifically, neural networks are the basis of deep learning, an advanced type of machine learning that can learn useful patterns from raw, and sometimes unlabeled, data with little human intervention. For instance, a deep learning model built on a neural network and fed sufficient training data may be able to identify items in a photo it has never seen before. Neural networks also help with tasks such as summarizing documents and recognizing faces with high accuracy.

What are neural networks used for?

Neural networks are used across many industries. Common applications include:

Medical diagnosis by medical image classification.

Targeted marketing by social network filtering.

Chemical compound identification.

Pattern recognition and prediction.

Financial predictions by processing historical data of financial instruments.

Electrical load and energy demand forecasting.

Three major application areas of neural networks are:

Computer vision: Computer vision is the ability of computers to extract information from, and understand, images and videos. Using neural networks, computers can identify and recognize images much as people do. Computer vision has many uses, such as:

Helping self-driving cars recognize road signs and other vehicles or pedestrians

Automatically removing unsafe or inappropriate content from images and videos

Identifying faces and recognizing features like open eyes, glasses, and facial hair

Labeling images to identify brand logos, clothing, safety gear, and other details.

Natural language processing: Natural language processing (NLP) is the ability of computers to understand and work with human-created text. Neural networks help computers extract meaning and insights from text data and documents. NLP has many uses, such as:

Creating automated virtual agents and chatbots

Automatically organizing and classifying written data

Analyzing long documents like emails and forms for business insights

Finding key phrases that show sentiment, like positive or negative comments on social media

Summarizing documents and generating articles on specific topics

Speech recognition: Speech recognition allows computers to understand human speech despite varying speech patterns, pitches, tones, languages, and accents, and neural networks make this possible. Virtual assistants like Amazon Alexa and transcription software use speech recognition for tasks like:

Helping call center agents and automatically classifying calls

Turning clinical conversations into real-time documentation

Creating accurate subtitles for videos and meeting recordings to reach more people

How do neural networks work?

Forward Propagation:

Inputs (x1, x2): These are the input features fed into the neural network.

Weights: The strengths of the connections between neurons in adjacent layers.

Hidden Layers:

Neurons in the hidden layers (represented by circles) receive inputs, process them, and pass the results to the next layer.

Each neuron computes a weighted sum of its inputs, adds a bias, and then applies an activation function (the weighted sum plus bias is denoted z, and the activation function f).

Output Layer:

- Produces predictions (y') based on the final transformation of data through the network.
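The forward propagation steps above can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: the network shape (two inputs, two hidden neurons, one output), the sigmoid activation, and every weight and bias value are assumptions chosen only to make the example concrete.

```python
import math

def sigmoid(z):
    """Activation function f: squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias):
    """Compute z (weighted sum of inputs plus bias), then apply f."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)

def forward(x, hidden_layer, output_neuron):
    """Pass the inputs through one hidden layer, then a single output neuron."""
    hidden = [neuron(x, w, b) for w, b in hidden_layer]
    out_weights, out_bias = output_neuron
    return neuron(hidden, out_weights, out_bias)

# Illustrative (made-up) parameters: each entry is (weights, bias).
hidden_layer = [([0.5, -0.4], 0.1), ([0.3, 0.8], -0.2)]
output_neuron = ([1.2, -0.7], 0.05)

# Inputs x1 = 1.0 and x2 = 2.0 produce the prediction y'.
y_pred = forward([1.0, 2.0], hidden_layer, output_neuron)
print(y_pred)
```

Because the output neuron also uses a sigmoid here, the prediction always falls between 0 and 1. A different activation in the output layer would change that range.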

Loss Calculation:

Predictions (y'): The output of the neural network.

True Values (y): The actual values that the model is trying to predict.

Loss Function: Compares the predictions (y') with the true values (y) to calculate the loss score, which quantifies the error in the predictions.
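As one concrete example of a loss function, the sketch below computes mean squared error, a common choice for numeric predictions. The article does not name a specific loss function, so this choice and the sample values are assumptions for illustration.

```python
def mean_squared_error(y_true, y_pred):
    """Average of the squared differences between true values and predictions."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

y_true = [1.0, 0.0, 1.0]          # true values (y)
close_preds = [0.9, 0.1, 0.8]     # predictions (y') near the true values
far_preds = [0.2, 0.9, 0.3]       # predictions (y') far from the true values

loss_close = mean_squared_error(y_true, close_preds)
loss_far = mean_squared_error(y_true, far_preds)
print(loss_close, loss_far)
```

Predictions that sit closer to the true values give a smaller loss score, which is the signal the optimizer later uses.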

Backward Propagation:

Loss Score: The error derived from the loss function.

Optimizer: Adjusts the weights to minimize the loss. The optimizer uses the loss score to update the weights through gradient descent or other optimization algorithms.

Weight Update: The process of adjusting the weights in the network based on the gradients calculated from the loss score.

Iterative Process:

- The entire process of forward and backward propagation is repeated iteratively until the loss function is minimized, meaning the predictions (y') are as close as possible to the true values (y).
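The loop of forward propagation, loss calculation, and weight update can be sketched with the smallest possible model: a single neuron with one weight, one bias, and no activation function. The model, the toy data (points on the line y = 2x + 1), the mean squared error loss, and the hyperparameter values are all assumptions made for this illustration.

```python
def train(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y' = w * x + b by repeating forward pass, gradient, and update."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Forward propagation: compute predictions with the current weights.
        preds = [w * x + b for x in xs]
        # Backward propagation: gradients of the mean squared error loss.
        grad_w = sum(2 * (p - y) * x for p, y, x in zip(preds, ys, xs)) / n
        grad_b = sum(2 * (p - y) for p, y in zip(preds, ys)) / n
        # Weight update: step against the gradient to reduce the loss.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]  # generated from y = 2x + 1

w, b = train(xs, ys)
print(w, b)
```

After enough iterations the learned weight and bias approach 2 and 1, the values used to generate the data.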

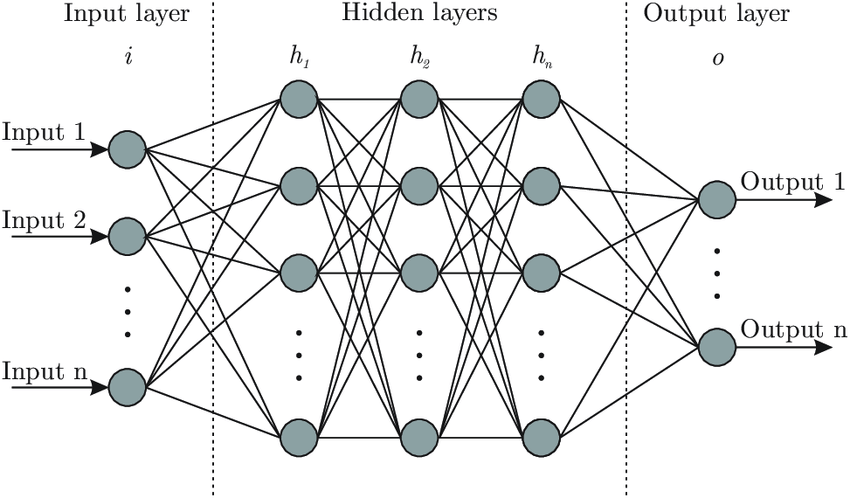

Simple neural network architecture

A basic neural network has interconnected artificial neurons arranged in three kinds of layers: the input layer, one or more hidden layers, and the output layer. The input layer is where information from the outside world enters the neural network, and input nodes process, analyze, or categorize this data before passing it to the next layer. Hidden layers, which can be numerous, receive input from the input layer or other hidden layers, analyze the output from the previous layer, process it further, and then pass it to the next layer. The output layer provides the final result of all the data processing done by the neural network. It can have one or multiple nodes; for instance, in a binary classification problem (yes/no), the output layer will have one node that gives the result as 1 or 0, while in a multi-class classification problem, the output layer might have more than one node to provide the result.
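The difference between a one-node and a multi-node output layer can be sketched as follows. The sigmoid and softmax functions are common choices for these two cases, but the article does not specify them, so treat them, the 0.5 threshold, and the raw scores below as assumptions.

```python
import math

def sigmoid(z):
    """Map a raw score to a value between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    shift = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Binary classification: one output node, thresholded to give 1 or 0.
binary_label = 1 if sigmoid(0.8) >= 0.5 else 0

# Multi-class classification: one output node per class.
class_probs = softmax([2.0, 1.0, 0.1])
predicted_class = class_probs.index(max(class_probs))

print(binary_label, class_probs, predicted_class)
```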

Deep neural network architecture

Deep neural networks have many hidden layers, often with millions of artificial neurons connected together. Each connection carries a number called a weight. A positive weight means one node excites another, while a negative weight means it suppresses it. Connections with larger weight magnitudes give one node more influence over another.

In theory, deep neural networks can map any type of input to any type of output. But they typically need far more training data than other machine learning methods, often millions of examples instead of just hundreds or thousands.

Types of neural network

Neural networks can be classified by how data flows from the input nodes to the output nodes. Common categories are as follows:

1. Feedforward neural networks: A feedforward neural network (FNN) is a type of artificial neural network where information flows in one direction: from input nodes to hidden nodes (if any), and then to output nodes. There are no cycles or loops. Unlike recurrent neural networks, whose connections form loops that feed earlier outputs back into the network, feedforward networks only move data forward. They are usually trained using a method called backpropagation and are often called "vanilla" neural networks.

2. Backpropagation algorithm: Strictly speaking, backpropagation is a training method rather than a network type. Artificial neural networks learn by using feedback from their errors to improve their predictions. In simplified terms, data flows from input to output through many paths, but only one is correct. To find the right path, the network follows these steps:

Each node guesses the next node in the path.

It checks if the guess is correct. Correct guesses get higher weights; incorrect guesses get lower weights.

For the next data point, nodes use the higher weight paths to make new guesses and repeat the process.

3. Convolutional neural networks: Hidden layers in convolutional neural networks (CNNs) perform functions called convolutions, which summarize or filter information. This is useful for image classification because it helps the network extract important features from images. These features, like edges, color, and depth, are then used for accurate image recognition and classification. Each hidden layer processes different aspects of the image, making the data easier to analyze without losing crucial details.
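To make the idea of a convolution concrete, here is a small sketch that slides a 3x3 filter over a tiny grayscale image. The image, the vertical-edge filter, and the choice of no padding with a stride of 1 are all assumptions for illustration. Like most deep learning libraries, the code does not flip the kernel, so it is technically a cross-correlation. In a real CNN the filter values are learned during training rather than written by hand.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and sum the products."""
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for di in range(kernel_h):
                for dj in range(kernel_w):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output

# A 4x5 image: dark (0) on the left, bright (1) on the right.
image = [
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
]

# A hand-written filter that responds to dark-to-bright vertical edges.
vertical_edge_kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = convolve2d(image, vertical_edge_kernel)
print(feature_map)  # [[0, 3, 3], [0, 3, 3]]
```

The feature map is zero over the uniform dark region and positive wherever the filter window overlaps the dark-to-bright boundary, which is how a convolution highlights an edge.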

How to train a neural network

Initialization:

- Neural networks start with random weights. These initial values are crucial because they determine the starting point for the learning process.

Forward Pass:

- Data flows through the network from input to output. Each layer processes the data and passes it to the next layer, resulting in an output.

Loss Calculation:

- The difference between the predicted output and the actual output is calculated using a loss function. This function measures how well the network's predictions match the expected results.

Backpropagation:

Backpropagation adjusts the weights to minimize the loss. It involves the following steps:

Error Propagation: The error is propagated backward through the network.

Gradient Calculation: The gradient of the loss with respect to each weight is calculated. This shows how much the loss would change if the weight is adjusted.

Weight Adjustment: Weights are updated in the direction that reduces the loss, based on the gradients.
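The three backpropagation steps above can be sketched for a single sigmoid neuron, where the chain rule is short enough to write out by hand. The neuron, the squared-error loss, and all numeric values are assumptions for illustration. The finite-difference check at the end is only a sanity test of the derivative, not part of training.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss(w, b, x, y):
    """Squared error of one sigmoid neuron on one example."""
    return (sigmoid(w * x + b) - y) ** 2

def gradients(w, b, x, y):
    """Chain rule: dL/dw = dL/da * da/dz * dz/dw (and likewise for b)."""
    z = w * x + b
    a = sigmoid(z)
    dL_da = 2 * (a - y)      # error propagated back from the loss
    da_dz = a * (1 - a)      # derivative of the sigmoid
    dL_dz = dL_da * da_dz
    return dL_dz * x, dL_dz  # dz/dw = x, dz/db = 1

w, b, x, y = 0.5, -0.1, 2.0, 1.0
grad_w, grad_b = gradients(w, b, x, y)

# Sanity check: compare with a central finite-difference estimate.
eps = 1e-6
numeric_grad_w = (loss(w + eps, b, x, y) - loss(w - eps, b, x, y)) / (2 * eps)

# Weight adjustment: move each parameter against its gradient.
learning_rate = 0.1
new_w = w - learning_rate * grad_w
new_b = b - learning_rate * grad_b

print(grad_w, numeric_grad_w)
print(loss(w, b, x, y), loss(new_w, new_b, x, y))
```

In a multi-layer network the same chain rule is applied layer by layer, starting at the output and moving backward.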

Gradient Descent:

This is the optimization method used to update the weights. The main types of gradient descent include:

Batch Gradient Descent: Uses the entire dataset to calculate the gradients.

Stochastic Gradient Descent (SGD): Uses one data point at a time for updates, making each update much cheaper to compute but noisier.

Mini-Batch Gradient Descent: Uses small batches of data points, balancing speed and stability.
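The three variants differ only in how many data points feed each weight update. The sketch below shows that difference with a hypothetical helper, `iterate_batches`, which is not from any library. The dataset of ten integers and the batch size of 4 are arbitrary choices for illustration.

```python
import random

def iterate_batches(data, batch_size, shuffle=True, seed=0):
    """Yield successive batches of the data, optionally in shuffled order."""
    indices = list(range(len(data)))
    if shuffle:
        random.Random(seed).shuffle(indices)
    for start in range(0, len(indices), batch_size):
        yield [data[i] for i in indices[start:start + batch_size]]

data = list(range(10))  # stand-in for ten training examples

# Batch gradient descent: one update per pass, using the whole dataset.
batch_gd = list(iterate_batches(data, batch_size=len(data)))

# Stochastic gradient descent: one update per data point.
sgd = list(iterate_batches(data, batch_size=1))

# Mini-batch gradient descent: one update per small batch.
mini_batch = list(iterate_batches(data, batch_size=4))

print(len(batch_gd), len(sgd), [len(batch) for batch in mini_batch])
```

With ten examples, one pass over the data gives 1 update for batch gradient descent, 10 for SGD, and 3 for mini-batches of size 4 (the last batch holds the 2 leftover examples).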

Learning Rate:

- This parameter controls the size of the weight updates. A smaller learning rate means slower but more precise updates, while a larger learning rate speeds up the process but can overshoot the optimal values.
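The effect of the learning rate is easy to see on a toy problem. The sketch below minimizes f(w) = w^2, whose gradient is 2w and whose minimum is at w = 0. The function, the starting point, the step count, and the three learning rates are assumptions picked to show slow progress, fast convergence, and overshooting.

```python
def descend(learning_rate, steps=20, start=5.0):
    """Run gradient descent on f(w) = w**2 and return the final w."""
    w = start
    for _ in range(steps):
        gradient = 2 * w
        w -= learning_rate * gradient
    return w

slow = descend(0.01)      # small steps: still far from 0 after 20 updates
fast = descend(0.4)       # larger steps: very close to 0
diverged = descend(1.1)   # too large: each step overshoots and w grows

print(slow, fast, diverged)
```

With the largest rate, every update jumps past the minimum to a point farther away than where it started, so the value of w grows instead of shrinking.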

Iteration and Convergence:

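- The forward pass, loss calculation, backpropagation, and weight update steps are repeated for many iterations. One full pass over the training dataset is called an epoch, and training usually runs for many epochs. Training continues until the loss stops decreasing meaningfully and the network's performance stabilizes.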

Efficiency Techniques:

- Techniques like SGD and mini-batch gradient descent improve training efficiency by reducing the computation needed per update, and the noise in their updates can help the network escape poor local minima in the loss landscape.

By following these steps, neural networks learn to make accurate predictions by continuously adjusting their weights based on the feedback from the loss calculations.

Deep learning in the context of neural networks

Artificial intelligence (AI) is a part of computer science that works on making machines do tasks that usually need human thinking. Machine learning (ML) is an approach within AI where computers use large amounts of data to learn and make decisions. Deep learning is a special type of ML that uses deep neural networks (networks with many hidden layers) to understand data better.

Machine Learning vs. Deep Learning:

Machine Learning: Traditional methods often need a person to choose the important features in the data. This can be time-consuming and complex.

Deep Learning: Works with raw data and figures out the important features by itself, making it more powerful and independent.

Example: Identifying Pet Images

Machine Learning:

Manually label thousands of pet images.

Tell the software what features to look for, like the number of legs, eye shape, ear shape, etc.

Adjust the data and labels to improve accuracy.

Deep Learning:

- The software processes all images and automatically learns which features to analyze, such as legs and face shape, to correctly identify the pet.
