Tutorial: Web Crawler with Surf and Async-Std 🦀

Eleftheria Batsou

Hello, amazing people, and welcome back to my blog! Today we're going to build a practical example in Rust to explore async/await: a web crawler built with Surf and async-std.

Dependencies

Let's start with the Cargo.toml file.

We'll need four crates:

- async-std is an async version of the Rust standard library; it gives us the executor and tasks we need for async/await.
- surf is an HTTP client built on async/await.
- html5ever is an HTML parser from the Servo project, which will let us parse the HTML we download.
- url is a URL parsing library, which will let us check whether the links we find on the pages we fetch are valid URLs.

```toml
[dependencies]
async-std = "1.2.0"
surf = "1.0.3"
html5ever = "0.25.1"
url = "2.1.0"
```

Now let's go to the main.rs file.

The first thing we want to write is the parsing logic: we parse the HTML and identify the tags that contain the URLs on the page.

We'll be bringing in a lot of items from html5ever, more specifically from its tokenizer module: BufferQueue, Tag, TagKind, TagToken, Token, TokenSink, TokenSinkResult, Tokenizer, and TokenizerOpts. We also need std::borrow::Borrow and url::{ParseError, Url}.

As the program grows, we'll come back and import a couple more things :)

```rust
use html5ever::tokenizer::{
    BufferQueue, Tag, TagKind, TagToken, Token, TokenSink, TokenSinkResult, Tokenizer,
    TokenizerOpts,
};
use std::borrow::Borrow;
use url::{ParseError, Url};
```

Struct and Implementation

As you can imagine, a web page contains a lot of links, so we want a struct that keeps all of them in a vector. We'll call it LinkQueue; it will hold all of the links that we'll be crawling.

For the LinkQueue struct we #[derive(Default, Debug)]. Deriving Default lets us create a LinkQueue with an empty links vector by calling LinkQueue::default(), and deriving Debug lets us print all of the links inside the queue.

```rust
#[derive(Default, Debug)]
struct LinkQueue {
    links: Vec<String>,
}
```
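Because LinkQueue only uses the standard library, the two derives can be tried on their own. The small program below is a standalone sketch (its `main` is only for illustration) showing what `Default` and `Debug` give us.

```rust
/// Same struct as in the tutorial, runnable without html5ever.
#[derive(Default, Debug)]
struct LinkQueue {
    links: Vec<String>,
}

fn main() {
    // Default gives us an empty Vec<String> for `links`.
    let mut queue = LinkQueue::default();
    assert!(queue.links.is_empty());

    // Debug lets us print the whole queue with `{:?}`.
    queue.links.push("/learn".to_string());
    println!("{:?}", queue); // LinkQueue { links: ["/learn"] }
}
```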

To use html5ever's Tokenizer, we implement the TokenSink trait for our LinkQueue. The tokenizer needs to push links into the queue while we keep ownership of it, so we implement the trait on a mutable reference: impl TokenSink for &mut LinkQueue.

The TokenSink trait requires an associated Handle type and a process_token method, which is where the main work happens.

process_token takes a mutable reference to self (our mutable LinkQueue), the token, and a line_number, and returns a TokenSinkResult. We don't need a handle, so we set Handle to the unit type (type Handle = ();) and use it as the result's type parameter.

TokenSinkResult is an enum that tells the tokenizer what to do after it has passed a token to us. When we find a link, we want to keep parsing the rest of the HTML to find all of the other links, so we return the TokenSinkResult::Continue variant from process_token.

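```rust
impl TokenSink for &mut LinkQueue {
    type Handle = ();

    // We're looking for tags like: <a href="link">some text</a>
    // The line number isn't needed for this crawler.
    fn process_token(&mut self, token: Token, _line_number: u64) -> TokenSinkResult<Self::Handle> {
        match token {
            // Only opening tags carry the href attribute we want.
            TagToken(
                ref tag @ Tag {
                    kind: TagKind::StartTag,
                    ..
                },
            ) => {
                if tag.name.as_ref() == "a" {
                    for attribute in tag.attrs.iter() {
                        if attribute.name.local.as_ref() == "href" {
                            // The attribute value is read as raw bytes.
                            let url_str: &[u8] = attribute.value.borrow();
                            self.links
                                .push(String::from_utf8_lossy(url_str).into_owned());
                        }
                    }
                }
            }
            // Ignore every other token (end tags, text, comments, ...).
            _ => {}
        }
        // Keep tokenizing the rest of the document.
        TokenSinkResult::Continue
    }
}
```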

Before returning TokenSinkResult::Continue, we match on the token itself. Token is an enum with several variants, and the only one we care about is TagToken.

A TagToken carries an HTML tag, which is what we're looking for. To keep the match exhaustive, we handle TagToken explicitly and add a catch-all arm (_ => {}) that ignores everything else.

Most HTML elements have two pieces: an opening tag and a closing tag. In html5ever, a tag's TagKind is either StartTag, the opening piece, or EndTag, the closing piece.

We're looking for the links inside <a> tags, and the href attribute lives on the opening tag, so we only want StartTag tokens. The pattern ref tag @ Tag { kind: TagKind::StartTag, .. } matches only start tags and binds the whole tag to tag. Once we know we have a start tag, an if check tests whether the tag name is a (tag.name.as_ref() == "a"). An opening tag can have several attributes, so we iterate over all of them (for attribute in tag.attrs.iter()) and check whether each attribute's local name is href (if attribute.name.local.as_ref() == "href").
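To see the shape of this match without pulling in html5ever, here is a standalone sketch. The `Token`, `Tag`, `TagKind` and `Attribute` types below are hypothetical, simplified stand-ins that only model the fields process_token uses (html5ever's real types store names and values differently), and `collect_hrefs` and `start_a` are illustrative helpers, not part of any library.

```rust
// Hypothetical stand-ins for html5ever's tokenizer types.
enum TagKind {
    StartTag,
    EndTag,
}

struct Attribute {
    name: String,
    value: String,
}

struct Tag {
    kind: TagKind,
    name: String,
    attrs: Vec<Attribute>,
}

#[allow(dead_code)]
enum Token {
    TagToken(Tag),
    CharacterTokens(String),
}

/// Collects the href of every opening <a> tag, using the same match shape
/// as process_token: bind the tag with `ref tag @`, require StartTag,
/// and ignore every other token with `_`.
fn collect_hrefs(tokens: &[Token]) -> Vec<String> {
    let mut links = Vec::new();
    for token in tokens {
        match *token {
            Token::TagToken(
                ref tag @ Tag {
                    kind: TagKind::StartTag,
                    ..
                },
            ) => {
                if tag.name == "a" {
                    for attribute in tag.attrs.iter() {
                        if attribute.name == "href" {
                            links.push(attribute.value.clone());
                        }
                    }
                }
            }
            _ => {}
        }
    }
    links
}

/// Builds an opening <a href="..."> token for the demo.
fn start_a(href: &str) -> Token {
    Token::TagToken(Tag {
        kind: TagKind::StartTag,
        name: "a".to_string(),
        attrs: vec![Attribute { name: "href".to_string(), value: href.to_string() }],
    })
}

fn main() {
    let tokens = vec![
        start_a("/learn"),
        Token::CharacterTokens("Learn".to_string()),
        Token::TagToken(Tag { kind: TagKind::EndTag, name: "a".to_string(), attrs: vec![] }),
    ];
    println!("{:?}", collect_hrefs(&tokens)); // ["/learn"]
}
```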

Once all of these checks pass, we know the attribute value is a URL. We get it by calling attribute.value.borrow() and annotating the result as a byte slice (let url_str: &[u8] = attribute.value.borrow();), because the value is stored as bytes. To push it into our links vector we need a String, so we call String::from_utf8_lossy(url_str), which decodes the bytes (replacing any invalid UTF-8 with the U+FFFD replacement character) and returns a Cow<str>. Finally, into_owned() turns that into an owned String, which we push into the vector.
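Here is a small, standard-library-only sketch of that byte-to-String step; the byte strings are made-up examples.

```rust
use std::borrow::Cow;

fn main() {
    // Valid UTF-8 is borrowed straight through as Cow::Borrowed...
    let ok: &[u8] = b"/learn";
    let cow = String::from_utf8_lossy(ok);
    assert!(matches!(cow, Cow::Borrowed(_)));

    // ...and into_owned() turns the Cow<str> into the String we store.
    let owned: String = cow.into_owned();
    println!("{}", owned); // /learn

    // Invalid bytes are replaced with U+FFFD instead of causing an error.
    let bad: &[u8] = &[b'/', 0xFF];
    println!("{}", String::from_utf8_lossy(bad));
}
```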

get_links function

Next, let's create a function called get_links. It takes the URL of a page and the page's HTML, and it returns a vector of URLs. First, we want the bare domain URL, which we'll use later to resolve relative links: we clone the URL passed into the function into a mutable variable called domain_url and clear its path and query string.

```rust
pub fn get_links(url: &Url, page: String) -> Vec<Url> {
    let mut domain_url = url.clone();
    domain_url.set_path("");
    domain_url.set_query(None);
```

We can create one of our link queues with the default method, which gives us an empty links vector inside our LinkQueue.

```rust
    let mut queue = LinkQueue::default();
    let mut tokenizer = Tokenizer::new(&mut queue, TokenizerOpts::default());
    let mut buffer = BufferQueue::new();
```

With our LinkQueue in place, we can create the HTML Tokenizer, passing it a mutable reference to the LinkQueue and TokenizerOpts::default() as the tokenizer options. Then we create a buffer queue (let mut buffer = BufferQueue::new();). The BufferQueue holds our page: String as input and is what the tokenizer reads the characters of the HTML page from. The tokenizer, on the other hand, is responsible for recognizing the tokens in the HTML document and handing them to our LinkQueue.

```rust
    buffer.push_back(page.into());
    let _ = tokenizer.feed(&mut buffer);
```

Here we push our page: String into the BufferQueue and feed the buffer to the tokenizer. As the tokenizer works through the page, every link it finds is pushed into our LinkQueue. Then we can iterate over those links and map over them to check what kind of URL each one is.

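```rust
    queue
        .links
        .iter()
        .map(|link| match Url::parse(link) {
            // A relative link such as "/learn": attach it to the domain.
            Err(ParseError::RelativeUrlWithoutBase) => domain_url.join(link).unwrap(),
            // Any other parse error: stop with a message.
            Err(_) => panic!("Malformed link found: {}", link),
            // Already an absolute URL: keep it as it is.
            Ok(url) => url,
        })
        .collect()
}
```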

We call Url::parse on each of the links inside of our links vector. This will allow us to determine whether or not we have a valid URL.

There's one error case we want to handle specifically: a relative URL (RelativeUrlWithoutBase), that is, a URL without the domain attached. It's common for web pages to use relative URLs rather than absolute ones. Rather than throwing away all of the links that point within the domain we're crawling, we can join these relative URLs onto the domain URL and pass them back as new absolute URLs. If we get any other kind of error, we panic with the message Malformed link found: {}, printing the offending link.

If the link is already a valid absolute URL, Url::parse returns Ok(url) and we pass it through unchanged. Finally, we call collect on the iterator returned by map to gather all of these URLs into a Vec<Url>.
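The control flow of that match can be sketched without the url crate. The example below is a hypothetical, much-simplified stand-in: `resolve` only recognizes absolute `http://`/`https://` links and root-relative `/path` links (Url::parse and Url::join handle far more, such as `page.html`, `../page` or `?query`), and `resolve_all` skips links it can't handle with `filter_map` instead of panicking, which is one possible alternative to the `panic!` arm.

```rust
/// Hypothetical stand-in for the three match arms in get_links.
fn resolve(domain: &str, link: &str) -> Result<String, String> {
    if link.starts_with("http://") || link.starts_with("https://") {
        // Like `Ok(url) => url`: already absolute, keep it.
        Ok(link.to_string())
    } else if link.starts_with('/') && !link.starts_with("//") {
        // Like the RelativeUrlWithoutBase arm: attach it to the domain.
        Ok(format!("{}{}", domain.trim_end_matches('/'), link))
    } else {
        // This toy doesn't handle other forms; the real url crate would.
        Err(format!("unsupported link: {}", link))
    }
}

/// Resolves every link, skipping (and logging) the ones that fail.
fn resolve_all(domain: &str, links: &[&str]) -> Vec<String> {
    links
        .iter()
        .filter_map(|link| match resolve(domain, link) {
            Ok(url) => Some(url),
            Err(e) => {
                eprintln!("skipping: {}", e);
                None
            }
        })
        .collect()
}

fn main() {
    let links = ["/learn", "https://crates.io/", "page.html"];
    println!("{:?}", resolve_all("https://www.rust-lang.org/", &links));
}
```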

crawl function

If we were to feed an HTML document into the function above, it would scan over the HTML and find all of the links in it. But of course, we want to request a URL from a server and have the server send us the HTML. So let's do that!

We need to make two more imports. We need to import async_std::task, and we need to import the surf client.

```rust
use async_std::task;
use surf;
```

Let's create some helper types. The first, CrawlResult, is a Result whose error type is a boxed trait object, so it can hold any error that is Send and Sync:

```rust
type CrawlResult = Result<(), Box<dyn std::error::Error + Send + Sync + 'static>>;
```

Next, we need a BoxFuture type. On the outside is std::pin::Pin, which guarantees that the value it points to won't be moved in memory. Inside the Pin is a Box, and inside the Box is a trait object implementing std::future::Future whose Output is CrawlResult, the type we just created! The + Send bound means the future can be moved between threads, which matters because we'll await it inside tasks spawned with async_std::task::spawn.

```rust
type BoxFuture = std::pin::Pin<Box<dyn std::future::Future<Output = CrawlResult> + Send>>;
```

In both aliases, Box<dyn ...> means a heap-allocated value of some concrete type that implements the trait; the concrete type is erased, so any error or future of the right shape fits.
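A quick way to see why a boxed error type is convenient: the `?` operator converts any error that implements `std::error::Error + Send + Sync` into `Box<dyn Error + Send + Sync>`, so one function can propagate different kinds of errors. The sketch below uses a hypothetical `check_depth` helper, with a standard-library parse error standing in for network errors.

```rust
use std::error::Error;

// Same alias as in the tutorial.
type CrawlResult = Result<(), Box<dyn Error + Send + Sync + 'static>>;

/// Hypothetical helper: parses a max depth from text.
fn check_depth(input: &str) -> CrawlResult {
    // `?` boxes the ParseIntError into our error type automatically.
    let max: u8 = input.trim().parse()?;
    if max == 0 {
        // A plain &str can be boxed into the same error type with `.into()`.
        return Err("max depth must be at least 1".into());
    }
    println!("max depth = {}", max);
    Ok(())
}

fn main() {
    println!("{}", check_depth("2").is_ok());
    if let Err(e) = check_depth("two") {
        println!("error: {}", e);
    }
}
```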

Now we can create a function called crawl!

```rust
async fn crawl(pages: Vec<Url>, current: u8, max: u8) -> CrawlResult {
    println!("Current Depth: {}, Max Depth: {}", current, max);

    if current > max {
        println!("Reached Max Depth");
        return Ok(());
    }

    // ...
}
```

Because crawl is an async function, calling it doesn't produce a CrawlResult directly: it returns a future that resolves to a CrawlResult. The function takes a vector of URLs, which will be our pages (pages: Vec<Url>), the current depth as a u8 value (current: u8), and the max depth, also a u8 value (max: u8). These depths determine how many links away from the original page we crawl. We also print the current depth and the max depth ("Current Depth: {}, Max Depth: {}", current, max).

Then we check whether current > max, because if it is, we want to stop crawling.

```rust
    let mut tasks = vec![];

    println!("crawling: {:?}", pages);

    for url in pages {
        let task = task::spawn(async move {
            println!("getting: {}", url);

            let mut res = surf::get(&url).await?;
            let body = res.body_string().await?;

            let links = get_links(&url, body);

            println!("Following: {:?}", links);

            // ...
        });

        tasks.push(task);
    }
```

What we're going to do is spawn a separate task for each URL. An async-std task is a lightweight unit of work, similar to a green thread, scheduled by async-std's executor, so the tasks run concurrently with one another. We create a vector for all of our tasks and print out the pages we're crawling (println!("crawling: {:?}", pages);).

Now, for each URL in our pages vector, we spawn a task. Inside each task we print the URL we're fetching (println!("getting: {}", url);) and then use the surf library to send a GET request to it: let mut res = surf::get(&url).await?;.

Once we have the response from surf::get, we call body_string on it, which again gives us back a future that we await (let body = res.body_string().await?;). We wait until we have the entire body before moving on and calling our get_links function with both the url and the body.

```rust
let links = get_links(&url, body);
```

We print out all of the links we're about to follow (println!("Following: {:?}", links);), and after spawning the task, we push its handle into our tasks vector. Keep in mind that task::spawn hands each task to async-std's executor right away, so the tasks start running as soon as they're spawned; what we store in the vector is a JoinHandle for each one. To wait for the tasks to finish and find out whether they succeeded, we take the handles out of the tasks vector and await each of them. In short, we go through each URL in pages and spawn a task that fetches it and extracts its links. To keep following those links, each task should call our crawl function recursively on the links it found.
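The spawn-then-wait pattern can be sketched with only the standard library. In this illustration `std::thread::spawn` stands in for `async_std::task::spawn` (these are OS threads, not async tasks), and `fake_fetch` and `crawl_all` are hypothetical helpers. As with async-std, each worker starts running when it is spawned, and waiting on the handles only collects the results. In the async version, the equivalent loop would be `for task in tasks { task.await?; }` followed by `Ok(())`, since an async-std `JoinHandle` is itself a future that resolves to the task's output.

```rust
use std::thread;

/// Hypothetical stand-in for "fetch a page and count its links".
fn fake_fetch(url: &str) -> Result<usize, String> {
    if url.is_empty() {
        return Err("empty url".to_string());
    }
    Ok(url.len())
}

/// Spawns one worker per URL, then waits on every handle in order.
fn crawl_all(pages: Vec<String>) -> Result<Vec<usize>, String> {
    let mut tasks = vec![];
    for url in pages {
        // Each worker starts running immediately.
        tasks.push(thread::spawn(move || fake_fetch(&url)));
    }

    let mut results = vec![];
    for task in tasks {
        // join() fails only if the worker panicked; the second `?`
        // propagates the worker's own error, like `task.await?` would.
        let outcome = task.join().map_err(|_| "worker panicked".to_string())?;
        results.push(outcome?);
    }
    Ok(results)
}

fn main() {
    let pages = vec![
        "https://www.rust-lang.org/".to_string(),
        "https://crates.io/".to_string(),
    ];
    println!("{:?}", crawl_all(pages));
}
```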

box_crawl function

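There's a catch: an async fn can't simply await a call to itself, because its future would have to contain a copy of itself and would have no fixed size. The workaround is to put the recursive call's future behind a pointer on the heap. So we set up a wrapper function called box_crawl, which takes the pages, the current depth and the max depth, and returns our BoxFuture type.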

```rust
fn box_crawl(pages: Vec<Url>, current: u8, max: u8) -> BoxFuture {
    Box::pin(crawl(pages, current, max))
}
```

Inside it, Box::pin(crawl(pages, current, max)) puts the future returned by our crawl call on the heap and pins it there.

Now we can go back to our crawl function and, at the end of each spawned task, call box_crawl with the links we found, the current depth plus 1, and the same max depth:

```rust
box_crawl(links, current + 1, max).await
```
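Here is a self-contained sketch of the same recursion trick, using only the standard library. `count_levels` is a hypothetical async function with the same depth check as crawl, `box_count_levels` plays the role of box_crawl, and the tiny `block_on` is an illustrative single-future executor standing in for `async_std::task::block_on` (it is not how async-std implements it).

```rust
use std::future::Future;
use std::pin::Pin;
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};
use std::thread::{self, Thread};

// Like the tutorial's BoxFuture, but with a u32 output to keep it small.
type BoxFuture = Pin<Box<dyn Future<Output = u32> + Send>>;

/// Same depth check as crawl; returns how many levels were visited.
async fn count_levels(current: u8, max: u8) -> u32 {
    if current > max {
        return 0;
    }
    // Recurse through the boxed wrapper, as crawl does with box_crawl.
    1 + box_count_levels(current + 1, max).await
}

fn box_count_levels(current: u8, max: u8) -> BoxFuture {
    Box::pin(count_levels(current, max))
}

/// Waker that unparks the thread running block_on.
struct ThreadWaker(Thread);

impl Wake for ThreadWaker {
    fn wake(self: Arc<Self>) {
        self.0.unpark();
    }
}

/// Minimal executor: polls one future until it is ready.
fn block_on<F: Future>(fut: F) -> F::Output {
    let mut fut = Box::pin(fut);
    let waker = Waker::from(Arc::new(ThreadWaker(thread::current())));
    let mut cx = Context::from_waker(&waker);
    loop {
        match fut.as_mut().poll(&mut cx) {
            Poll::Ready(value) => return value,
            // Sleep until the waker unparks this thread.
            Poll::Pending => thread::park(),
        }
    }
}

fn main() {
    // Same depths as the tutorial's main: start at 1, max depth 2.
    println!("{}", block_on(box_count_levels(1, 2))); // 2
}
```

Awaiting `count_levels(current + 1, max)` directly inside `count_levels` is rejected by the compiler; routing the call through the boxed wrapper is what makes it compile.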

Now we're finished with our crawler logic!

main function

Let's finish up our application by writing our main function.

```rust
fn main() -> CrawlResult {
    task::block_on(async {
        box_crawl(vec![Url::parse("https://www.rust-lang.org").unwrap()], 1, 2).await
    })
}
```

We call box_crawl inside task::block_on, which runs the future to completion and blocks the current thread until it's done. As an example, I'm using the rust-lang.org website, with a current depth of 1 and a max depth of 2.

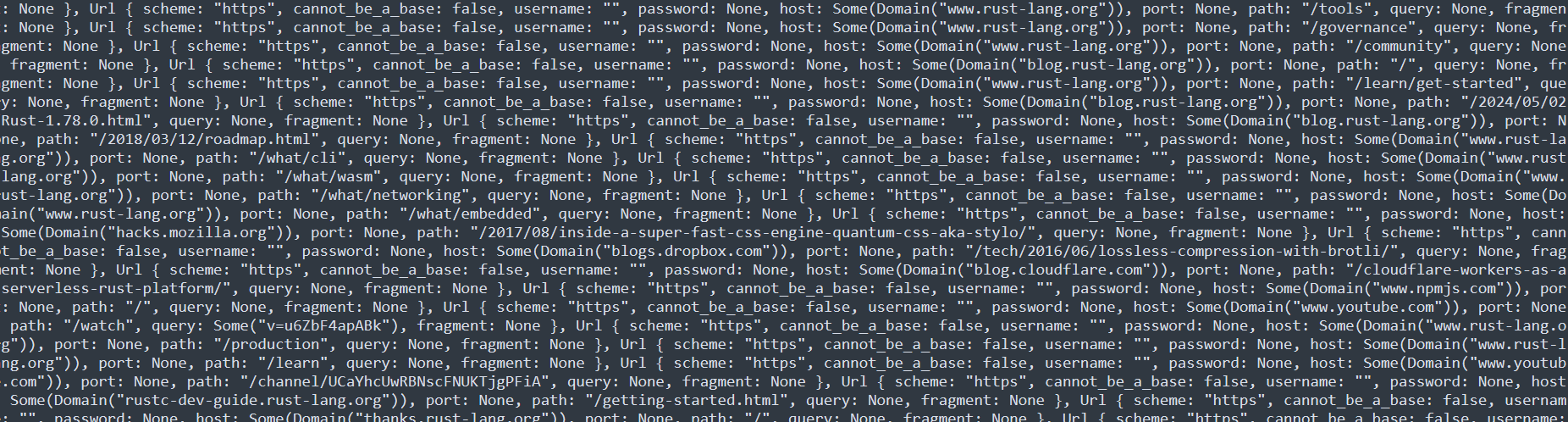

Run it

Now we can run the application with cargo run. It goes through all of the links and follows them, as we'd expect from a web crawler. If you run the same example as me (rust-lang.org, a current depth of 1 and a max depth of 2), you'll see quite a lot of output!

Find the code here:

Happy Rust Coding! 🤞🦀

👋 Hello, I'm Eleftheria, Community Manager, developer, public speaker, and content creator.

🥰 If you liked this article, consider sharing it.

