🚀Unraveling the Kubernetes Architecture: A Journey Through Containers and Clusters🚀

Sprasad Pujari

Kubernetes, also known as K8s, is a powerful open-source container orchestration platform that simplifies the deployment, scaling, and management of containerized applications. In this blog post, we'll dive into the architecture of Kubernetes and explore the key components that make it such a versatile and robust system.

The Tale of Two Architectures: Docker vs. Kubernetes 🐳

Before we delve into the intricacies of Kubernetes, it's essential to understand the fundamental difference between Docker and Kubernetes architectures:

In Docker, the simplest unit of deployment is a container 📦, encapsulating an application and its dependencies.

Just as a Java application needs a Java runtime environment to run, a container needs a container runtime. For Docker, that runtime is provided by the Docker Engine, whose background process is the Docker daemon; without it, you cannot run Docker containers.

In Kubernetes, the smallest deployable unit is a Pod 🥔, which can contain one or more containers.

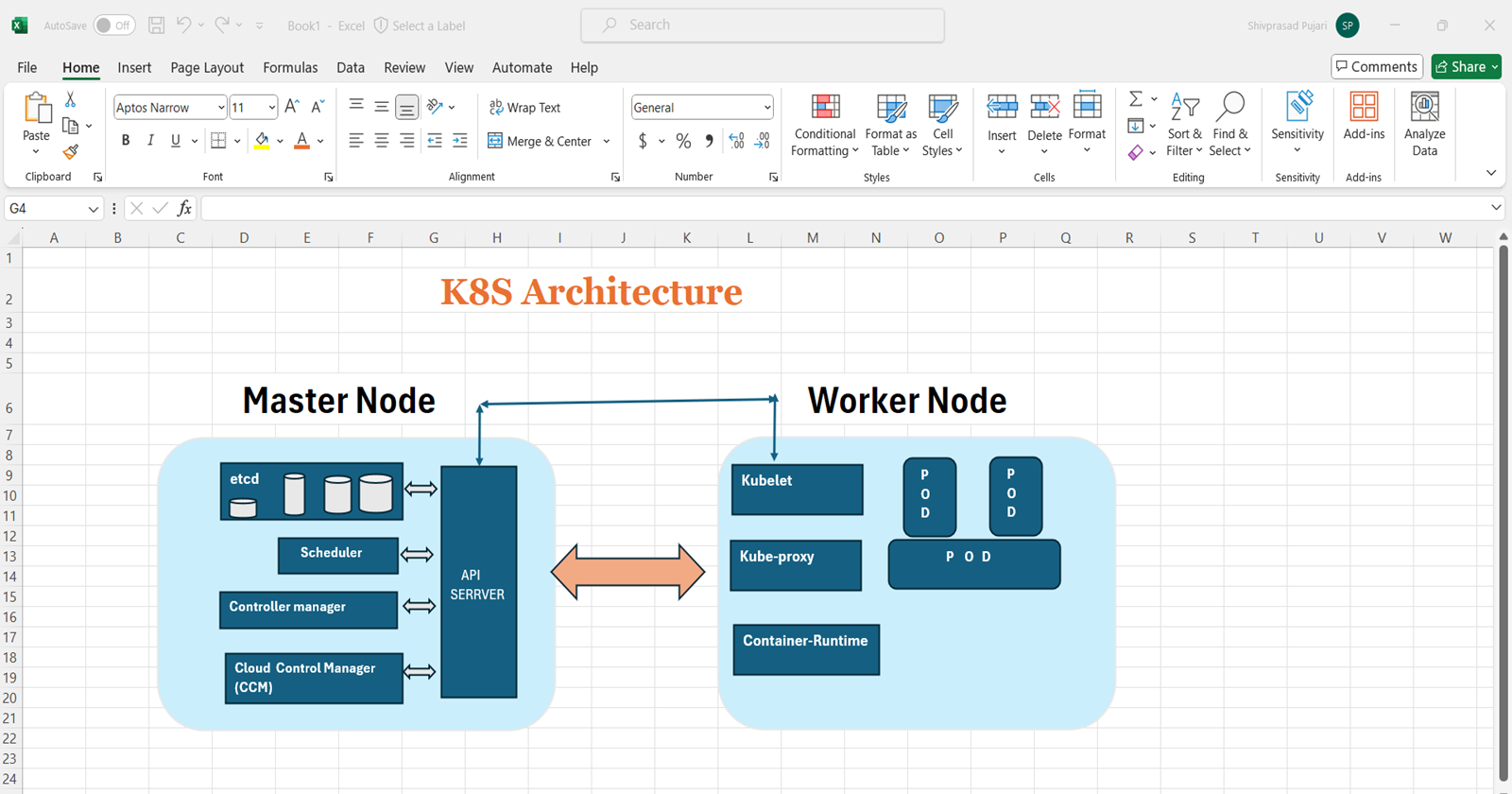
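To make the "one or more containers" point concrete, here is a minimal sketch of a Pod manifest built as a plain Python dict and printed as JSON (kubectl accepts JSON as well as YAML). The Pod name `web-pod`, the container names, and both image tags are illustrative assumptions, not something taken from a real cluster.

```python
import json

# A Pod manifest as a plain dict. "web-pod", "app", "log-sidecar" and the
# image tags are hypothetical values chosen only for illustration.
pod_manifest = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-pod", "labels": {"app": "web"}},
    "spec": {
        "containers": [
            {"name": "app", "image": "nginx:1.25"},
            {
                "name": "log-sidecar",
                "image": "busybox:1.36",
                "command": ["sh", "-c", "tail -f /dev/null"],
            },
        ]
    },
}


def container_names(manifest):
    """Return the names of all containers declared in a Pod manifest."""
    return [c["name"] for c in manifest["spec"]["containers"]]


# Both containers belong to the same Pod and are scheduled together.
print(container_names(pod_manifest))
print(json.dumps(pod_manifest, indent=2))
```

Saving the printed JSON to a file would give something you could try with `kubectl apply -f`, assuming you have a test cluster available.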

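A Kubernetes cluster has Master Nodes (the control plane) and Worker Nodes. Production environments typically run several of each, for availability and capacity. Instructions for the cluster pass through the API Server on the Master Node; the Kubelet on each Worker Node watches the API Server for work assigned to its node and acts on it.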

The smallest deployment unit in Kubernetes is a Pod, whereas in Docker, it's a Container. Suppose a Pod is deployed on a Worker Node. A component called Kubelet runs on that node, monitors the Pod's status, such as whether it's running or not, and reports that status to the API Server (part of the Master Node).

Each Worker Node runs a container runtime that starts the containers belonging to its Pods. Unlike Docker, Kubernetes doesn't require Docker specifically; it supports any runtime that implements the Container Runtime Interface (CRI), such as containerd or CRI-O.

While Docker provides a runtime environment for running containers, Kubernetes is a higher-level orchestration system that manages and orchestrates the deployment, scaling, and lifecycle of containerized applications across a cluster of nodes.

The Kubernetes Cluster: A Harmonious Symphony 🎼

At the heart of Kubernetes lies the cluster, a set of nodes working together to run your containerized applications. The cluster is divided into two main components:

The Master Node: The Conductor 🎻

The Master Node, also known as the Control Plane, is the central management component of the Kubernetes cluster. It consists of several key players:

🎤 API Server: The primary management component that exposes the Kubernetes API and serves as the entry point for all cluster operations.

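The API Server validates and processes every request, whether it comes from users, from other control plane components, or from the Kubelets, and it is the only component that reads from and writes to etcd directly. It does not choose where a Pod runs; that is the Scheduler's job. It is the core component and the heart of Kubernetes.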

🎷 Scheduler: Responsible for scheduling and assigning Pods to appropriate Worker Nodes based on resource availability and constraints.

When a new Pod has no node assigned, the Scheduler filters out the nodes that cannot run it, ranks the remaining ones, and picks one (e.g., Node-1). It records that decision through the API Server, and the Kubelet on the chosen node then starts the Pod.
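The idea of picking a node based on resource availability can be sketched in a few lines. This is a toy model, not the real scheduler: the `Node` and `PodRequest` classes, the node names, and the "most free CPU wins" rule are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    free_cpu_millicores: int
    free_memory_mib: int


@dataclass
class PodRequest:
    name: str
    cpu_millicores: int
    memory_mib: int


def pick_node(pod, nodes):
    """Filter nodes that can fit the Pod, then prefer the one with most free CPU."""
    feasible = [
        n for n in nodes
        if n.free_cpu_millicores >= pod.cpu_millicores
        and n.free_memory_mib >= pod.memory_mib
    ]
    if not feasible:
        return None  # no node fits; in a real cluster the Pod would stay Pending
    return max(feasible, key=lambda n: n.free_cpu_millicores).name


nodes = [
    Node("node-1", free_cpu_millicores=500, free_memory_mib=1024),
    Node("node-2", free_cpu_millicores=2000, free_memory_mib=4096),
    Node("node-3", free_cpu_millicores=1500, free_memory_mib=256),
]
pod = PodRequest("web", cpu_millicores=1000, memory_mib=512)

# node-1 lacks CPU and node-3 lacks memory, so only node-2 is feasible.
print(pick_node(pod, nodes))
```

The real Scheduler considers many more constraints (taints, affinity rules, and so on), but it follows the same filter-then-score shape.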

🎺 Controller Manager: Manages various controllers responsible for maintaining the desired state of the cluster, such as the Replication Controller, Deployment Controller, and others.

Kubernetes has a Controller Manager component that runs various controllers, such as the one for Replica Sets. For example, if you set replicas: 2 in your Deployment's YAML file, a Replica Set with two Pods is created, and the Replica Set controller replaces any Pod that fails so that two stay running.
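Controllers work by repeatedly comparing the desired state with what is actually running and correcting the difference. A minimal sketch of that comparison, assuming a hypothetical `reconcile` helper and made-up Pod names:

```python
def reconcile(desired_replicas, running_pods):
    """Return the action needed to move the observed state to the desired state."""
    current = len(running_pods)
    if current < desired_replicas:
        return ("create", desired_replicas - current)
    if current > desired_replicas:
        return ("delete", current - desired_replicas)
    return ("noop", 0)


# One Pod of two has failed, so one replacement must be created.
print(reconcile(2, ["web-a"]))
# The desired and observed states match, so nothing needs to happen.
print(reconcile(2, ["web-a", "web-b"]))
```

A real controller runs this kind of check in a loop, triggered by changes it observes through the API Server.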

🥁 etcd: A distributed key-value store that persists the cluster's configuration data and state, acting as the heartbeat of the cluster.

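etcd is the cluster's source of truth rather than a backup: it stores every object's configuration and state as key-value pairs. Because losing etcd means losing the cluster state, it should itself be backed up regularly.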
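To picture what "key-value pairs" means here, the sketch below uses an in-memory stand-in for etcd. It is not an etcd client: the `ToyKeyValueStore` class is hypothetical, and the values are JSON strings for readability. The `/registry/...` key layout mirrors the default prefix Kubernetes uses, while the Pod names are made up.

```python
import json


class ToyKeyValueStore:
    """In-memory stand-in for a key-value store with prefix listing."""

    def __init__(self):
        self._data = {}
        self._revision = 0

    def put(self, key, value):
        """Store a value under a key and return the new store revision."""
        self._revision += 1
        self._data[key] = json.dumps(value)
        return self._revision

    def get(self, key):
        """Return the stored value, or None if the key is absent."""
        raw = self._data.get(key)
        return None if raw is None else json.loads(raw)

    def list_prefix(self, prefix):
        """Return all keys that start with the given prefix, sorted."""
        return sorted(k for k in self._data if k.startswith(prefix))


store = ToyKeyValueStore()
store.put("/registry/pods/default/web-a", {"phase": "Running", "node": "node-1"})
store.put("/registry/pods/default/web-b", {"phase": "Pending", "node": None})
store.put("/registry/services/specs/default/web", {"port": 80})

# List every Pod key in the "default" namespace.
print(store.list_prefix("/registry/pods/default/"))
print(store.get("/registry/pods/default/web-a"))
```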

Cloud Controller Manager (CCM): Used when Kubernetes runs on a cloud platform, such as AWS (EKS) or Azure (AKS). It runs the controllers that talk to the cloud provider's API, translating cluster needs, such as a load balancer for a Service or routes between nodes, into provider-specific calls. The Cloud Controller Manager is open source, and each cloud provider maintains its own implementation.

The Worker Nodes: The Musicians 🎸

The Worker Nodes, also known as the Data Plane, are responsible for running the actual workloads, such as Pods and containers. Each Worker Node consists of the following components:

🎙️ Kubelet: An agent that runs on each Worker Node, managing Pods and ensuring they are running as expected.

The Kubelet watches the API Server for Pods assigned to its node, asks the container runtime to start them, and regularly reports each Pod's status back to the API Server (part of the Master Node).

🎚️ Container Runtime (e.g., Docker, containerd, CRI-O): Responsible for pulling container images and running containers.

The runtime lives on the node, not inside the Pod. Kubernetes doesn't require Docker specifically; any runtime that implements the Container Runtime Interface (CRI) works, such as containerd or CRI-O, and Docker Engine can still be used through the cri-dockerd adapter.

🎛️ Kube-Proxy: A network proxy that facilitates communication between Pods and manages network rules.

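Kube-Proxy maintains the network rules on each node that route traffic sent to a Service to one of its backing Pods, which gives basic load balancing. Assigning IP addresses to Pods is handled by the cluster's network plugin, not by Kube-Proxy. Together, these components let the Worker Nodes, or Data Plane, run our applications smoothly.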
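The load-balancing role can be illustrated with a toy proxy that maps a Service address to a rotating list of Pod addresses. This is only a sketch: the `ToyServiceProxy` class and all IP addresses are assumptions, and the real Kube-Proxy typically programs kernel rules (iptables or IPVS) instead of handling each request itself, with backend selection that depends on the mode.

```python
import itertools


class ToyServiceProxy:
    """Maps a Service IP to its backend Pod IPs and rotates through them."""

    def __init__(self):
        self._backends = {}

    def set_endpoints(self, service_ip, pod_ips):
        """Register (or replace) the Pod IPs behind a Service IP."""
        self._backends[service_ip] = itertools.cycle(list(pod_ips))

    def route(self, service_ip):
        """Return the Pod IP that should receive the next connection."""
        return next(self._backends[service_ip])


proxy = ToyServiceProxy()
proxy.set_endpoints("10.96.0.10", ["10.244.1.5", "10.244.2.7"])

# Three connections to the Service IP are spread across the two Pods.
targets = [proxy.route("10.96.0.10") for _ in range(3)]
print(targets)
```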

The Building Blocks of Kubernetes 🧱

Kubernetes introduces several key concepts and components that work together to manage and orchestrate containerized applications:

🥔 Pods: The smallest deployable unit in Kubernetes, consisting of one or more containers that share resources and a network namespace.

🔄 Replica Sets: Ensure that a specified number of Pod replicas are running at any given time.

🚀 Deployments: Manage the rolling updates and rollbacks of Pods in a declarative way.

🌐 Services: Provide a stable network endpoint and load balancing for Pods.

🚪 Ingress: Exposes HTTP and HTTPS routes from outside the cluster to services within the cluster.

💾 Volumes: Provide storage for Pods that survives container restarts; Persistent Volumes go further and keep data even after the Pod itself is deleted.

🔐 Namespaces: Provide virtual clusters within a single physical cluster, allowing for resource isolation and access control.
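Several of these building blocks are tied together by labels: a Service or Replica Set finds "its" Pods by matching a label selector against each Pod's labels. A minimal sketch of that equality-based matching, with hypothetical Pod names and labels:

```python
def matches(selector, labels):
    """True if every key/value pair in the selector is present in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def select_pods(selector, pods):
    """Return the names of Pods whose labels satisfy the selector."""
    return [name for name, labels in pods.items() if matches(selector, labels)]


# Hypothetical Pods and their labels.
pods = {
    "web-a": {"app": "web", "tier": "frontend"},
    "web-b": {"app": "web", "tier": "frontend"},
    "db-a": {"app": "db", "tier": "backend"},
}

# A Service with this selector would send traffic only to the two web Pods.
print(select_pods({"app": "web"}, pods))
```

Because the link is by label rather than by name, Pods can be replaced or scaled without the Service definition changing.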

A Real-World Example: Orchestrating Your Application 🎬

Let's illustrate how Kubernetes manages containerized applications with a real-world example:

👨💻 A developer creates a Docker image containing their application and pushes it to a container registry.

🧑💼 The cluster administrator creates a Deployment object in Kubernetes, specifying the desired state of the application (e.g., number of replicas, container image, resource requirements).

🎤 The Kubernetes API Server validates the Deployment request and stores it in etcd. The Deployment and Replica Set controllers then create the required Pod objects, which start out without an assigned Worker Node.

🎷 The Scheduler assigns the Pods to the selected Worker Nodes based on available resources and constraints.

🎺 The Kubelet on each Worker Node instructs the container runtime to pull the required container images and start the containers within the Pods.

🎛️ The Kube-Proxy component sets up network rules to allow communication between the Pods and external clients.

🌐 The application is now accessible through a Service, which provides load balancing and a stable network endpoint.

🔄 If a Pod fails or becomes unhealthy, the Replica Set controller (the successor to the older Replication Controller) ensures that a new Pod is automatically created to maintain the desired state.
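The Deployment object that the cluster administrator creates in the steps above could look roughly like the sketch below, built as a Python dict and printed as JSON. The `build_deployment` helper, the name `web`, the image tag, and the resource figures are all illustrative assumptions.

```python
import json


def build_deployment(name, image, replicas):
    """Build a minimal apps/v1 Deployment manifest as a plain dict."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the Pod template below.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "resources": {
                                "requests": {"cpu": "250m", "memory": "128Mi"}
                            },
                        }
                    ]
                },
            },
        },
    }


deployment = build_deployment("web", "nginx:1.25", replicas=2)
print(json.dumps(deployment, indent=2))
```

The three fields from the walkthrough appear here directly: the number of replicas, the container image, and the resource requirements the Scheduler uses when choosing a node.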

Conclusion: Unlocking the Power of Kubernetes 🔓

Kubernetes provides a robust and flexible architecture for managing containerized applications at scale. With its modular design, extensibility, and rich set of features, Kubernetes simplifies the deployment, scaling, and management of modern applications across a wide range of environments, from on-premises data centers to public clouds.

Whether you're a developer, DevOps engineer, or IT professional, understanding the Kubernetes architecture is essential for effectively leveraging this powerful platform and unlocking its full potential. So, buckle up and get ready to embrace the world of containerized applications with Kubernetes! 🚀

Thank you for joining me on this journey through the world of cloud computing! Your interest and support mean a lot to me, and I'm excited to continue exploring this fascinating field together. Let's stay connected and keep learning and growing as we navigate the ever-evolving landscape of technology.

LinkedIn Profile: https://www.linkedin.com/in/prasad-g-743239154/

Feel free to reach out to me directly at spujari.devops@gmail.com. I'm always open to hearing your thoughts and suggestions, as they help me improve and better cater to your needs. Let's keep moving forward and upward!

If you found this blog post helpful, please consider showing your support by giving it a round of applause👏👏👏. Your engagement not only boosts the visibility of the content, but it also lets other DevOps and Cloud Engineers know that it might be useful to them too. Thank you for your support! 😀

Thank you for reading and happy deploying! 🚀

Best Regards,

Sprasad

Written by

Sprasad Pujari

Greetings! I'm Sprasad P, a DevOps Engineer with a passion for optimizing development pipelines, automating processes, and enabling teams to deliver software faster and more reliably.