Step by Step - Deploying your Counting App with a Redis Cluster running as a Kubernetes StatefulSet

ferozekhan

Kubernetes has revolutionized the way we deploy and manage containers and cloud native applications. While many workloads, such as web applications, are stateless and can be easily scaled and managed with Deployments or ReplicaSets, there are cases where state persistence is crucial. This is where StatefulSets come into play. StatefulSets are designed for stateful applications, where each instance requires a unique identity and stable, persistent storage.

In this article, we'll delve into Kubernetes StatefulSets and demonstrate how to create and manage them step-by-step.

Stateless vs Stateful Applications?

All software has some degree of state. In some applications, such as web applications that serve HTML and other static files, that state does not affect the users. These applications are called stateless applications because they keep no record of previous requests, and each request is handled as if it were completely new. Nothing binds the user's browser to a particular instance of the web server. We usually deploy multiple instances of such stateless applications with a Kubernetes Deployment behind a load balancer, so the web page may be served by any of these instances. If one instance fails, the other replicas continue to serve the web requests. The instances run as containers inside Pods that are identical and interchangeable. They are created in no particular order, and a request can be routed to any of them.

Example: A Node.js frontend web application forwards requests to a MongoDB backend. The frontend keeps no state of its own; depending on the type of request, MongoDB either updates data based on its previous state or queries the data.

Stateful applications store data to keep track of their state and are often deployed as StatefulSets. Applications such as databases, message queues and caches have a much higher degree of state because they rely on storage to persist their data. They are often deployed as a backend for your stateless applications. For instance, a user simply visiting a website can be considered a use case for a stateless application, while a user authenticating on the website is an example of a stateless application communicating with a stateful application, such as a backend datastore that holds the user's credentials.

Stateful applications are deployed using the StatefulSet resource of the Kubernetes API. The instances run as containers inside Pods that are NOT identical and NOT interchangeable. By default they are created one at a time in ordinal order and removed in reverse order, rather than all at once. Clients often need to address a specific Pod instead of an arbitrary one. Each Pod has its own PersistentVolume for data persistence. The Pods keep a unique identity across rescheduling and restarts because, in a typical replicated database, only ONE instance acts as the source of truth and accepts both READ and WRITE requests, while the other instances are READ only and must hold the same data as the first instance. This is an active/passive architecture in which the read replicas sync their data from the master. The Pods have fixed, ordered names such as mysql-0, mysql-1 and mysql-2, and they also have fixed DNS names. When a Pod restarts, its IP address may change, but it keeps its unique identifiers (Pod name and DNS name), which lets it retain its state and role within the cluster.

What is a StatefulSet?

A StatefulSet is a Kubernetes resource that manages the deployment and scaling of a set of Pods. Unlike a Deployment, a StatefulSet maintains a sticky identity for each of its Pods. These Pods are created from the same spec but are not interchangeable: each Pod has a persistent identifier that it maintains across rescheduling.

StatefulSets are best suited to stateful applications that store data to keep track of their state. Examples: databases, message queues and caches.

Key features of StatefulSets:

Stable, unique network identifiers: Each Pod in a StatefulSet gets a unique hostname that stays the same regardless of where the Pod is scheduled.

Stable, persistent storage: Each Pod gets its own PersistentVolumeClaim (PVC) that it retains across rescheduling.

Ordered, graceful deployment and scaling: Pods are created, deleted, and scaled in a controlled, sequential manner.
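
To make the first feature concrete, here is a minimal shell sketch that prints the stable DNS names the Pods of a StatefulSet receive through its headless Service. The pattern pod-name.service.namespace.svc.cluster-domain follows the Kubernetes documentation. The helper name statefulset_dns_names is hypothetical, the argument values are borrowed from the demo later in this article, and cluster.local is assumed as the cluster domain (the usual default, but it is configurable).

```shell
#!/bin/sh
# Hypothetical helper: print the stable DNS name of every Pod in a StatefulSet.
# Usage: statefulset_dns_names <statefulset> <service> <namespace> <replicas> [cluster-domain]
statefulset_dns_names() {
  sts="$1"; svc="$2"; ns="$3"; replicas="$4"
  domain="${5:-cluster.local}"   # assumption: default cluster domain
  i=0
  while [ "$i" -lt "$replicas" ]; do
    # The Pod name is <statefulset>-<ordinal>; the headless Service supplies the rest.
    printf '%s-%d.%s.%s.svc.%s\n' "$sts" "$i" "$svc" "$ns" "$domain"
    i=$((i + 1))
  done
}

# First three Pods of the Redis StatefulSet used in the demo.
statefulset_dns_names redis-cluster redis-cluster statefulns 3
```

These names stay the same when a Pod is rescheduled, even though the IP address behind them may change.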

Step-by-Step Demo: Deploying a StatefulSet

Prerequisites

Before we start, ensure you have:

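A running Kubernetes cluster (you can use KinD for local testing), as described in my previous blog article.

You may also use any cloud-based managed Kubernetes service, such as AKS on Azure, EKS on AWS or GKE on Google Cloud. If you do, check that the storageClassName used in the StatefulSet manifest ("standard", the default class in KinD) exists in your cluster, and change it if it does not.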

Step 1: Get the Source Code Files

Let us download the source code files from my GitHub repository.

git clone https://github.com/mfkhan267/flask-redis-cluster-app.git

cd flask-redis-cluster-app/k8s/

Step 2: Create the Namespace for the project Demo

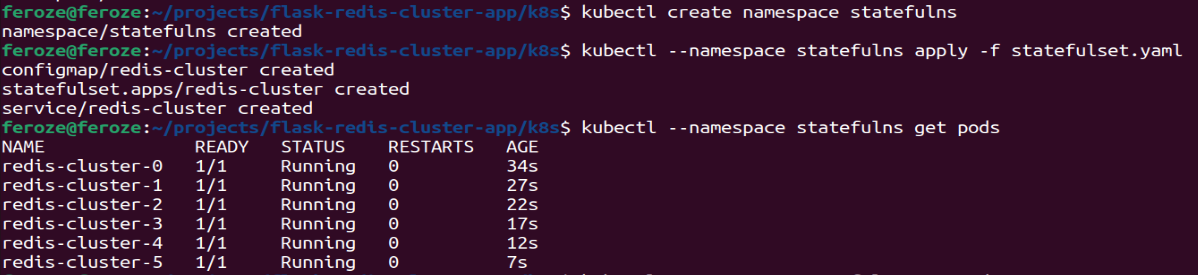

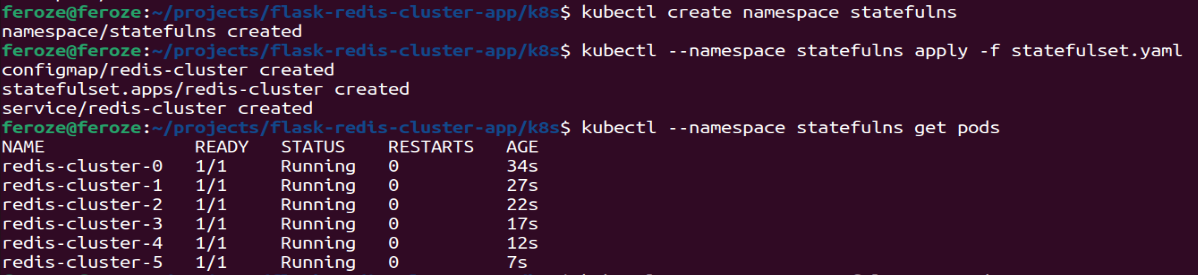

kubectl create namespace statefulns

Step 3: Create a StatefulSet for Redis Cluster

Let us create the StatefulSet for the Redis Cluster with a headless service that is required for the StatefulSet to provide network identities to the Pods.

---
apiVersion: v1
kind: ConfigMap
metadata:
  name: redis-cluster
data:
  update-node.sh: |
    #!/bin/sh
    REDIS_NODES="/data/nodes.conf"
    sed -i -e "/myself/ s/[0-9]\{1,3\}\.[0-9]\{1,3\}\.[0-9]\{1,3\}\.[0-9]\{1,3\}/${POD_IP}/" ${REDIS_NODES}
    exec "$@"
  redis.conf: |+
    cluster-enabled yes
    cluster-require-full-coverage no
    cluster-node-timeout 15000
    cluster-config-file /data/nodes.conf
    cluster-migration-barrier 1
    appendonly yes
    protected-mode no
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: redis-cluster
spec:
  serviceName: redis-cluster
  replicas: 6
  selector:
    matchLabels:
      app: redis-cluster
  template:
    metadata:
      labels:
        app: redis-cluster
    spec:
      containers:
      - name: redis
        image: redis:7.0.15-alpine
        ports:
        - containerPort: 6379
          name: client
        - containerPort: 16379
          name: gossip
        command: ["/conf/update-node.sh", "redis-server", "/conf/redis.conf"]
        env:
        - name: POD_IP
          valueFrom:
            fieldRef:
              fieldPath: status.podIP
        volumeMounts:
        - name: conf
          mountPath: /conf
          readOnly: false
        - name: data
          mountPath: /data
          readOnly: false
      volumes:
      - name: conf
        configMap:
          name: redis-cluster
          defaultMode: 0755
  volumeClaimTemplates:
  - metadata:
      name: data
    spec:
      accessModes: [ "ReadWriteOnce" ]
      storageClassName: "standard"
      resources:
        requests:
          storage: 50Mi
---
apiVersion: v1
kind: Service
metadata:
  name: redis-cluster
spec:
  clusterIP: None
  ports:
  - port: 6379
    targetPort: 6379
    name: client
  - port: 16379
    targetPort: 16379
    name: gossip
  selector:
    app: redis-cluster

Apply the StatefulSet.

kubectl apply -f statefulset.yaml --namespace statefulns
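
The update-node.sh script in the ConfigMap is there because a Pod can come back with a new IP address, while Redis keeps the old address in /data/nodes.conf on the persistent volume. The sketch below replays the same sed expression against a made-up nodes.conf fragment so you can see its effect without a cluster. The node IDs and IP addresses are hypothetical sample data, the wrapper function rewrite_own_ip is only for illustration, and sed reads from standard input here instead of editing the file in place.

```shell
#!/bin/sh
# Hypothetical sample of /data/nodes.conf: two nodes, the second one flagged "myself".
sample_nodes='07c37dfeb235213a872192d90877d0cd55635b91 10.244.1.7:6379@16379 master - 0 1700000000000 2 connected 8192-16383
e7d1eecce10fd6bb5eb35b9f99a514335d9ba9ca 10.244.2.5:6379@16379 myself,master - 0 0 1 connected 0-8191'

# In the real Pod this value is injected from status.podIP.
POD_IP="10.244.3.9"

# Same expression as update-node.sh: only the line containing "myself" is
# touched, and the first IPv4-looking string on that line becomes POD_IP.
rewrite_own_ip() {
  sed -e "/myself/ s/[0-9]\{1,3\}\.[0-9]\{1,3\}\.[0-9]\{1,3\}\.[0-9]\{1,3\}/${POD_IP}/"
}

printf '%s\n' "$sample_nodes" | rewrite_own_ip
```

In the output, the first line is unchanged and the "myself" line now starts its address with 10.244.3.9, which is what lets a restarted node rejoin the cluster under its new IP.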

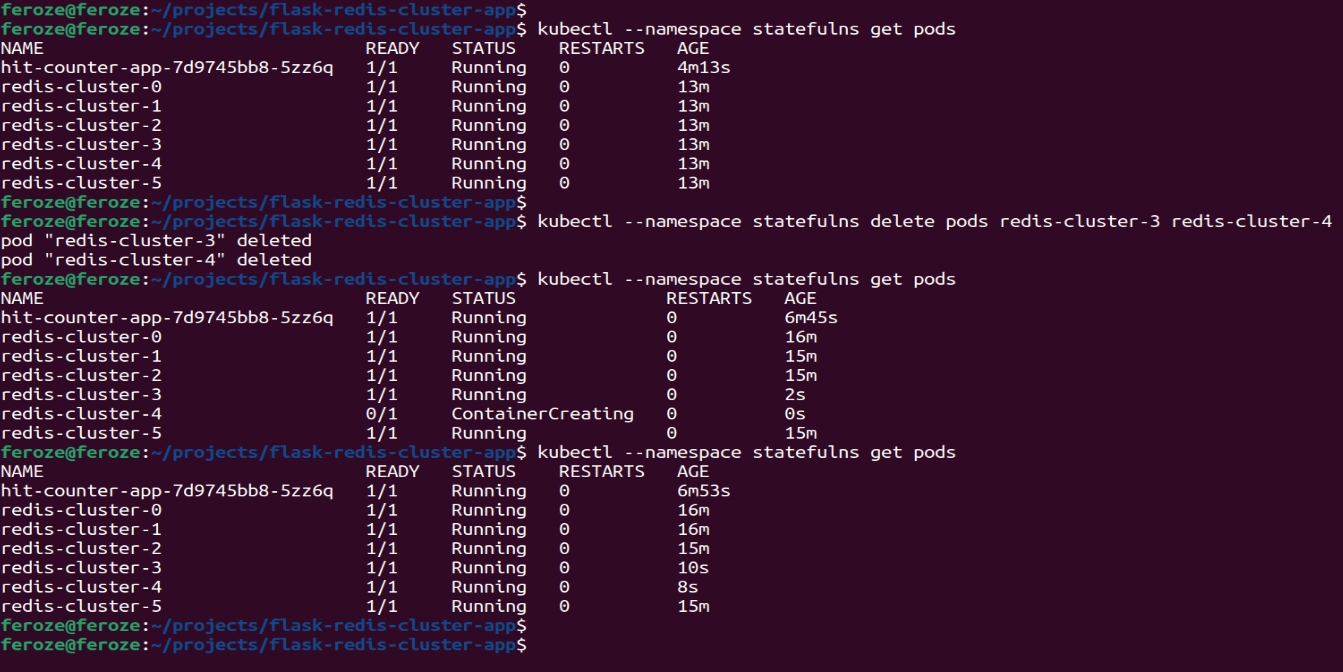

Step 4: Verify the StatefulSet

Check the status of the StatefulSet and its Pods.

kubectl --namespace statefulns get pods

You should see six Pods with stable identities (redis-cluster-0, redis-cluster-1, redis-cluster-2, redis-cluster-3, redis-cluster-4, redis-cluster-5). Wait for all six redis-cluster-x Pods to come up before proceeding further.
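
Rather than eyeballing the list, you can count the Pods that are actually ready. The sketch below parses text in the column layout of kubectl get pods --no-headers (NAME, READY, STATUS, RESTARTS, AGE). The helper name count_ready_pods and the sample listing are made up for illustration.

```shell
#!/bin/sh
# Hypothetical helper: count Pods whose READY column is n/n and whose STATUS is Running.
# Reads "kubectl get pods --no-headers" style text on standard input.
count_ready_pods() {
  awk '{ split($2, r, "/"); if (r[1] == r[2] && $3 == "Running") n++ } END { print n + 0 }'
}

# Made-up listing in which redis-cluster-5 is still starting.
sample='redis-cluster-0   1/1   Running             0   2m
redis-cluster-1   1/1   Running             0   2m
redis-cluster-2   1/1   Running             0   2m
redis-cluster-3   1/1   Running             0   1m
redis-cluster-4   1/1   Running             0   1m
redis-cluster-5   0/1   ContainerCreating   0   5s'

printf '%s\n' "$sample" | count_ready_pods   # prints 5
```

Against the real cluster, piping kubectl --namespace statefulns get pods -l app=redis-cluster --no-headers into count_ready_pods should print 6, matching replicas: 6 in the manifest, once everything is up.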

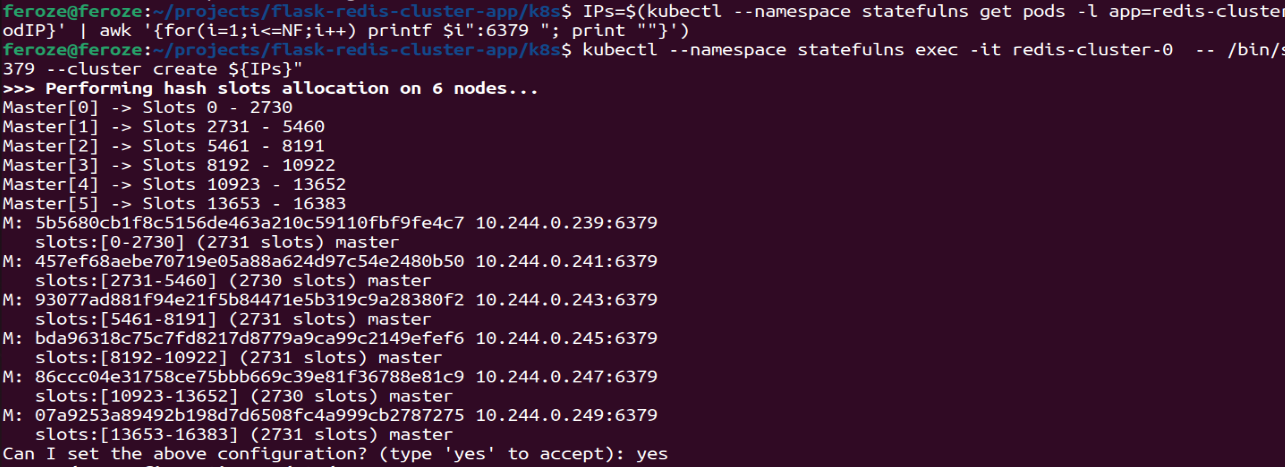

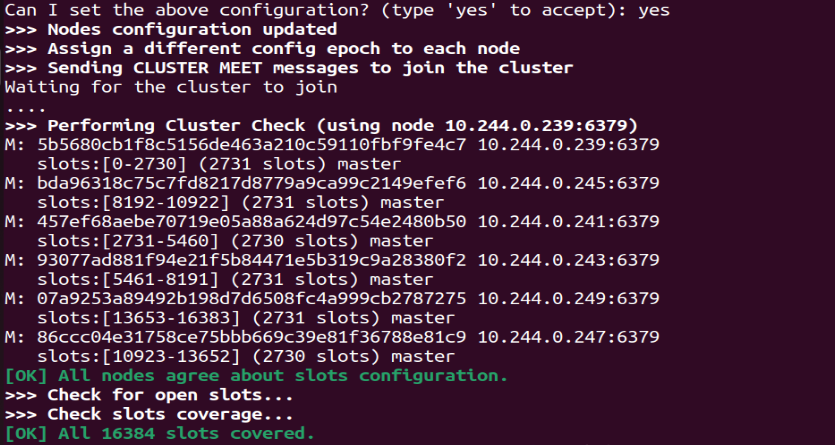

Step 5: Access the StatefulSet and Create the Redis Cluster

Since we created a headless service for our Redis Cluster, you can access the Redis Cluster Pods individually.

The first command creates a variable IPs with the list of the internal IP addresses of the six Pods running as part of the Redis Cluster, each suffixed with port 6379.

The second command uses kubectl exec to open a shell in one of the Redis Cluster Pods and run the cluster create command. This creates the Redis Cluster with the Redis Cluster Pods as its members.

IPs=$(kubectl --namespace statefulns get pods -l app=redis-cluster -o jsonpath='{.items[*].status.podIP}' | awk '{for(i=1;i<=NF;i++) printf $i":6379 "; print ""}')

kubectl --namespace statefulns exec -it redis-cluster-0 -- /bin/sh -c "redis-cli -h 127.0.0.1 -p 6379 --cluster create ${IPs}"
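
To see what ends up in IPs, the snippet below feeds the same awk program a space-separated list like the one the jsonpath expression returns. The three addresses are made-up sample values and the wrapper function to_host_port_list is only for illustration.

```shell
#!/bin/sh
# Same awk program as in the IPs command, wrapped in a function:
# it appends ":6379 " to every whitespace-separated field.
to_host_port_list() {
  awk '{for(i=1;i<=NF;i++) printf $i":6379 "; print ""}'
}

# Made-up Pod IPs in the space-separated form that jsonpath prints.
sample_ips="10.244.1.7 10.244.2.5 10.244.3.9"

printf '%s\n' "$sample_ips" | to_host_port_list
# prints: 10.244.1.7:6379 10.244.2.5:6379 10.244.3.9:6379 (followed by a trailing space)
```

Because the create command is called without --cluster-replicas, redis-cli treats every listed node as a master and asks for confirmation before applying the layout, which is why the exec call needs -it.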

Step 6: Check the status of the Redis Cluster

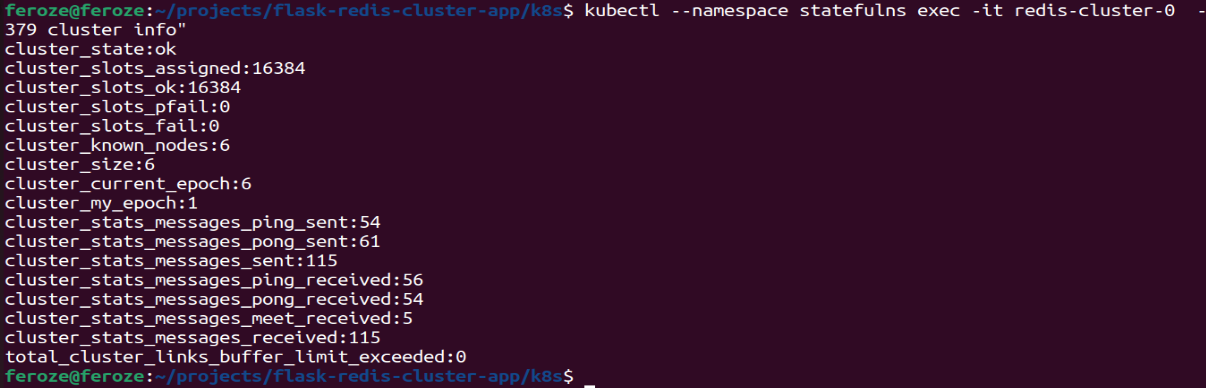

kubectl --namespace statefulns exec -it redis-cluster-0 -- /bin/sh -c "redis-cli -h 127.0.0.1 -p 6379 cluster info"
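
If you want to check the result in a script, the line to look for in the cluster info output is cluster_state:ok. Here is a small sketch that tests for it. The helper name cluster_is_ok is hypothetical and the sample text is an abbreviated, made-up excerpt of what cluster info prints.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if "cluster info" text on standard input
# contains the exact line cluster_state:ok.
cluster_is_ok() {
  # The output can contain carriage returns, so strip them before matching.
  tr -d '\r' | grep -qx 'cluster_state:ok'
}

# Abbreviated, made-up sample of "redis-cli cluster info" output.
sample_info='cluster_state:ok
cluster_slots_assigned:16384
cluster_known_nodes:6
cluster_size:6'

if printf '%s\n' "$sample_info" | cluster_is_ok; then
  echo "cluster is healthy"
else
  echo "cluster is NOT healthy"
fi
```

With a live cluster you could pipe the output of the cluster info command (run without -it) into cluster_is_ok instead of the sample text.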

Step 7: Create an Example App Deployment

Now, we will deploy our Example App with a Kubernetes Deployment.

apiVersion: v1
kind: Service
metadata:
  name: hit-counter-lb
spec:
  type: LoadBalancer
  ports:
  - port: 80
    protocol: TCP
    targetPort: 5000
  selector:
    app: myapp
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hit-counter-app
spec:
  replicas: 1
  selector:
    matchLabels:
      app: myapp
  template:
    metadata:
      labels:
        app: myapp
    spec:
      containers:
      - name: myapp
        image: mfk267/flask-redis-cluster-app:7.0
        ports:
        - containerPort: 5000

Apply the Deployment.

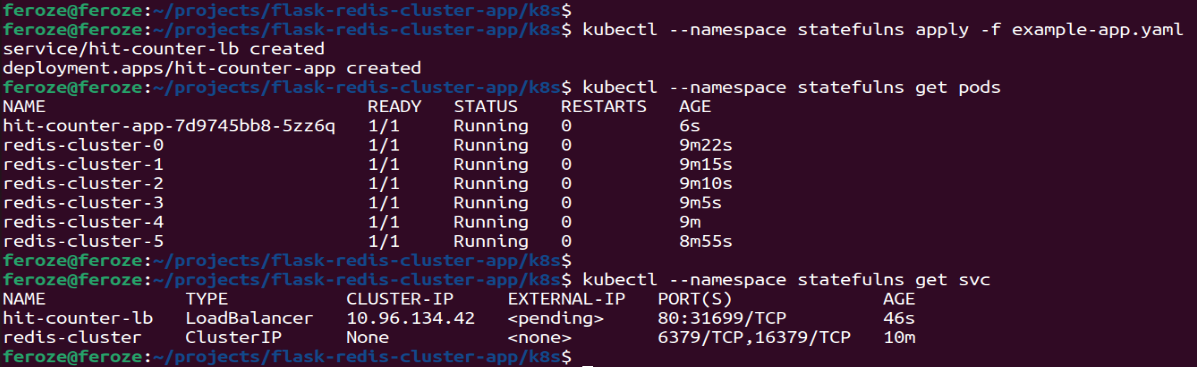

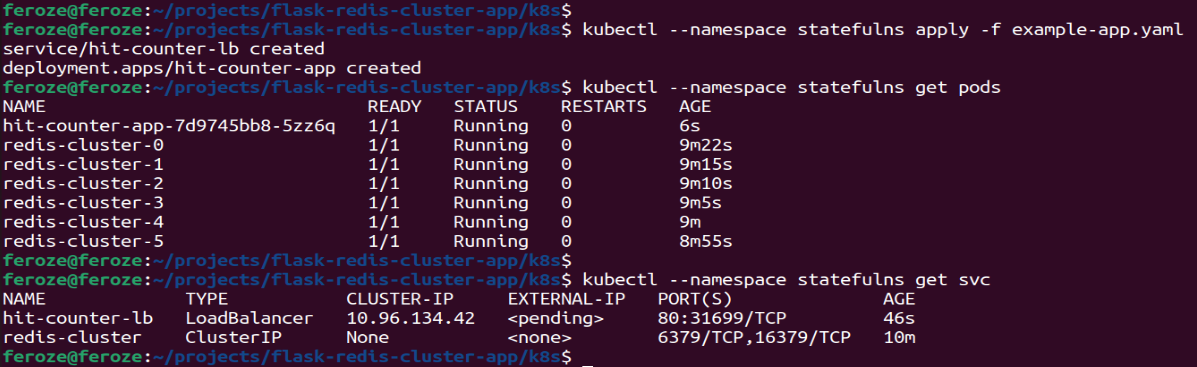

kubectl apply -f example-app.yaml --namespace statefulns

Step 8: Verify the Example App Deployment

Check the status of the Deployment and its Pods and Services.

kubectl --namespace statefulns get pods

kubectl --namespace statefulns get svc

Step 9: Testing the Example App and Data persistence

Since KinD does not provide a load balancer out of the box, the EXTERNAL-IP shows as pending. To access the example app we must use port forwarding, as below:

kubectl port-forward --address 0.0.0.0 svc/hit-counter-lb 8080:80 --namespace statefulns

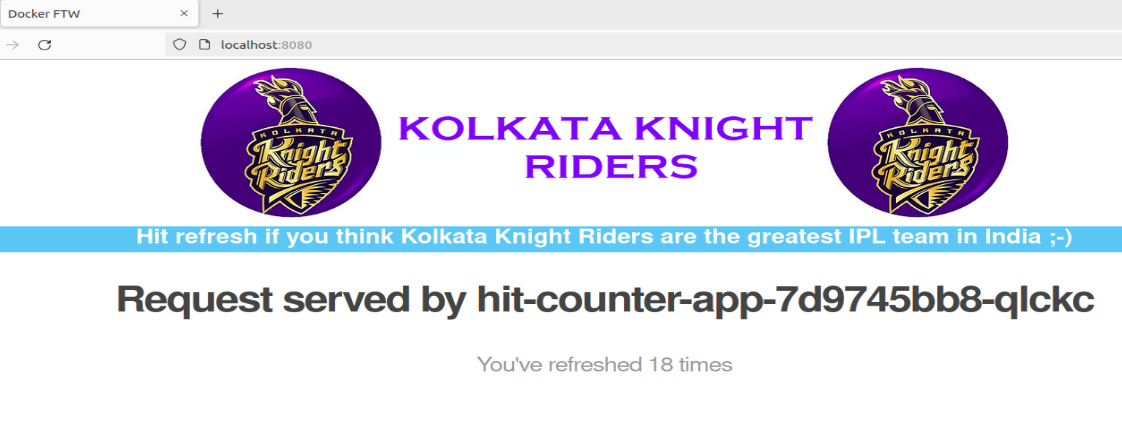

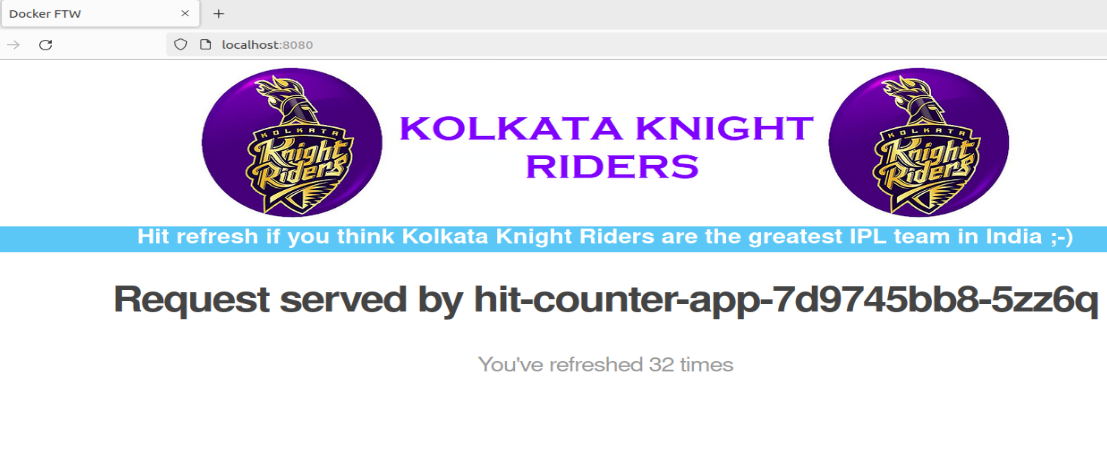

To access the example application, browse to localhost:8080.

When you open localhost:8080 you should see the app's hit counter page. Refresh the web page a few times and keep a note of the number of times you have refreshed. In my case I refreshed the web page 18 times.

Let us open another terminal and delete two of the Redis Pods from the Redis Cluster (redis-cluster-3 and redis-cluster-4), for example with kubectl delete pod.

Going back to the browser, we access the example application at localhost:8080 again.

We continue to get the correct number of web page refreshes. My refresh counter continued to increase beyond 18; this time I refreshed until it reached 32.

Kubernetes manages the desired number of replicas for your workloads. It creates new Pods whenever Pods fail, so that the current state always converges to the desired state. We deleted two Redis Pods, but we continue to have six Redis Pods running, because the StatefulSet controller recreated redis-cluster-3 and redis-cluster-4 under the same names. That is the Kubernetes magic that is always watching out for the Pods and starts new ones whenever Pods fail. The updated data comes from the StatefulSet running the Redis Cluster and the PersistentVolumes where the state of the Redis application is retained. This is how we always get the updated data even when instances of the Redis Cluster have failed.
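
The data survives because each Pod is tied to its own PersistentVolumeClaim by name: Kubernetes names the claim after the volumeClaimTemplate and the Pod, so a recreated Pod with the same name picks up the same claim. A small sketch of that naming rule, using the template name data from the StatefulSet manifest (the helper name pvc_name_for_pod is hypothetical):

```shell
#!/bin/sh
# Hypothetical helper: name of the PVC bound to a StatefulSet Pod.
# The pattern is <volumeClaimTemplate name>-<statefulset name>-<ordinal>.
pvc_name_for_pod() {
  printf '%s-%s-%d\n' "$1" "$2" "$3"
}

# The two Pods deleted in this step and the claims they reattach to.
for ordinal in 3 4; do
  echo "redis-cluster-$ordinal -> $(pvc_name_for_pod data redis-cluster "$ordinal")"
done
```

Running kubectl --namespace statefulns get pvc before and after deleting the Pods should list the same claims, which is a quick way to confirm that no new volumes were created.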

Step 10: Cleanup

To clean up the resources, delete the namespace. This removes the StatefulSet, the example app and the headless service along with it.

kubectl delete namespace statefulns

Conclusion

StatefulSets in Kubernetes provide a robust way to manage stateful applications by ensuring that each Pod has a unique identity and stable, persistent storage. This step-by-step demo illustrated how to create and manage StatefulSets, covering essential aspects like headless services, deployment, persistent storage, and scaling. With this knowledge, you can confidently deploy stateful applications in your Kubernetes clusters.

That's all folks. That is all about StatefulSets in Kubernetes. Kindly share with the community. Until I see you next time. Cheers !
