Apache Airflow - Installation Guide

samyak jain

These steps are for Linux (Ubuntu) distributions:

Step 1: Install Python and Pip

Ensure that Python and Pip are installed on your system.

sudo apt-get update

sudo apt-get install python3 python3-pip

Step 2: Set Up the Airflow Home Directory

Set the AIRFLOW_HOME environment variable to the directory where Airflow will store its configuration files and data.

You may replace ~/airflow with the desired location for your Airflow home directory.

export AIRFLOW_HOME=~/airflow
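The export above only lasts for the current shell session. Here is a minimal sketch for making it persistent, assuming you use Bash and that ~/.bashrc is your startup file. persist_airflow_home is a hypothetical helper name, not part of Airflow:

```shell
# Hypothetical helper (not part of Airflow): append the export line to a
# shell startup file, but only if that exact line is not already present.
persist_airflow_home() {
  rc_file="${1:-$HOME/.bashrc}"            # assumption: Bash startup file
  line='export AIRFLOW_HOME=~/airflow'     # same value as in Step 2
  grep -qxF "$line" "$rc_file" 2>/dev/null || printf '%s\n' "$line" >> "$rc_file"
}
```

Call persist_airflow_home once, then open a new terminal (or run source ~/.bashrc) for the variable to take effect.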

Step 3: Install Apache Airflow

With Python and Pip installed, you can now proceed to install Apache Airflow using the following command:

pip3 install apache-airflow
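A plain pip3 install pulls the newest version of every dependency, which can occasionally break the installation. The Airflow project publishes constraint files for reproducible installs, and the sketch below builds the constraint URL for your Python version. The version 2.9.3 is only an assumed example, and the install line is left commented out so you can review the URL first:

```shell
# Assumed example version; replace with the Airflow release you want.
AIRFLOW_VERSION="2.9.3"

# Detect the local Python major.minor version, e.g. 3.10.
PYTHON_VERSION="$(python3 -c 'import sys; print("%d.%d" % sys.version_info[:2])')"

# Constraint files are published per Airflow version and Python version.
CONSTRAINT_URL="https://raw.githubusercontent.com/apache/airflow/constraints-${AIRFLOW_VERSION}/constraints-${PYTHON_VERSION}.txt"
echo "Constraint file: ${CONSTRAINT_URL}"

# Uncomment to install with pinned dependencies:
# pip3 install "apache-airflow==${AIRFLOW_VERSION}" --constraint "${CONSTRAINT_URL}"
```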

Step 4: Initialize the Backend

You need to initialize the backend database before starting Airflow. Run the command below. On Airflow 2.7 and later, airflow db init is deprecated in favor of airflow db migrate.

airflow db init
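To confirm that the initialization worked, you can check that Airflow created its files under AIRFLOW_HOME. This is a small sketch, assuming the default SQLite backend (which stores its database as airflow.db). check_airflow_home is a hypothetical helper name:

```shell
# Hypothetical helper: report whether the files Airflow normally creates
# exist in the given home directory (defaults to $AIRFLOW_HOME or ~/airflow).
check_airflow_home() {
  home_dir="${1:-${AIRFLOW_HOME:-$HOME/airflow}}"
  status=0
  for f in airflow.cfg airflow.db; do      # airflow.db assumes the default SQLite backend
    if [ -f "$home_dir/$f" ]; then
      echo "found:   $home_dir/$f"
    else
      echo "missing: $home_dir/$f"
      status=1
    fi
  done
  return "$status"
}
```

Running check_airflow_home with no arguments inspects the directory set in Step 2. A non-zero exit status means at least one file is missing.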

Step 5: Start the Web Server/UI

Once the backend is initialized, you can start the Airflow web server:

airflow webserver -p 8080

Step 6: Run the Airflow Scheduler

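To enable task scheduling and execution, you also need to start the Airflow scheduler. The web server keeps running in the foreground, so open a second terminal (with AIRFLOW_HOME set the same way) and run the following command: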

airflow scheduler
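Both commands run in the foreground, so they normally need two terminals. As an alternative sketch, assuming Airflow 2.x (where both commands accept the -D daemon flag), a hypothetical start_airflow helper can launch them in the background from one terminal:

```shell
# Hypothetical helper: start the web server and scheduler as background daemons.
start_airflow() {
  # Fail early if the airflow CLI is not on PATH (pip3 may install it to ~/.local/bin).
  if ! command -v airflow >/dev/null 2>&1; then
    echo "airflow not found on PATH" >&2
    return 1
  fi
  airflow webserver -p 8080 -D   # web UI on port 8080, as in Step 5
  airflow scheduler -D           # scheduler, as in Step 6
}
```

Call start_airflow after the database has been initialized in Step 4.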

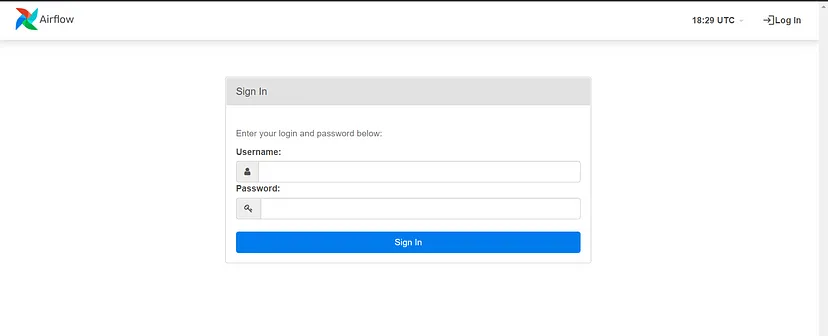

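The first time you open the UI, Airflow asks for a username and password, so you need to create a user first.

If Airflow is running inside a Docker container, open a shell in that container first (skip this for the local installation described above):

docker exec -it {containername} /bin/bash

Then create an admin user: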

airflow users create --role Admin --username admin --email admin --firstname admin --lastname admin --password admin

To verify that Airflow is installed successfully, open http://localhost:8080 in your browser.
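The same check can be done from the command line. This is a minimal sketch, assuming curl is installed and that the web server exposes a /health endpoint (as Airflow 2.x does). airflow_ui_is_up is a hypothetical helper name:

```shell
# Hypothetical helper: succeed only if the web server answers the health check.
airflow_ui_is_up() {
  url="${1:-http://localhost:8080/health}"
  # --fail makes curl return non-zero on HTTP errors; --max-time bounds the wait.
  curl --silent --fail --max-time 5 "$url" >/dev/null
}

if airflow_ui_is_up; then
  echo "Airflow web server is responding on port 8080"
else
  echo "Airflow web server is not reachable on port 8080"
fi
```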

Log in with the credentials created in the previous step (username: admin, password: admin):

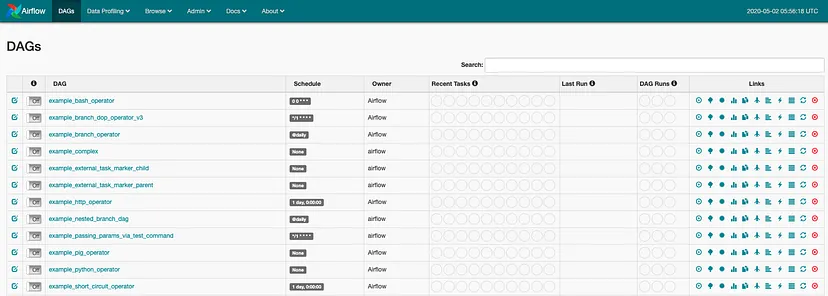

Once you are logged in, you will see the Airflow UI.

Next Steps:

Apache Airflow - Operators

