Kubernetes Pods: The Building Blocks of Container Orchestration on AWS Minikube with Nginx🔷

Sprasad Pujari

Kubernetes has revolutionized the way we deploy and manage containerized applications. At the heart of this orchestration system lies the concept of Pods, which are the smallest deployable units in Kubernetes. In this blog post, we'll delve into the world of Kubernetes Pods, exploring their functionality, real-life examples, and the ease they bring to container management.

🐳 From Docker Containers to Kubernetes Pods

In the Docker world, we typically deploy and run containers with docker run and flags such as -d, -p, -v, and --network. In Kubernetes, however, we deploy Pods instead of individual containers. A Pod is a group of one or more containers that share the same network namespace, storage volumes, and other resources.

📝 Defining Pods with YAML

Instead of running commands like we do in Docker, Kubernetes uses declarative YAML files to define and manage Pods. Here's an example of a simple Pod YAML file:

    apiVersion: v1
    kind: Pod
    metadata:
      name: my-pod
    spec:
      containers:
        - name: my-container
          image: nginx:latest
          ports:
            - containerPort: 80

This YAML file defines a Pod named my-pod with a single container running the nginx image and exposing port 80.
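One way to try this manifest is to save it to a file and ask kubectl for a client-side dry run before creating anything. The sketch below assumes the file name pod.yaml (the name used with kubectl create later in this post) and a kubectl that can reach a cluster. The dry run is skipped when kubectl is not on the PATH.

```shell
# Write the Pod manifest shown above to pod.yaml (the file name is an assumption).
cat > pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: my-pod
spec:
  containers:
    - name: my-container
      image: nginx:latest
      ports:
        - containerPort: 80
EOF

# Client-side dry run: reports what would be created without creating it.
if command -v kubectl >/dev/null 2>&1; then
  kubectl apply --dry-run=client -f pod.yaml
else
  echo "kubectl not found; skipping the dry run"
fi
```

Note that the indentation in the manifest is significant: YAML uses it to express nesting, so name belongs to metadata and containers belongs to spec.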

🌐 Real-life Example: Deploying a Web Application

Let's consider a real-life example of deploying a web application using Kubernetes Pods. Imagine you have a web application consisting of two components: a front-end React application and a back-end Node.js server.

You can define a Pod YAML file that includes two containers: one for the React application and one for the Node.js server. Because both containers run in the same Pod, they share a network namespace and can reach each other over localhost. Keep in mind that containers in a Pod are always scheduled and scaled together, so this layout suits tightly coupled components.

    apiVersion: v1
    kind: Pod
    metadata:
      name: my-web-app
    spec:
      containers:
        - name: frontend
          image: my-frontend:latest
          ports:
            - containerPort: 3000
        - name: backend
          image: my-backend:latest
          ports:
            - containerPort: 8080

In this example, the my-web-app Pod includes two containers: frontend (running the React application) and backend (running the Node.js server). Both containers can communicate with each other over the shared network namespace, while still being isolated from other Pods in the cluster.
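The shared network namespace can be observed directly: a process in one container reaches the other container on localhost. The following is a minimal sketch, assuming the my-web-app Pod is already running and that the hypothetical frontend image ships curl (many slim images do not).

```shell
# Call the backend (port 8080) from inside the frontend container.
# Assumption: curl is available in the frontend image.
check_backend_from_frontend() {
  kubectl exec my-web-app -c frontend -- curl -s http://localhost:8080/
}

if command -v kubectl >/dev/null 2>&1; then
  check_backend_from_frontend
else
  echo "kubectl not found; skipping the check"
fi
```

The -c flag selects which container in the Pod runs the command. No Service or Pod IP is involved, because both containers share one network stack.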

🚀 Benefits of Using Kubernetes Pods

Using Kubernetes Pods offers several benefits:

🔄 Simplified container management: Pods encapsulate one or more containers, making it easier to manage and scale your applications.

🌐 Shared resources: Containers within a Pod share the same network namespace, storage, and other resources, enabling seamless communication and resource sharing.

📝 Declarative configuration: Pods are defined using YAML files, allowing for consistent and repeatable deployments.

🔁 Scaling and replication: Kubernetes provides built-in controllers, such as Deployments and ReplicaSets, for scaling and replicating Pods based on your application's needs.

🛡️ Isolation and security: Pods provide isolation between different applications or components, enhancing security and resource management.
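To make the scaling and replication point concrete, here is a sketch of a Deployment that keeps three replicas of an Nginx Pod running. The names nginx-deployment and the label app: nginx are illustrative choices, not something defined elsewhere in this post.

```shell
# Write a Deployment manifest that manages three identical Nginx Pods.
cat > nginx-deployment.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:latest
          ports:
            - containerPort: 80
EOF

# Create the Deployment, then change the replica count from 3 to 5.
if command -v kubectl >/dev/null 2>&1; then
  kubectl apply -f nginx-deployment.yaml
  kubectl scale deployment nginx-deployment --replicas=5
else
  echo "kubectl not found; manifest written to nginx-deployment.yaml"
fi
```

The Pod template under spec.template has the same shape as a standalone Pod spec. The Deployment adds the replica count and the label selector that ties the Pods to it.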

🤖 Managing Pods with kubectl

Kubernetes provides a command-line interface called kubectl for managing various resources, including Pods. Here are some common kubectl commands for working with Pods:

- kubectl create -f pod.yaml: Create a Pod from a YAML file.

- kubectl get pods: List the Pods in the current namespace.

- kubectl describe pod my-pod: Show the details of a specific Pod.

- kubectl logs my-pod: View the logs of a Pod.

- kubectl delete pod my-pod: Delete a Pod.
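These commands are often combined when troubleshooting. The helpers below are a small sketch of that workflow. The function names pod_phase and inspect_pod are made up for illustration, while the kubectl flags used (-o jsonpath, -o wide, --tail) are standard.

```shell
# Print only the phase of a Pod (for example Pending or Running).
pod_phase() {
  kubectl get pod "$1" -o jsonpath='{.status.phase}'
}

# Show placement, events, and the last 20 log lines for a Pod.
inspect_pod() {
  kubectl get pod "$1" -o wide
  kubectl describe pod "$1"
  kubectl logs "$1" --tail=20
}

if command -v kubectl >/dev/null 2>&1; then
  echo "my-pod phase: $(pod_phase my-pod)"
  inspect_pod my-pod
else
  echo "kubectl not found; skipping the inspection"
fi
```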

🚀 Embrace the Power of Kubernetes Pods

Kubernetes Pods are the building blocks of container orchestration, simplifying the deployment and management of containerized applications. By encapsulating containers within Pods and leveraging the declarative YAML configuration, you can streamline your development and deployment processes, enabling seamless scaling, resource sharing, and isolation. Embrace the power of Kubernetes Pods and unlock the full potential of container orchestration in your projects! 🎉

Installing Minikube on AWS and Deploying Nginx on a Pod

Minikube is a lightweight, single-node Kubernetes cluster that runs locally on your machine. It's an excellent tool for learning and developing Kubernetes applications without the need for a full-fledged Kubernetes cluster. In this blog post, we'll walk through the steps to install Minikube on an AWS EC2 instance and deploy the popular Nginx web server on a Kubernetes Pod.

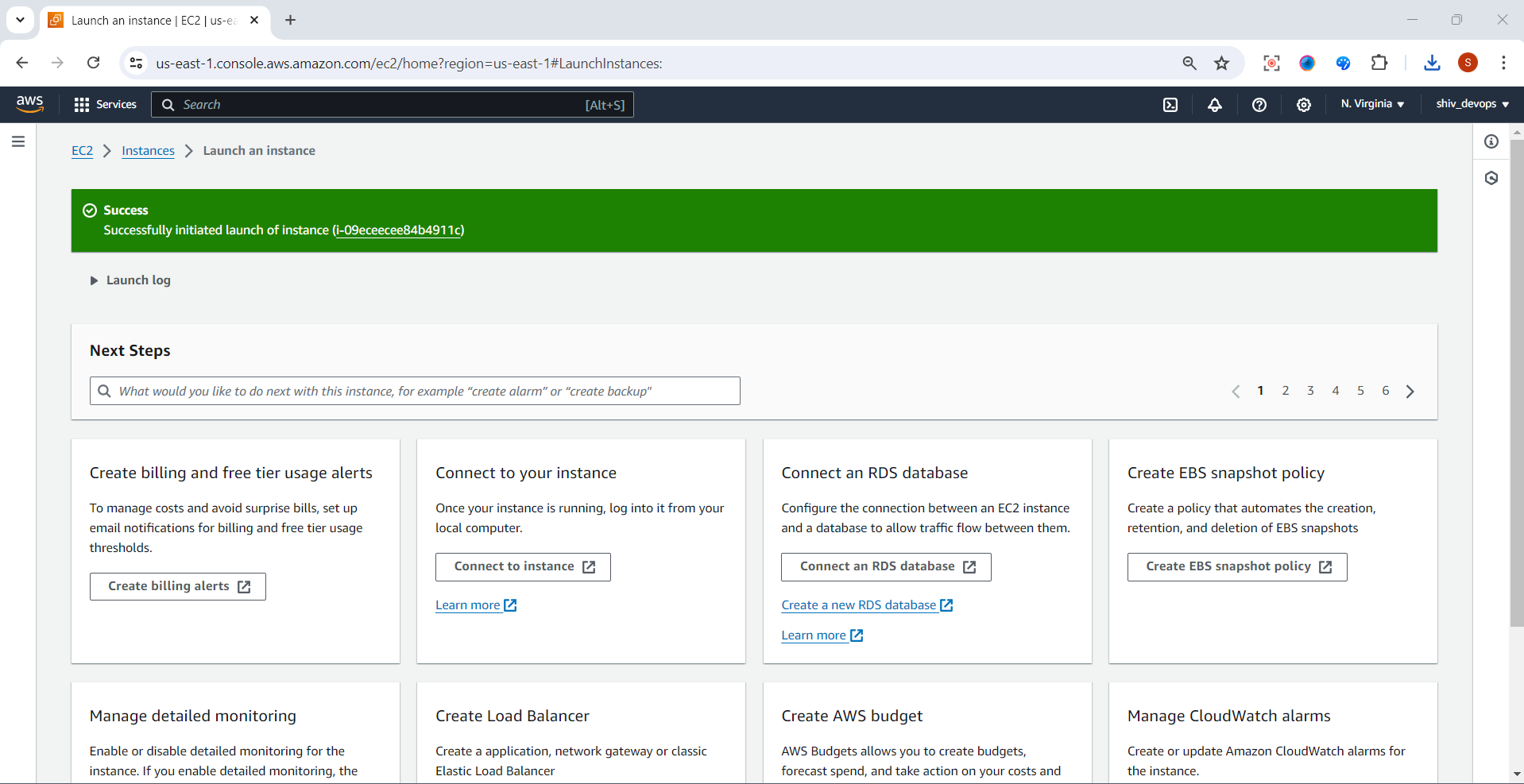

Step 1: Create an EC2 Instance on AWS for Installing Minikube

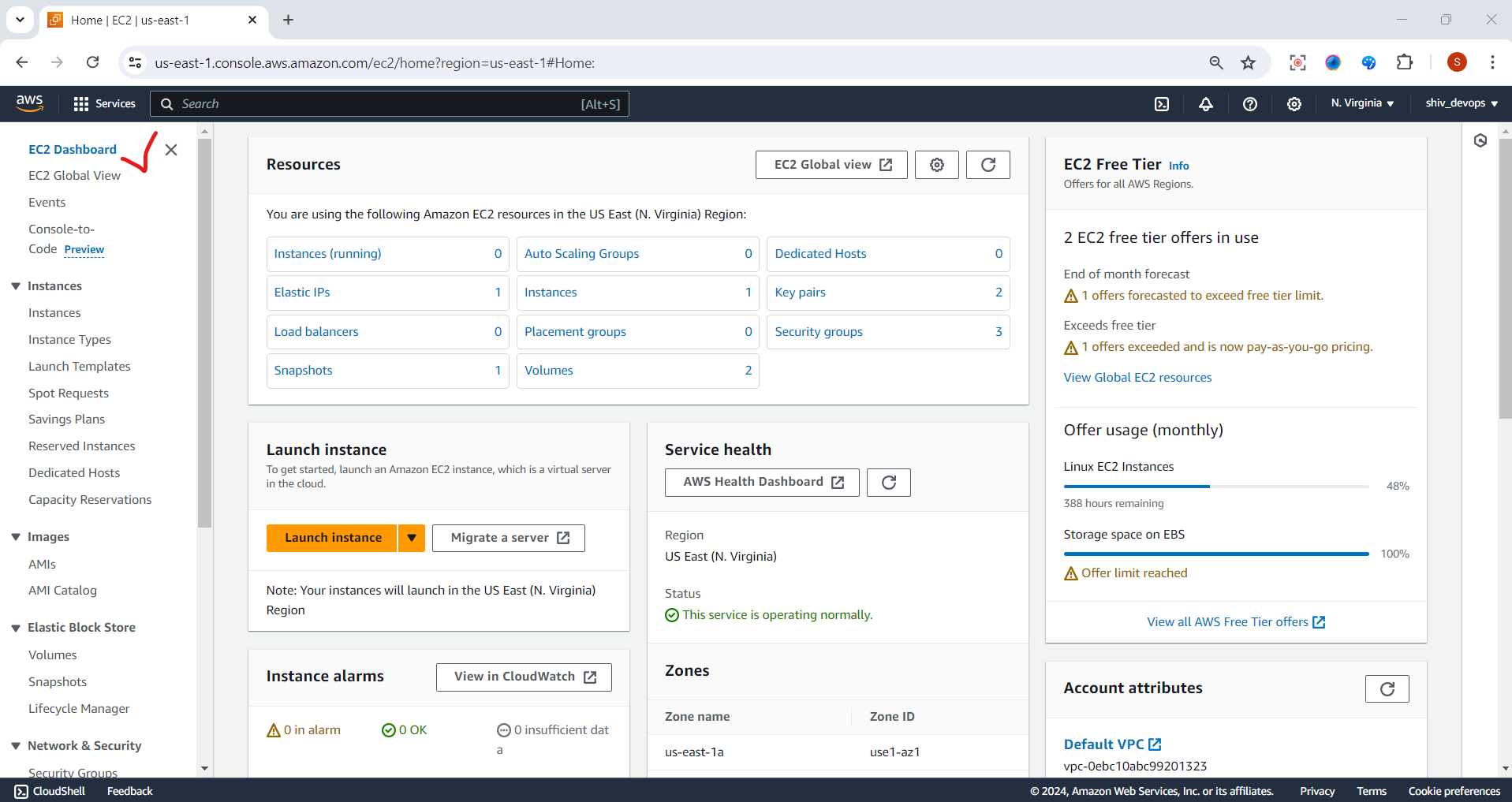

Log in to the AWS Management Console

Open your web browser and navigate to the AWS Management Console (https://console.aws.amazon.com/).

Enter your AWS account credentials to log in.

Navigate to the EC2 service

From the AWS Management Console, locate and click on the "EC2" service under the "Compute" section.

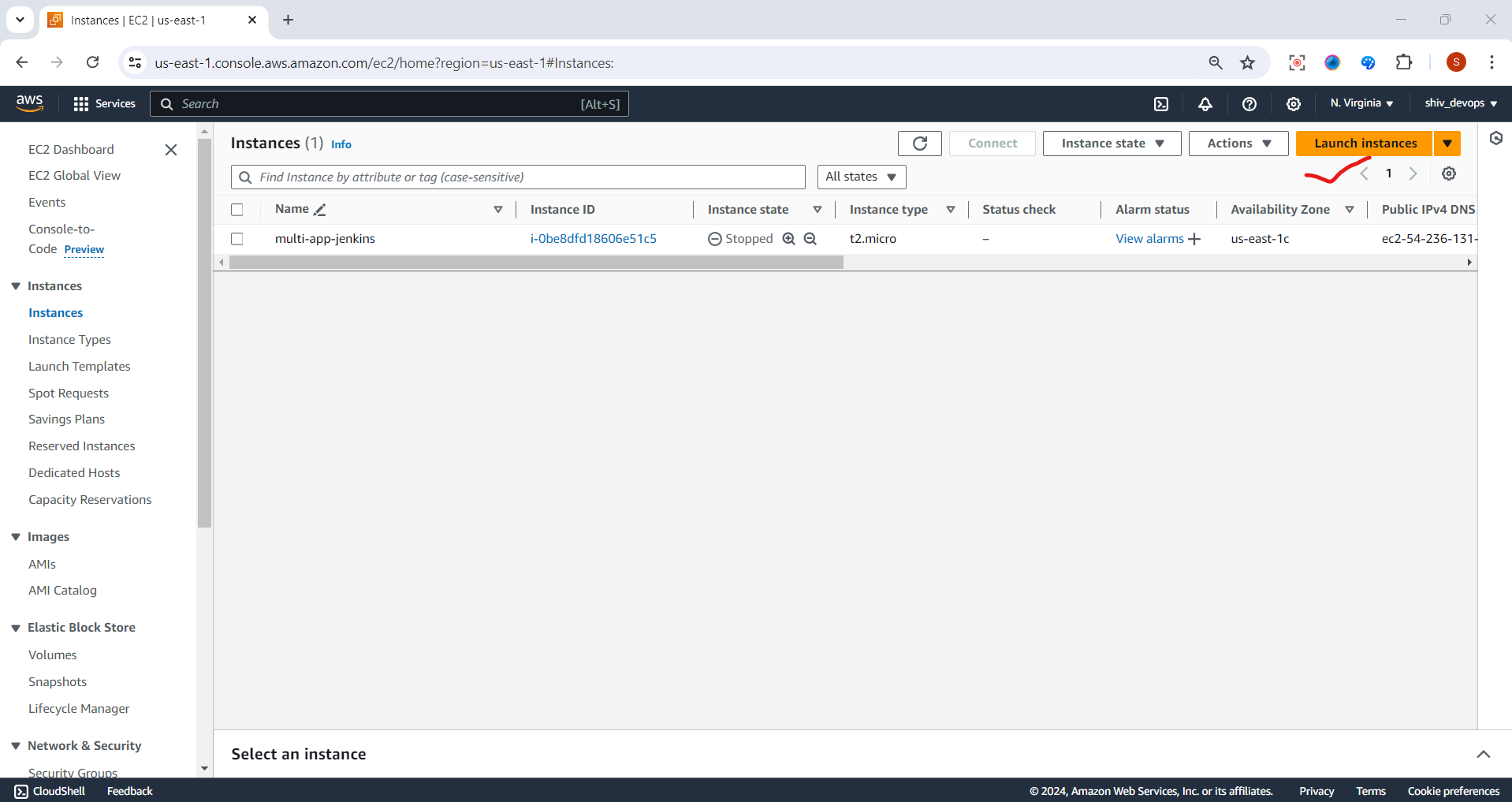

Launch a new EC2 instance

In the EC2 dashboard, click on the "Launch Instance" button.

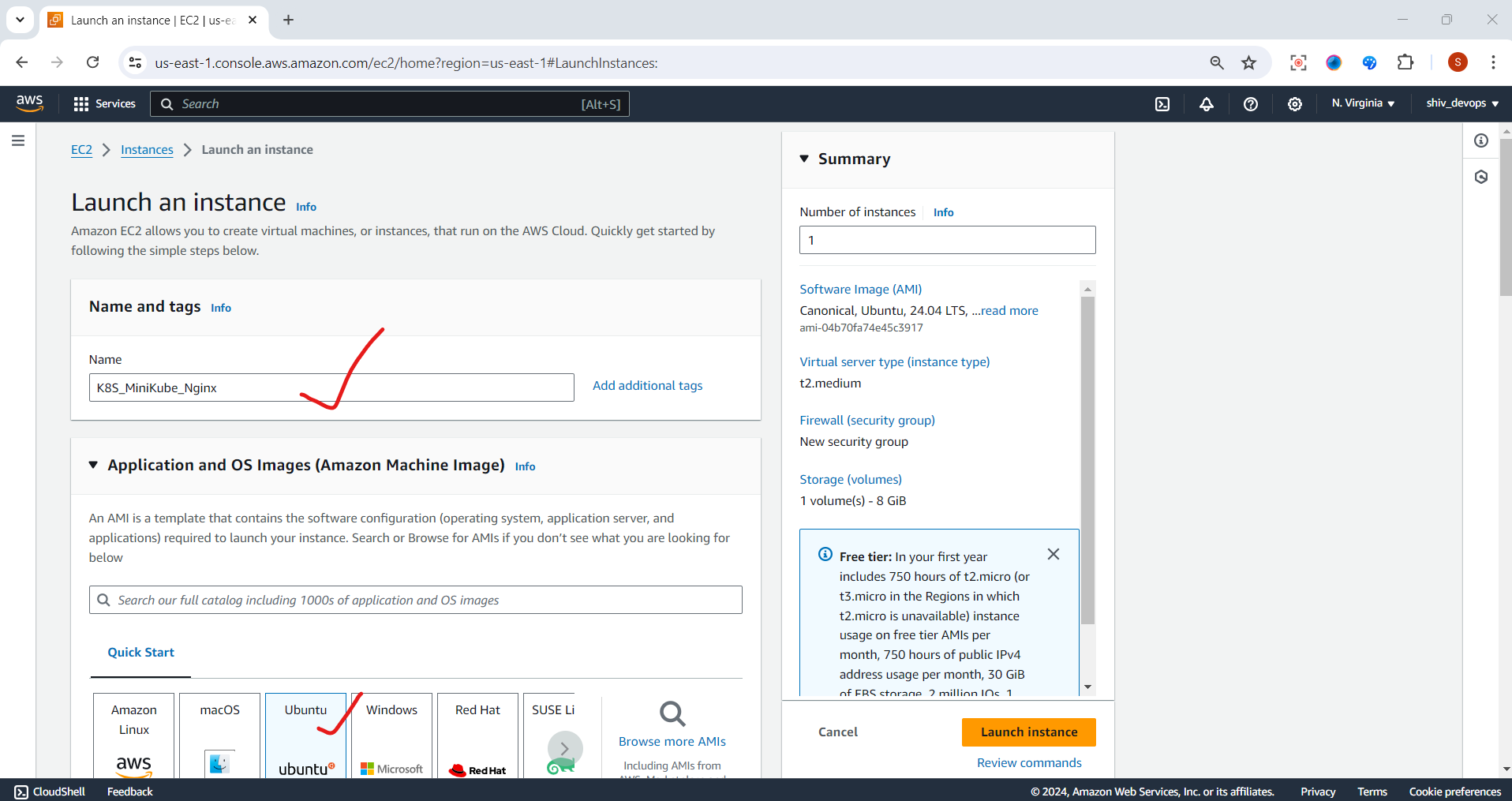

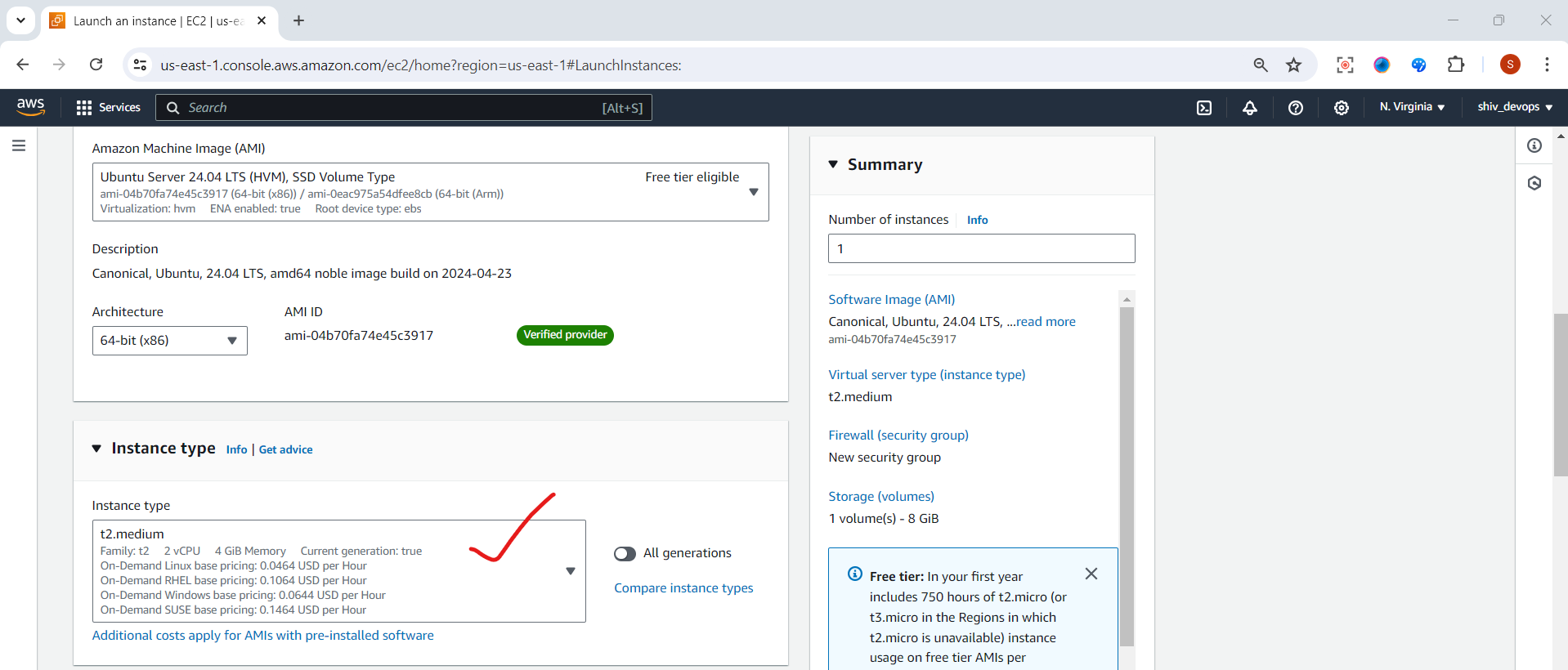

Enter an instance name and choose an Ubuntu Server AMI.

Select the t2.medium instance type, which provides 2 vCPUs and 4 GiB of RAM.

Follow the wizard steps to configure the instance details, storage, security group, and key pair.

Review your configuration and launch the instance. The console shows a confirmation once the instance has launched successfully.

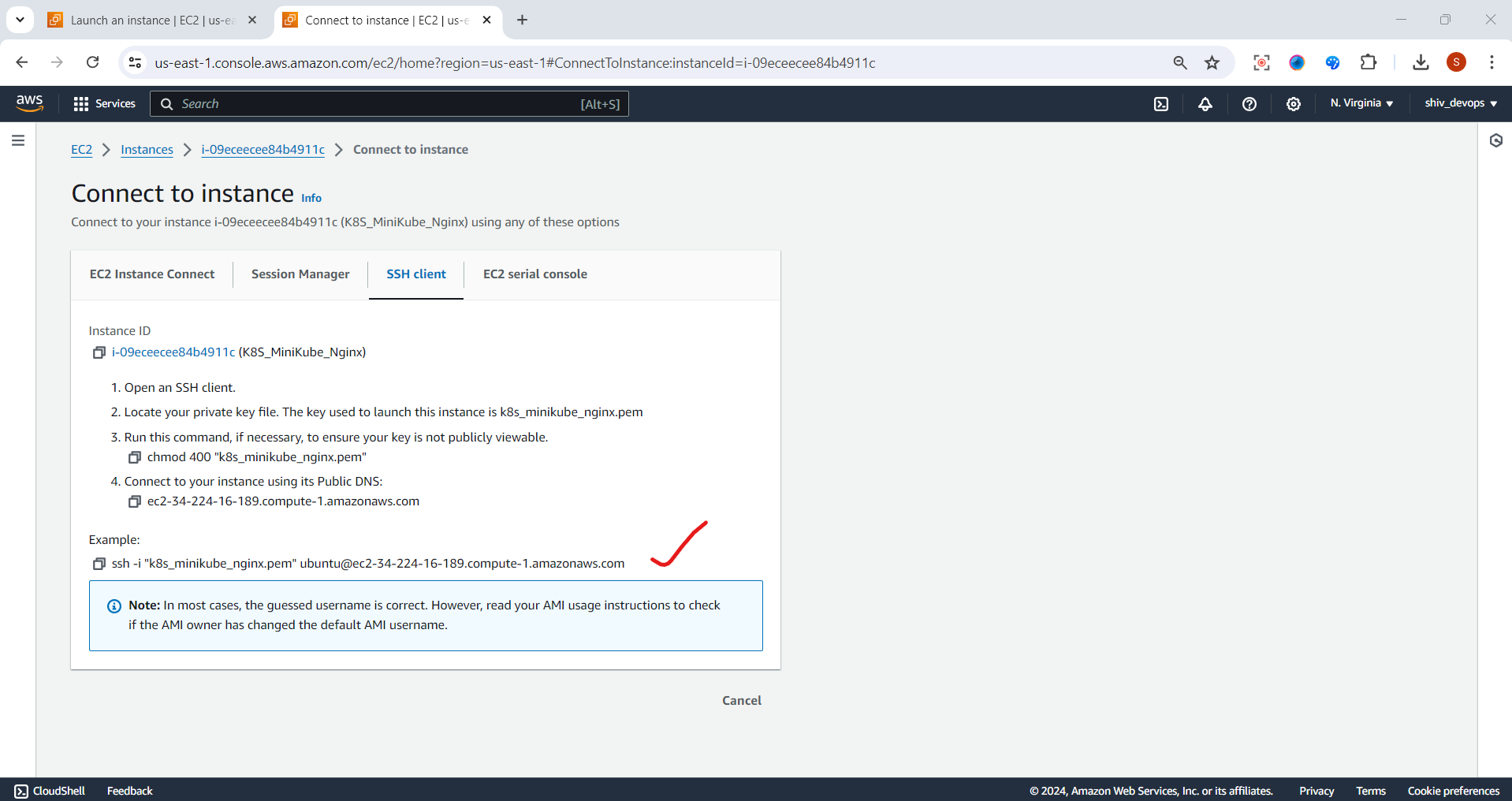

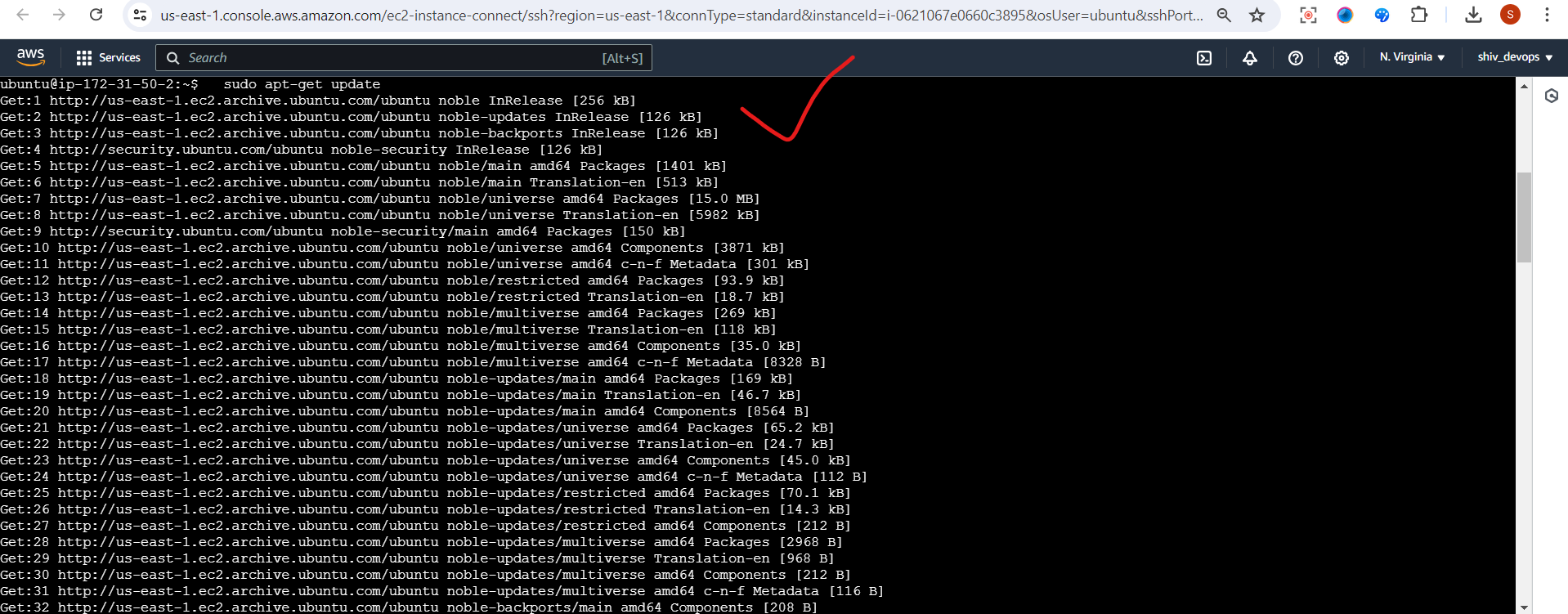

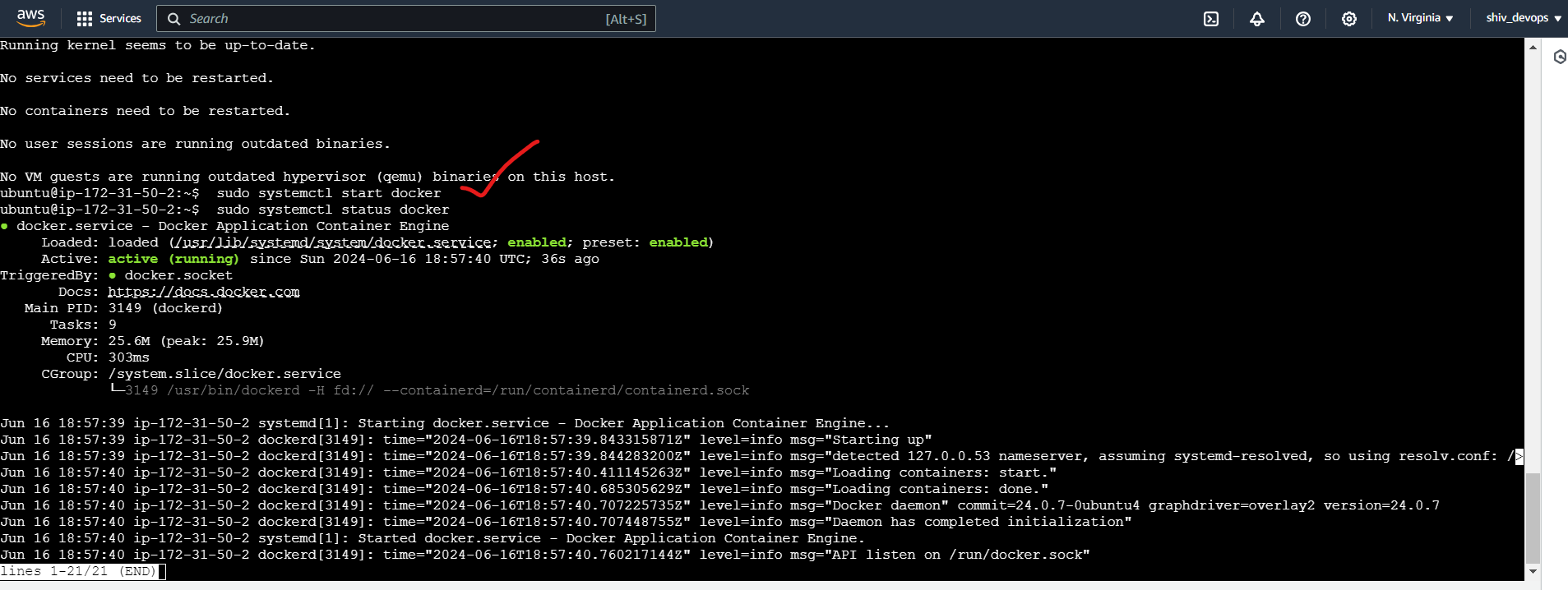

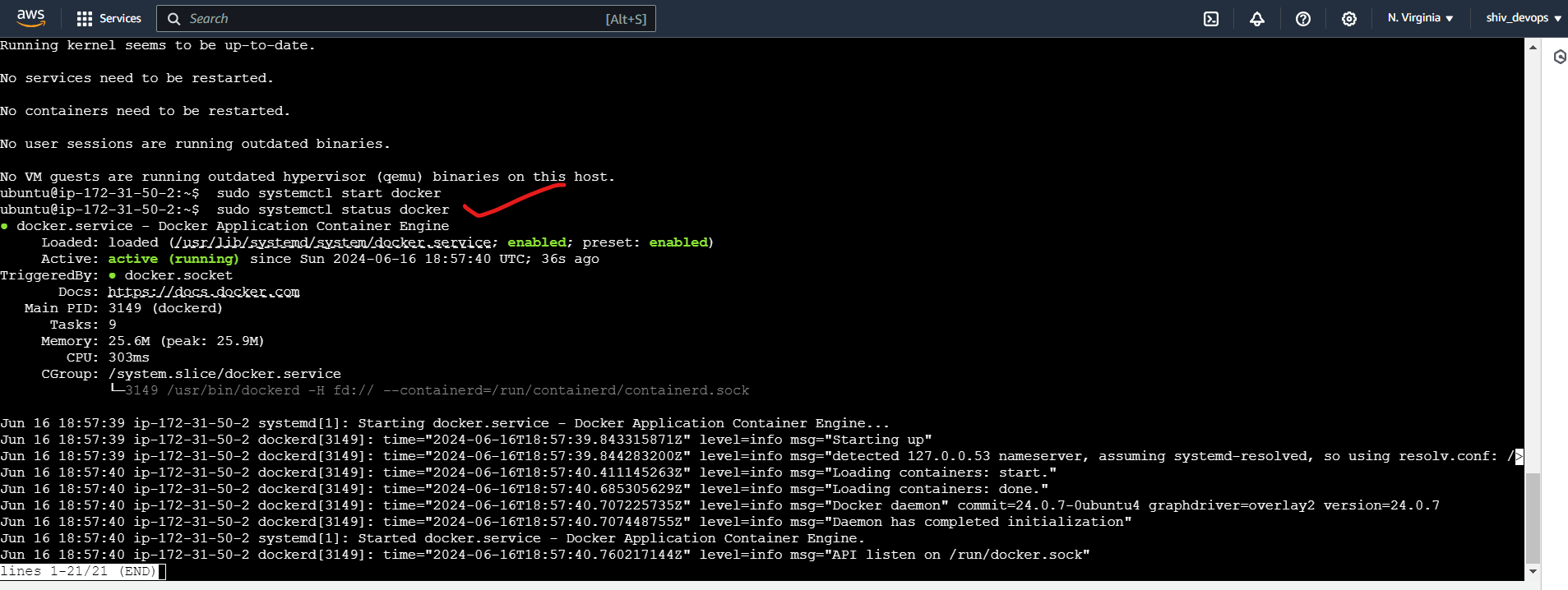
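The same launch can be scripted with the AWS CLI instead of the console. This is only a sketch: the AMI ID, key pair name, security group ID, and the minikube-host tag are placeholders that you would replace with values from your own account and region, and it assumes the AWS CLI is installed and configured.

```shell
# Placeholders (assumptions): substitute real values for your account and region.
AMI_ID="${AMI_ID:-ami-xxxxxxxxxxxxxxxxx}"   # an Ubuntu Server AMI ID
KEY_NAME="${KEY_NAME:-my-key-pair}"         # an existing EC2 key pair
SG_ID="${SG_ID:-sg-xxxxxxxxxxxxxxxxx}"      # a security group that allows SSH

# Launch one t2.medium instance, matching the console steps above.
launch_minikube_host() {
  aws ec2 run-instances \
    --image-id "$AMI_ID" \
    --instance-type t2.medium \
    --key-name "$KEY_NAME" \
    --security-group-ids "$SG_ID" \
    --count 1 \
    --tag-specifications 'ResourceType=instance,Tags=[{Key=Name,Value=minikube-host}]'
}

# Nothing is launched until the function is called explicitly:
#   launch_minikube_host
```

The function is deliberately not called automatically, since launching an instance creates a billable resource.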

Step 2: Update and Install Required Packages

Connect to your EC2 instance using SSH.
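For an Ubuntu AMI the default login user is ubuntu. A minimal sketch of the connection, assuming a key file named my-key-pair.pem and using a documentation-only IP address in place of your instance's public IP:

```shell
# Assumptions: key file name and host address are placeholders.
KEY_FILE="${KEY_FILE:-my-key-pair.pem}"
EC2_HOST="${EC2_HOST:-203.0.113.10}"

connect_to_instance() {
  # SSH refuses private keys that other users can read.
  chmod 400 "$KEY_FILE"
  ssh -i "$KEY_FILE" "ubuntu@$EC2_HOST"
}

# Usage:
#   EC2_HOST=<your instance public IP> connect_to_instance
```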

Update the package lists:

sudo apt-get update

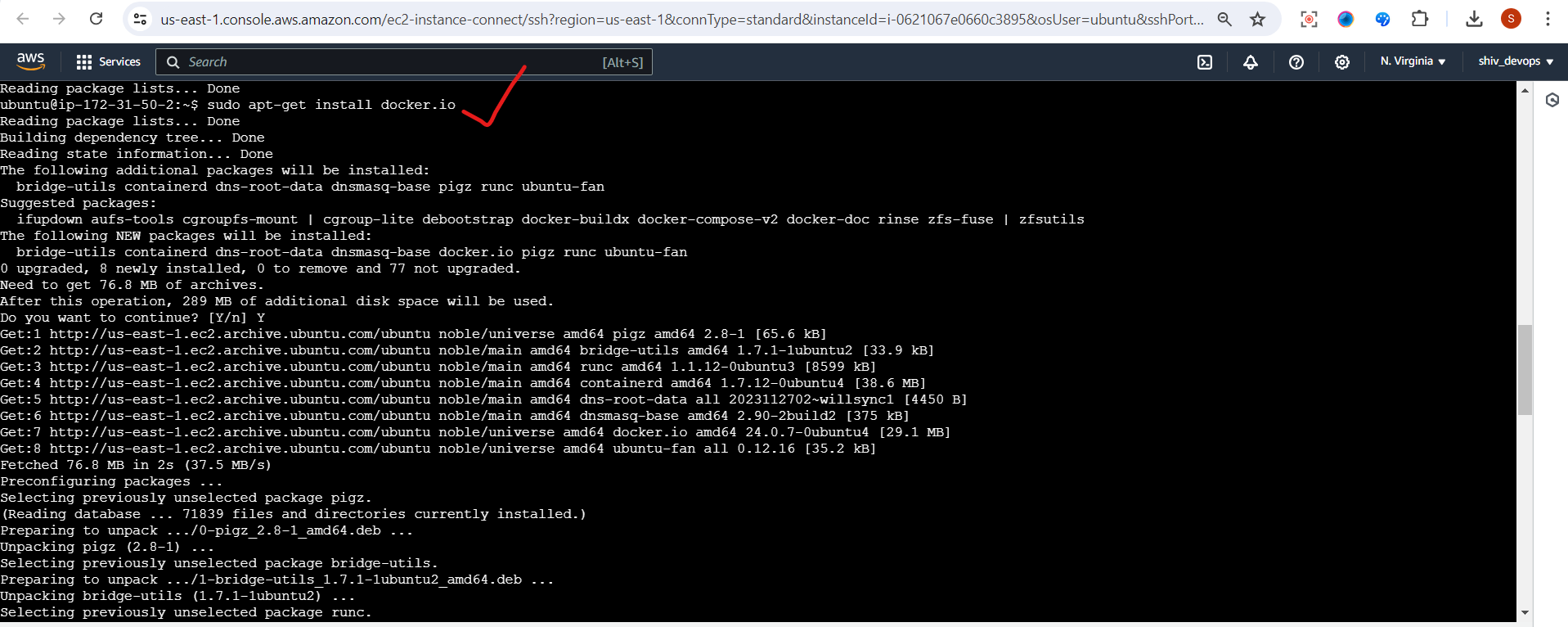

- Install Docker, which this guide uses as the Minikube driver:

sudo apt-get install docker.io

- Start the Docker service:

sudo systemctl start docker

Check Docker is running by using the following command:

sudo systemctl status docker

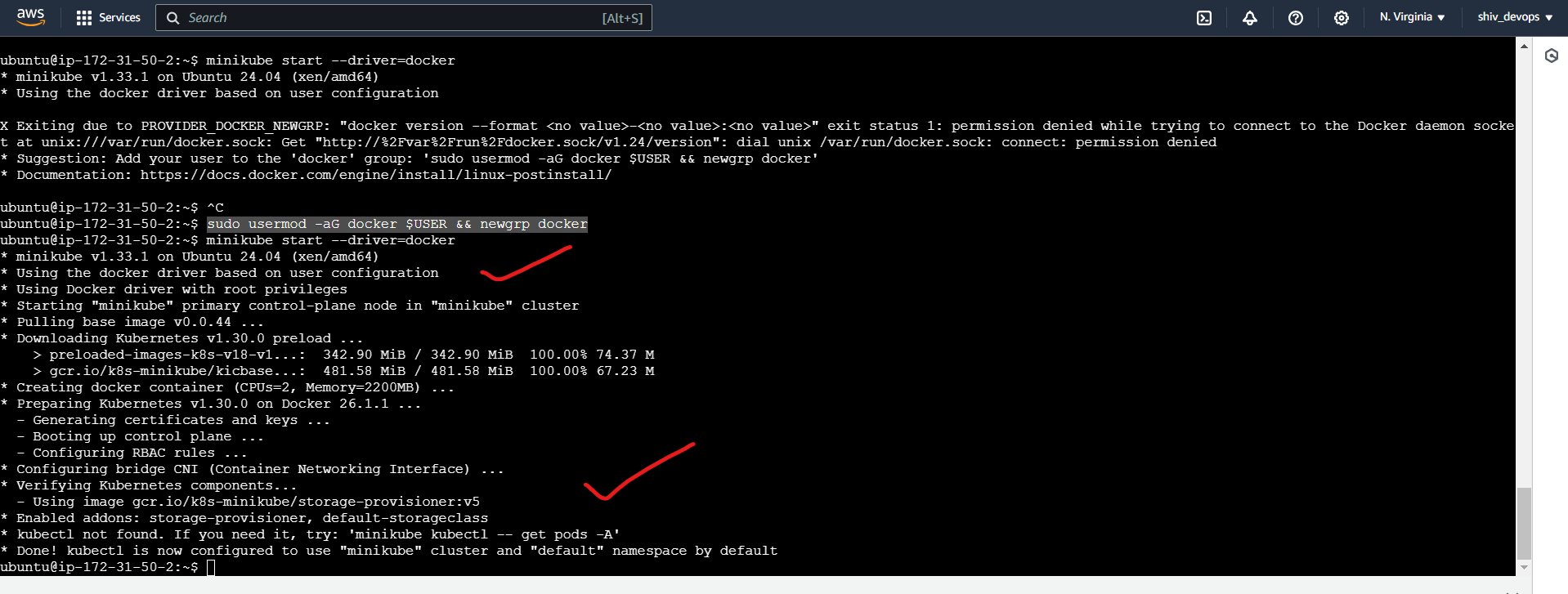

Add your user to the docker group to run Docker commands without sudo:

sudo usermod -aG docker $USER && newgrp docker

- The newgrp command applies the new group to the current shell only. Log out and log back in for the change to take effect in every session.
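Before moving on, it can help to confirm that the group change is active and that Docker works without sudo. The helper name in_docker_group below is hypothetical, and hello-world is Docker's small public test image.

```shell
# Succeeds when the current user's active groups include "docker".
in_docker_group() {
  id -nG | tr ' ' '\n' | grep -qx docker
}

if command -v docker >/dev/null 2>&1; then
  if in_docker_group; then
    # Runs a tiny test container and removes it afterwards.
    docker run --rm hello-world
  else
    echo "docker group not active yet; log out and back in, then retry"
  fi
else
  echo "docker not found; install it first"
fi
```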

Step 3: Install Minikube

- Install the latest version of Minikube by following the official installation instructions for your Linux distribution: https://minikube.sigs.k8s.io/docs/start/

For an x86-64 Linux instance, such as the Ubuntu server created above, you can use the following commands:

curl -LO https://storage.googleapis.com/minikube/releases/latest/minikube-linux-amd64

sudo install minikube-linux-amd64 /usr/local/bin/minikube

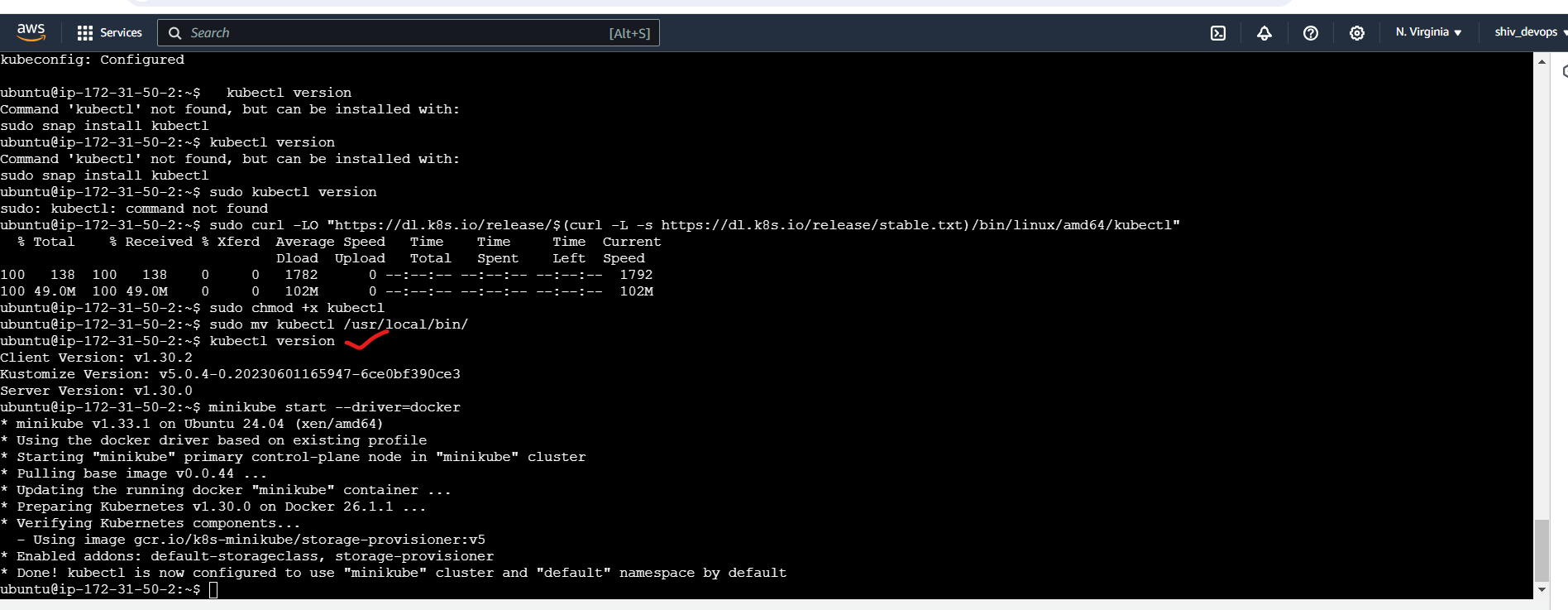

- Start Minikube with the desired VM driver (e.g., Docker):

minikube start --driver=docker

This command will create a single-node Kubernetes cluster running inside a Docker container.

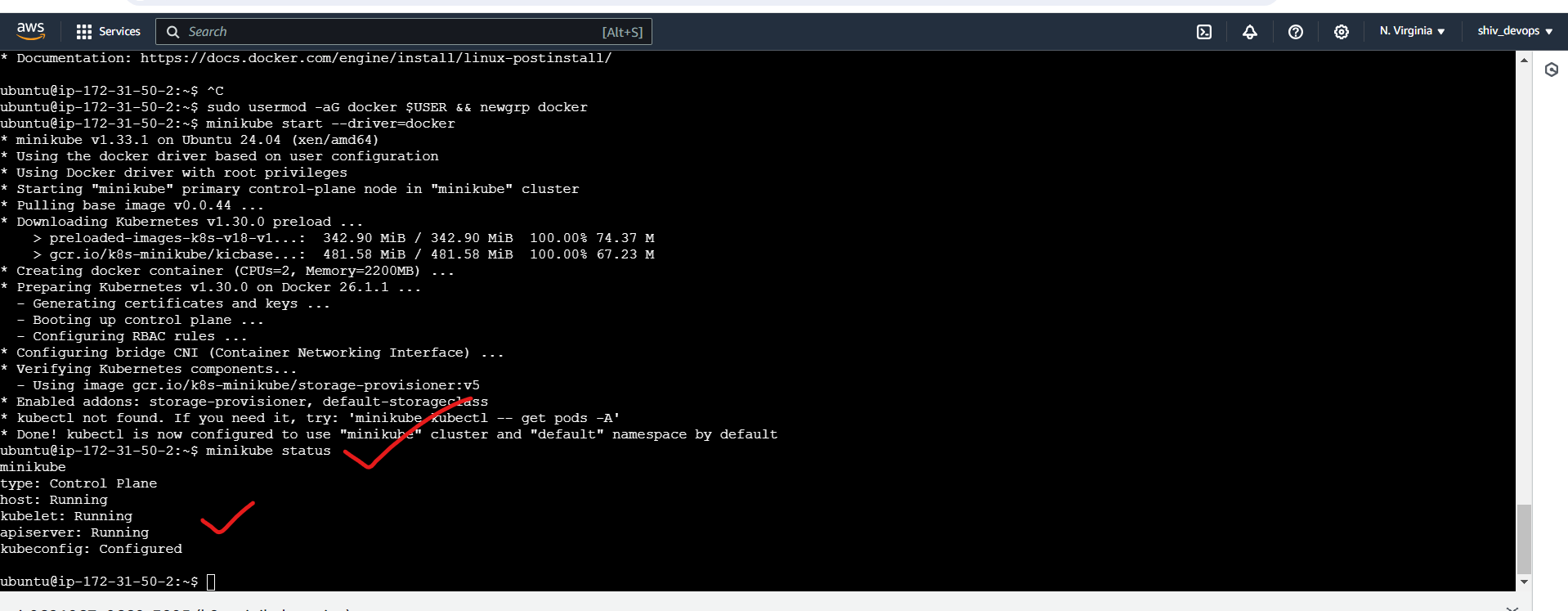

- After Minikube is successfully started, you can verify the cluster status using:

minikube status

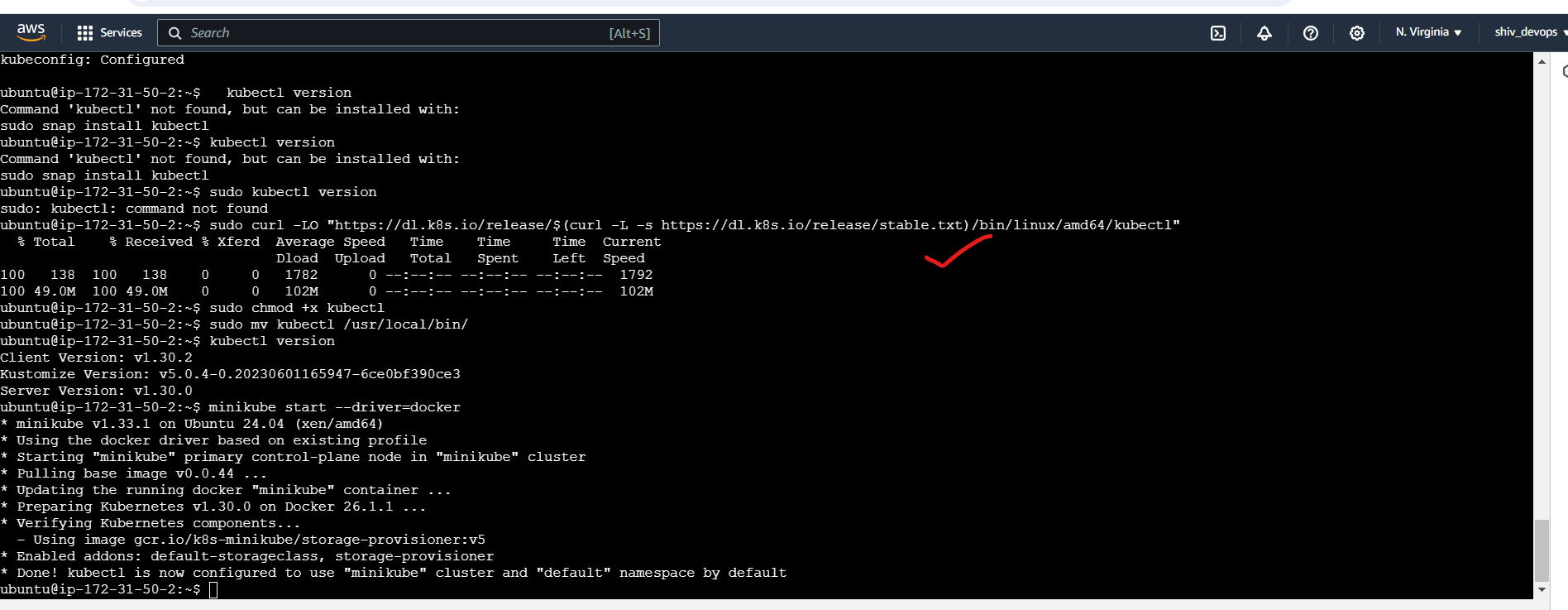
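The status check can also be scripted. The sketch below reads only the host state through the --format option of minikube status and, if the cluster is up, lists the node with the kubectl that Minikube bundles (useful here because the standalone kubectl is installed in the next step). The function name cluster_host_state is made up for illustration.

```shell
# Print the state of the Minikube host, for example Running or Stopped.
cluster_host_state() {
  minikube status --format '{{.Host}}'
}

if command -v minikube >/dev/null 2>&1; then
  if [ "$(cluster_host_state)" = "Running" ]; then
    # Minikube's bundled kubectl; arguments after -- go to kubectl.
    minikube kubectl -- get nodes
  else
    echo "Minikube is not running; try: minikube start --driver=docker"
  fi
else
  echo "minikube not found; install it first"
fi
```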

Step 4: Install kubectl on the Ubuntu EC2 Instance

1. Download the kubectl binary using curl:

sudo curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl"

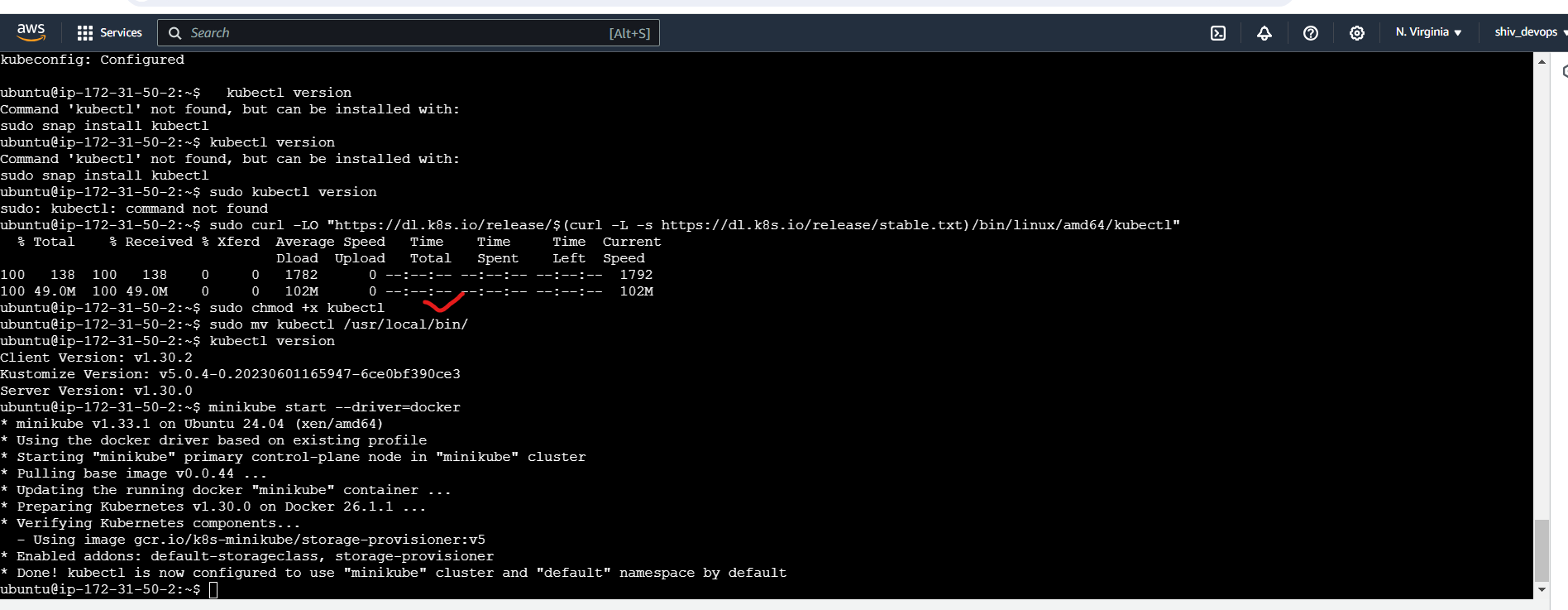

2. Make the binary executable:

sudo chmod +x kubectl

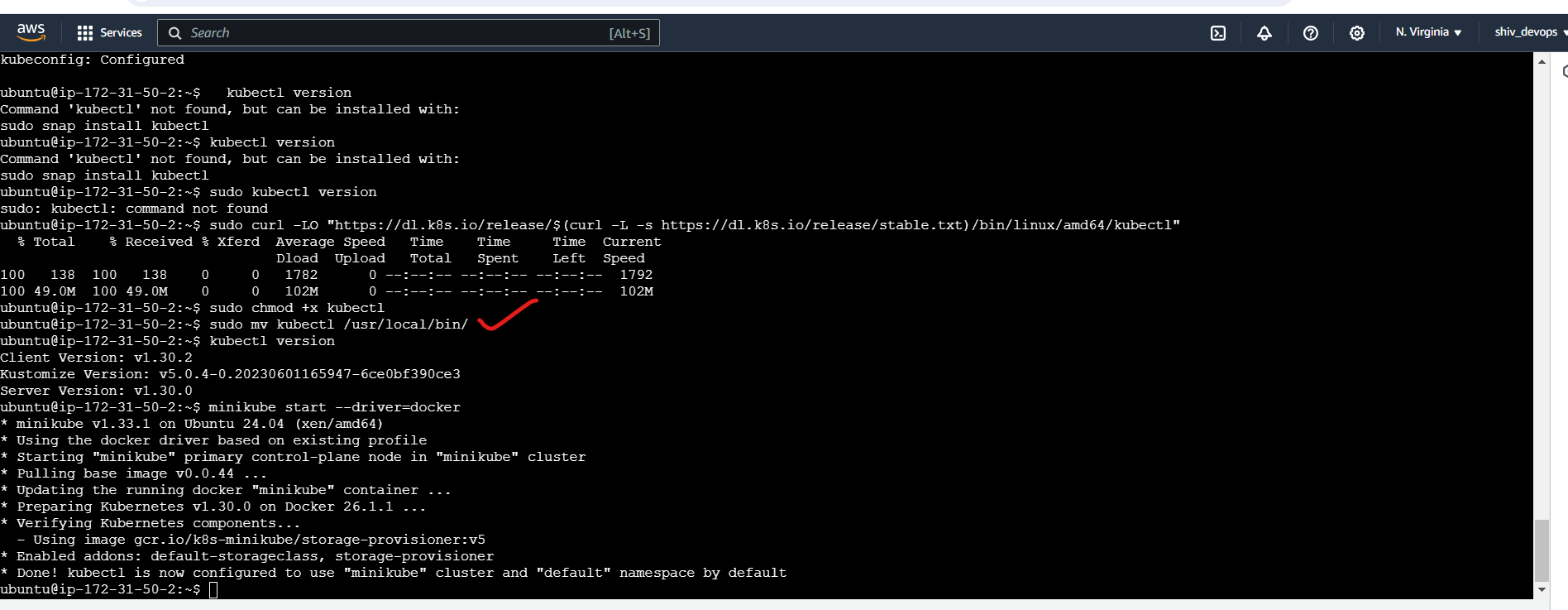

3. Move the kubectl binary to a directory that is in the system's PATH:

sudo mv kubectl /usr/local/bin/

4. Test the kubectl installation:

kubectl version
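An optional extra for the download step is to verify the binary against the checksum file published next to it at the same release URL. The sketch below follows that approach. The function names are hypothetical, and it assumes sha256sum from GNU coreutils, which Ubuntu ships by default.

```shell
# Check file $1 against checksum file $2, which holds only the hex digest.
verify_sha256() {
  echo "$(cat "$2")  $1" | sha256sum --check --status
}

# Download kubectl and its checksum for the current stable release, then verify.
download_and_verify_kubectl() {
  version="$(curl -L -s https://dl.k8s.io/release/stable.txt)"
  curl -LO "https://dl.k8s.io/release/${version}/bin/linux/amd64/kubectl"
  curl -LO "https://dl.k8s.io/release/${version}/bin/linux/amd64/kubectl.sha256"
  if verify_sha256 kubectl kubectl.sha256; then
    echo "kubectl: checksum OK"
  else
    echo "kubectl: checksum mismatch, do not install this file" >&2
    return 1
  fi
}

# Usage (requires network access):
#   download_and_verify_kubectl
```

If the check fails, delete the downloaded file and fetch it again rather than moving it into /usr/local/bin.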

Step 5: Deploy Nginx on a Kubernetes Pod

With Minikube up and running, you can now deploy applications on your local Kubernetes cluster. Let's start by deploying the Nginx web server on a Pod.

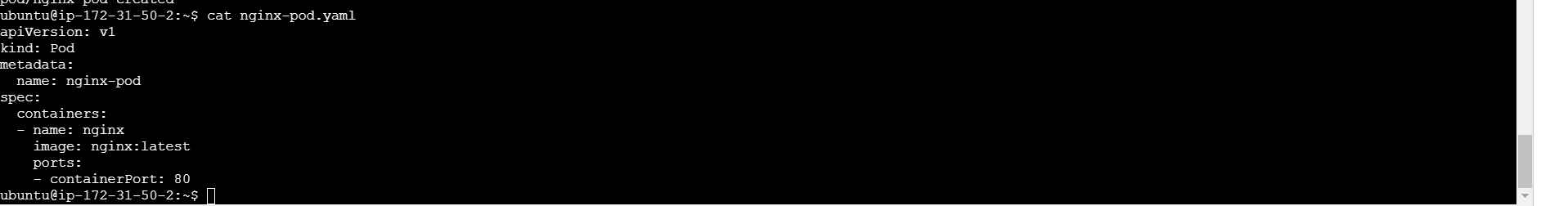

- Create a new YAML file named nginx-pod.yaml with the following content:

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx-pod
    spec:
      containers:
        - name: nginx
          image: nginx:latest
          ports:
            - containerPort: 80

This YAML file defines a Pod with a single container running the latest Nginx image and exposing port 80.

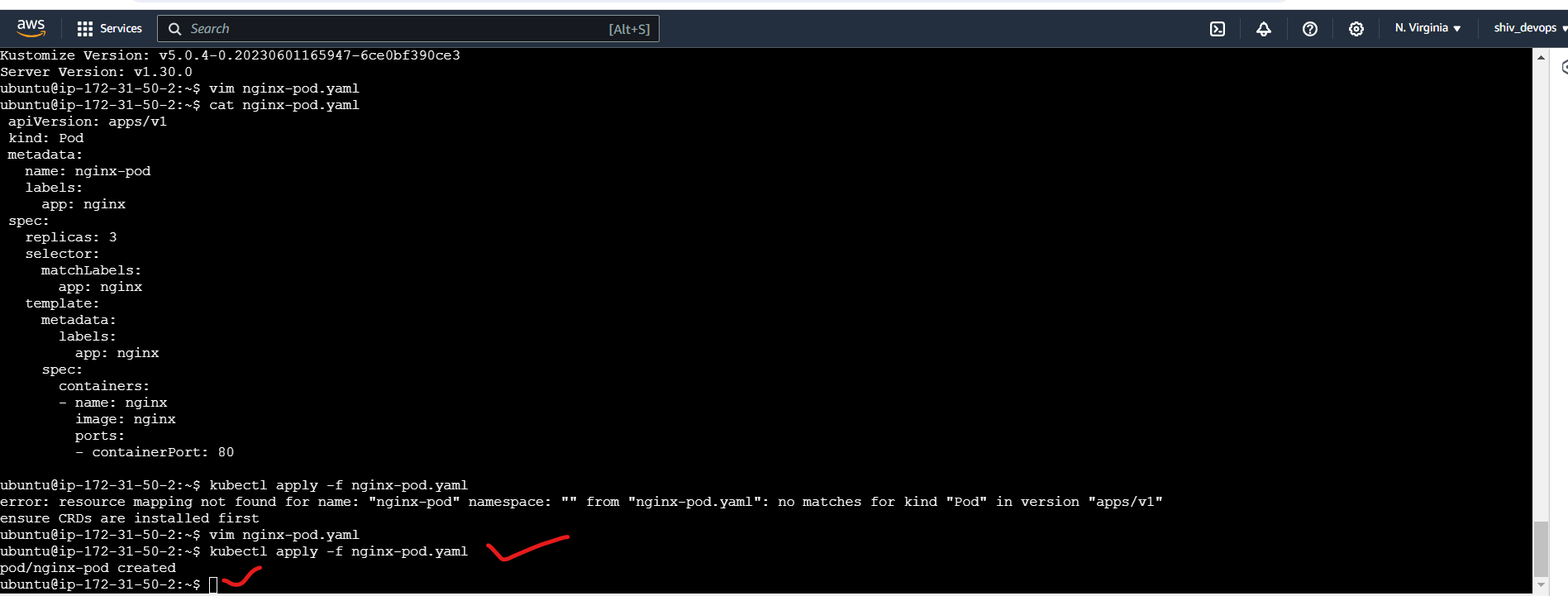

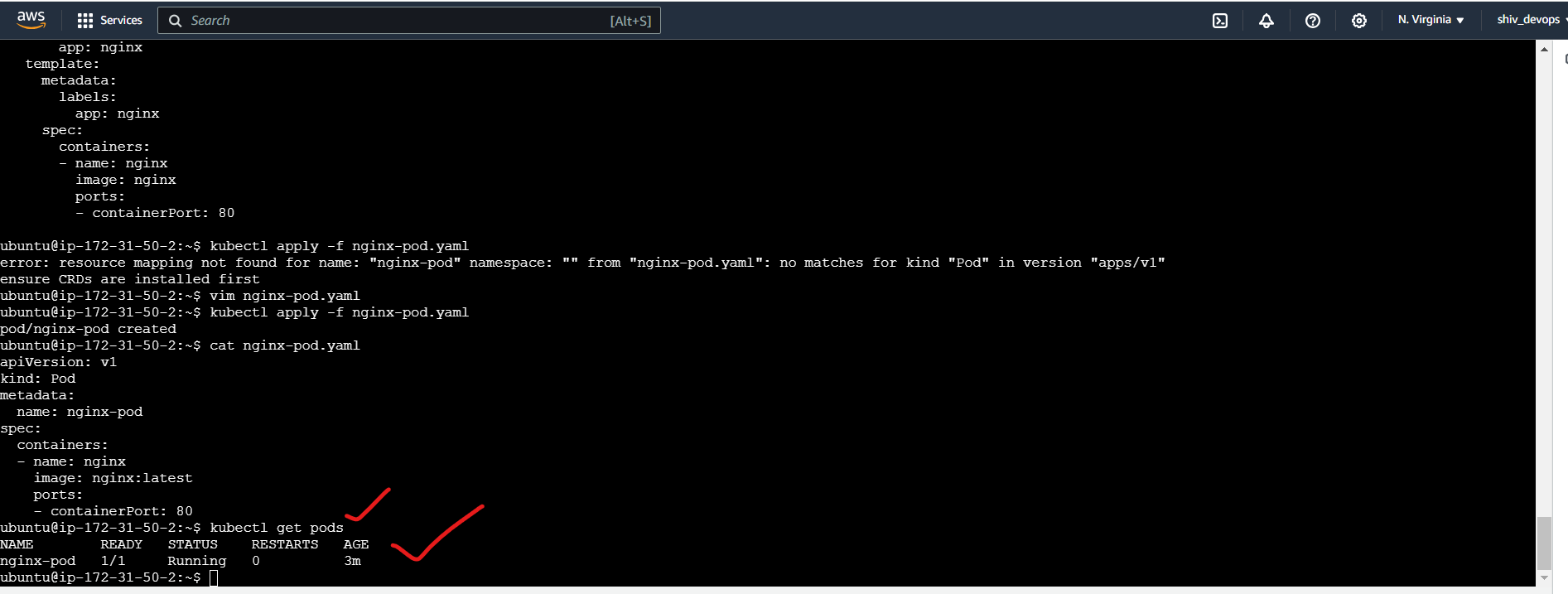

- Apply the YAML file to create the Pod:

kubectl apply -f nginx-pod.yaml

- Verify that the Pod is running:

kubectl get pods

You should see the nginx-pod in the list of running Pods.
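Right after kubectl apply the Pod may still be pulling its image, so a scripted check usually waits for readiness first. The sketch below waits for the Pod and then fetches the Nginx welcome page through a temporary port-forward. The local port 8080 and the function names are assumptions, and curl is expected to be installed on the instance.

```shell
# Block until nginx-pod reports Ready, or give up after 60 seconds.
wait_for_nginx_pod() {
  kubectl wait --for=condition=Ready pod/nginx-pod --timeout=60s
}

# Forward local port 8080 to port 80 in the Pod, fetch the page, then clean up.
fetch_nginx_page() {
  kubectl port-forward pod/nginx-pod 8080:80 >/dev/null 2>&1 &
  pf_pid=$!
  sleep 2   # give the port-forward a moment to start listening
  curl -s http://localhost:8080/ | head -n 5
  kill "$pf_pid"
}

if command -v kubectl >/dev/null 2>&1; then
  wait_for_nginx_pod && fetch_nginx_page
else
  echo "kubectl not found; skipping the check"
fi
```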

List the Services in the cluster using the following command:

kubectl get svc

This command lists the Services in the current namespace. Creating a Pod does not create a Service, so at this point the list contains only the default kubernetes Service. A Service for Nginx has to be created separately.

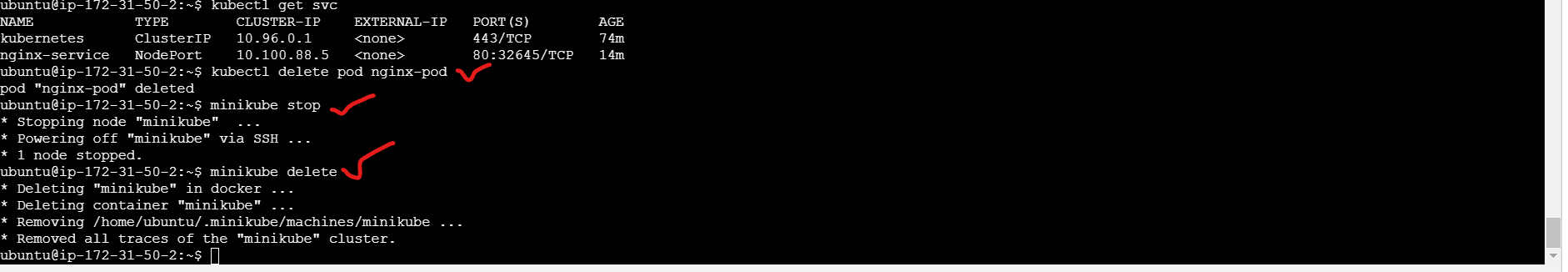
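For kubectl get svc to list an Nginx entry, a Service has to exist for the Pod. A minimal sketch follows, assuming the Service name nginx-service and the label app=nginx, neither of which appears in the manifest above. The label is needed because kubectl expose builds the Service selector from the Pod's labels, and nginx-pod was created without any.

```shell
# Label the Pod, expose it through a NodePort Service, and list the result.
expose_nginx_pod() {
  kubectl label pod nginx-pod app=nginx --overwrite
  kubectl expose pod nginx-pod --name=nginx-service --port=80 --type=NodePort
  kubectl get svc nginx-service
}

# Print a URL on the Minikube node that reaches the Service.
nginx_service_url() {
  minikube service nginx-service --url
}

# Usage:
#   expose_nginx_pod
#   curl -s "$(nginx_service_url)"
```

If you create the Service, remember to remove it during clean-up with kubectl delete svc nginx-service.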

Step 6: Clean Up

When you're done experimenting with Minikube, you can stop the cluster and delete the resources using the following commands:

kubectl delete pod nginx-pod

minikube stop

minikube delete

The minikube delete command removes the Minikube cluster (with the Docker driver, the container that backs it) and all associated resources.

Conclusion

In this blog post, we covered the steps to install Minikube on an AWS EC2 instance and deploy the Nginx web server on a Kubernetes Pod. Minikube provides a convenient and lightweight way to learn and experiment with Kubernetes locally before deploying to a production environment.

By following this guide, you can explore Kubernetes concepts, deploy sample applications, and gain hands-on experience with container orchestration. As you become more comfortable with Minikube, you can move on to more advanced Kubernetes topics and eventually migrate to a full-fledged Kubernetes cluster for production workloads.

Thank you for joining me on this journey through the world of cloud computing! Your interest and support mean a lot to me, and I'm excited to continue exploring this fascinating field together. Let's stay connected and keep learning and growing as we navigate the ever-evolving landscape of technology.

LinkedIn Profile: https://www.linkedin.com/in/prasad-g-743239154/

Feel free to reach out to me directly at spujari.devops@gmail.com. I'm always open to hearing your thoughts and suggestions, as they help me improve and better cater to your needs. Let's keep moving forward and upward!

If you found this blog post helpful, please consider showing your support by giving it a round of applause👏👏👏. Your engagement not only boosts the visibility of the content, but it also lets other DevOps and Cloud Engineers know that it might be useful to them too. Thank you for your support! 😀

Thank you for reading and happy deploying! 🚀

Best Regards,

Sprasad
