The Core Architecture of a k8s Cluster

Manik Mehta

"Kubernetes": I have been playing with this word for the last month. Whenever we start learning something new, one question always arises in our mind:

Why are we even learning this?

This question can take its own modified forms:

Why should we learn k8s?

Why pods, not containers?

Why do the kubelet, the control plane, etc. act the way they do?

There are already many blogs on getting started with Kubernetes, but I will try to write this one so that these questions get answered! To be honest, I am writing this blog to make my own concepts clearer, so there will always be at least one reader of this blog, and that is me. Let's start by answering a simple question:

Why k8s?

You might have built an Express or a Java application and want to make it available to end users. Many services, like Vercel, let you deploy an application with a single click. If you go a little more advanced, you might use an Amazon EC2 instance. Let's say you deployed your application on EC2 and boom! You start getting traffic! Your idea was so good that the number of users went from 250 per day to 25,000. You are a very good programmer and your code is optimized enough to handle 25,000 users, but you know it will not be able to handle more than that. So you create another EC2 instance and put a load balancer or reverse proxy in front of both to share the load.

But do you think installing your server on an EC2 machine is easy? It may or may not be. You need to install Node.js (or a JVM for a Java app) and then all the dependencies of your server on the machine. Let's say you did it for the second instance, but what if tomorrow more than 75,000 users visit your server? Or what if they visit while you are sleeping? Will you monitor your app logs 24 hours a day? Even if that were possible, would installing your server on a new EC2 instance every time be easy? What if your app becomes more complex and more dependencies are added? Installing and managing them on different machines would be a headache! And what will you do when one of the instances goes down? I think these questions are enough to show that this approach is not going to work!

And here comes a savior: containerization! I will not dive into it, as this blog is not about it, but if you don't have any idea about containers, do read about them and come back here! Now say that you containerized your application. All the questions above remain the same, except that launching a container is much easier than launching an EC2 instance.

So here comes the greatest friend of containers: Kubernetes, or k8s. It was originally developed at Google and is now maintained by the CNCF (Cloud Native Computing Foundation). Kubernetes is a container orchestrator which will:

1) Deploy your containerized application

2) Monitor all the containers and, if a container goes down, bring it back up

3) Scale up when traffic is high and scale down when it's low (once you configure autoscaling).

And much more! I think this is enough of an intro to k8s, and you must be convinced by now that you should definitely learn it. Let's now focus on its core concepts!

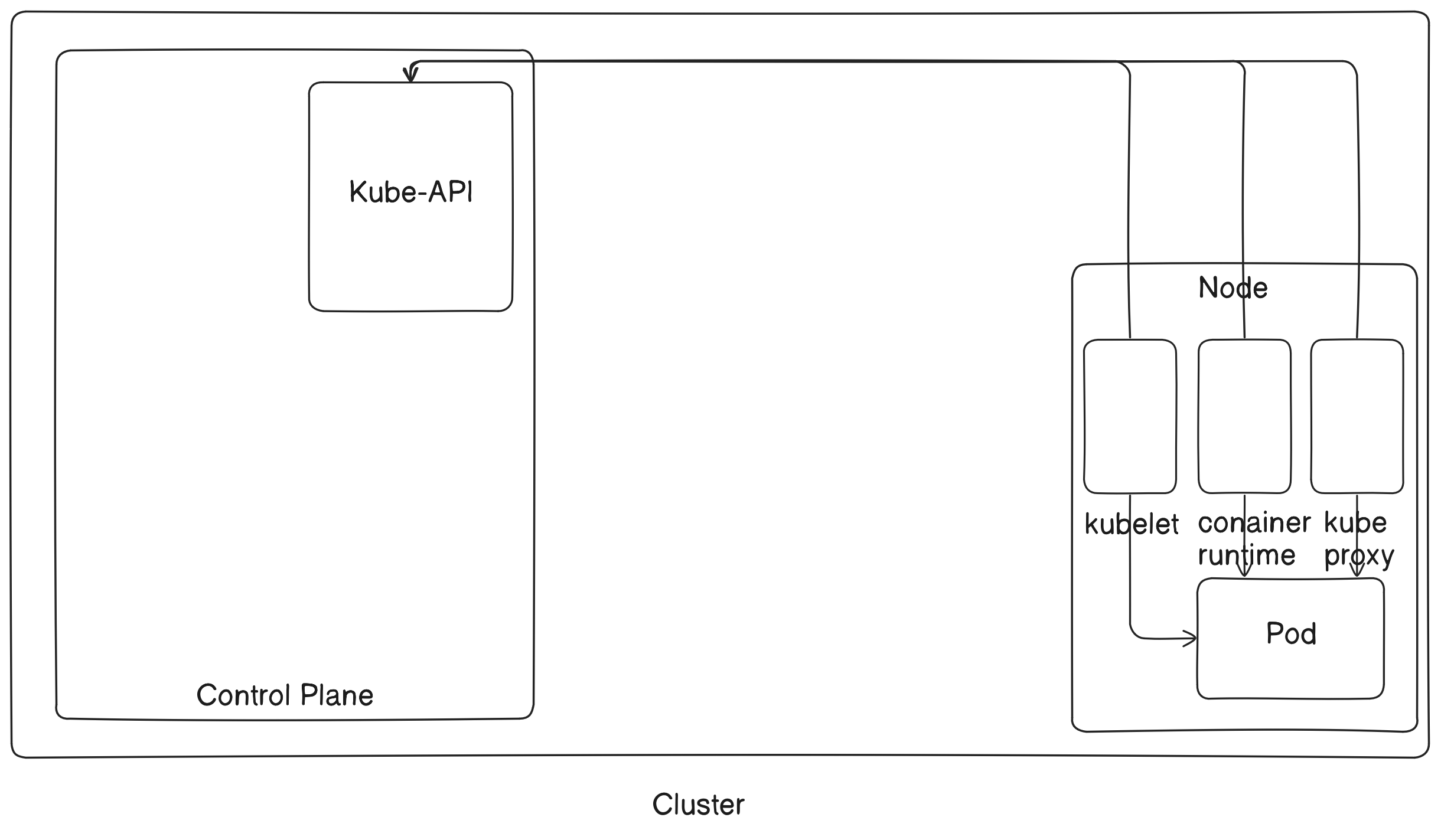

Cluster

A Kubernetes cluster is the place where all of your Kubernetes components live. For now, just understand that it is the place where all of your containers will live and be managed. Look at this empty box and call it a cluster; by the end of the blog this box will be filled.

Pods

If I have to define it in a single line, I would say a pod is an abstraction over containers. You can't hand a bare container to k8s; instead, you wrap your container inside a pod and deploy the pod. A pod is the smallest unit that Kubernetes deploys and manages. It usually holds a single container, but it can hold several tightly coupled containers that share the same network identity (one IP address) and storage volumes. But you will ask: why the extra wrapper? Part of the answer is that Docker is not the only tool for creating and running containers (I know, if you have studied containers you probably think Docker is the only one!). Containers can be built with different tools and run on different runtimes, so Kubernetes works with its own common object, the pod, and leaves the runtime-specific details to the node (more on that below). The pod also gives k8s one place to describe everything a container needs: its image, resources, restart policy and network. So in k8s, you think in pods rather than in containers. Now we have a pod in our cluster!

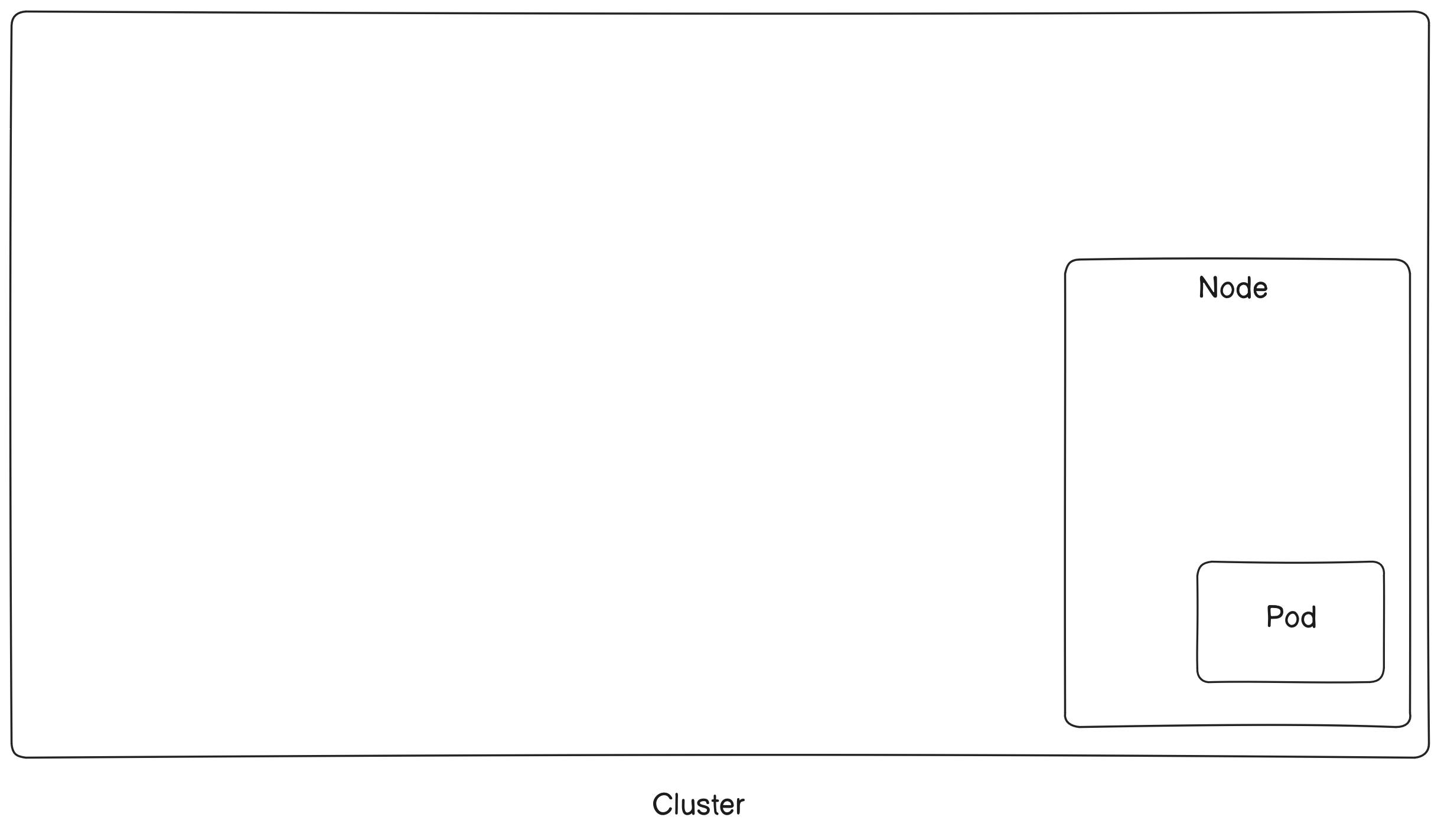
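To make this concrete, here is a deliberately simplified Python model of a pod. The classes, the image name `log-agent` and the IP addresses are hypothetical and exist only for illustration; a real Pod object has many more fields. The sketch shows two things. A pod wraps one or more containers, and it gives all of them a single IP address, so containers in the same pod differ only by the port they listen on.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Container:
    name: str
    image: str  # image reference, e.g. "manik-example"


@dataclass
class Pod:
    name: str
    containers: List[Container] = field(default_factory=list)
    ip: str = ""  # one IP per pod, shared by all of its containers

    def endpoint(self, port: int) -> str:
        # Containers in the same pod share one network namespace, so each of
        # them is reachable at the pod's IP; only the port they listen on differs.
        return f"{self.ip}:{port}"


# Most pods wrap a single container...
web = Pod("web", [Container("server", "manik-example")], ip="10.244.1.5")

# ...but a pod can also carry a helper ("sidecar") container that shares its IP.
web2 = Pod(
    "web-2",
    [Container("server", "manik-example"), Container("log-shipper", "log-agent")],
    ip="10.244.1.6",
)

print(web.endpoint(3000))    # 10.244.1.5:3000
print(len(web2.containers))  # 2
```

Because the two containers in `web2` share a network namespace, they can also reach each other over `localhost`. This is one of the main reasons to group containers into the same pod.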

Nodes

Where do you think containers run? Probably heaven! Is it? NO! They run on a machine too. When you turn your machine on and run a container on it, we say the container is running on a physical machine, and when you install VirtualBox and run a container inside it, we say it is running on a virtual machine. So when you deploy pods on k8s, where do they run? The answer is a node. A node is a physical or virtual machine (depending on the cluster) that is responsible for running pods. So you can say that a pod runs inside a node, or that a pod is placed on a node. There are mainly two types of nodes: master nodes (nowadays usually called control plane nodes) and worker nodes. The master node is responsible for managing the cluster. It keeps track of every node and decides where pods should run. If a node stops responding, the pods on it that are managed by a controller are recreated on healthy nodes. A worker node is simply a node that runs your containers. Now look at the figure again!

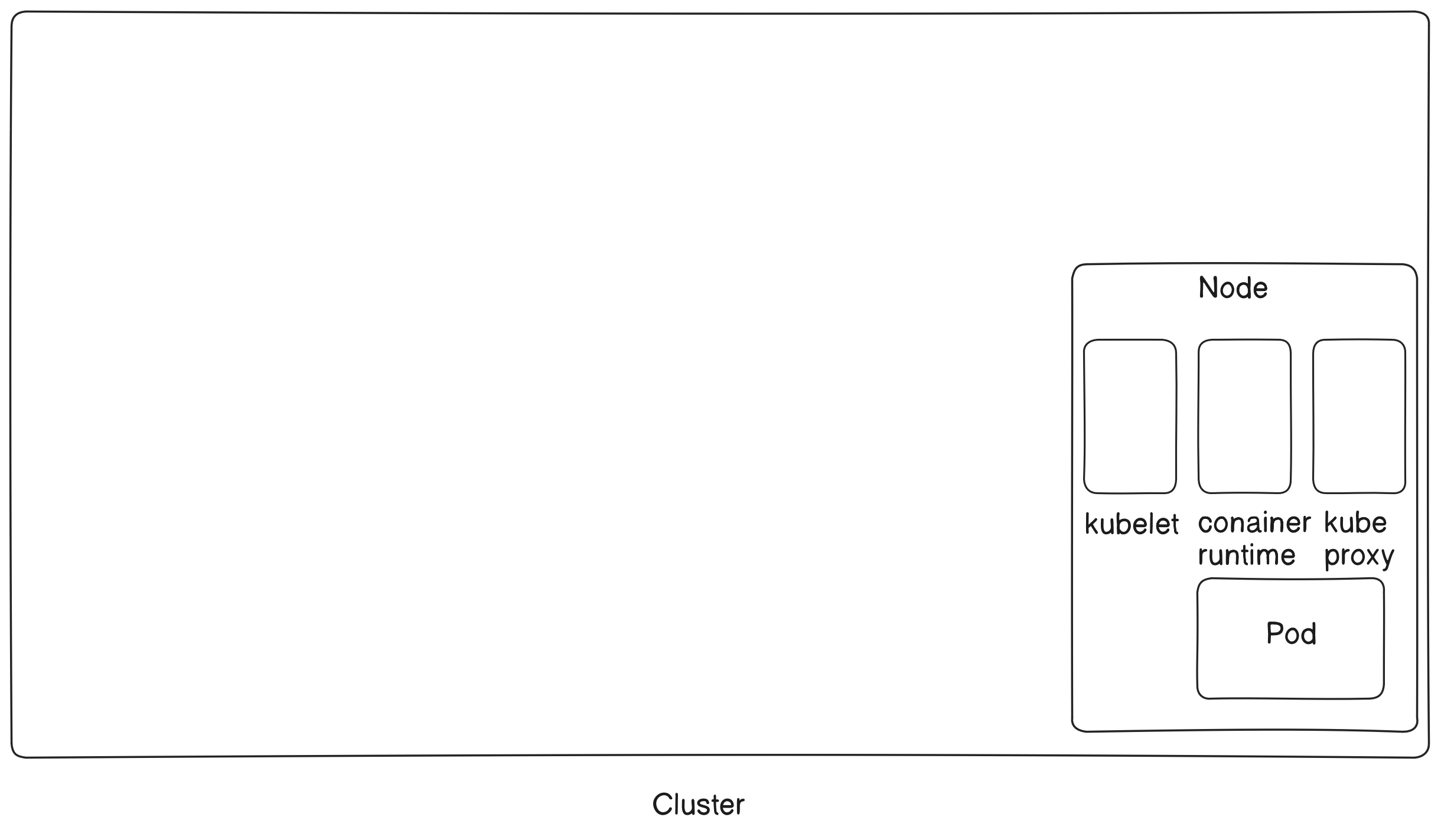

Kubelet, Container Runtime and Kube-proxy

Say that your container uses Docker Engine (that is, you containerized your application with Docker) and is built from a Docker image (say, manik-example). Now you want to deploy it on k8s. Some questions should arise in your mind:

Who will ensure that my pod is running? How can I trust k8s?

What if I used some other engine? Does k8s need Docker installed?

My application has a server and a database. How will my server talk to the database inside k8s?

To answer these questions let me define the components of a node:

Kubelet: The kubelet is the agent on each node that ensures your pods are running. It makes sure each pod uses the correct image and gets the resources it asked for. If a container in a pod crashes, the kubelet restarts that container.
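The kubelet's "keep things running" job can be pictured as a loop that compares what should run with what is running. Below is a toy, single-pass sketch of that idea. The function names and the `pod/container` naming scheme are hypothetical. The real kubelet works with full pod specs, applies a back-off between restarts, and starts containers by calling the container runtime through the Container Runtime Interface (CRI) rather than by updating a dictionary.

```python
from typing import Dict, List


def start_container(name: str, running: Dict[str, str], log: List[str]) -> None:
    # Stand-in for asking the container runtime to (re)start a container.
    running[name] = "running"
    log.append(f"started {name}")


def sync_node(desired: List[str], running: Dict[str, str]) -> List[str]:
    """One sync pass for a node, assuming every pod uses restartPolicy: Always."""
    log: List[str] = []
    for name in desired:
        state = running.get(name)
        if state is None:
            # The container was never started on this node: start it.
            start_container(name, running, log)
        elif state != "running":
            # The container exited or crashed: restart it.
            log.append(f"{name} is {state}, restarting")
            start_container(name, running, log)
    return log


running = {"web/server": "running", "db/postgres": "exited"}
actions = sync_node(["web/server", "db/postgres", "cache/redis"], running)
print(actions)
# ['db/postgres is exited, restarting', 'started db/postgres', 'started cache/redis']
```

Running the same pass again changes nothing, because the node already matches what it was asked to run.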

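Container Runtime: This answers the second question. The container runtime is the software that actually runs containers on the node, and the kubelet talks to it through the Container Runtime Interface (CRI). Docker is not required. Images built with Docker follow the standard OCI image format, so they run on any CRI-compatible runtime, such as containerd or CRI-O. (Since Kubernetes 1.24, Docker Engine can no longer be used as the runtime directly; it needs an extra adapter such as cri-dockerd.)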

Kube-proxy: This answers the third question. Kube-proxy runs on every node and maintains network rules so that traffic sent to a Service (a stable address in front of a group of pods) reaches one of the pods behind it. This works whether the traffic comes from inside or outside the cluster. So if your database runs in its own pods behind a Service, your server pod can always reach it at the same address, even when the database pods are replaced. This definition is incomplete, and to really understand networking in k8s you need to learn more concepts like Services. For now, this much is sufficient.
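What a Service gives your server pod is one stable address in front of a changing set of pod IPs. The toy table below illustrates only that idea. It is not how kube-proxy is implemented: kube-proxy does not forward traffic through a Python process but programs kernel rules (for example iptables or IPVS) on each node. The round-robin choice of backend is a simplifying assumption, and the Service address `10.96.0.20` and all pod IPs are hypothetical.

```python
import itertools
from typing import Dict, Iterator, List


class ToyServiceTable:
    def __init__(self) -> None:
        # Service address -> endless round-robin iterator over its pod IPs.
        self._backends: Dict[str, Iterator[str]] = {}

    def set_endpoints(self, service_ip: str, pod_ips: List[str]) -> None:
        # Re-run whenever the set of ready pods behind the Service changes.
        self._backends[service_ip] = itertools.cycle(pod_ips)

    def route(self, service_ip: str) -> str:
        # Choose the pod that should receive the next connection.
        return next(self._backends[service_ip])


table = ToyServiceTable()
# The server pod always talks to the database at 10.96.0.20.
table.set_endpoints("10.96.0.20", ["10.244.1.7", "10.244.2.3"])
print([table.route("10.96.0.20") for _ in range(3)])
# ['10.244.1.7', '10.244.2.3', '10.244.1.7']

# One database pod is replaced by a new one; the Service address stays the same.
table.set_endpoints("10.96.0.20", ["10.244.1.7", "10.244.3.9"])
print(table.route("10.96.0.20"))  # 10.244.1.7
```

The server code never changes. Only the table behind the stable address is updated as pods come and go.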

Look at the image:

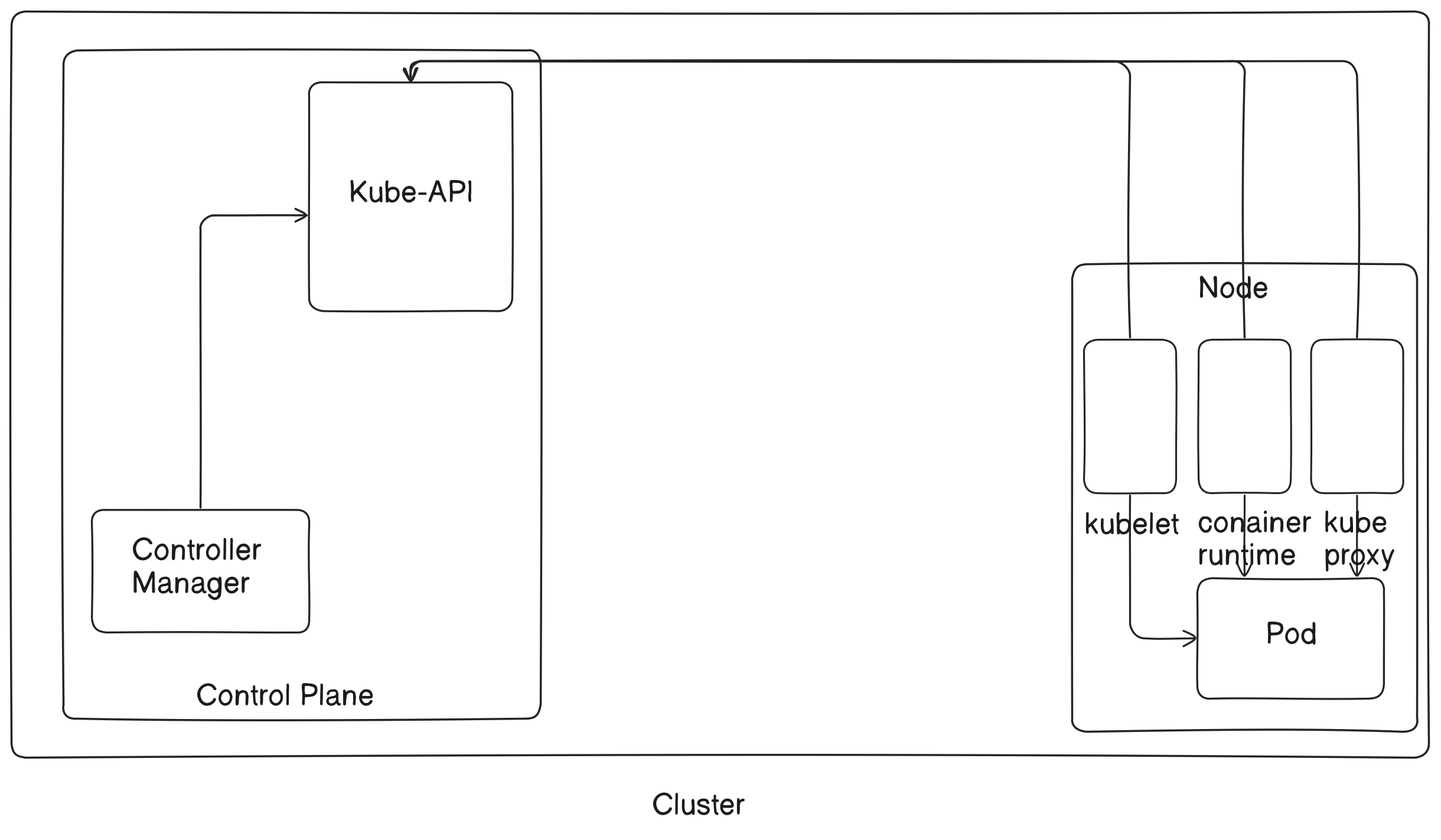

APIs, Kube-API Server and Control Plane

Now let me tell you an interesting fact about k8s: every operation inside k8s happens through a REST API. I expect that you know what REST APIs are! Creating a pod, managing it and deleting it are each handled by different components of k8s, and those components communicate through this REST API. Now you may ask: who serves these APIs? That is the Kube-API server. It is the front end of the cluster. It exposes the Kubernetes API, validates every request, and is the only component that reads and writes the cluster's stored state (kept in etcd, which we will meet soon). This server is a component of the Control Plane. But wait, what is the control plane? It is the orchestration layer that manages the lifecycle of containers, or rather pods, and it exposes this API server as its entry point. Please note that the Kube-API server is not the only part of the control plane! In fact, the next sections describe its other components.
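Here is a small sketch of how everyday pod operations map onto REST calls. The URL paths follow the layout of the core `v1` API group for namespaced pods, and each operation uses the standard HTTP method for that action. The helper function `pod_request` is hypothetical and written only for illustration; it builds the request line but does not contact a cluster.

```python
from typing import Optional, Tuple

# Operation -> HTTP method sent to the API server.
METHODS = {"create": "POST", "list": "GET", "get": "GET", "delete": "DELETE"}


def pod_request(action: str, namespace: str, name: Optional[str] = None) -> Tuple[str, str]:
    """Return (HTTP method, URL path) for a basic pod operation."""
    # Pods belong to the core API group (version v1) and live in a namespace.
    path = f"/api/v1/namespaces/{namespace}/pods"
    if action in ("get", "delete"):
        if name is None:
            raise ValueError(f"{action!r} needs a pod name")
        path += f"/{name}"  # address one specific pod
    return METHODS[action], path


print(pod_request("create", "default"))
# ('POST', '/api/v1/namespaces/default/pods')
print(pod_request("delete", "default", "manik-example"))
# ('DELETE', '/api/v1/namespaces/default/pods/manik-example')
```

Tools such as `kubectl` ultimately send requests of this shape to the API server on your behalf.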

Controllers and Controller Manager

As the name suggests, controllers control some part of k8s, and that part is the state of the cluster. Inside a node, a similar job is done by the kubelet. But while the kubelet handles only its own node, the controller manager is responsible for the whole cluster. Let me define a new component: the ReplicaSet, which keeps a chosen number of replicas (identical pods) of your application running. Since you may want to balance the load among different instances, you create a ReplicaSet to manage them. The ReplicaSet ensures that the requested number of replicas are running, and if any replica goes down, a new pod is created. The controller behind this is the ReplicaSet controller. It watches all the ReplicaSets in the cluster, and if the desired state says there should be 10 replicas, it keeps the current state equal to the desired state by creating or deleting pods. There are many more controllers (for nodes, Deployments, Jobs and so on), and the controller manager (kube-controller-manager) runs all of them together in a single process. Let's complete our diagram!

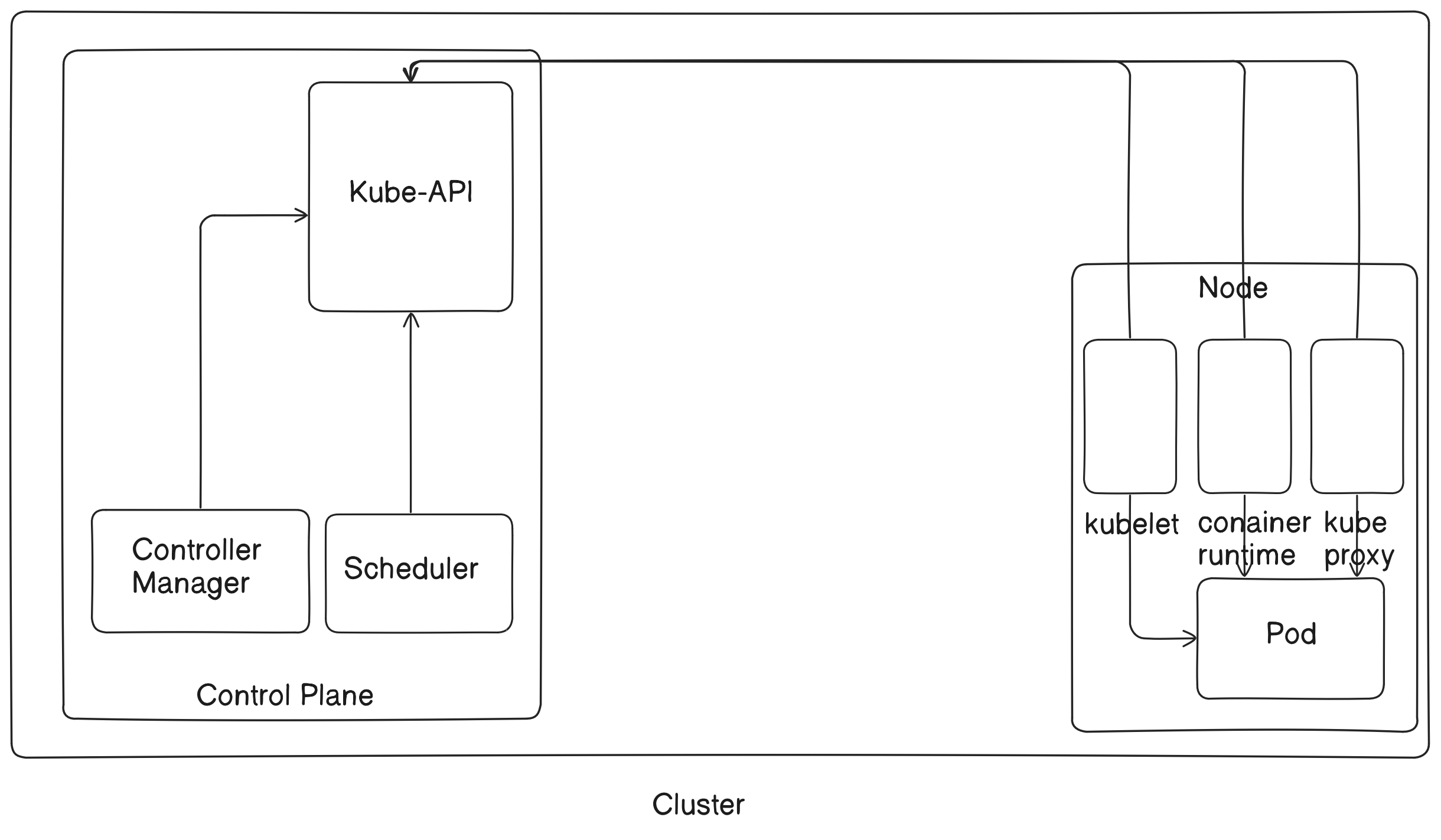
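The "make the current state match the desired state" idea behind the ReplicaSet controller can be sketched as a reconciliation function. The pod names and the `reconcile` helper are hypothetical. The real controller runs continuously, reacts to changes it watches through the API server, and creates or deletes Pod objects through that same API instead of editing a Python list.

```python
import itertools
from typing import Iterator, List


def reconcile(desired: int, pods: List[str], new_names: Iterator[str]) -> List[str]:
    """One reconciliation pass: return the pod list after closing the gap."""
    current = list(pods)
    # Too few replicas: create new pods until the count matches.
    while len(current) < desired:
        current.append(next(new_names))
    # Too many replicas: delete the extras (a no-op when the count already fits).
    del current[desired:]
    return current


names = (f"web-{i}" for i in itertools.count(4))
pods = ["web-1", "web-2", "web-3"]
pods = reconcile(3, pods, names)  # current == desired: nothing to do
pods.remove("web-2")              # a pod dies
pods = reconcile(3, pods, names)  # the controller creates a replacement
print(pods)                       # ['web-1', 'web-3', 'web-4']
pods = reconcile(2, pods, names)  # desired state changed: scale down to 2
print(pods)                       # ['web-1', 'web-3']
```

Scaling up, scaling down and replacing a dead pod are all the same operation here: compare, then close the gap.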

Scheduler

This is the Control Plane process that assigns pods to nodes. New pods that do not have a node yet are placed in a scheduling queue. The scheduler takes each pod from this queue and decides which node it should be assigned to. This decision depends on the pod's constraints, the resources it requests and the resources available on each node. The scheduler first filters out the nodes that cannot run the pod and then scores the remaining ones to pick the best fit. The default scheduler is kube-scheduler, and a cluster can also run additional custom schedulers if needed. See this diagram:

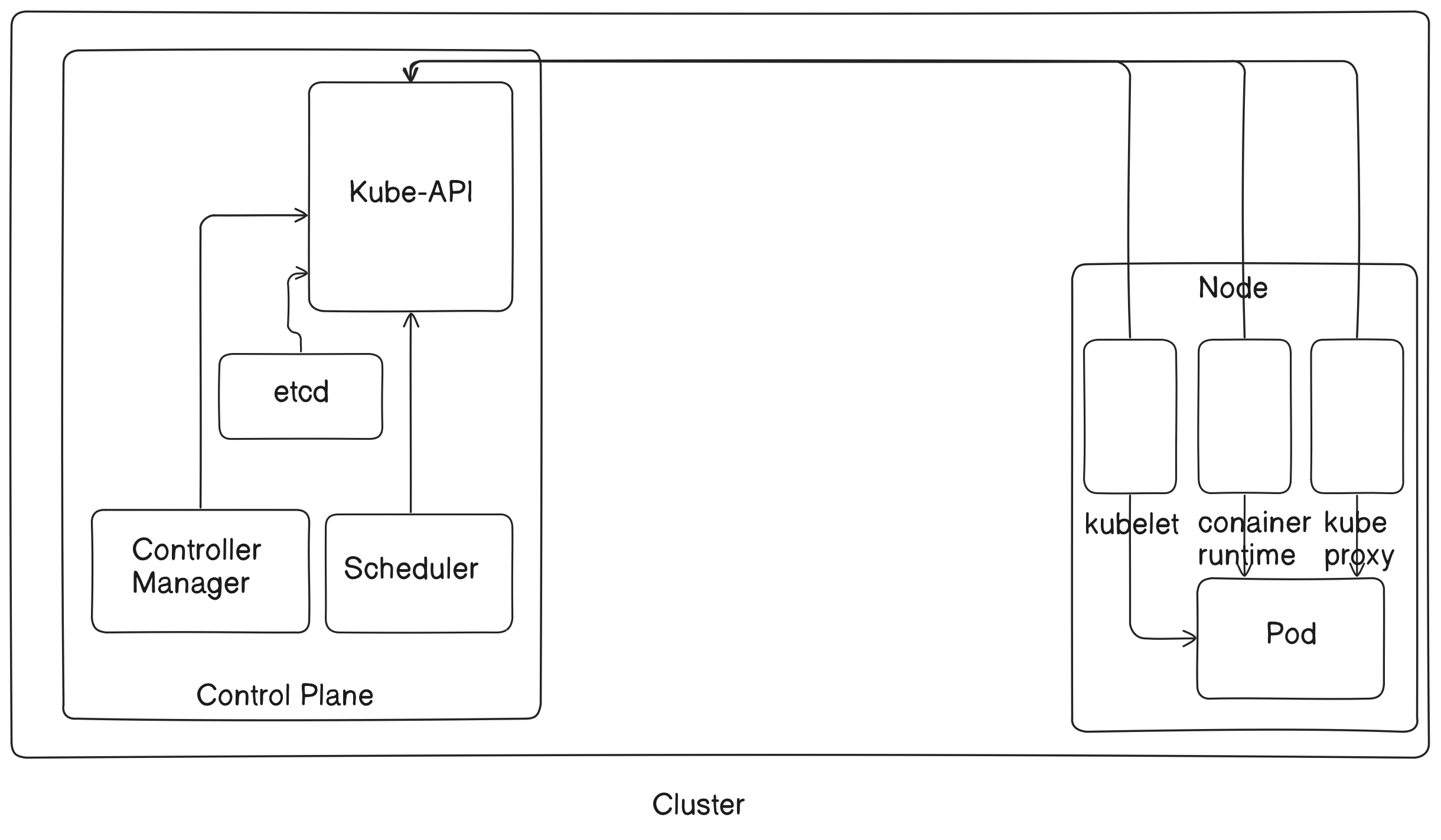
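The two phases of a scheduling decision, filtering and then scoring, can be sketched in a few lines. This is a toy under stated assumptions. Only CPU and memory requests are considered, and the single scoring rule ("most CPU left over after placement") is invented for the example. The real kube-scheduler combines many filter and score plugins (affinity, taints, spreading and more). Node names and numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu_m: int   # free CPU in millicores (1000m = 1 CPU)
    free_mem_mi: int  # free memory in MiB


@dataclass
class PodRequest:
    name: str
    cpu_m: int
    mem_mi: int


def schedule(pod: PodRequest, nodes: List[Node]) -> Optional[str]:
    # 1) Filter: keep only nodes that can fit the pod's resource requests.
    feasible = [
        n for n in nodes if n.free_cpu_m >= pod.cpu_m and n.free_mem_mi >= pod.mem_mi
    ]
    if not feasible:
        return None  # no node fits: the pod stays Pending
    # 2) Score: prefer the node with the most CPU left after placing the pod.
    best = max(feasible, key=lambda n: n.free_cpu_m - pod.cpu_m)
    # Reserve the resources on the chosen node.
    best.free_cpu_m -= pod.cpu_m
    best.free_mem_mi -= pod.mem_mi
    return best.name


nodes = [
    Node("worker-1", free_cpu_m=500, free_mem_mi=1024),
    Node("worker-2", free_cpu_m=2000, free_mem_mi=512),
    Node("worker-3", free_cpu_m=1500, free_mem_mi=4096),
]
print(schedule(PodRequest("api", cpu_m=400, mem_mi=768), nodes))     # worker-3
print(schedule(PodRequest("batch", cpu_m=1200, mem_mi=256), nodes))  # worker-2
print(schedule(PodRequest("huge", cpu_m=5000, mem_mi=256), nodes))   # None
```

In the first call, worker-2 has the most CPU but is filtered out because it lacks memory. This shows why filtering happens before scoring.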

etcd

This is one of the most important components of k8s (every component is important, but this one is special). There are so many things inside a k8s cluster: nodes, pods, services, deployments, etc. So there must be a consistent, highly available, distributed database (you know what a distributed database is, right?) storing all the information about the state of nodes and other components, so that there are no conflicts. That, my friend, is what we call etcd. It is the brain of the k8s cluster! etcd runs on the control plane nodes (or on a separate set of machines), and its members keep their copies of the data in sync with each other. Worker nodes do not get their own copy of etcd. When a new node joins, it learns about the rest of the cluster by talking to the API server, which is the only component that talks to etcd directly. etcd is a vast topic and requires special attention, because once etcd is gone, everything related to the cluster is gone! But for now, just understand that it is a distributed key-value database storing all the information related to the cluster.
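To picture "a key-value database storing all the cluster's information", here is an in-memory toy stand-in for etcd. The key layout mimics the `/registry/...` prefix that the API server uses by default. The values are plain JSON-like strings purely for readability, and the class itself is hypothetical. The store-wide revision counter mirrors etcd's idea of numbering every change. Real etcd also replicates each write across its members using the Raft consensus algorithm, which this single-process sketch does not attempt.

```python
from typing import Dict, Optional, Tuple


class ToyKV:
    """In-memory stand-in for etcd: key-value pairs plus a store-wide revision."""

    def __init__(self) -> None:
        self._data: Dict[str, Tuple[str, int]] = {}  # key -> (value, revision of last write)
        self.revision = 0

    def put(self, key: str, value: str) -> int:
        # Every write bumps the global revision, so newer state can be told apart.
        self.revision += 1
        self._data[key] = (value, self.revision)
        return self.revision

    def get(self, key: str) -> Optional[str]:
        item = self._data.get(key)
        return item[0] if item is not None else None

    def get_prefix(self, prefix: str) -> Dict[str, str]:
        # Range read over all keys sharing a prefix, e.g. "all pods in a namespace".
        return {k: v for k, (v, _) in sorted(self._data.items()) if k.startswith(prefix)}


kv = ToyKV()
kv.put("/registry/pods/default/web-1", '{"phase": "Running"}')
kv.put("/registry/pods/default/web-2", '{"phase": "Pending"}')
kv.put("/registry/nodes/worker-1", '{"ready": true}')
print(sorted(kv.get_prefix("/registry/pods/default/")))
# ['/registry/pods/default/web-1', '/registry/pods/default/web-2']
print(kv.revision)  # 3
```

A hierarchical key layout is what makes questions like "give me every pod in this namespace" a single prefix read.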

This box is filled! Now let's move towards the end of this blog and see the flow of k8s!

Flow: Creating a Pod

How is a pod created in k8s? It starts with your command to create a pod (for example, with kubectl). The request is sent to the API server, which validates it and persists the new pod in etcd. Once etcd confirms the write, the pod exists but has no node yet. The scheduler, which watches the API server for such unscheduled pods, picks a node for it and tells the API server, which records this assignment in etcd. The kubelet on the assigned node watches the API server for pods bound to its node. When it sees the new pod, it asks the container runtime to start the pod's containers and then reports the pod's status back to the API server. Congratulations!! You created your pod! Bye from k8s!!
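The whole flow can be replayed in a toy, single-process simulation. Everything here is hypothetical and simplified. In a real cluster, the scheduler and kubelets are separate processes that only talk to the API server through its REST API and watches, and the store is etcd rather than a dictionary. The naive round-robin placement also replaces the real filtering and scoring.

```python
from typing import Dict, List, Optional


class APIServer:
    def __init__(self) -> None:
        # Stands in for etcd: pod name -> {"node": ..., "phase": ...}.
        self.store: Dict[str, Dict[str, Optional[str]]] = {}

    def create_pod(self, name: str) -> None:
        # Persisted, but not yet assigned to any node.
        self.store[name] = {"node": None, "phase": "Pending"}

    def unscheduled(self) -> List[str]:
        return [n for n, p in self.store.items() if p["node"] is None]

    def bind(self, name: str, node: str) -> None:
        self.store[name]["node"] = node

    def pending_on(self, node: str) -> List[str]:
        return [n for n, p in self.store.items() if p["node"] == node and p["phase"] == "Pending"]

    def set_phase(self, name: str, phase: str) -> None:
        self.store[name]["phase"] = phase


def scheduler_pass(api: APIServer, nodes: List[str]) -> None:
    # The scheduler looks for pods without a node and binds each one.
    for i, pod in enumerate(api.unscheduled()):
        api.bind(pod, nodes[i % len(nodes)])  # naive placement for the sketch


def kubelet_pass(api: APIServer, node: str) -> None:
    # Each kubelet looks for pods bound to its own node, starts them via the
    # container runtime (not modeled here), and reports the new status.
    for pod in api.pending_on(node):
        api.set_phase(pod, "Running")


workers = ["worker-1", "worker-2"]
api = APIServer()
api.create_pod("manik-example")  # 1. request reaches the API server, state is stored
scheduler_pass(api, workers)     # 2. scheduler assigns a node
for node in workers:             # 3. kubelets start the pods bound to them
    kubelet_pass(api, node)
print(api.store["manik-example"])  # {'node': 'worker-1', 'phase': 'Running'}
```

Notice that no component calls another directly. Each one only reads and writes state through the API server, which is the same pattern the real components follow.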

I hope this blog helps you understand the core architecture of Kubernetes. There is much more to k8s, but for a beginner this is enough to start learning it. Happy learning and bye-bye!

Written by

Manik Mehta

I am Manik Mehta, a student at the Indian Institute of Technology Guwahati, India. I am interested in Java and Android development. I hope to contribute to the coding community through my blogs and projects.