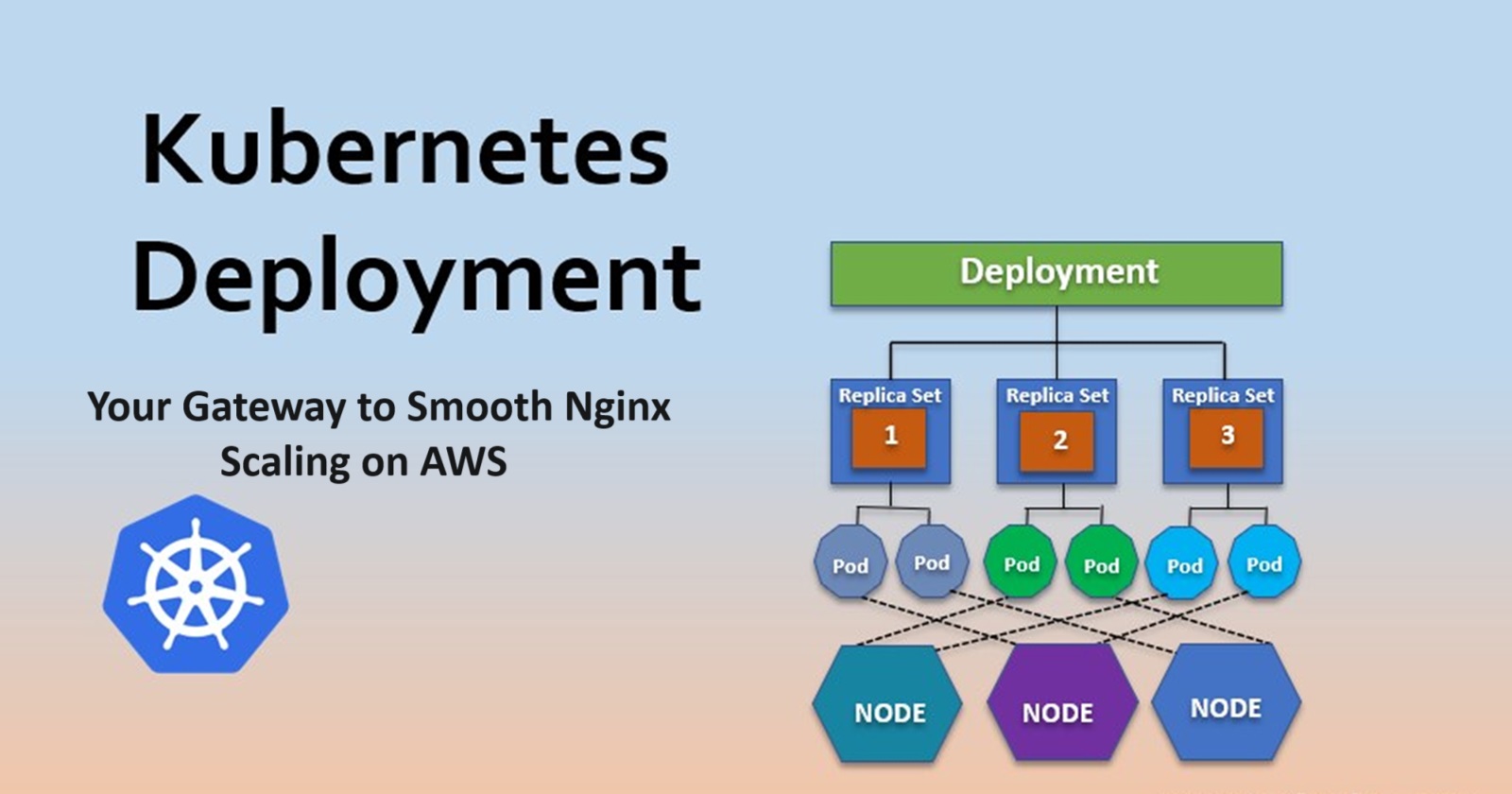

🚀Mastering Kubernetes Deployments: Achieve Flawless Nginx Delivery on AWS🚀

Sprasad Pujari

In the world of containerized applications, Kubernetes has become the de facto standard for orchestrating and managing container workloads. While Pods are the basic building blocks for running containers, Deployments add the higher-level management needed to run, update, and scale applications reliably. Here's why Kubernetes Deployments are essential and how they streamline the application delivery process. 💻

Understanding the Basics 📚

Before diving into Deployments, let's quickly recap the key concepts:

Container: A lightweight, standalone package containing an application and its dependencies, created using platforms like Docker.

Pod: The smallest deployable unit in Kubernetes, consisting of one or more containers that share resources like networking and storage.

Why Deployments? 🤔

While Pods allow you to run containers, a bare Pod has no controller behind it. If it is deleted or its node fails, nothing recreates it, and it offers no built-in way to scale out or to roll out a new version gradually. This is where Deployments come into play. 🌟
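For contrast, here is a minimal sketch of a standalone Pod. The name `nginx-pod` and the label `app: nginx-standalone` are hypothetical (a distinct label keeps it from being picked up by the Service created later). No controller owns this Pod: the kubelet restarts its container in place if the process exits, but if the Pod itself is deleted or its node is lost, nothing brings it back.

```yaml
# Hypothetical standalone Pod: no Deployment or ReplicaSet manages it.
apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod               # hypothetical name
  labels:
    app: nginx-standalone       # hypothetical label, distinct from app: nginx
spec:
  containers:
    - name: nginx
      image: nginx:latest
      ports:
        - containerPort: 80
```

If you applied this and then ran `kubectl delete pod nginx-pod`, the Pod would simply be gone, the opposite of the self-healing demo later in this post.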

Deployments provide the following key advantages:

High Availability: A Deployment continuously works to keep the desired number of replica Pods running, providing resilience against Pod failures or unexpected downtime. 🔁

Rolling Updates: Deployments roll out changes incrementally, replacing old Pods with new ones a few at a time, so updates can complete without downtime as long as the new Pods become ready (readiness probes help here). 🆙

Rollbacks: If an update fails or introduces issues, Deployments make it easy to roll back to a previous stable version, minimizing disruptions. ⏪

Scaling: Deployments make it easy to scale an application by changing the number of replica Pods, either manually (for example with kubectl scale) or automatically with a HorizontalPodAutoscaler. ⬆️⬇️

Self-Healing: If a Pod is deleted or its node fails, the Deployment (through the ReplicaSet it manages) automatically creates a replacement, and crashed containers are restarted by the kubelet, keeping the application available. 🚑
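As a rough sketch of where these advantages live in a manifest, the Deployment below spells out the relevant fields. The name `example-app` and the image `example/app:1.0` are placeholders, and the `revisionHistoryLimit` and `strategy` values shown are the Kubernetes defaults written out explicitly. High availability and self-healing need no extra field: they come from the ReplicaSet continuously reconciling toward `replicas`.

```yaml
# Sketch with placeholder names; revisionHistoryLimit and strategy are the defaults.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: example-app              # hypothetical name
spec:
  replicas: 3                    # high availability / scaling: desired Pod count
  revisionHistoryLimit: 10       # rollbacks: old ReplicaSets kept for `kubectl rollout undo`
  strategy:
    type: RollingUpdate          # rolling updates: replace Pods incrementally
    rollingUpdate:
      maxSurge: 25%              # extra Pods allowed above `replicas` during an update
      maxUnavailable: 25%        # Pods allowed to be unavailable during an update
  selector:
    matchLabels:
      app: example-app           # must match the Pod template labels below
  template:
    metadata:
      labels:
        app: example-app
    spec:
      containers:
        - name: app
          image: example/app:1.0 # hypothetical image
```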

Real-Time Example 🌍

Let's consider a scenario where you're deploying a web application with a front-end and a back-end component. With Deployments, you can:

Initial Deployment: Create separate Deployments for the front-end and back-end components, specifying the desired number of replicas for each. 🌐

Rolling Updates: Whenever a new version of the application is ready, update the corresponding Deployment, and Kubernetes will orchestrate a rolling update, gradually replacing the old Pods with new ones and avoiding downtime as long as the new Pods start up healthy. 🔄

Scaling: During peak traffic periods, you can scale up the Deployments by increasing the number of replicas, ensuring your application can handle the increased load. Conversely, you can scale down during off-peak hours to optimize resource utilization. ⬆️⬇️

Self-Healing: If a Pod crashes due to an unexpected issue, the Deployment will automatically spin up a new Pod to maintain the desired replica count, ensuring your application remains highly available. 🚑
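The two-Deployment layout described above could look roughly like this. Everything here is hypothetical: the names `frontend` and `backend`, the images, the replica counts, and the back-end port `8080`. Each Deployment is rolled out, scaled, and healed independently, which is the point of splitting them; the `---` separator lets both live in one file for `kubectl apply -f`.

```yaml
# Hypothetical front-end and back-end Deployments in one file.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: frontend
spec:
  replicas: 3                           # front end sized for user traffic
  selector:
    matchLabels:
      app: frontend
  template:
    metadata:
      labels:
        app: frontend
    spec:
      containers:
        - name: frontend
          image: example/frontend:1.0   # placeholder image
          ports:
            - containerPort: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: backend
spec:
  replicas: 2                           # back end scaled independently
  selector:
    matchLabels:
      app: backend
  template:
    metadata:
      labels:
        app: backend
    spec:
      containers:
        - name: backend
          image: example/backend:1.0    # placeholder image
          ports:
            - containerPort: 8080       # assumed back-end port
```

Scaling one tier, for example with `kubectl scale deployment frontend --replicas=5`, leaves the other untouched.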

By leveraging Kubernetes Deployments, you can confidently manage and deliver your applications with features like rolling updates, rollbacks, auto-scaling, and self-healing capabilities, ensuring a smooth and efficient application lifecycle. 🎉

Deploying Nginx on AWS Using Kubernetes Deployments 🚀🌏

Are you ready to take your web application hosting game to the next level? In this blog, we'll explore how to deploy the popular Nginx web server on AWS using Kubernetes Deployments. Buckle up, because we're about to embark on an exciting journey through the world of containerized application deployment! 🚢

Prerequisites ⚙️

Before we dive in, make sure you have the following prerequisites in place:

🐳 A Kubernetes cluster running on AWS (You can use services like Amazon EKS or roll out your own self-managed cluster on EC2 instances)

🔑 AWS CLI configured with appropriate credentials and permissions, and kubectl installed and configured to access your cluster

📋 Basic knowledge of Kubernetes and its core concepts (Pods, Deployments, Services, etc.)
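If you don't have a cluster yet, one common option (not covered further in this post) is the `eksctl` CLI, which can create an Amazon EKS cluster from a config file. This sketch assumes eksctl is installed; the cluster name, region, node group name, instance type, and node counts are all placeholders to adjust. It would be created with `eksctl create cluster -f cluster.yaml`.

```yaml
# Hypothetical eksctl config (cluster.yaml); all values are placeholders.
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig
metadata:
  name: nginx-demo          # hypothetical cluster name
  region: us-east-1         # pick your own region
managedNodeGroups:
  - name: workers           # hypothetical node group name
    instanceType: t3.medium
    desiredCapacity: 2      # worker nodes to start with
    minSize: 2
    maxSize: 3
```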

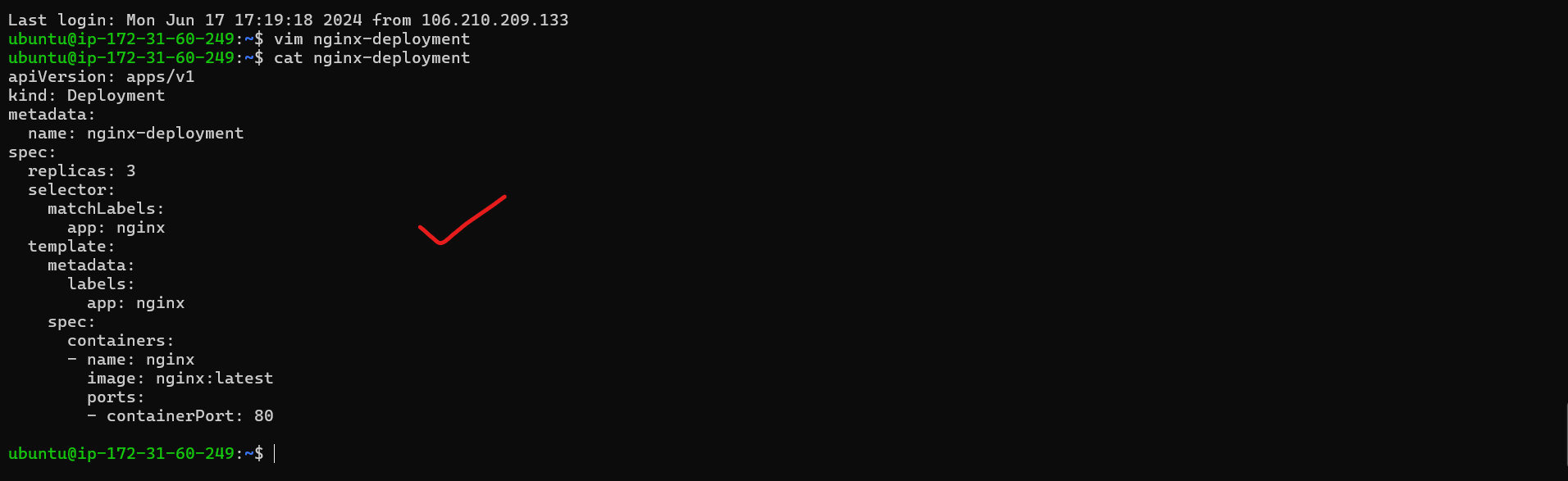

Step 1: Creating the Nginx Deployment 🛠️

Let's start by creating a Kubernetes Deployment for Nginx. We'll define the desired state of our application in a YAML file named nginx-deployment.yaml:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:latest
          ports:
            - containerPort: 80
```

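Here's what's happening:

🔢 We're specifying three replicas of the Nginx Pods for high availability.

🏷️ The `app: nginx` label lets the Deployment's selector find and manage its Pods; the selector and the Pod template labels must match.

🖼️ The `nginx:latest` image from Docker Hub is used to create the container. For anything beyond a demo, pinning a specific version tag makes deployments reproducible.

🚪 The container declares port 80 for incoming web traffic. `containerPort` is mostly informational; the Service created in Step 2 is what actually routes traffic to it.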
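The manifest above is enough for this demo. As an optional refinement (my assumption, not something the rest of the walkthrough relies on), the container spec can declare a readiness probe, so a new Pod only receives Service traffic once Nginx answers on `/`, plus CPU and memory requests, which the scheduler uses for placement and which CPU-based autoscaling needs. The probe timings and request values are illustrative guesses.

```yaml
# Partial nginx-deployment.yaml: only the Pod template's container is shown.
spec:
  template:
    spec:
      containers:
        - name: nginx
          image: nginx:latest
          ports:
            - containerPort: 80
          readinessProbe:          # Pod joins Service endpoints only after this succeeds
            httpGet:
              path: /              # the default Nginx welcome page returns HTTP 200
              port: 80
            initialDelaySeconds: 2
            periodSeconds: 5
          resources:
            requests:
              cpu: 100m            # illustrative values; tune for your workload
              memory: 64Mi
```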

To create the Deployment, run the following command:

```bash
kubectl apply -f nginx-deployment.yaml
```

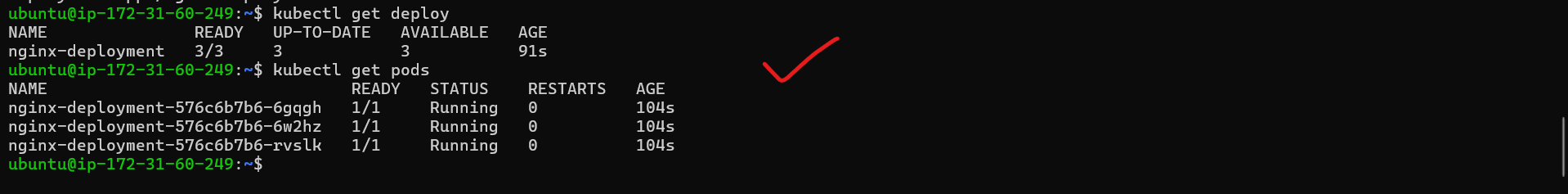

To check the Deployment and Pod status:

```bash
kubectl get deploy
kubectl get pods
```

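Now let's watch self-healing in action. Delete one of the Pods (replace the Pod name below with one from your own kubectl get pods output):

```bash
kubectl delete pod nginx-deployment-576c6b7b6-l9dh2
```

Then watch the Pods to see the Deployment create a replacement:

```bash
kubectl get pods -w
```

The -w flag means watch: kubectl keeps streaming changes until you press Ctrl+C.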

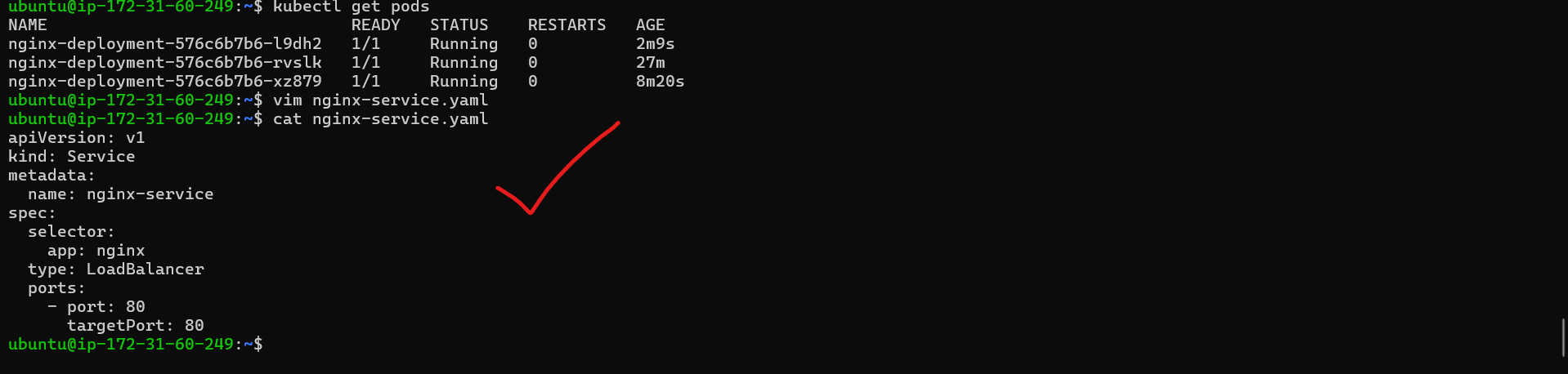

Step 2: Exposing the Nginx Service 🌐

While the Deployment ensures that the desired number of Pods are running, we still need to make Nginx reachable from outside the Kubernetes cluster. We'll do this by creating a Kubernetes Service, saved as nginx-service.yaml:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
spec:
  selector:
    app: nginx
  type: LoadBalancer
  ports:
    - port: 80
      targetPort: 80
```

Here's what's happening:

🎯 The `selector` matches the Pods carrying the `app: nginx` label, i.e., the Pods managed by the Deployment.

🚚 `type: LoadBalancer` provisions an AWS Elastic Load Balancer (ELB) to distribute traffic across the Nginx Pods.

🔑 `port: 80` exposes the Service on port 80, forwarding traffic to `targetPort: 80` on the Nginx containers.
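In this Service, `port` and `targetPort` happen to be equal, which can hide the difference between them. A hypothetical variant where the load balancer listens on 8080 but still forwards to port 80 on the Nginx Pods would look like this; the name `nginx-service-8080` is made up for illustration.

```yaml
# Hypothetical variant: clients connect on 8080, Pods still serve on 80.
apiVersion: v1
kind: Service
metadata:
  name: nginx-service-8080   # hypothetical name
spec:
  type: LoadBalancer
  selector:
    app: nginx
  ports:
    - name: http
      protocol: TCP
      port: 8080             # port exposed by the Service / load balancer
      targetPort: 80         # containerPort on the Nginx Pods
```

With this variant you would browse to the load balancer's DNS name on port 8080.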

To create the Service, run:

```bash
kubectl apply -f nginx-service.yaml
```

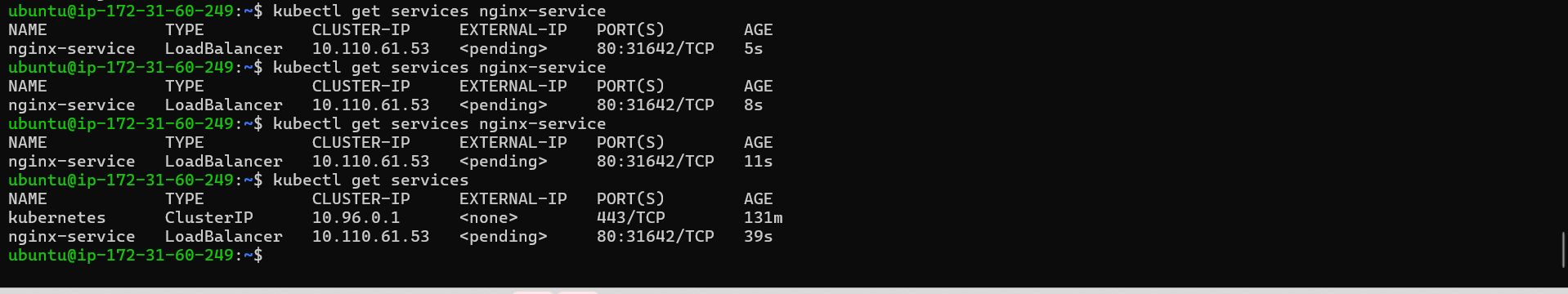

Step 3: Accessing the Nginx Service 🌐

After you create the Service, AWS provisions the load balancer and assigns it a public DNS name; this can take a few minutes. To find the DNS name, run:

```bash
kubectl get services nginx-service
```

Look at the EXTERNAL-IP column in the output. On AWS it shows the load balancer's public DNS name rather than an IP address, and it reads `<pending>` until provisioning finishes.

You can now access your Nginx web server by navigating to the DNS name in your web browser. You should see the default Nginx welcome page. 🎉

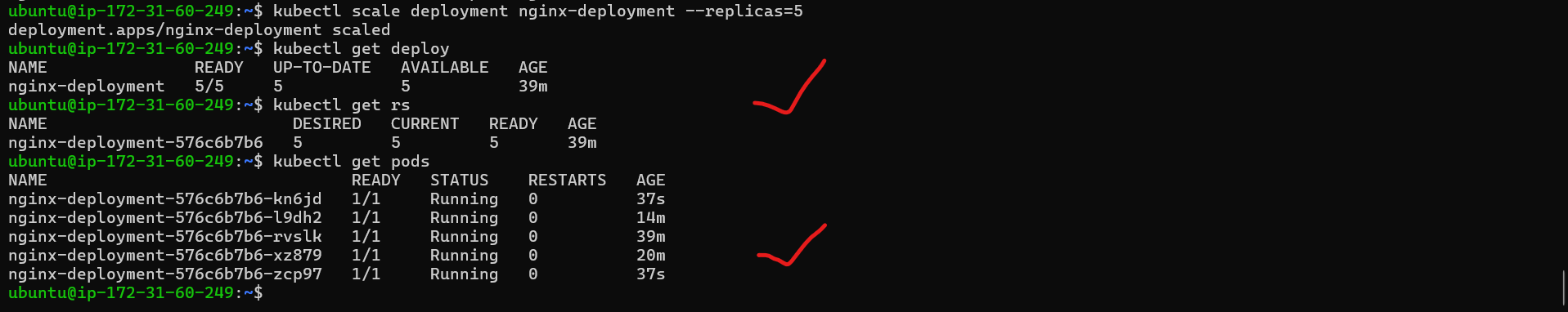

Step 4: Scaling and Updating the Deployment ⬆️🔄

One of the key benefits of Kubernetes Deployments is the ability to scale and update your application seamlessly. Let's see how it's done:

- Scaling the Deployment: To scale the number of Nginx Pods, run:

```bash
kubectl scale deployment nginx-deployment --replicas=5
```

This will update the Deployment to maintain five replicas of the Nginx Pods. Kubernetes will automatically spin up the additional Pods needed to meet the new desired state.

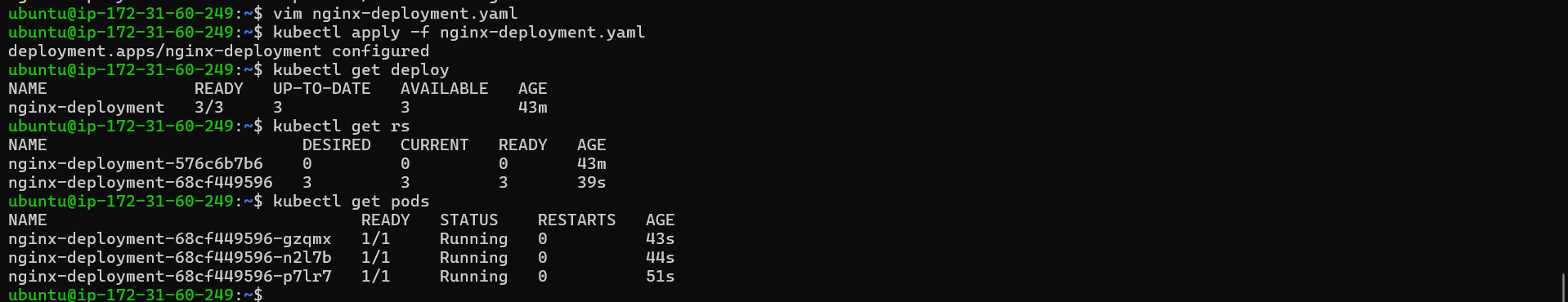
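Besides `kubectl scale`, the replica count can be adjusted automatically with a HorizontalPodAutoscaler. This is a sketch under assumptions: it needs the Kubernetes Metrics Server, which may not be installed on your cluster by default, and CPU requests on the Nginx container, which the Step 1 manifest does not set. The name `nginx-hpa`, the 2 to 10 replica range, and the 70% CPU target are arbitrary example values. Once an HPA manages the Deployment, it overrides manual `kubectl scale` changes.

```yaml
# Sketch: HPA for nginx-deployment; thresholds are example values.
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: nginx-hpa               # hypothetical name
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: nginx-deployment      # the Deployment created in Step 1
  minReplicas: 2
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70   # percent of each container's CPU request
```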

- Updating the Deployment: Let's say you want to run a specific version of the Nginx image. Update the image field in nginx-deployment.yaml (it lives under spec.template.spec.containers) and apply the change:

```yaml
# Updated section of nginx-deployment.yaml (other fields unchanged)
spec:
  template:
    spec:
      containers:
        - name: nginx
          image: nginx:1.19.2 # Updated image tag
```

Then, run:

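```bash
kubectl apply -f nginx-deployment.yaml
```

Kubernetes then performs a rolling update, gradually replacing the old Pods with new ones running the updated Nginx image, so the site stays available as long as the new Pods start successfully. You can follow the progress with kubectl rollout status deployment/nginx-deployment, and if the new version misbehaves, kubectl rollout undo deployment/nginx-deployment returns to the previous revision.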
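Because Deployments keep earlier revisions for rollbacks, it can help to label each revision. One approach, sketched here as an assumption about your workflow, is to set the `kubernetes.io/change-cause` annotation in the Deployment's metadata before applying the update; `kubectl rollout history deployment/nginx-deployment` then shows it in the CHANGE-CAUSE column. The message text is a placeholder.

```yaml
# Partial nginx-deployment.yaml: only the fields relevant to the update are shown.
metadata:
  name: nginx-deployment
  annotations:
    kubernetes.io/change-cause: "Update nginx image to 1.19.2"  # placeholder message
spec:
  template:
    spec:
      containers:
        - name: nginx
          image: nginx:1.19.2
```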

Wrapping Up 🏁

Congratulations! You've successfully deployed Nginx on AWS using Kubernetes Deployments. This blog covered the entire process, from creating the Deployment and Service to scaling and updating your application.

Kubernetes Deployments provide a powerful and flexible way to manage and orchestrate your containerized applications on AWS. With the knowledge gained from this blog, you're now equipped to take on more complex deployment scenarios and unleash the full potential of Kubernetes on AWS. 🚀

Happy deploying, and may your web applications always be highly available and scalable! 🎉

Thank you for joining me on this journey through the world of cloud computing! Your interest and support mean a lot to me, and I'm excited to continue exploring this fascinating field together. Let's stay connected and keep learning and growing as we navigate the ever-evolving landscape of technology.

LinkedIn Profile: https://www.linkedin.com/in/prasad-g-743239154/

Feel free to reach out to me directly at spujari.devops@gmail.com. I'm always open to hearing your thoughts and suggestions, as they help me improve and better cater to your needs. Let's keep moving forward and upward!

If you found this blog post helpful, please consider showing your support by giving it a round of applause👏👏👏. Your engagement not only boosts the visibility of the content, but it also lets other DevOps and Cloud Engineers know that it might be useful to them too. Thank you for your support! 😀

Thank you for reading and happy deploying! 🚀

Best Regards,

Sprasad

Greetings! I'm Sprasad P, a DevOps Engineer with a passion for optimizing development pipelines, automating processes, and enabling teams to deliver software faster and more reliably.