Finding the Best Model For You - A Guide to Grid Search CV

Ali Vijdaan

I remember starting out as a data scientist and spending hours changing hyper-parameters by hand. It was frustrating. It was time-consuming. Sometimes I would switch to a different model altogether. That was until I started using GridSearchCV.

What Is GridSearchCV?

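GridSearchCV is a class in scikit-learn's sklearn.model_selection module built for hyper-parameter tuning. You give it an estimator and a grid of candidate values for each hyper-parameter. It trains and scores every combination with cross-validation and, by default, refits the best one on the full training set. I was first introduced to it in Aurelien Geron's Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow.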
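To make that concrete, here is a rough, hand-rolled sketch of the idea GridSearchCV automates: try every combination with itertools.product, score each with cross_val_score, and refit the winner. The synthetic dataset from make_classification and the small grid are assumptions for illustration, and this is not how scikit-learn implements the class internally.

```python
from itertools import product

from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score

# Synthetic data standing in for a real training set (illustrative assumption)
X, y = make_classification(n_samples=300, n_features=8, random_state=0)

param_grid = {'n_estimators': [50, 100], 'max_features': [2, 4]}

best_score, best_params = -1.0, None
# Try every combination of the candidate values
for n_est, max_feat in product(param_grid['n_estimators'], param_grid['max_features']):
    model = RandomForestClassifier(n_estimators=n_est, max_features=max_feat,
                                   random_state=0)
    # Mean accuracy over 3 cross-validation folds
    score = cross_val_score(model, X, y, cv=3, scoring='accuracy').mean()
    if score > best_score:
        best_score = score
        best_params = {'n_estimators': n_est, 'max_features': max_feat}

# Refit the winning combination on all the data, as GridSearchCV does by default
best_model = RandomForestClassifier(**best_params, random_state=0).fit(X, y)
print(best_params, round(best_score, 3))
```

GridSearchCV does the same bookkeeping for you, and also records every score so you can inspect them afterwards.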

Implementing GridSearchCV

Suppose you have a Random Forest Classifier and want to tune its hyper-parameters. Implementing GridSearchCV would look something like this.

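```python
from sklearn.model_selection import GridSearchCV
from sklearn.ensemble import RandomForestClassifier

# Candidate values for each hyper-parameter: 3 x 4 = 12 combinations
param_grid = {'n_estimators': [50, 100, 150],
              'max_features': [2, 4, 6, 8]}

forest_clf = RandomForestClassifier()

# cv=3: each combination is scored with 3-fold cross-validation
grid_search = GridSearchCV(forest_clf, param_grid, cv=3,
                           scoring='accuracy',
                           return_train_score=True)

# X_train, y_train: your training features and labels
grid_search.fit(X_train, y_train)
```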

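In the code above, param_grid maps each hyper-parameter to the values you want to try. GridSearchCV evaluates every combination (3 × 4 = 12 here) with cross-validation, and cv sets the number of folds. With cv=3 that is 36 fits, plus one final refit of the best combination on the whole training set.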
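If you want to know how expensive a grid is before running it, scikit-learn's ParameterGrid expands a parameter dictionary into the same list of candidates. A minimal sketch, reusing the grid above and assuming cv=3:

```python
from sklearn.model_selection import ParameterGrid

param_grid = {'n_estimators': [50, 100, 150],
              'max_features': [2, 4, 6, 8]}
cv = 3

# Every combination of the candidate values, as dictionaries
candidates = list(ParameterGrid(param_grid))
# Each candidate is trained once per fold
n_fits = len(candidates) * cv

print(len(candidates))  # 12
print(n_fits)           # 36
```

Since the count multiplies with every value you add, it pays to start with a coarse grid and refine around the best values.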

Implementing GridSearchCV for Multiple Models and Scoring

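GridSearchCV can also evaluate several scoring metrics in one search. It does not take multiple models directly, but a simple loop over a dictionary of models does the job.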

```python
from sklearn.model_selection import GridSearchCV
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import SGDClassifier

sgd_clf = SGDClassifier()
rf_clf = RandomForestClassifier()

# Each entry maps a name to (estimator, hyper-parameter grid)
models = {
    'SGD': (sgd_clf,
            {'loss': ['hinge', 'log_loss'],
             'penalty': ['l2', None]}),
    'RandomForest': (rf_clf,
                     {'n_estimators': [100, 150, 200, 250],
                      'max_features': ['sqrt', 'log2']})
}

# Every candidate is evaluated on all of these metrics
scoring = {
    'accuracy': 'accuracy',
    'roc_auc': 'roc_auc'
}

for model_name, (model, param_grid) in models.items():
    grid_search = GridSearchCV(model, param_grid, cv=5,
                               scoring=scoring,
                               return_train_score=True,
                               refit='accuracy')  # metric that picks the best parameters
    grid_search.fit(X_train, y_train)
```

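Storing each model together with its parameter grid in a dictionary lets a single loop run one grid search per model, while passing a dictionary of metrics to scoring evaluates every candidate on all of them. With more than one metric, refit must name the metric that decides which parameters are best (here, accuracy).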

To report the best parameters for each model, add the following lines inside the for loop, after grid_search.fit:

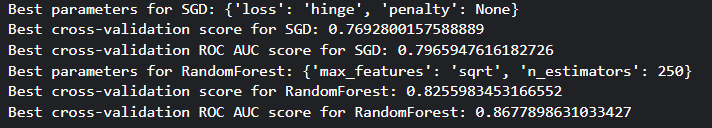

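```python
print(f"Best parameters for {model_name}: {grid_search.best_params_}")
# best_score_ is the mean cross-validated score of the refit metric (accuracy here)
print(f"Best cross-validation score for {model_name}: {grid_search.best_score_}")
# ROC AUC of the accuracy-best candidate, not necessarily the highest ROC AUC in the grid
print(f"Best cross-validation ROC AUC score for {model_name}: {grid_search.cv_results_['mean_test_roc_auc'][grid_search.best_index_]}")
```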

Running this on the Titanic dataset prints the best parameters, cross-validated accuracy, and ROC AUC for each model.

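To get the best model, already refit on the full training set with its best hyper-parameters, use the following code. Note that grid_search is reassigned on every pass through the loop, so after the loop it only holds the search for the last model in the dictionary.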

```python
final_model = grid_search.best_estimator_
```

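To see how much each feature contributes to the best model, use its feature importances. Only tree-based estimators such as RandomForestClassifier have a feature_importances_ attribute; a linear model like SGDClassifier exposes coef_ instead.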

```python
feature_importances = grid_search.best_estimator_.feature_importances_
```
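On its own, feature_importances_ is a bare array, so it helps to pair it with the feature names and sort. A minimal sketch on synthetic data, where the column names feature_0 to feature_4 are hypothetical (with a pandas DataFrame you would use X.columns):

```python
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV

# Synthetic data (illustrative assumption)
X, y = make_classification(n_samples=300, n_features=5, n_informative=3,
                           random_state=0)
# Hypothetical column names for the synthetic features
feature_names = [f'feature_{i}' for i in range(X.shape[1])]

grid_search = GridSearchCV(RandomForestClassifier(random_state=0),
                           {'n_estimators': [50, 100]}, cv=3)
grid_search.fit(X, y)

# Label each importance with its feature and sort from most to least important
importances = pd.Series(grid_search.best_estimator_.feature_importances_,
                        index=feature_names).sort_values(ascending=False)
print(importances)
```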

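For a more comprehensive analysis, cv_results_ holds the full results of a search: for every hyper-parameter combination, the mean and standard deviation of each metric across the folds, the per-fold scores, fit times, and rankings.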

```python
cv_result = grid_search.cv_results_
```
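cv_results_ is a dictionary of arrays, which is easier to read as a pandas DataFrame with one row per combination. A minimal sketch on synthetic data with a small grid (both assumptions for illustration); with multiple metrics, the column names follow the pattern mean_test_<metric>, std_test_<metric>, and rank_test_<metric>:

```python
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV

# Synthetic data (illustrative assumption)
X, y = make_classification(n_samples=300, n_features=8, random_state=0)

grid_search = GridSearchCV(RandomForestClassifier(random_state=0),
                           {'n_estimators': [50, 100], 'max_features': [2, 4]},
                           cv=3,
                           scoring={'accuracy': 'accuracy', 'roc_auc': 'roc_auc'},
                           refit='accuracy', return_train_score=True)
grid_search.fit(X, y)

# One row per hyper-parameter combination; rank 1 is the best by accuracy
cv_result = pd.DataFrame(grid_search.cv_results_)
cols = ['params', 'mean_test_accuracy', 'std_test_accuracy',
        'mean_test_roc_auc', 'mean_train_accuracy', 'rank_test_accuracy']
print(cv_result[cols].sort_values('rank_test_accuracy'))
```

Comparing mean_train_accuracy with mean_test_accuracy is a quick way to spot combinations that overfit.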

For a more detailed look at GridSearchCV in practice, visit my Kaggle notebook, where I apply it to the Titanic dataset.

print("Happy Coding!")
