Understanding Kubernetes Services: A Comprehensive Guide 🚀

Sprasad Pujari

In the world of containerized applications, Kubernetes has emerged as a powerful orchestration platform, enabling developers and DevOps teams to manage and scale their applications with ease. One of the critical components of Kubernetes is the Service, which plays a crucial role in exposing and accessing your applications within the cluster and beyond. In this blog, we'll break down Kubernetes Services in a simple and easy-to-understand manner, complete with real-world examples and a step-by-step project to help you grasp the concept better. 💫

Why Do We Need Services? 🤔

Imagine you're running a popular e-commerce website on Kubernetes, with multiple instances (Pods) of your application to handle the traffic load. Without Services, each Pod would have its own unique IP address, making it challenging to access your application consistently. Here's where Kubernetes Services come into play:

🔑 Services act as a stable entry point for accessing your application, abstracting away the underlying Pods' IP addresses and ports.

🔄 Services provide load balancing capabilities, distributing traffic across multiple Pods for enhanced reliability and scalability.

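🔐 Services let you expose applications selectively: a Service can be reachable only inside the cluster or opened to external clients. A Service does not encrypt or filter traffic on its own; network isolation is handled by other mechanisms such as NetworkPolicies.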

Without Services, every time a Pod is rescheduled or replaced, clients would need to update the IP address and port they're connecting to, which can be a daunting task, especially in large-scale environments. Services solve this problem by providing a consistent and reliable way to access your applications running in Kubernetes. 🎯

How Do Services Work? ⚙️

Services in Kubernetes operate by leveraging labels and selectors. When you create a Service, you define a selector: a set of labels that identifies the Pods it should target. Kubernetes continuously tracks which Pods match that selector and keeps the Service's list of endpoints up to date, so traffic is always routed to matching Pods. 🎯

Let's illustrate this with a real-world example: 🌍

Imagine you're running an e-commerce website on Kubernetes, and you have multiple replicas (Pods) of the application to handle traffic. Instead of exposing each Pod's IP address individually, you create a Service that targets all Pods with the label app=ecommerce. When a user accesses your website, the Service routes the request to one of the available Pods seamlessly. If a Pod goes down, the Service automatically redirects traffic to the remaining healthy Pods, ensuring uninterrupted service. 🛍️
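The label-and-selector relationship in this example can be sketched as a manifest. This is a minimal illustration, not the configuration of any real site: the name ecommerce-service and the port numbers are assumptions, and only the app=ecommerce label comes from the example.

```yaml
# Hypothetical Service for the e-commerce example.
apiVersion: v1
kind: Service
metadata:
  name: ecommerce-service   # assumed name, for illustration only
spec:
  # No "type" is set, so Kubernetes uses the default, ClusterIP.
  selector:
    app: ecommerce          # matches every Pod carrying this label
  ports:
    - protocol: TCP
      port: 80              # port the Service listens on (assumed)
      targetPort: 8080      # port the Pods' containers listen on (assumed)
```

Any Pod whose labels include app: ecommerce becomes a backend for this Service, so Pods added by scaling up are picked up without changing the Service itself.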

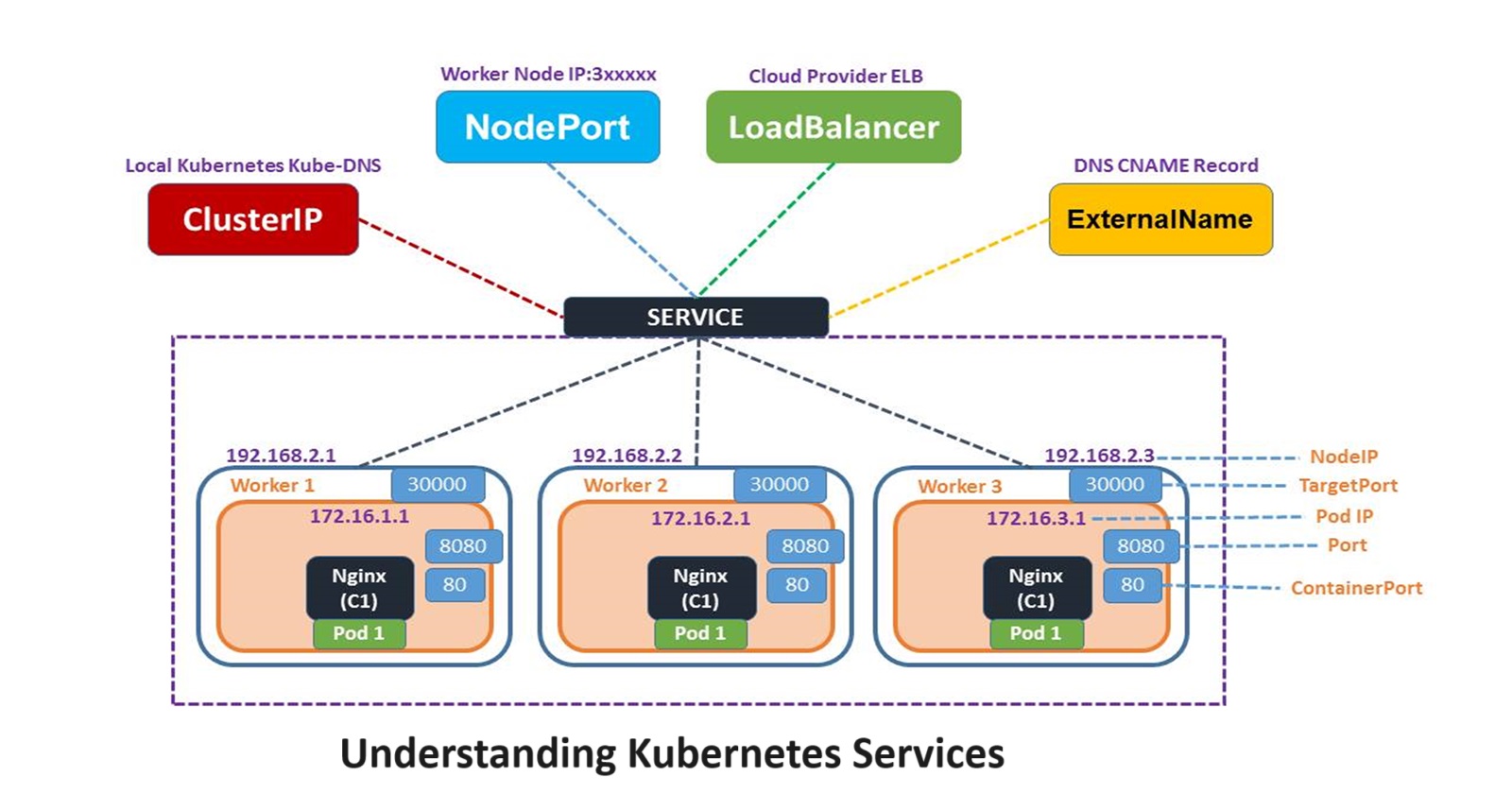

Types of Services in Kubernetes 🌐

Kubernetes offers several types of Services to cater to different use cases:

ClusterIP: This is the default Service type, which exposes the Service on an internal IP address reachable only from within the cluster. It's useful for internal communication between Pods or for applications that should not be publicly accessible.

NodePort: This type of Service exposes the application on the same static port (by default in the range 30000-32767) on every Node in the cluster. It's commonly used for exposing applications within an organization or for debugging purposes.

LoadBalancer: When using a cloud provider like AWS, GCP, or Azure, the LoadBalancer Service type provisions an external load balancer, allowing access to your application from the internet. This is ideal for exposing public-facing applications like websites or APIs.

ExternalName: This Service type maps a Service to an external DNS name by returning a CNAME record, so Pods can reach a service outside the cluster through a name inside it. No traffic is proxied through the cluster.

By understanding these Service types, you can choose the appropriate one based on your application's requirements and desired accessibility. 🔓
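To make the differences concrete, here is a rough sketch of three of these types side by side (a LoadBalancer Service appears in the project further down). Every name, label, port, and hostname below is hypothetical and chosen only for illustration.

```yaml
# ClusterIP: reachable only from inside the cluster.
apiVersion: v1
kind: Service
metadata:
  name: demo-internal        # hypothetical name
spec:
  type: ClusterIP            # the default; may be omitted
  selector:
    app: demo                # hypothetical label
  ports:
    - port: 80
      targetPort: 8080
---
# NodePort: additionally reachable on port 30080 of every Node.
apiVersion: v1
kind: Service
metadata:
  name: demo-nodeport        # hypothetical name
spec:
  type: NodePort
  selector:
    app: demo
  ports:
    - port: 80
      targetPort: 8080
      nodePort: 30080        # optional; must fall within the cluster's NodePort range
---
# ExternalName: in-cluster DNS lookups of "demo-external" return a CNAME.
apiVersion: v1
kind: Service
metadata:
  name: demo-external        # hypothetical name
spec:
  type: ExternalName
  externalName: db.example.com   # hypothetical external hostname
```

Note that the ExternalName Service has no selector and no ports: it is resolved purely through DNS.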

Real-World Project: Deploying a Node.js Application with Kubernetes Services 🚀

Now, let's put our knowledge into practice by deploying a simple Node.js application on a Kubernetes cluster and exposing it using Services. Follow along with the steps below:

- Set Up a Kubernetes Cluster: Begin by setting up a Kubernetes cluster on your local machine or on a cloud provider like AWS, GCP, or Azure.

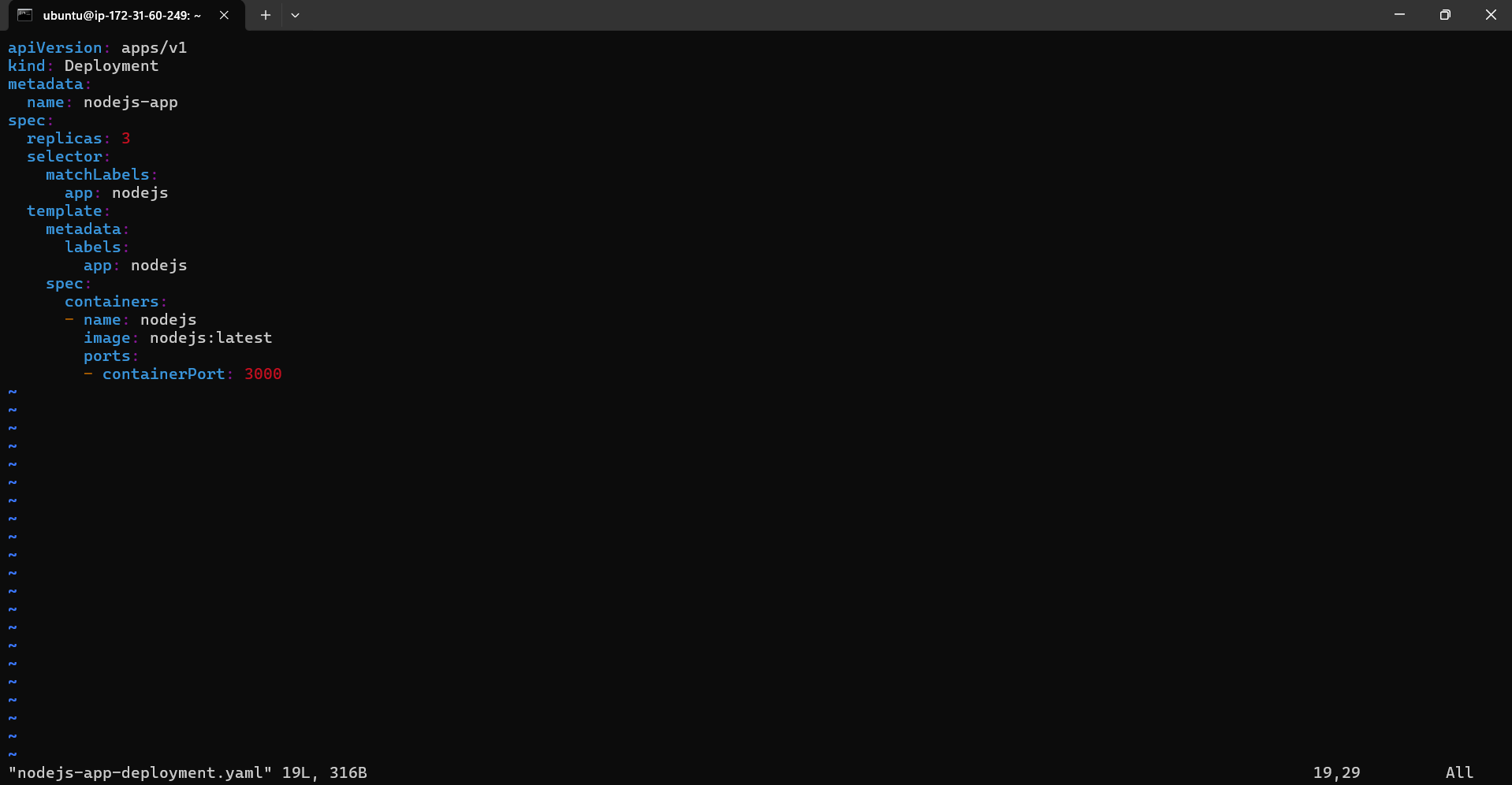

- Create Deployment: Define a Deployment for your Node.js application in a file called deployment.yaml. Here's an example:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nodejs-app
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nodejs
  template:
    metadata:
      labels:
        app: nodejs
    spec:
      containers:
        - name: nodejs
          image: nodejs:latest
          ports:
            - containerPort: 3000

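This Deployment creates three replicas (Pods) of your Node.js application, each labeled app: nodejs and listening on port 3000. The image name nodejs:latest is a placeholder; replace it with the image that contains your application.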
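A Service only routes traffic to Pods that Kubernetes considers ready, and a readiness probe is one way to make that signal meaningful. The fragment below is a sketch of how the container entry in deployment.yaml might be extended, assuming the application answers HTTP GET requests on / at port 3000. The path and the timing values are assumptions, not part of the original example.

```yaml
# Fragment of spec.template.spec in deployment.yaml (not a complete manifest).
containers:
  - name: nodejs
    image: nodejs:latest
    ports:
      - containerPort: 3000
    readinessProbe:
      httpGet:
        path: /                  # assumed endpoint; use one your app actually serves
        port: 3000
      initialDelaySeconds: 5     # assumed startup allowance
      periodSeconds: 10          # assumed check interval
```

A Pod that fails the probe is removed from the Service's endpoints until it passes again, so clients are not sent to an instance that cannot serve them yet.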

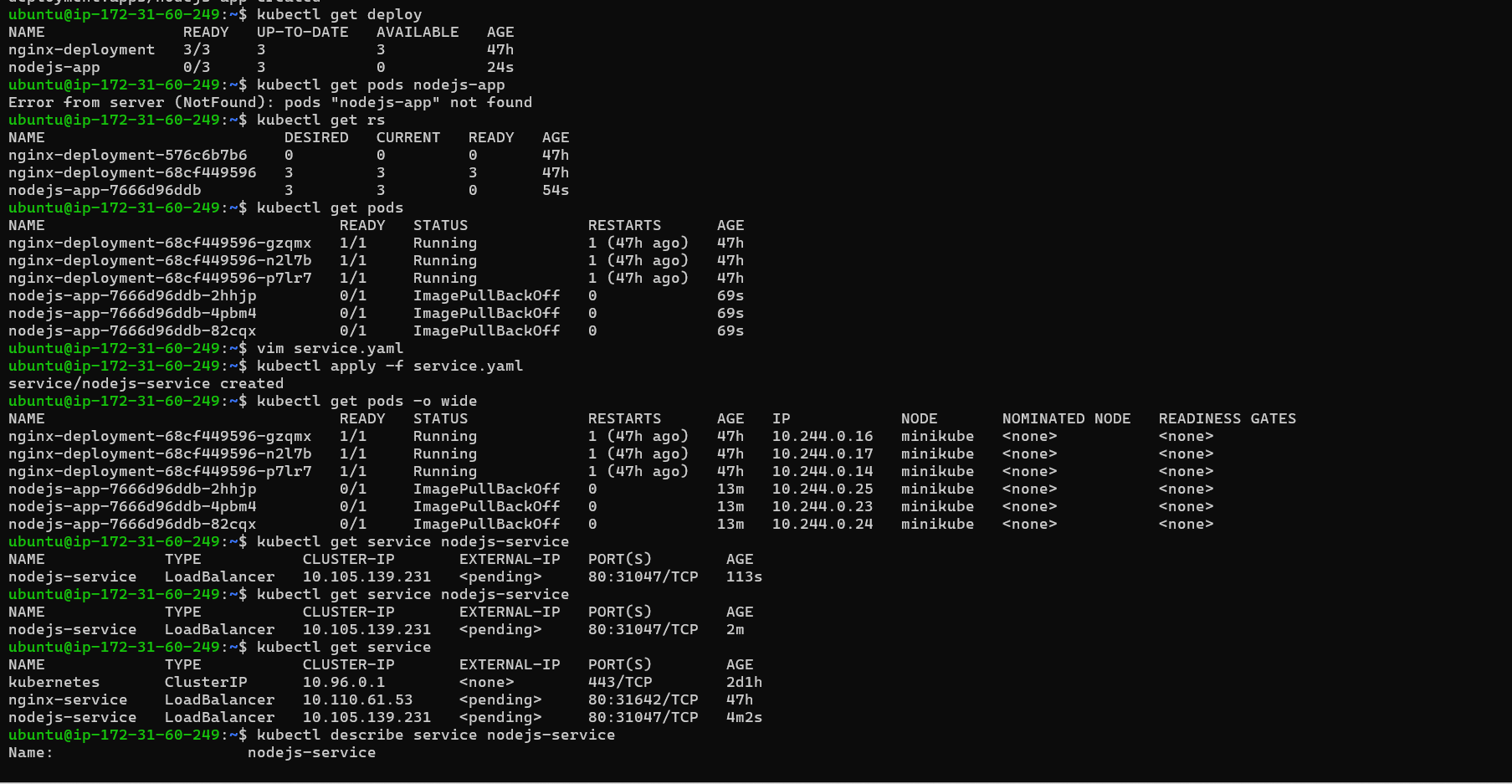

- Create Service: Next, create a Service to expose your application by defining it in a file called service.yaml:

apiVersion: v1
kind: Service
metadata:
  name: nodejs-service
spec:
  type: LoadBalancer
  selector:
    app: nodejs
  ports:
    - port: 80
      targetPort: 3000

This Service uses the LoadBalancer type: it accepts traffic on port 80 and forwards it to port 3000 on any Pod labeled app: nodejs, making the application accessible from the internet.

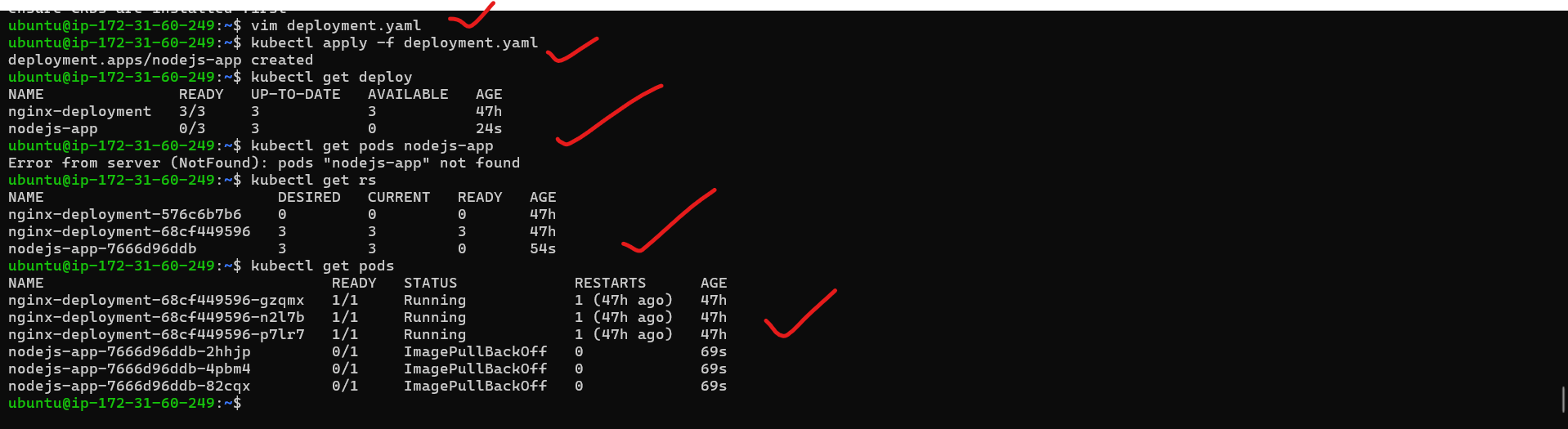

- Deploy to Kubernetes: Apply the Deployment and Service to your Kubernetes cluster using the following commands:

kubectl apply -f deployment.yaml

kubectl apply -f service.yaml

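- Access Your Application: Once the Service is up and running, you can access your Node.js application using the external IP or hostname assigned to the LoadBalancer Service. To find it, run kubectl get service nodejs-service and check the EXTERNAL-IP column; it may show as pending for a short time while the cloud provider provisions the load balancer.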
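The same Service can also be reached from inside the cluster through its DNS name. As a rough sketch, a throwaway Pod like the one below could be used to check that; it assumes the Service lives in the default namespace and the cluster uses the default cluster.local domain. The Pod name service-check and the busybox image are choices made for this illustration.

```yaml
# Hypothetical one-off client Pod that fetches the app through the Service.
apiVersion: v1
kind: Pod
metadata:
  name: service-check            # assumed name, for illustration only
spec:
  restartPolicy: Never           # run once, do not restart
  containers:
    - name: client
      image: busybox:1.36
      # Service DNS names follow <service>.<namespace>.svc.cluster.local
      command: ["wget", "-qO-", "http://nodejs-service.default.svc.cluster.local"]
```

Once the Pod has completed, its logs should contain whatever the application returned on port 80 of the Service.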

By following these steps, you've successfully deployed a Node.js application on Kubernetes and exposed it using a LoadBalancer Service, making it accessible from anywhere in the world! 🌍

Conclusion 🎉

Kubernetes Services are a vital component that enables reliable and scalable application deployment within a Kubernetes cluster. By providing load balancing, service discovery, and a stable network endpoint, Services ensure that your applications remain accessible and highly available, even in the face of dynamic Pod rescheduling or failures.

Whether you're running a small-scale application or a large-scale, mission-critical system, understanding Kubernetes Services is essential for effectively managing and exposing your applications in a Kubernetes environment. With this knowledge, you can confidently design and deploy resilient and scalable applications that meet your business requirements. 🚀

So, embrace the power of Kubernetes Services and unlock the full potential of your applications in the cloud-native world! 🌟

Thank you for joining me on this journey through the world of cloud computing! Your interest and support mean a lot to me, and I'm excited to continue exploring this fascinating field together. Let's stay connected and keep learning and growing as we navigate the ever-evolving landscape of technology.

LinkedIn Profile: https://www.linkedin.com/in/prasad-g-743239154/

Feel free to reach out to me directly at spujari.devops@gmail.com. I'm always open to hearing your thoughts and suggestions, as they help me improve and better cater to your needs. Let's keep moving forward and upward!

If you found this blog post helpful, please consider showing your support by giving it a round of applause👏👏👏. Your engagement not only boosts the visibility of the content, but it also lets other DevOps and Cloud Engineers know that it might be useful to them too. Thank you for your support! 😀

Thank you for reading and happy deploying! 🚀

Best Regards,

Sprasad

Written by

Sprasad Pujari

Greetings! I'm Sprasad P, a DevOps Engineer with a passion for optimizing development pipelines, automating processes, and enabling teams to deliver software faster and more reliably.