[AWS] Lambda - URL Invoking + Terraform Project 11

Mohamed El Eraki

Inception

Hello everyone. This article is part of the Terraform + AWS series. It does not depend on the previous articles; I use this series to publish projects and knowledge.

Overview

Hello everyone. Lambda function URLs provide a very useful capability for invoking your function seamlessly: you can invoke your function through its HTTP(S) endpoint via a web browser, curl, Postman, or any HTTP client. To invoke a function URL, you must have lambda:InvokeFunctionUrl permissions.

A function URL is a dedicated HTTP(S) endpoint for your Lambda function. You can create and configure a function URL through the Lambda console or the Lambda API. When you create a function URL, Lambda automatically generates a unique URL endpoint for you. Once you create a function URL, its URL endpoint never changes. Function URL endpoints have the following format:

https://<url-id>.lambda-url.<region>.on.aws

If your function URL uses the AWS_IAM auth type, you must sign each HTTP request using AWS Signature Version 4 (SigV4). Tools such as awscurl, Postman, and AWS SigV4 Proxy offer built-in ways to sign your requests with SigV4.

If you don't use a tool to sign HTTP requests to your function URL, you must manually sign each request using SigV4. When your function URL receives a request, Lambda also calculates the SigV4 signature. Lambda processes the request only if the signatures match. For instructions on how to manually sign your requests with SigV4, see Signing AWS requests with Signature Version 4 in the Amazon Web Services General Reference Guide.
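To make the manual signing step a little more concrete, here is a minimal sketch of only the SigV4 signing-key derivation, using the Python standard library. It is not a complete signer: building the canonical request and the string to sign is left out, and the function names and placeholder credentials are my own assumptions for illustration. In practice a tool such as awscurl does all of this for you.

```python
import hashlib
import hmac


def _hmac_sha256(key: bytes, message: str) -> bytes:
    """Return the raw HMAC-SHA256 digest of message under key."""
    return hmac.new(key, message.encode("utf-8"), hashlib.sha256).digest()


def derive_signing_key(secret_key: str, date_stamp: str, region: str,
                       service: str = "lambda") -> bytes:
    """Derive the SigV4 signing key by chaining HMACs.

    date_stamp is the request date as YYYYMMDD. The service name
    defaults to "lambda", which is what function URLs are signed for.
    """
    k_date = _hmac_sha256(("AWS4" + secret_key).encode("utf-8"), date_stamp)
    k_region = _hmac_sha256(k_date, region)
    k_service = _hmac_sha256(k_region, service)
    return _hmac_sha256(k_service, "aws4_request")


def sign(signing_key: bytes, string_to_sign: str) -> str:
    """Return the hex signature of an already-built string to sign."""
    return hmac.new(signing_key, string_to_sign.encode("utf-8"),
                    hashlib.sha256).hexdigest()


if __name__ == "__main__":
    # Placeholder secret for illustration only; never hard-code real keys.
    key = derive_signing_key("EXAMPLE-SECRET-KEY", "20240101", "us-east-1")
    print(len(key), "byte signing key derived")
```

Lambda repeats the same derivation on its side, which is why the request is processed only when both signatures match.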

If your function URL uses the NONE auth type, you don't have to sign your requests using SigV4. You can invoke your function using a web browser, curl, Postman, or any HTTP client.

To test other HTTP requests, such as a POST request, you can use a tool such as curl. For example, if you want to include some JSON data in a POST request to your function URL, you could use the following curl command:

curl -v 'https://abcdefg.lambda-url.us-east-1.on.aws/?message=HelloWorld' \

-H 'content-type: application/json' \

-d '{ "example": "test" }'
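If you prefer Python over curl, the same kind of POST request can be sketched with the standard library alone. This is only an illustration for a function URL with the NONE auth type; the helper names build_post_request and invoke are hypothetical, and the URL is the placeholder from the curl example.

```python
import json
import urllib.request


def build_post_request(function_url: str, payload: dict) -> urllib.request.Request:
    """Build a JSON POST request for a function URL (auth type NONE)."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        function_url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def invoke(function_url: str, payload: dict, timeout: float = 30.0):
    """Send the request and return (status code, response text).

    urlopen raises urllib.error.HTTPError for 4xx/5xx responses,
    so callers may want to catch that separately.
    """
    request = build_post_request(function_url, payload)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status, response.read().decode("utf-8")


if __name__ == "__main__":
    # Placeholder URL taken from the curl example; nothing is sent here.
    req = build_post_request(
        "https://abcdefg.lambda-url.us-east-1.on.aws/?message=HelloWorld",
        {"example": "test"},
    )
    print(req.get_method(), req.full_url)
```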

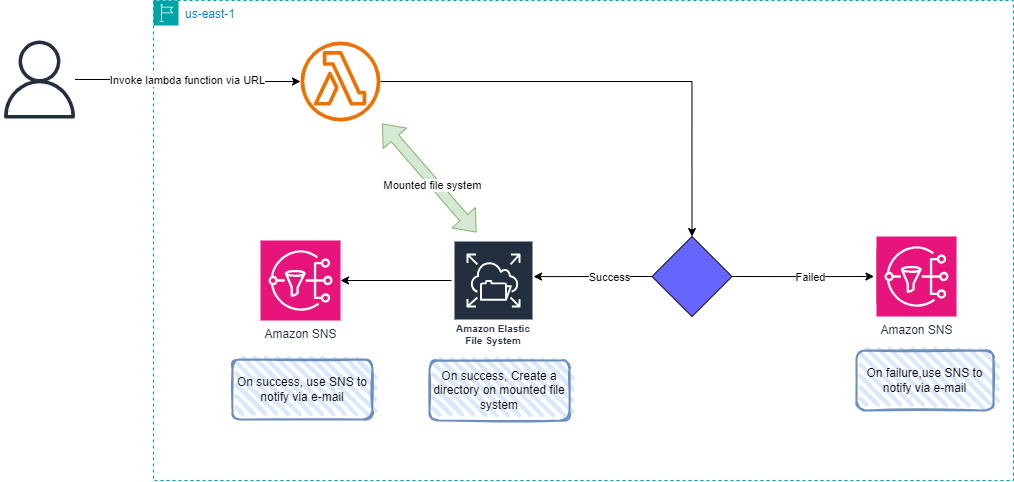

Today's project builds a Lambda function that mounts EFS storage and configures SNS (Simple Notification Service) as a destination for sending e-mails when the function succeeds or fails. We will enable the function URL, then invoke the function using the curl command. The project will be created using 𝑻𝒆𝒓𝒓𝒂𝒇𝒐𝒓𝒎.✨

Building-up Steps

The Architecture Design Diagram:

Building-up Steps details:

Today we will build a Lambda function, EFS storage, and an SNS topic. The infrastructure will be built using 𝑻𝒆𝒓𝒓𝒂𝒇𝒐𝒓𝒎.✨

The Lambda function will mount the EFS storage. We will then use the curl command to send a JSON payload that includes a directory name to the function, and the function will create that directory on the mounted EFS.

The deployment steps are as follows: ⭐

- Create the function's Python script.
- Configure the provider.
- Deploy a Python Lambda layer.
  - 💡 I uploaded the layer package to an S3 bucket earlier and reference it in the code. This is a good practice because it avoids uploading the package every time we run the code.
  - 💡 If we put the package directly into the Terraform code, it affects the deployment time on every terraform apply, because each run tries to upload the package again.
- Deploy the Lambda function, which:
  - Should be in the same VPC as EFS.
  - Uses the deployed layer.
  - Has its function URL enabled with auth type NONE.
  - Has SNS configured as a destination.
  - Uses an IAM role.
- Deploy an SNS topic and a subscription that holds my e-mail address.
- Deploy an IAM role that holds:
  - SNS full access.
  - EC2 full access.
  - S3 full access.
  - CloudWatch full access.
- Deploy a VPC and subnet.
- Deploy a security group that allows port 2049 for EFS traffic.
- Deploy EFS and configure its access point.

Clone The Project Code

Clone the repository to your local machine as follows:

pushd ~ # Change Directory

git clone https://github.com/Mohamed-Eleraki/terraform.git

pushd ~/terraform/AWS_Demo/19-lambda-config_EFS_storage-URL_invokation

- Open the project in VS Code, or any editor you like:

code . # open the current path into VS Code.

Terraform Resources + Code Steps

Once you open the code in your editor, you will notice that the resources have already been created. However, we will walk through how to create them step by step.

Create a Python Script

- Create a Python script called URLinvokation.py under the scripts directory:

import json
import os


def lambda_handler(event, context):
    # Print the raw event content for debugging
    print("Received event:", json.dumps(event))

    # Parse the body if it exists and is a string
    if 'body' in event:
        try:
            event_body = json.loads(event['body'])
        except json.JSONDecodeError:
            return {
                'statusCode': 400,
                'body': json.dumps("Error: Could not decode JSON payload.")
            }
    else:
        event_body = event

    directory_name = event_body.get("directory_name")
    if not directory_name:
        return {
            'statusCode': 400,
            'body': json.dumps("Error: 'directory_name' is required in the event payload.")
        }

    base_path = "/mnt/efs"
    new_directory_path = os.path.join(base_path, directory_name)
    os.makedirs(new_directory_path, exist_ok=True)
    list_directories = os.listdir(base_path)

    return {
        'statusCode': 200,
        'body': json.dumps({
            'message': f"Directory '{directory_name}' created successfully at {new_directory_path}",
            'current_list_directories': list_directories,
            'received_event': event_body
        })
    }
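One detail worth keeping in mind: function URL events carry an isBase64Encoded flag, and when it is set the body arrives base64-encoded rather than as plain text. The handler above assumes a plain JSON string. A slightly more defensive parser could look like the sketch below; parse_event_body is a hypothetical helper of mine, not part of the project code.

```python
import base64
import json


def parse_event_body(event: dict) -> dict:
    """Return the JSON object sent to the function.

    Handles three cases: a direct invocation (no "body" key),
    a plain-text JSON body, and a base64-encoded JSON body.
    Raises ValueError when the payload is not a JSON object.
    """
    raw = event.get("body")
    if raw is None:
        # Direct invocation: the event itself is the payload.
        return event
    if event.get("isBase64Encoded"):
        raw = base64.b64decode(raw).decode("utf-8")
    parsed = json.loads(raw)  # json.JSONDecodeError is a ValueError
    if not isinstance(parsed, dict):
        raise ValueError("JSON payload must be an object")
    return parsed


if __name__ == "__main__":
    sample = {"body": '{"directory_name": "reports"}', "isBase64Encoded": False}
    print(parse_event_body(sample))
```

Inside the handler, a single except ValueError around this call would be enough to return the 400 response.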

The Python script above reads the incoming event, extracts the "directory_name" value from it, and then uses os.makedirs to create a directory with that name under /mnt/efs.
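Because the function URL uses the NONE auth type, anyone who knows the URL can send a directory name. os.path.join discards the base path when the second argument is absolute, and a name such as "../x" climbs out of the mount. The following is a minimal sketch of a guard you could add before calling os.makedirs; resolve_under_base is a hypothetical helper, not something the project ships.

```python
import os


def resolve_under_base(base_path: str, directory_name: str) -> str:
    """Return the absolute target path, or raise ValueError if it
    would fall outside base_path (or be base_path itself)."""
    base = os.path.realpath(base_path)
    candidate = os.path.realpath(os.path.join(base, directory_name))
    # commonpath equals base only when candidate is located under base.
    if candidate == base or os.path.commonpath([base, candidate]) != base:
        raise ValueError(f"Refusing to create a directory outside {base}")
    return candidate


if __name__ == "__main__":
    print(resolve_under_base("/mnt/efs", "reports/2024"))
    for name in ("../outside", "/etc"):
        try:
            resolve_under_base("/mnt/efs", name)
        except ValueError as error:
            print("rejected:", name, "->", error)
```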

I used the create_layer.sh script to create the layer package, then uploaded it to a predefined S3 bucket.

Configure the Provider

Create a new file called configureProvider.tf with the following content:

# Configure aws provider
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0"
    }
  }

  backend "s3" {
    bucket  = "erakiterrafromstatefiles"
    key     = "19-lambda-config_EFS_storage-URL_invokation/lambda_S3Trigger.tfstate"
    region  = "us-east-1"
    profile = "eraki"
  }
}

# Configure aws provider
provider "aws" {
  region  = "us-east-1"
  profile = "eraki"
}

Deploy lambda resources

Create a new file called lambda.tf with the following content:

# Deploy lambda layer resource - Create your layer zip file first, then upload it to the bucket
resource "aws_lambda_layer_version" "url_invokation_layer" {
  # Reference the layer package from the S3 bucket instead.
  # This avoids uploading the package every time you run your code.
  s3_bucket = "erakiterrafromstatefiles"                                                  # S3 bucket name
  s3_key    = "19-lambda-config_EFS_storage-URL_invokation/URL_Invokation_depends.zip" # path to your package

  layer_name          = "invokationLayer"
  compatible_runtimes = ["python3.11"]
}

# Deploy lambda function
resource "aws_lambda_function" "url_invokation_function" {
  function_name = "invokationFunction"

  # zip file path holds python script
  filename         = "${path.module}/scripts/URLinvokation05.zip"
  source_code_hash = filebase64sha256("${path.module}/scripts/URLinvokation05.zip")

  # handler name = file_name.python_function_name
  handler = "URLinvokation.lambda_handler"
  runtime = "python3.11"
  timeout = 120

  # utilizing deployed Role
  role = aws_iam_role.lambda_iam_role.arn

  # utilizing deployed layer
  layers = [aws_lambda_layer_version.url_invokation_layer.arn]

  file_system_config {
    # EFS file system access point ARN
    arn = aws_efs_access_point.access_point_for_lambda.arn

    # Local mount path inside the lambda function. Must start with '/mnt/'.
    local_mount_path = "/mnt/efs"
  }

  vpc_config {
    # Every subnet should be able to reach an EFS mount target in the same Availability Zone. Cross-AZ mounts are not permitted.
    subnet_ids         = [aws_subnet.subnet_01.id]
    security_group_ids = [aws_security_group.lambda_sg.id]
  }

  # Explicitly declare dependency on EFS mount target.
  # When creating or updating Lambda functions, mount target must be in 'available' lifecycle state.
  depends_on = [aws_efs_mount_target.efs_mount_target]

  # create_before_destroy ensures that when a resource needs to be replaced,
  # the new resource is created before the old resource is destroyed.
  lifecycle {
    create_before_destroy = true
  }
}

# Lambda destination configuration for failures and successes.
# Note: destinations are triggered by asynchronous invocations only;
# a request sent to the function URL is a synchronous invocation.
resource "aws_lambda_function_event_invoke_config" "invokation_lambda_sns_destination" {
  depends_on    = [aws_iam_role.lambda_iam_role]
  function_name = aws_lambda_function.url_invokation_function.function_name

  destination_config {
    on_failure {
      destination = aws_sns_topic.url_invokation_lambda_failures_success.arn
    }

    on_success {
      destination = aws_sns_topic.url_invokation_lambda_failures_success.arn
    }
  }
}

# Enable the function URL with auth type NONE
resource "aws_lambda_function_url" "lambda_url" {
  function_name      = aws_lambda_function.url_invokation_function.function_name
  authorization_type = "NONE"
}

output "lambda_function_url" {
  value = aws_lambda_function_url.lambda_url.function_url
}
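The function resource above points at scripts/URLinvokation05.zip, and source_code_hash uses filebase64sha256 so Terraform can tell when the package changed. The article does not show how the zip is produced, so here is one possible sketch in Python that builds the archive and computes the same kind of value (base64 of the raw SHA-256 digest). The helper names are assumptions for illustration.

```python
import base64
import hashlib
import zipfile
from pathlib import Path


def build_package(script_path: Path, zip_path: Path) -> Path:
    """Zip a single handler script for upload as a Lambda package."""
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        # Keep the script at the archive root so a handler such as
        # "URLinvokation.lambda_handler" can be resolved by Lambda.
        archive.write(script_path, arcname=script_path.name)
    return zip_path


def base64_sha256(path: Path) -> str:
    """Base64-encode the raw SHA-256 digest of a file's contents,
    which is the form Terraform's filebase64sha256 produces."""
    digest = hashlib.sha256(path.read_bytes()).digest()
    return base64.b64encode(digest).decode("ascii")


if __name__ == "__main__":
    script = Path("scripts/URLinvokation.py")
    if script.exists():
        package = build_package(script, Path("scripts/URLinvokation05.zip"))
        print(package, base64_sha256(package))
```

Whenever the script changes, rebuilding the zip changes the hash, and the next terraform apply updates the function code.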

Deploy SNS resources

Create a new file called sns.tf with the following content:

# Create an sns topic
resource "aws_sns_topic" "url_invokation_lambda_failures_success" {
  name = "lambda-URLinvoke-topic"
}

resource "aws_sns_topic_subscription" "sns_url_invokation_lambda_email_subscription" {
  topic_arn = aws_sns_topic.url_invokation_lambda_failures_success.arn
  protocol  = "email"
  endpoint  = "YOUR-EMAIL-HERE"
}

Deploy IAM resources

Create a new file called iam.tf with the following content:

# Create an IAM role of lambda function
resource "aws_iam_role" "lambda_iam_role" {
  name = "lambda_iam_role"

  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Action = "sts:AssumeRole"
        Principal = {
          Service = "lambda.amazonaws.com"
        }
        Effect = "Allow"
        Sid    = "111"
      },
    ]
  })

  managed_policy_arns = [
    #"arn:aws:iam::aws:policy/AmazonSSMFullAccess",
    "arn:aws:iam::aws:policy/AmazonEC2FullAccess",
    "arn:aws:iam::aws:policy/AmazonS3FullAccess",
    "arn:aws:iam::aws:policy/AmazonSNSFullAccess",
    "arn:aws:iam::aws:policy/CloudWatchFullAccess"
  ]
}

Deploy EFS resources

Create a new file called efs.tf with the following content:

resource "aws_vpc" "vpc_01" {
  cidr_block = "10.0.0.0/16"

  tags = {
    Name = "VPC_01"
  }
}

resource "aws_subnet" "subnet_01" {
  vpc_id     = aws_vpc.vpc_01.id
  cidr_block = "10.0.1.0/24"

  tags = {
    Name = "SUBNET"
  }
}

resource "aws_security_group" "lambda_sg" {
  vpc_id = aws_vpc.vpc_01.id

  ingress {
    from_port   = 2049
    to_port     = 2049
    protocol    = "tcp"
    cidr_blocks = [aws_vpc.vpc_01.cidr_block]
  }

  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }
}

# EFS file system
resource "aws_efs_file_system" "efs_for_lambda" {
  tags = {
    Name = "efs_for_lambda"
  }
}

# Mount target connects the file system to the subnet
resource "aws_efs_mount_target" "efs_mount_target" {
  file_system_id  = aws_efs_file_system.efs_for_lambda.id
  subnet_id       = aws_subnet.subnet_01.id
  security_groups = [aws_security_group.lambda_sg.id]
}

# EFS access point used by lambda file system
resource "aws_efs_access_point" "access_point_for_lambda" {
  file_system_id = aws_efs_file_system.efs_for_lambda.id

  root_directory {
    path = "/lambda"

    creation_info {
      owner_gid   = 1000
      owner_uid   = 1000
      permissions = "777"
    }
  }

  posix_user {
    gid = 1000
    uid = 1000
  }
}

Apply Terraform Code

After configuring your Terraform code, it's the exciting time to apply it and watch it become real. 😍

- If you have not done so yet, initialize the working directory so Terraform downloads the AWS provider and configures the backend:

terraform init

- First things first, let's make our code cleaner:

terraform fmt

- Planning is always a good practice (or even just apply 😁):

terraform plan

- Let's apply, if no errors appear and you agree with the resources to be built:

terraform apply -auto-approve

Invoking function using curl command

Now we are ready to test the function with the following command. Replace the URL with your function URL.

curl -v 'functionURLHere' \

-H 'content-type: application/json' \

-d '{ "directory_name": "DirectoryNameHere" }'
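The response to the curl command above is the JSON body returned by the handler. As a rough sketch of how a client might interpret it, the snippet below turns a status code and body text into a short summary. It assumes the response shape produced by the handler in this article (message, current_list_directories, received_event); describe_response is a hypothetical helper.

```python
import json


def describe_response(status_code: int, body_text: str) -> str:
    """Summarize the function URL response for a human reader."""
    payload = json.loads(body_text)
    if status_code == 200:
        directories = ", ".join(sorted(payload["current_list_directories"]))
        return f"{payload['message']} (directories now on EFS: {directories})"
    # Error responses from the handler carry a plain JSON string.
    return f"Request failed with HTTP {status_code}: {payload}"


if __name__ == "__main__":
    sample_body = json.dumps({
        "message": "Directory 'demo' created successfully at /mnt/efs/demo",
        "current_list_directories": ["demo"],
        "received_event": {"directory_name": "demo"},
    })
    print(describe_response(200, sample_body))
```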

Destroy environment

- Destroy all resources using terraform

terraform destroy -auto-approve

Resources

That's it. Very straightforward, very fast 🚀. I hope this article inspired you, and I would appreciate your feedback. Thank you.
