Operating System Part-2

bandeji

bandejiTable of contents

- How OS creates a process?

- What is the Architecture of Process?

- What is PCB?

- What is purpose of Registers in PCB?

- What are different Process States ?

- What is Orphan process ?

- What is Zombie process ?

- Some CPU scheduling Jargons :

- What is Non-Preemptive Scheduling ?

- What is Convoy Effect ?

- What is Preemptive Scheduling ?

- How to implement Ageing Technique ?

- What is Round Robin Algorithm ?

- What is Multilevel Scheduling Algorithm ?

- What is Multilevel Feedback Scheduling Algorithm ?

- Summary :

- What is Concurrency ?

- What is Critical Section and Major Thread scheduling issue and How to address it ?

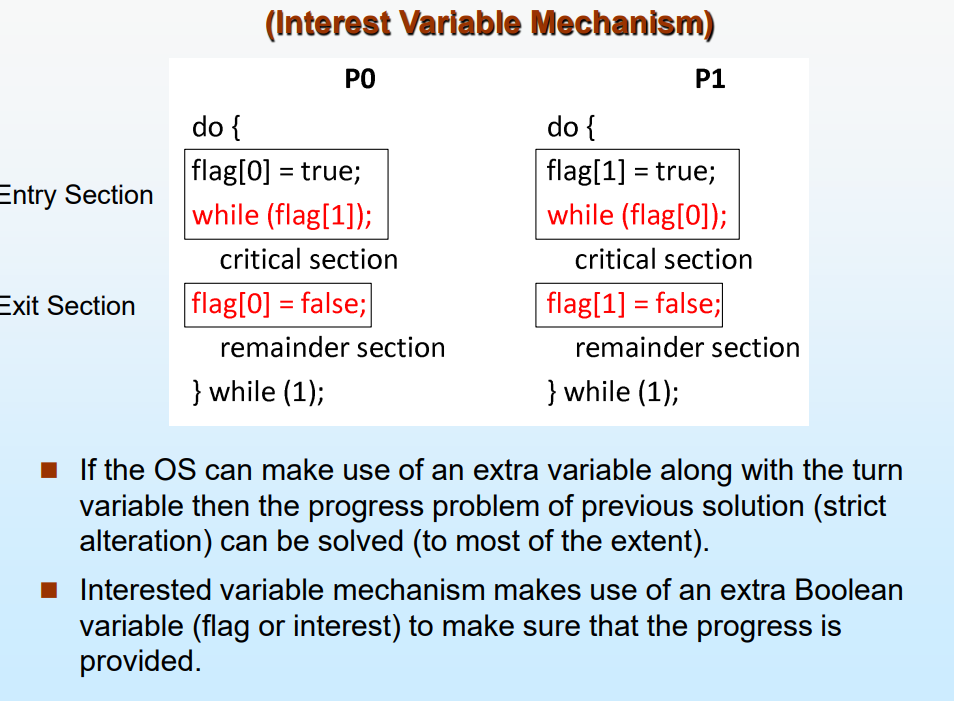

- Explain Interest Variable Mechanism .

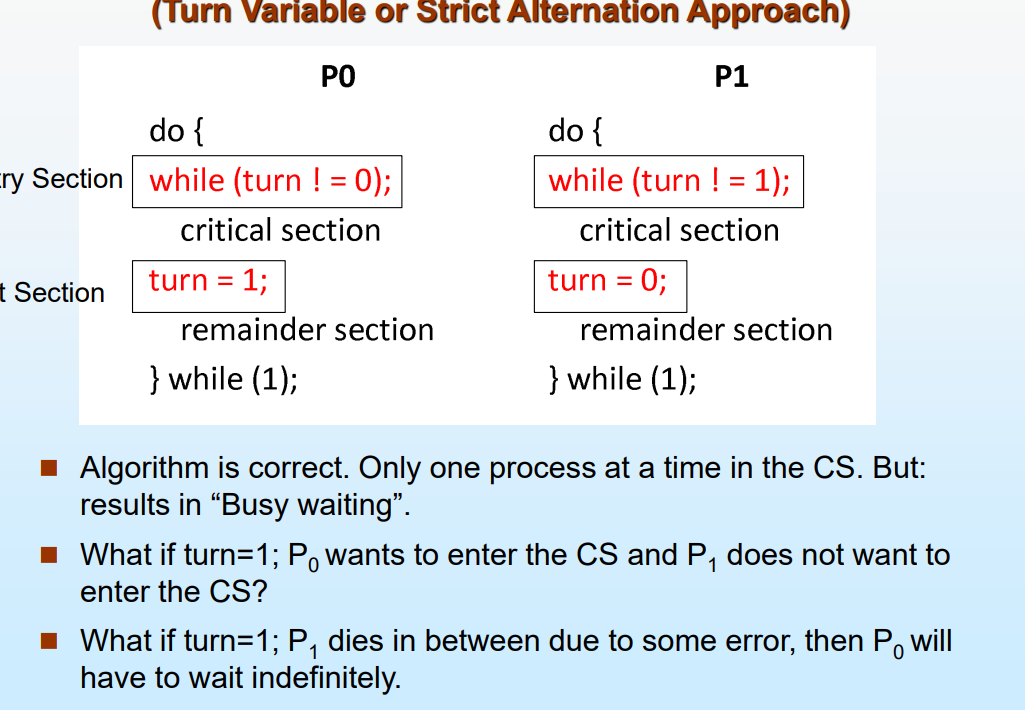

- Explain Turn Variable or Strict Alteration Approach.

- How to use Conditional Variables for thread synchronization ?

- How to use Semaphores for thread synchronization ?

- What is Bounded Buffer Problem of Synchronization?

- What is the Dining Philosopher Problem?

How OS creates a process?

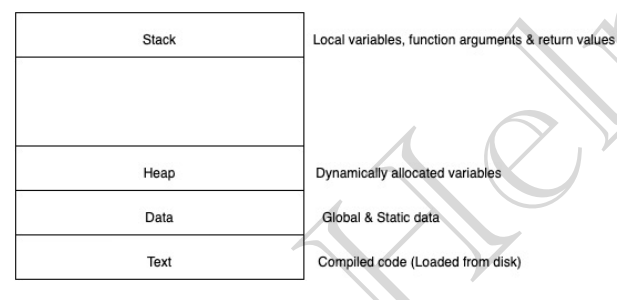

Initializes the program and the static data.

Allocate stack for variables.

Allocate memory space from heap.

I/O tasks.

OS handles the stuff to main().

What is the Architecture of Process?

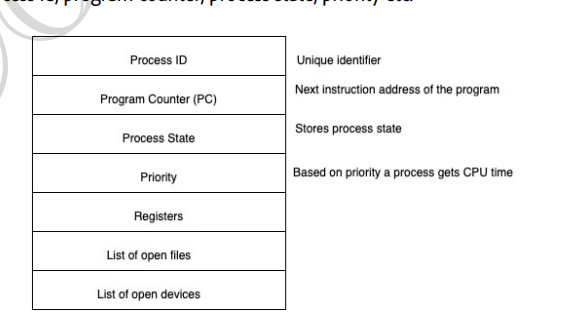

What is PCB?

Process Control Block is a type of data structure that stores the entire info of a process. It is used to uniquely identify the processes using the process table present in the PCB.

What is purpose of Registers in PCB?

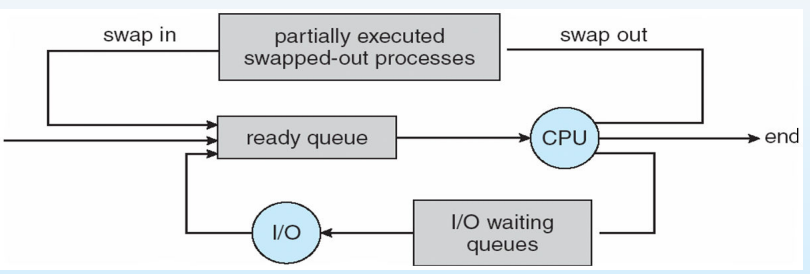

Registers in PCB is a like data structure . When a process is running and time slice expires the current value of the process is stored in the registers and swapped out. When the program is scheduled to run the value inside these registers are read from the PCB and written to CPU registers .

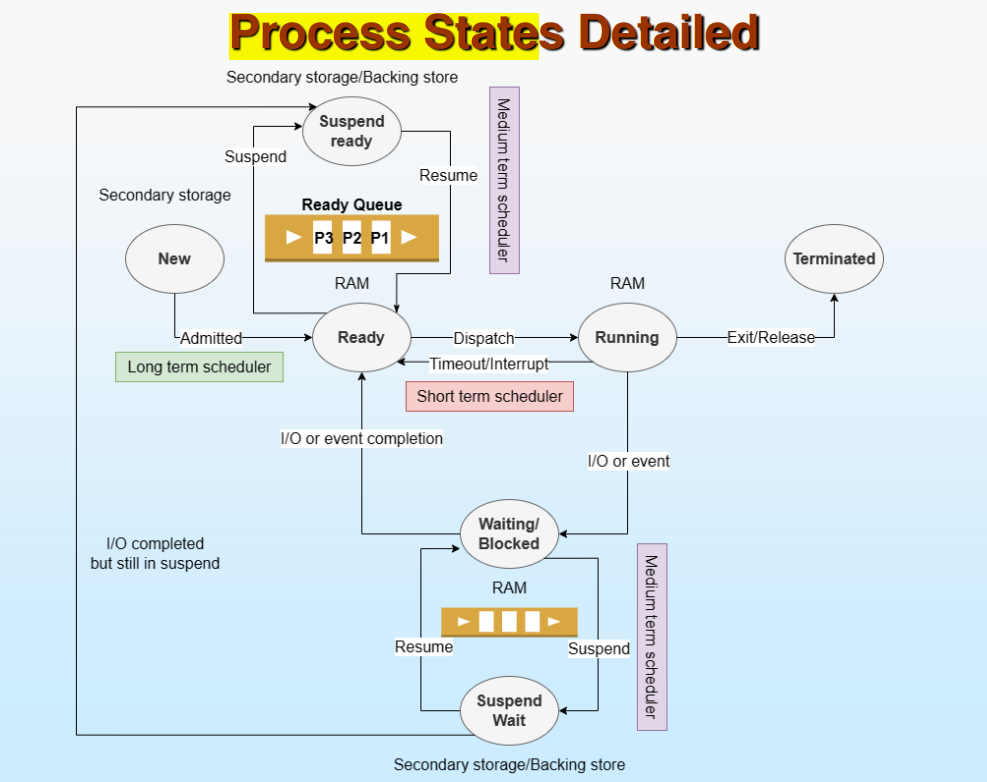

What are different Process States ?

The degree of programming is the no. of processes in the memory . The Long term scheduler controls the degree of programming.

Medium Term Scheduler :

What is Orphan process ?

When the parent process exits before receiving the return value from its child process the child process becomes orphan , this is called orphan process. Orphan processes are adopted by init process (pid =1).

What is Zombie process ?

A zombie process is a process which still has an entry in the process table whose execution is completed. It usually occurs for child processes , as parent process still waits to read the exit status of the child process.

Some CPU scheduling Jargons :

AT : Arrival time is the time when process is arrived at the ready queue.

BT : Burst Time is the time taken under execution of a process.

TAT : Turn around time is the time taken by the process when first time it arrives at ready state till it exits (CT-AT).

CT : Completion time is the time taken by the process till it terminates .

WT : Wait time is the time taken to get the CPU (TAT-BT).

Response Time : Time taken between the process enters the ready queue and getting CPU for the first time.

What is Non-Preemptive Scheduling ?

Once the CPU is allotted to a process it keeps the CPU under use until the process terminates after execution or moves to waiting state.

Leads to starvation as process with higher burst time may cause longer waiting time for other processes.

Low CPU utilization.

Types are : FCFS(First Come First Serve i.e. process with least arrival time),SJF(Shortest Job First i.e. process with least burst time will proceed without preemption, priority scheduling without preemption)

What is Convoy Effect ?

When CPU scheduler uses FCFS as a scheduling algorithm , Convoy effect can occur. It occurs when a process with larger burst time comes first it makes the Waiting time bigger for other processes too eventually increasing the average waiting time. This cause poor resource management.

What is Preemptive Scheduling ?

Once the CPU is allotted to a process it keeps the CPU for a fix time quantum under use along with the process terminates after execution or moves to waiting state.

Less starvation as process gets time in a alternate manner.

High CPU utilization.

Types : SJF (Shortest Job First i.e. process with least burst time will proceed with preemption , priority scheduling with preemption has convoy effect).

How to implement Ageing Technique ?

When low priority processes don't get CPU , ageing technique is used. OS gradually increases the priority of the processes with low priority so they get CPU eventually.(Used to avoid extreme Convoy effect)

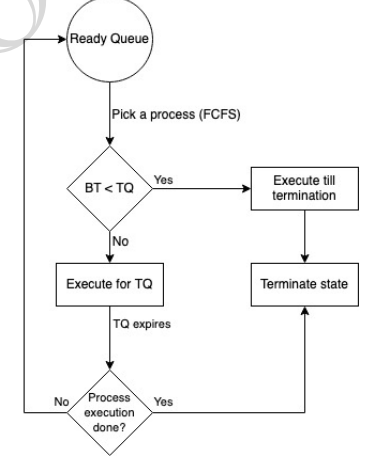

What is Round Robin Algorithm ?

If time quantum decreases context switching increases (creating more overheads).

Most efficient algorithm.

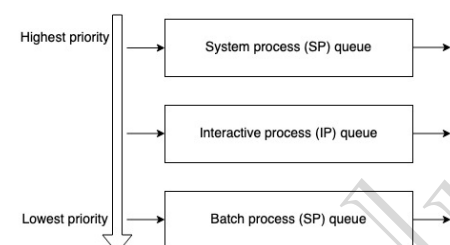

What is Multilevel Scheduling Algorithm ?

The ready queue is divided into multiple level queue depending upon priority.

Each queue has its own scheduling algorithm.

Problem occurs as only processes will be executed from top level to bottom level .

This causes starvation of low priority queues and leads to convoy effect.

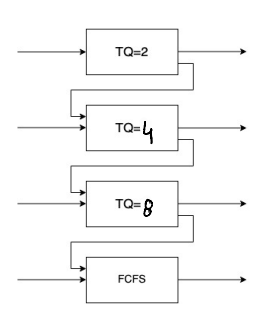

What is Multilevel Feedback Scheduling Algorithm ?

Multiple sub-queues are present.

Allows the process to move between queues. The idea is to separate processes according to the characteristics of their BT. If a process uses too much CPU time, it will be moved to lower priority queue. This scheme leaves I/O bound and interactive processes in the higher-priority

Convoy effect is present but is reduced using aging technique and leads to less starvation.

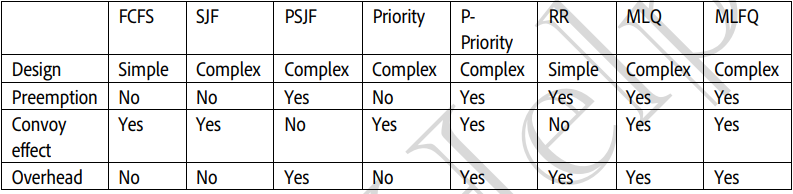

Summary :

What is Concurrency ?

Multiple process threads run in parallel using different CPU cores is called concurrency. Revise threads info form part-1 multithreading concept.

Each thread has its own access to program counter and shared memory space. Depending upon OS scheduling algorithm it schedules the threads to execution.

Multithreading leads to better resource sharing and responsiveness it can only be leveraged if the OS has multiple CPU's.

What is Critical Section and Major Thread scheduling issue and How to address it ?

When various threads try to access the shared resources inconsistency between data occurs to avoid this critical section is introduced.

So critical section is the segment of the code where threads access the shared resources for common files or variables and perform write operations over them. Since these threads execute concurrently any thread can be interrupted at mid evaluation.

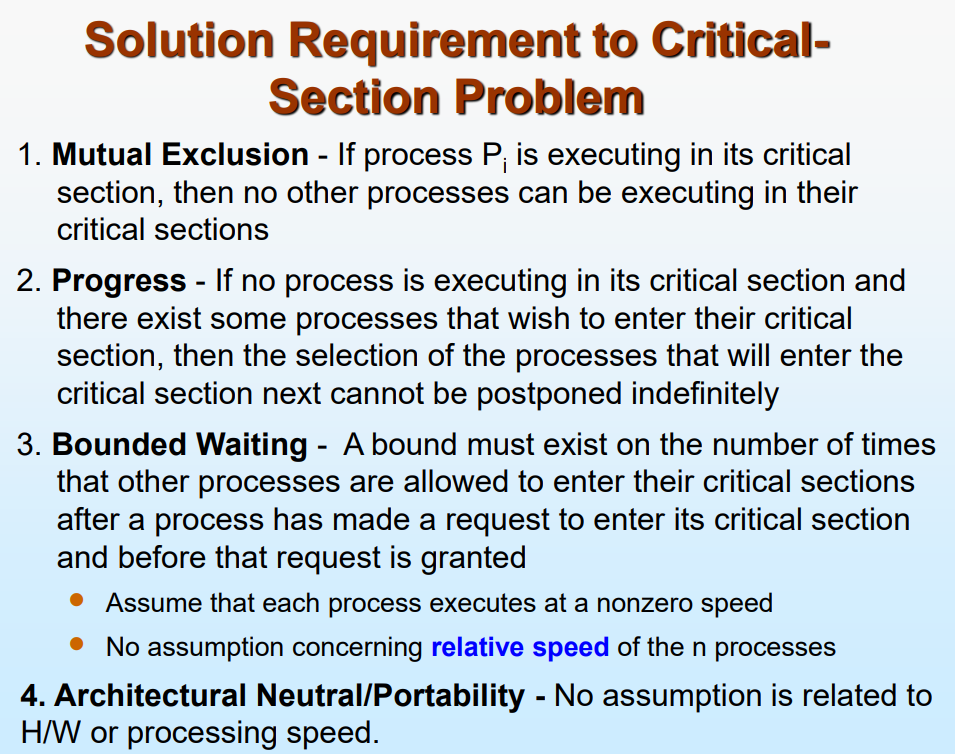

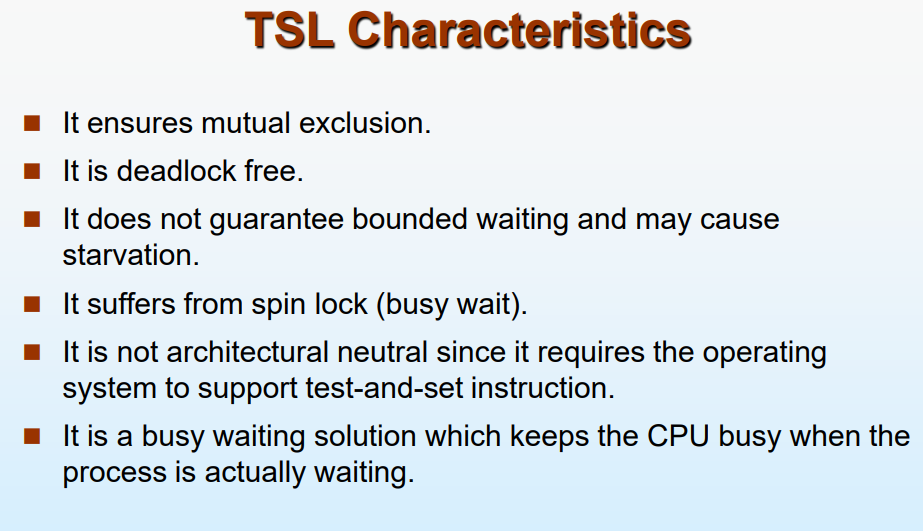

Due to this Race Condition occurs between threads to access the shared data and overwrite it simultaneously. The solution to this race condition are (any solution for this must give mutual exclusion ,bounded waiting , progress of process and architectural portability ):

Semaphores are just normal variables used to coordinate the activities of multiple processes in a computer system. They are used to enforce mutual exclusion, avoid race conditions, and implement synchronization between processes and accessed only through two atomic operations called wait() and signal().

Mutex or Locks can be used to implement mutual exclusion and avoid race condition by allowing only one thread/process to access critical section.

Disadvantage of locks are :

Contention (if a thread enters a critical section by using a lock and dies in middle then other threads will be waited for indefinite time to open the lock. To overcome this there must be some bounded waiting.)

Deadlock (will see further in detail).

Starvation of high priority process.

Atomic Operations. (the CPU mainly uses two cycle to add and update when we change a variable. e.g. cnt=cnt+1 so first cnt+1 is stored in a temp variable and then cnt is updated with temp .)

Using single flag we can achieve mutual exclusion but cannot achieve progress as a fixed output is generated based on input given.

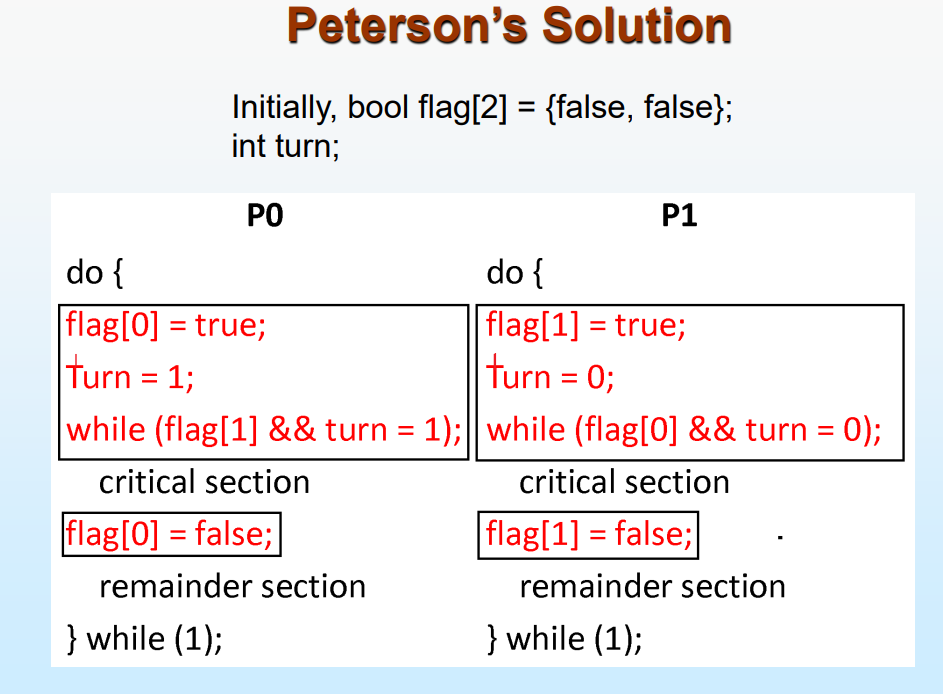

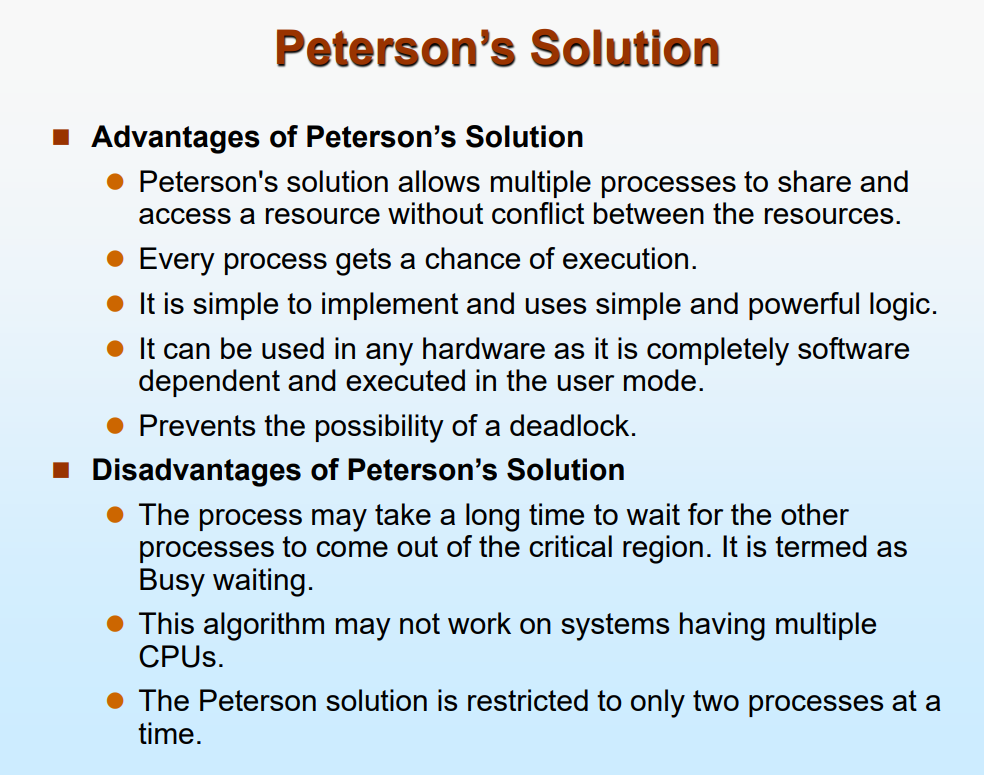

So Peterson's flag solution came into existence but only for two threads to execute where we use two flags and one turn variable.(watch neso video if needed.)

Explain Interest Variable Mechanism .

- There is a deadlock case due to failure of bounded waiting .

Explain Turn Variable or Strict Alteration Approach.

- No progress but has mutual exclusion ,bounded waiting and portability.

How to use Conditional Variables for thread synchronization ?

a. The condition variable is a synchronization primitive that lets the thread wait until a certain condition occurs.

b. Works with a lock .

c. Thread can enter a wait state only when it has acquired a lock. When a thread enters the wait state, it will release the lock and wait until another thread notifies that the event has occurred. Once the waiting thread enters the running state, it again acquires the lock immediately and starts executing.

d. Why to use conditional variable? -> To avoid busy waiting.

e. Contention is not here.

How to use Semaphores for thread synchronization ?

a. Semaphores allows multiple program threads to access the finite instance of resources whereas mutex allows multiple threads to access a single shared resource one at a time.

b. Binary semaphore: value can be 0 or 1. Aka, mutex locks

c. Counting semaphore

Can range over an unrestricted domain.

Can be used to control access to a given resource consisting of a finite number of instances.

d. To overcome the need for busy waiting, we can modify the definition of the wait () and signal () semaphore operations. When a process executes the wait () operation and finds that the semaphore value is not positive, it must wait. However, rather than engaging in busy waiting, the process can block itself. The block operation places a process into a waiting queue associated with the semaphore, and the state of the process is switched to the Waiting state. Then control is transferred to the CPU scheduler, which selects another process to execute.

e. A process that is blocked, waiting on a semaphore S, should be restarted when some other process executes a signal () operation. The process is restarted by a wakeup () operation, which changes the process from the waiting state to the ready state. The process is then placed in the ready queue.

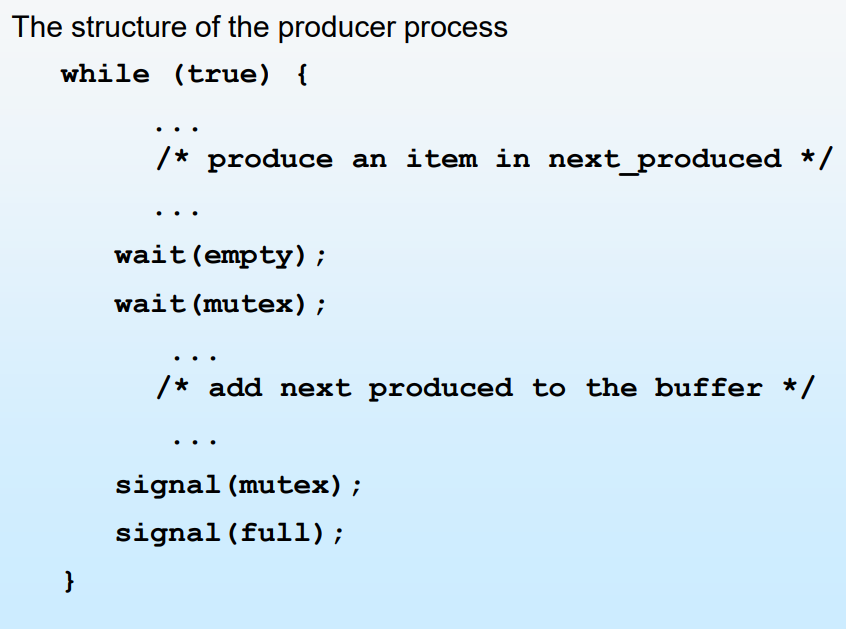

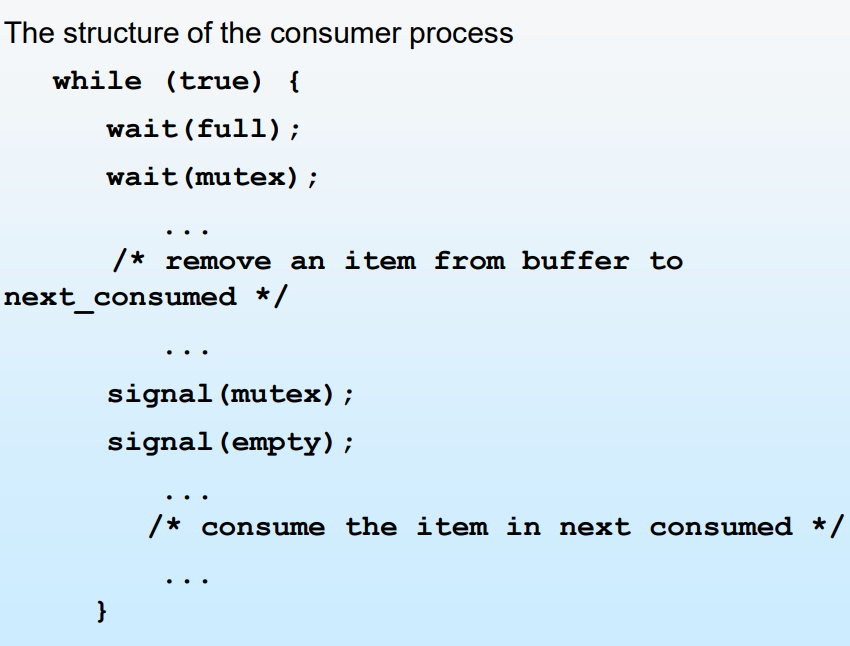

What is Bounded Buffer Problem of Synchronization?

n buffers, each can hold one item .

Semaphore mutex initialized to the value 1 .

Semaphore full initialized to the value 0 .

Semaphore empty initialized to the value n.

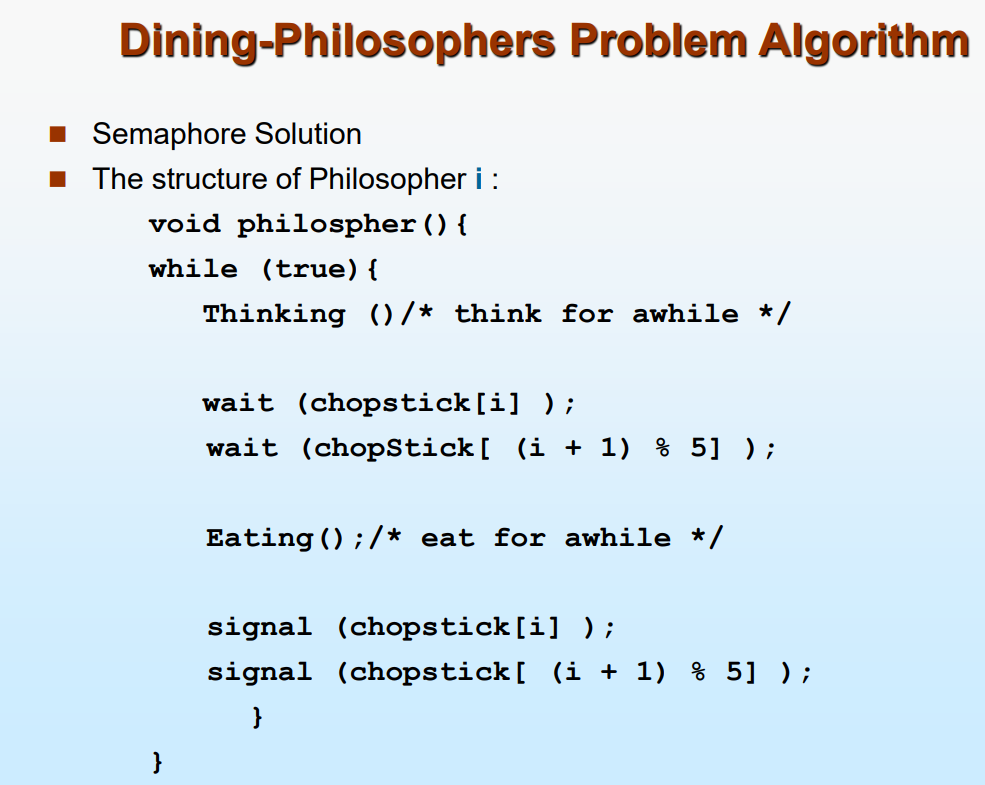

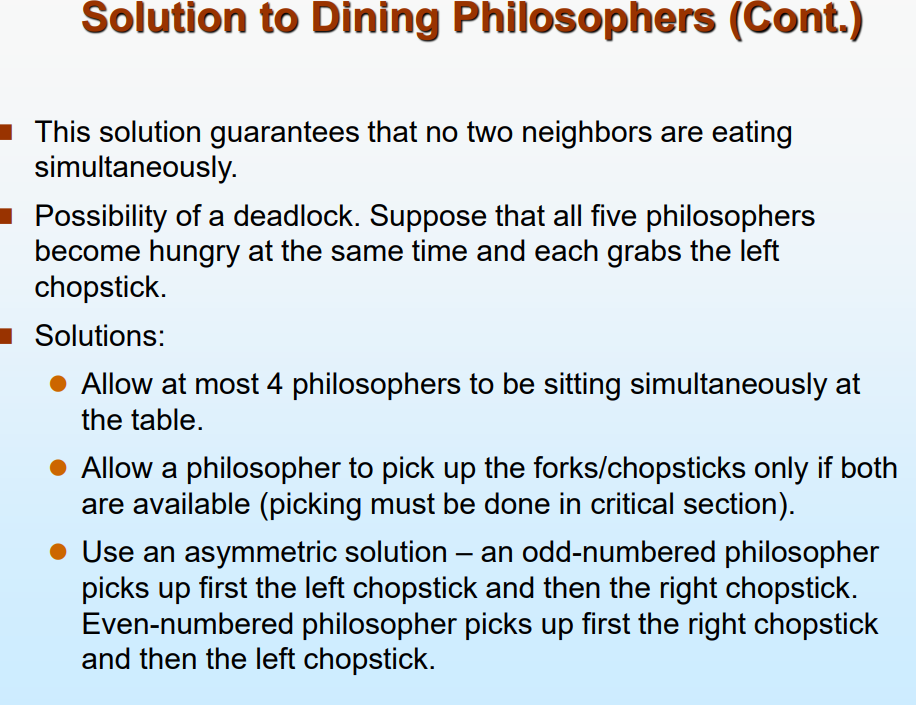

What is the Dining Philosopher Problem?

Subscribe to my newsletter

Read articles from bandeji directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by