Cody for JetBrains IDEs Is Now Generally Available

Melvin Donato

This article is based on a publication by Alex Isken, Justin Dorfman, and Chris Sev, published June 4, 2024 on sourcegraph.com, with modifications for Hashnode.com.

Cody for JetBrains IDEs is now available for all users. It offers better performance, increased stability, and new features that help you stay focused and spend less time on routine tasks.

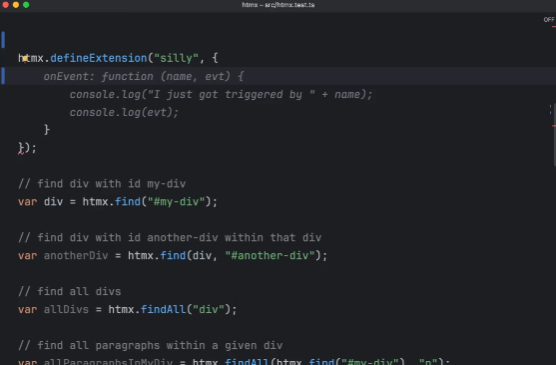

The JetBrains extension now supports new commands, inline code edits, and all of Cody’s key features like multi-line autocomplete, hot-swappable LLMs, and multi-repo context search.

Better Autocomplete with More Hotkeys

Cody offers multi-line autocomplete as you type. The Sourcegraph team has fixed several visual and performance bugs, making the autocomplete experience much smoother.

They’ve also added new hotkeys for using autocomplete:

Tab to accept a suggestion

Alt + [ (Windows) or Opt + [ (macOS) to cycle suggestions

Alt + \ (Windows) or Opt + \ (macOS) to manually trigger autocomplete

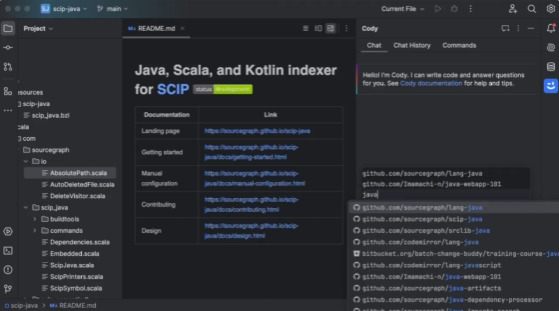

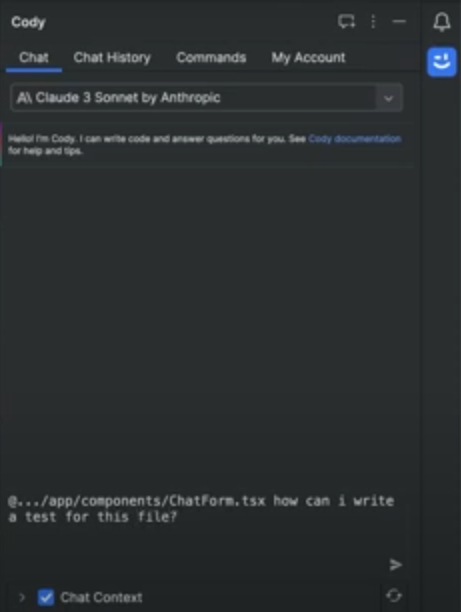

Chat with Multi-Repo Context

They’ve improved Cody’s sidebar chat interface for a better experience when selecting repositories as context.

The sidebar now shows an interface for choosing chat context and toggling the local project context on or off.

If you’re a Cody Enterprise user, you can also add remote repositories from your Sourcegraph instance. Type the names of your repositories into this interface and select up to 10. Cody will search these repositories and get relevant files to answer your chat prompts.

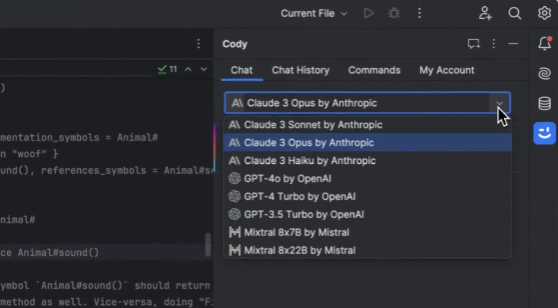

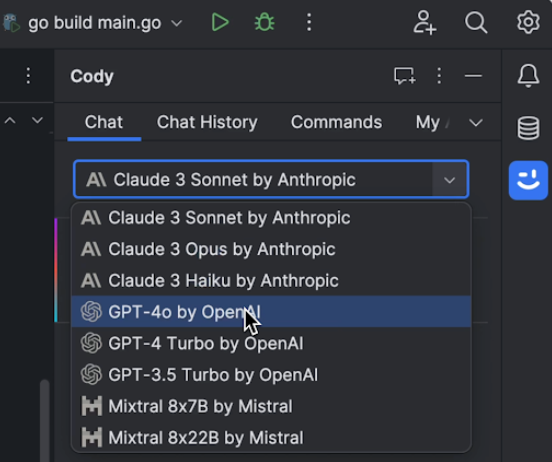

LLM Switching

Cody is built on the idea of giving you options. It works with multiple IDEs, any Git code host, and a range of LLMs to suit your needs.

Cody Pro users can easily switch between the latest models from the extension, including GPT-4o, Claude 3 Opus, Mixtral 8x22B, and others.

Updated Commands to Keep You Productive

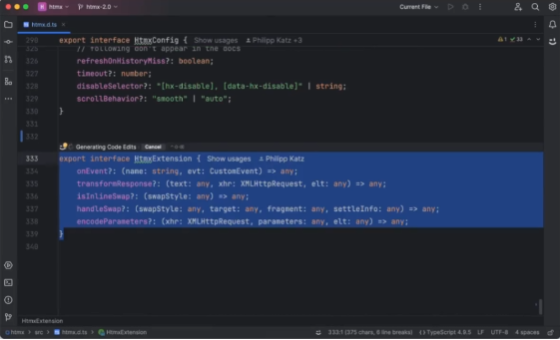

Cody can now edit code directly in your files. They have revamped Cody's commands and divided them into two types: edit commands and chat commands.

Chat commands are the ones you already know. They show results from Cody in the chat window. These include:

Explain Code: Gives a high-level explanation of a code snippet or file, including key properties, arguments, and algorithms.

Smell Code: Reviews a selected code snippet and suggests ways to improve its quality.

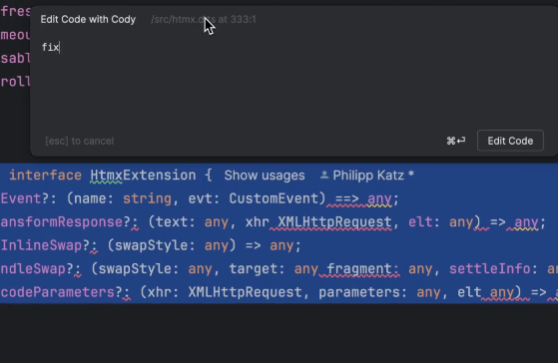

Edit commands make changes directly in your files (and sometimes create new files). These new commands include:

Edit Code

Document Code

Generate Unit Tests

Edit Code

This command lets you ask Cody to directly edit any code selection, like refactoring or fixing bugs.

Select the code you want to edit and press Shift + Ctrl + Enter. This opens a floating edit box where you can describe the change you want. You can also move and resize the edit box on your screen. Use the Up arrow to go through previous prompts.

After you submit your prompt, Cody will make the change. You can accept, undo, view a diff for the change, or update your prompt and have Cody try again.

You can also place the cursor on a blank line in your file without selecting any code. In this case, the command will insert code at the cursor based on your prompt.

Generate Unit Tests

This command replaces Cody’s old unit testing command, which only showed test code in the chat window. Now, when you ask Cody to generate unit tests, it will:

Check if you’re using a test framework.

See if you already have a test file.

Insert new unit tests directly into the existing test file or create a new file if none exists.

Try the new command in the Cody sidebar or with the Shift + Ctrl + T hotkey.

Document Code

They’ve made Cody’s documentation command better. Now, Cody will add documentation right into your file instead of showing it in a sidebar chat window.

You can start the documentation command with the Shift + Ctrl + H hotkey.

GPT-4o Support for Pro and Enterprise

OpenAI’s new model, GPT-4o, is now available for Pro and Enterprise users. It’s twice as fast as GPT-4 Turbo and scores higher on general reasoning benchmarks.

You can use GPT-4o for chat and commands, and its speed makes it great for code edit commands. Cody Pro users will see GPT-4o as an option in the LLM dropdown menu today. Cody Enterprise admins can enable GPT-4o for their teams by updating their Sourcegraph instance to the latest version.

You can also try it in Sourcegraph's LLM Litmus Test at Sourcegraph Labs.

Expanded context windows

For those who missed it, Sourcegraph introduced new context windows for Cody Free and Cody Pro users in v5.5.8. Cody now supports much more context when used with Claude 3 models. This means a few things:

You can send more context to Cody when asking questions. You can include more and larger files that would have previously exceeded the context limit.

You can have much longer back-and-forth chats with Cody before it forgets the context from earlier in the conversation. Previously, Cody would tend to "forget" context early in a conversation when the total amount of context was too large.

They’ve also increased Cody’s output token limit, meaning answers will be more comprehensive and won't get cut off mid-text anymore.
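
To see why a larger context window reduces "forgetting", here is a minimal sketch of one common way a chat client can fit a conversation into a fixed token budget. It is an illustration only, not how Cody manages context: the word-count tokenizer and the function names are assumptions, and real systems use model-specific tokenizers and more elaborate strategies.

```python
from typing import List


def estimate_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: count whitespace-separated words."""
    return len(text.split())


def fit_to_window(messages: List[str], max_tokens: int) -> List[str]:
    """Keep the most recent messages whose combined size fits the budget."""
    kept: List[str] = []
    used = 0
    for message in reversed(messages):
        cost = estimate_tokens(message)
        if used + cost > max_tokens:
            break  # this message and everything older is dropped ("forgotten")
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


history = ["first question about auth", "long answer " * 5, "follow-up question"]
small = fit_to_window(history, max_tokens=12)   # oldest message no longer fits
large = fit_to_window(history, max_tokens=100)  # whole conversation fits
```

With the small budget the first message falls out of the window, while the larger budget keeps the whole conversation. That is the practical effect of the expanded limits: more of the earlier exchange stays available to the model.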

Stability and performance improvements

They've fixed some bugs and greatly improved the JetBrains extension's performance and stability. All JetBrains users should notice these improvements, and Apple Silicon users will experience much better stability.

My Point of View!

It's important for every developer that tools like these, which improve coding efficiency and speed, continue to evolve to meet today's needs.

Thanks for these advances! Good job.

Original Publication:

Written by

Melvin Donato

I'm a System Engineer who likes working with software and hardware, where I can contribute my knowledge and learn from my coworkers too. I am a lover of good communication between work teams for the benefit and comfort of users and clients. If you are interested in knowing more about me, feel free to connect with me through the following ways: melvin_d015@yahoo.es, 809-708 2548, 809-4916089