Understanding the Basics of Artificial Intelligence (AI)

Gaurav Chandwani


In this blog, we'll dive into Artificial Intelligence (AI), its fundamentals, and its latest advancements. We'll discuss key concepts like machine learning, neural networks, and deep learning, and explore AI's applications in various fields such as healthcare, finance, and entertainment. By the end, you'll have a clear understanding of AI.

What is Artificial Intelligence (AI)?

John McCarthy, who coined the term "Artificial Intelligence" and is often called the father of AI, defined AI as "the science and engineering of making intelligent machines." AI aims to create systems that can perform tasks that normally require human intelligence, from recognizing speech or images to making decisions and solving problems. McCarthy's definition covers both building smart systems and the science behind them: AI combines theory and practice to replicate, and in some areas exceed, human thinking.

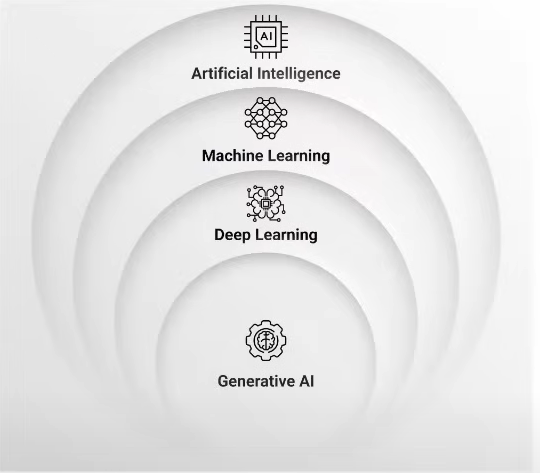

Let's now explore the distinctions between AI, Machine Learning, Deep Learning, and Generative AI.

Exploring AI, Machine Learning, Deep Learning, and Generative AI: Key Differences and Applications

Artificial Intelligence

Let's imagine a universe dedicated to this field, and call it AI. So what is AI? Its main goal is to build applications that can perform tasks on their own, without human intervention. This is the simplest and most useful working definition of AI.

Let's look at some common examples we use in our daily lives:

Netflix: Netflix has an impressive AI recommendation system that suggests movies and shows. No human needs to pick these recommendations: the AI model analyzes your viewing history, the titles you watch, and your preferences to offer personalized suggestions. This helps keep you engaged on the platform for longer.

Speech Recognition: Imagine a traveler calling an airline to book a flight. The entire conversation can be managed by an automated speech recognition and dialogue management system. This AI system understands the traveler's requests, processes the information, and responds appropriately, making the booking process seamless and efficient without needing a human operator.

Smartphones: AI is used extensively in smartphones, enhancing many features we use daily. One prominent example is voice assistants like Siri and Google Assistant. These assistants use AI to understand and respond to user commands, providing a hands-free way to interact with your device. They can set reminders, send messages, make calls, and even control smart home devices, all through voice commands.

These examples show how AI is integrated into our everyday activities, making tasks easier and more efficient by eliminating the need for constant human input.
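To make the recommendation idea concrete, here is a minimal content-based recommender in Python. It is only an illustration, not how Netflix's actual system works: the catalog, the genre tags and the `recommend` function are all hypothetical. Each unwatched title is scored by how often the user has already watched its genres.

```python
from collections import Counter

# Hypothetical catalog: each title is tagged with a set of genres.
CATALOG = {
    "Space Saga": {"sci-fi", "adventure"},
    "Robot Uprising": {"sci-fi", "thriller"},
    "Love in Paris": {"romance", "drama"},
    "Deep Sea Quest": {"adventure", "documentary"},
    "Courtroom Files": {"drama", "thriller"},
}


def recommend(watched: list[str], top_n: int = 2) -> list[str]:
    """Rank unwatched titles by how well their genres match the viewing history."""
    # Genre profile: how often each genre appears in what the user watched.
    profile = Counter(g for title in watched for g in CATALOG[title])
    scores = {}
    for title, genres in CATALOG.items():
        if title in watched:
            continue  # don't recommend something already seen
        # Score = sum of how often the user watched each of this title's genres.
        scores[title] = sum(profile[g] for g in genres)
    # Highest score first; ties broken alphabetically for a stable order.
    ranked = sorted(scores, key=lambda t: (-scores[t], t))
    return ranked[:top_n]


print(recommend(["Space Saga", "Robot Uprising"]))  # → ['Courtroom Files', 'Deep Sea Quest']
```

Production recommenders typically combine many more signals, such as ratings, watch time and the behavior of similar users, but ranking items against a profile built from past behavior is the core idea shown here.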

Now, let's delve into the fascinating world of Machine Learning.

Machine Learning


Machine Learning (ML) is a subset of AI. Instead of following explicitly programmed rules, ML systems use statistical tools and algorithms to learn from data, supporting tasks such as statistical analysis, predictions, and forecasting. These tasks are typically performed on large datasets to extract meaningful insights and information.

ML involves using algorithms and models to analyze data, identify patterns, and make decisions or predictions based on the data. For instance, businesses can use ML to predict customer behavior, optimize supply chains, or even detect fraudulent activities. By analyzing historical data, ML models can forecast future trends and outcomes, helping organizations make informed decisions.

Types of Machine Learning Techniques

Supervised Machine Learning

Supervised machine learning learns the relationship between input data and known output data (labels) from training examples, and then uses that relationship to make predictions on new inputs. This technique is widely used in various applications, from predicting house prices to identifying spam emails.

Supervised machine learning is divided into two main categories: regression and classification.

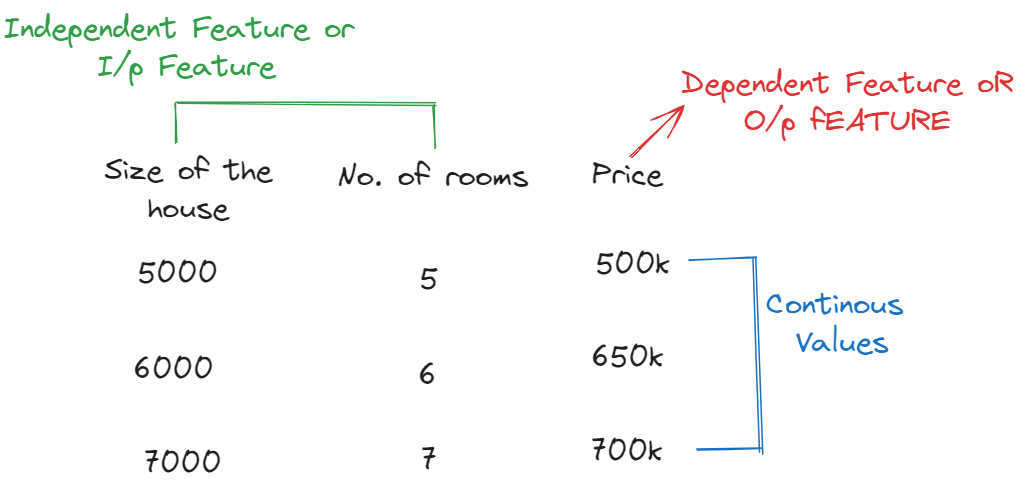

Regression is used when the output variable is a continuous number. For example, predicting the price of a house based on features like location, size, and number of bedrooms is a regression problem. The goal is to predict a numerical value that is as close as possible to the true output.

In this example, we predict house prices based on features like size and number of rooms. A regression model is trained on historical data to learn the relationship between these features and prices. Once trained, the model can estimate prices for new houses based on their size and number of rooms. This helps provide insights into the real estate market and aids buyers and sellers in making informed decisions.
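The sketch below fits a straight line by ordinary least squares, one simple form of regression. To keep the arithmetic readable it uses a single feature (size) instead of size and number of rooms, and the sizes and prices are made-up numbers, not real market data.

```python
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # slope = covariance(x, y) / variance(x)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    # The fitted line always passes through the point (mean_x, mean_y).
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Made-up training data: house size in square metres -> price in thousands.
sizes = [50, 70, 90, 110, 130]
prices = [150, 200, 260, 310, 350]

slope, intercept = fit_line(sizes, prices)
# Estimate the price of a new, unseen 100 sq m house.
predicted = slope * 100 + intercept
print(f"price = {slope:.2f} * size + {intercept:.2f}; 100 sq m -> {predicted:.1f}k")
```

With this toy data the fitted line is price ≈ 2.55 × size + 24.5, so a 100 sq m house is estimated at about 279.5 thousand. Adding the number of rooms would turn this into multiple linear regression, which solves the same least-squares problem with more than one feature.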

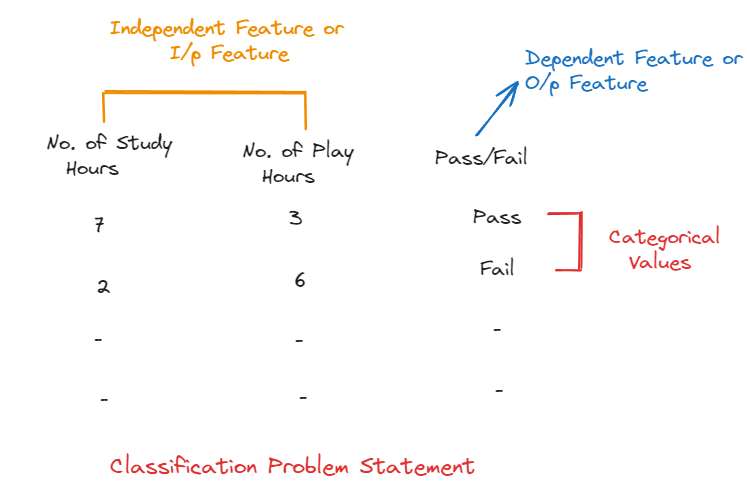

Classification is used when the output variable is categorical. For instance, determining whether an email is spam or not is a classification problem. The aim is to assign the input data to one of several predefined categories.

In this example, we are determining whether a student will pass or fail based on two input features: the number of study hours and the number of play hours. This type of problem is known as Binary Classification because there are only two possible outcomes: pass or fail. If there were more than two possible outcomes, such as grading the student with an A, B, C, or D, it would be called Multi-Class Classification.
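Here is a minimal binary classifier for the pass/fail example, written as logistic regression trained with batch gradient descent in plain Python. Logistic regression is just one common choice among many classification algorithms, and the training data, learning rate and number of epochs are assumptions chosen for illustration.

```python
import math

# Made-up training data: (study_hours, play_hours) -> 1 = pass, 0 = fail.
X = [(1, 5), (2, 4), (3, 4), (2, 6), (6, 1), (7, 2), (8, 1), (5, 2)]
y = [0, 0, 0, 0, 1, 1, 1, 1]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function, maps any real number to (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train(X, y, lr=0.1, epochs=1000):
    """Fit logistic regression weights with batch gradient descent."""
    w = [0.0, 0.0]
    b = 0.0
    n = len(X)
    for _ in range(epochs):
        grad_w = [0.0, 0.0]
        grad_b = 0.0
        for (x1, x2), target in zip(X, y):
            # Error between the predicted probability and the true label.
            err = sigmoid(w[0] * x1 + w[1] * x2 + b) - target
            grad_w[0] += err * x1
            grad_w[1] += err * x2
            grad_b += err
        # Step against the average gradient of the log-loss.
        w[0] -= lr * grad_w[0] / n
        w[1] -= lr * grad_w[1] / n
        b -= lr * grad_b / n
    return w, b


def predict(w, b, study, play):
    """Return 'pass' if the predicted probability of passing is at least 0.5."""
    return "pass" if sigmoid(w[0] * study + w[1] * play + b) >= 0.5 else "fail"


w, b = train(X, y)
print(predict(w, b, study=7, play=1))  # a student who mostly studies
print(predict(w, b, study=1, play=6))  # a student who mostly plays
```

For a multi-class version (grades A to D), the usual extension replaces the sigmoid with a softmax over one score per class.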

Supervised learning can be applied to a wide range of real-world problems. For example, in healthcare, it can be used to predict patient outcomes based on historical medical records. In finance, it can help in credit scoring by assessing the likelihood of a borrower defaulting on a loan. The versatility and effectiveness of supervised learning make it a fundamental technique in the field of machine learning.

Unsupervised Machine Learning

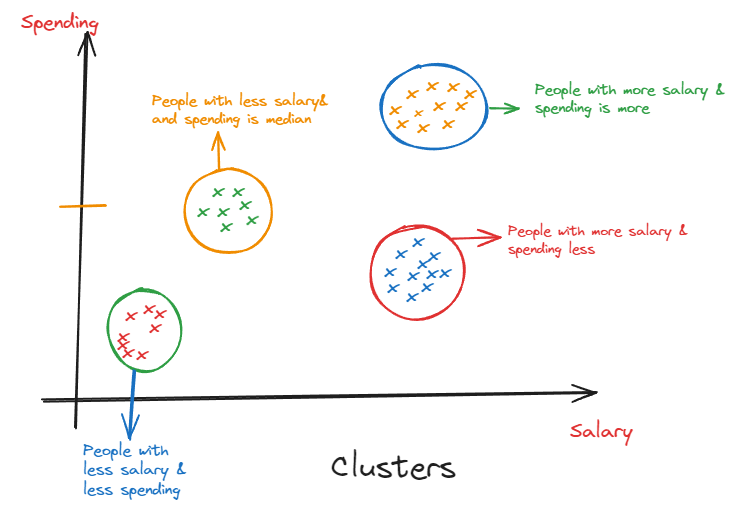

Unsupervised machine learning finds structure in data that has no labels, for example by grouping data points into clusters based on their features and similarities. Unlike supervised learning, which aims to predict a known outcome, unsupervised learning doesn't use predefined labels or outcomes to guide the process.

In unsupervised machine learning, there is no specific output to predict. Instead, the algorithm looks for hidden patterns or structures in the data.

Clustering is a key technique in unsupervised learning. It groups data points into clusters based on their features, making points in the same cluster more similar to each other than to those in other clusters. This method is useful in customer segmentation, fraud detection, and organizing large datasets.

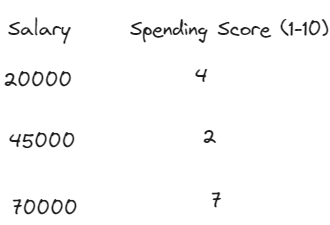

For example, say I have a dataset with two features: Salary and Spending Score (ranging from 1 to 10). The Spending Score indicates how much a person spends: the closer to 10, the more they spend. The dataset may contain many such records. Here, we don't treat the Spending Score as an output to predict from salary; that's not the goal. Suppose I want to launch a product for my e-commerce company and send discount coupons to customers based on their spending behavior. How do I decide who gets them? Using unsupervised machine learning, we can create clusters that group similar customers together.

Once the clusters are formed, I can target one of them. For example, when launching a product, I would give discount coupons to the cluster with the highest salary and highest spending to encourage them to buy it. In short, clustering lets us discover such groups in the data without any predefined labels.
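The coupon example can be sketched with k-means, one common clustering algorithm. The customer records are made up, both features are min-max scaled so that salary (in thousands) does not dominate the distance, and the deterministic farthest-first initialization is a simplifying assumption; libraries usually use the randomized k-means++ scheme instead.

```python
import math

# Made-up customers: (salary in thousands, spending score from 1 to 10).
customers = [
    (25, 2), (30, 3), (28, 1),      # lower salary, low spending
    (110, 9), (120, 10), (105, 8),  # high salary, high spending
    (115, 2), (100, 3), (108, 1),   # high salary, low spending
]


def min_max_scale(points):
    """Rescale each feature to [0, 1] so that no feature dominates the distance."""
    cols = list(zip(*points))
    lows = [min(c) for c in cols]
    highs = [max(c) for c in cols]
    return [
        tuple((v - lo) / (hi - lo) for v, lo, hi in zip(p, lows, highs))
        for p in points
    ]


def kmeans(points, k, iterations=20):
    """Plain k-means; returns a cluster index for every point."""
    # Farthest-first initialization: start from the first point, then keep
    # adding the point farthest from all centroids chosen so far.
    centroids = [points[0]]
    while len(centroids) < k:
        farthest = max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        centroids.append(farthest)
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        labels = [min(range(k), key=lambda i: math.dist(p, centroids[i])) for p in points]
        # Update step: move each centroid to the mean of its members.
        for i in range(k):
            members = [p for p, lab in zip(points, labels) if lab == i]
            if members:
                centroids[i] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return labels


labels = kmeans(min_max_scale(customers), k=3)
for cluster in range(3):
    print(cluster, [c for c, lab in zip(customers, labels) if lab == cluster])
```

The three groups correspond to lower-salary/low-spending, high-salary/high-spending and high-salary/low-spending customers; the high-salary, high-spending group is the one the coupon campaign described above would target.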

Reinforcement Machine Learning

Reinforcement Learning is a type of machine learning where the computer learns by interacting with an environment and receiving rewards or penalties. It's like learning by trial and error, improving based on the outcomes of its actions.

Example: Teaching a robot to move.

Imagine teaching a robot to navigate a room. Instead of giving it specific instructions, we let it explore. When it moves correctly, it gets a reward. If it moves incorrectly, it gets a penalty. This way, the robot learns to adapt and improve without explicit programming for every scenario. Reinforcement learning is useful when the best solution isn't obvious and needs to be discovered through exploration.
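The robot idea can be shrunk to a toy problem: a robot in a five-cell corridor that must reach a goal at the right end. The sketch below uses tabular Q-learning, one classic reinforcement learning algorithm. The environment, the rewards (+1 at the goal, -0.1 for bumping into the wall) and the hyperparameters are all hypothetical.

```python
import random

N_STATES = 5  # cells 0..4 in a corridor; cell 4 is the goal
ACTIONS = ["left", "right"]


def step(state, action):
    """Hypothetical environment: returns (next_state, reward, done)."""
    nxt = state + 1 if action == "right" else max(state - 1, 0)
    if nxt == N_STATES - 1:
        return nxt, 1.0, True    # reward for reaching the goal
    if nxt == state:
        return nxt, -0.1, False  # penalty for bumping into the wall
    return nxt, 0.0, False


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn a table of action values Q[state][action] by trial and error."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(50):  # cap the episode length
            # Epsilon-greedy: explore sometimes, otherwise take the best-known action.
            if rng.random() < epsilon:
                a = rng.randrange(2)
            else:
                best = max(q[state])
                a = rng.choice([i for i in range(2) if q[state][i] == best])
            nxt, reward, done = step(state, ACTIONS[a])
            # Move Q toward reward + discounted value of the best next action.
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][a] += alpha * (target - q[state][a])
            state = nxt
            if done:
                break
    return q


q = q_learning()
# Greedy policy for every non-goal cell.
policy = [ACTIONS[row.index(max(row))] for row in q[:-1]]
print(policy)
```

Nobody tells the agent to move right; it discovers that policy from rewards and penalties alone, and the learned policy should be `right` in every non-goal cell.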

Let's now delve into the concept of Deep Learning.

Deep Learning

Deep Learning is a subset of Machine Learning, and it is important to understand why deep learning emerged and why it has gained such widespread popularity.

Deep learning was inspired by the way the human brain processes information. Researchers asked themselves whether it was possible to create a machine that could learn in a manner similar to how humans learn various tasks. This led to the development of deep learning techniques, which loosely imitate the human learning process in machines.

Deep learning uses neural networks with many layers (hence "deep"), whose design is loosely inspired by the networks of neurons in the human brain.

In deep learning, we specifically look at three types of networks:

ANN (Artificial Neural Network)

Artificial Neural Networks are the foundation of deep learning. They consist of layers of interconnected nodes, or neurons, that process data in a way loosely inspired by the human brain.
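Each neuron in such a network computes a weighted sum of its inputs, adds a bias, and passes the result through an activation function. The minimal forward pass below shows this with hand-picked weights (in practice the weights are learned from data with backpropagation); this hypothetical 2-2-1 network computes XOR, which a single linear neuron cannot.

```python
def relu(z: float) -> float:
    """Rectified linear unit: passes positive values, zeroes out negatives."""
    return max(0.0, z)


def identity(z: float) -> float:
    return z


def dense(inputs, weights, biases, activation):
    """One fully connected layer: each neuron = activation(w . x + b)."""
    return [
        activation(sum(w * x for w, x in zip(w_row, inputs)) + b)
        for w_row, b in zip(weights, biases)
    ]


# Hand-picked (not trained) weights for a 2-2-1 network that computes XOR.
HIDDEN_W = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_B = [0.0, -1.0]
OUTPUT_W = [[1.0, -2.0]]
OUTPUT_B = [0.0]


def forward(x1, x2):
    """Input layer -> hidden layer (ReLU) -> output layer (linear)."""
    hidden = dense([x1, x2], HIDDEN_W, HIDDEN_B, relu)
    return dense(hidden, OUTPUT_W, OUTPUT_B, identity)[0]


for x_a in (0, 1):
    for x_b in (0, 1):
        print(x_a, x_b, forward(x_a, x_b))
```

The outputs for the inputs (0,0), (0,1), (1,0) and (1,1) are 0.0, 1.0, 1.0 and 0.0, the XOR truth table. The hidden layer is what makes this possible: it transforms the inputs into a representation where a simple output neuron can separate them.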

CNN (Convolutional Neural Network) and Object Detection

Convolutional Neural Networks are specialized types of neural networks designed specifically for processing structured grid data, such as images. CNNs are widely used in computer vision tasks, including image and video recognition, image classification, and object detection. Object detection involves identifying and locating objects within an image, making CNNs essential for applications like facial recognition, autonomous driving, and medical image analysis.
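The basic operation inside a CNN layer is a convolution: a small filter (kernel) slides across the image, and at each position the overlapping values are multiplied and summed. The sketch below implements this in plain Python (strictly a cross-correlation, which is what deep learning libraries compute under the name convolution), with a hand-made vertical-edge filter standing in for the filters a CNN would learn from data.

```python
def convolve2d(image, kernel):
    """Valid (no padding, stride 1) 2D cross-correlation, as used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Multiply the kernel with the image patch under it and sum the result.
            total = sum(
                kernel[di][dj] * image[i + di][j + dj]
                for di in range(kh)
                for dj in range(kw)
            )
            row.append(total)
        output.append(row)
    return output


# A tiny 4x5 grayscale "image": dark on the left, bright on the right.
image = [
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
]
# Hand-made vertical-edge filter (a CNN would learn its filters instead).
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

for row in convolve2d(image, kernel):
    print(row)
```

The result is [[0, 27, 27], [0, 27, 27]]: zero over the flat dark area and large values wherever the window covers the dark-to-bright edge. Stacking many such learned filters, layer after layer, lets a CNN build up from edges to shapes to whole objects.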

RNN (Recurrent Neural Network) and Its Variants

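Recurrent Neural Networks are designed to handle sequential data, such as time series or text, where the order of the inputs matters. This makes them particularly useful for tasks such as language modeling, speech recognition, and time series forecasting. Popular variants such as LSTM (Long Short-Term Memory) and GRU (Gated Recurrent Unit) networks add gating mechanisms that help them retain information over longer sequences.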
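What makes an RNN recurrent is a hidden state that is updated at every step from the current input and the previous state. The one-unit sketch below, with hypothetical hand-picked weights, shows that two sequences ending in the same input produce different final states because their histories differ.

```python
import math


def rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Run a one-unit RNN over a sequence and return the hidden state at each step."""
    h = 0.0  # initial hidden state
    states = []
    for x in sequence:
        # The new state mixes the current input with the previous state.
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


# Both sequences end with the same input (1.0) but have different histories.
steady = rnn([1.0, 1.0, 1.0])[-1]
flipped = rnn([-1.0, -1.0, 1.0])[-1]
print(round(steady, 3), round(flipped, 3))
```

The first sequence ends with a state of roughly 0.79, the second with a slightly negative one, so the network's output at the last step depends on what came before, which is exactly what sequence tasks like language modeling need.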

In summary, deep learning came into existence to enable machines to learn and make decisions in a way that closely resembles human learning. This has been made possible through the use of multi-layered neural networks, which have proven to be highly effective in processing large datasets and performing complex tasks.

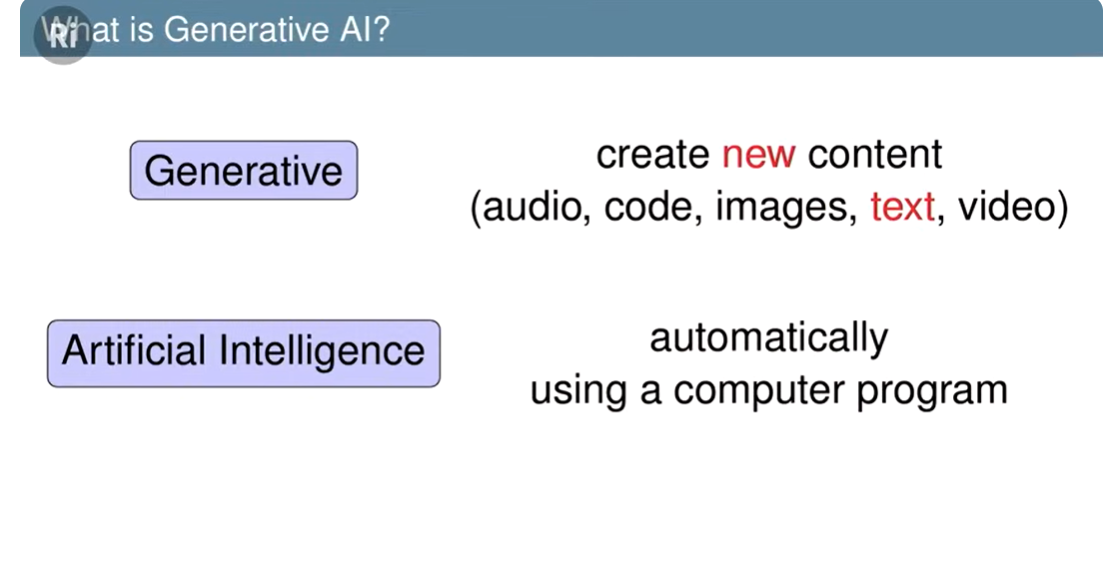

Generative AI (GENAI)

Generative AI (GENAI) is a subset of Deep Learning. Unlike traditional AI techniques that predict outcomes based on patterns in the data they are trained on, Generative AI focuses on creating new data. In the past, Machine Learning, Natural Language Processing (NLP), and Deep Learning were mostly used to classify, predict, or analyze sentiment in existing data rather than to generate new text, images, or videos.

Generative AI changes this paradigm by enabling the creation of new content. This is achieved by training models on large datasets and then using these models to generate new data that follows the learned patterns. Let's take an example. Suppose there is a person who has read 100 books on AI. This means they have been trained on a large amount of data. Now, if anyone asks them questions about AI, they can answer in their own words. That is how GENAI models work. By training with large datasets, they learn patterns and structures. Once trained, they can generate new, coherent, and contextually relevant content. For example, if a GENAI model is trained on thousands of AI articles, it can create new articles, summaries, or answer questions about AI like a human would.
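As a deliberately tiny analogy for "learn the patterns, then generate", the sketch below learns only the mean and spread of some made-up height measurements and then samples new values from a normal distribution with those parameters. Real generative models use deep neural networks to learn far richer patterns; this only illustrates the train-then-sample idea.

```python
import random
import statistics

# Made-up training data: heights of people in centimetres.
heights = [160, 165, 170, 172, 168, 175, 180, 158, 169, 171]

# "Training": learn the pattern in the data, here just its mean and spread.
mu = statistics.mean(heights)
sigma = statistics.stdev(heights)

# "Generation": draw brand-new values that follow the learned pattern.
rng = random.Random(42)  # fixed seed so the run is reproducible
new_heights = [round(rng.gauss(mu, sigma), 1) for _ in range(5)]
print(f"learned mean={mu:.1f}, std={sigma:.1f}")
print("generated:", new_heights)
```

The generated heights are typically numbers that never appeared in the training data, yet they look like they came from the same population, which is the same relationship a text or image generator has with its training set.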

Generative AI is generating so much excitement because of its ability to produce new and unique content. This capability opens up a wide range of applications, from creating realistic images and videos to generating human-like text and even composing music. By learning the patterns in the data, Generative AI can create outputs that are new yet closely related to the data it was trained on.

In GENAI, we will look at two types of models:

- LLM

"LLM" stands for "Large Language Model." This term refers to language models that are extremely large, both in terms of the number of parameters they contain and the amount of training data they are exposed to. These models are designed to understand and generate human-like text across a wide range of tasks. For example, LLMs can be used for Text Classification, which involves categorizing text into predefined categories. They can also be used for Text Generation, where the model creates new text based on a given prompt. Text Summarization is another application, where the model condenses long pieces of text into shorter summaries.

In addition to these tasks, LLMs are employed in Conversational AI, such as chatbots that can engage in human-like conversations. They are capable of Question Answering, responding in natural language to questions posed by users. Combined with speech-recognition models that convert spoken language into text, they can also power voice assistants. They can even function as Spelling Correctors, identifying and correcting spelling errors in text.

But why is it called a language model? A language model is a model that estimates how likely a sequence of words is, which in practice means predicting the next word (or token) given the words that came before it. In LLMs, this is done by large neural networks trained with advanced deep learning techniques, which learn the intricate patterns and structures present in the training data. Because they model language in this way, they can generate text that is coherent and contextually relevant. This ability to model language is what makes LLMs so powerful and versatile across a wide range of applications.
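A bigram model is one of the simplest possible language models: it estimates the probability of the next word from counts of which word followed which in the training text. The sketch below uses a tiny made-up corpus and greedy generation; real LLMs instead use large neural networks over much longer contexts and usually sample from the predicted distribution.

```python
from collections import Counter, defaultdict

# A tiny made-up training corpus, split into words.
corpus = (
    "ai models learn patterns from data . "
    "ai models generate text from patterns . "
    "language models predict the next word ."
).split()

# Count how often each word follows each other word (bigram counts).
follows = defaultdict(Counter)
for prev, nxt in zip(corpus, corpus[1:]):
    follows[prev][nxt] += 1


def next_word_probs(word):
    """Estimate P(next word | word) from the bigram counts."""
    counts = follows[word]
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()}


def generate(start, length=5):
    """Greedy generation: repeatedly append the most likely next word."""
    words = [start]
    for _ in range(length):
        probs = next_word_probs(words[-1])
        if not probs:
            break  # no known continuation for this word
        words.append(max(probs, key=probs.get))
    return " ".join(words)


print(next_word_probs("models"))
print(generate("ai"))
```

`next_word_probs("models")` gives "learn", "generate" and "predict" one third each, and `generate("ai")` returns "ai models learn patterns from data" (ties are broken by first occurrence in the corpus). The generated sentence follows the statistics of the training text, which is the same principle, at a vastly smaller scale, behind LLM text generation.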

- LIM

"LIM" stands for "Large Image Model." These models deal specifically with images and videos. They can take textual input and transform it into corresponding images or videos: for instance, given a piece of text, a LIM can generate a visual representation of it, either as a static image or as a video.

How does a LIM work?

These models are trained on massive amounts of data: they are shown millions of images together with their captions. This training helps the model learn to recognize patterns, such as shapes, colors, and objects, within the images. Think of it like learning a new language. At first, you don't understand the words, but as you practice, you start to recognize patterns and meanings. Similarly, a Large Image Model learns to recognize patterns in images and associate them with meanings.

Real-world applications

Large Image Models have many practical uses, such as:

Self-driving cars: They can help cars recognize objects, like pedestrians, lanes, and traffic signs.

Medical imaging: They can assist in diagnosing diseases by analyzing medical images, like X-rays and MRIs.

E-commerce: They can help with product search, recommendation, and image-based shopping.

Security: They can be used for surveillance, facial recognition, and object detection.

In summary, Generative AI is a big step forward in AI because it can create new and original data, not just predict or classify. This ability to generate new content is why Generative AI is a hot topic in the tech world today.

Let's explore some of the top AI tools currently available in the market.

Some of the AI tools available in the market that can be beneficial for your daily activities

-

This tool is similar to ChatGPT, but it also allows you to use the GPT-4 model, which is a paid service provided by OpenAI.

-

It is an AI language model designed to assist and engage in conversation with humans, providing accurate and helpful information to help users in various ways.

- Perplexity AI

Perplexity AI is a new AI chat tool that acts as a powerful search engine, providing real-time answers with the source of information included.

-

It is an AI tool that helps you create presentations and websites quickly. By providing a prompt, it generates professional-quality presentations and functional websites in minutes. This is useful for those needing high-quality content fast.

Blackbox.ai

Blackbox AI's main purpose is to assist developers by autocompleting code, generating programs quickly, and extracting code from different media, which improves coding efficiency.

Merlin

Merlin AI is a Chrome extension that enhances browsing by summarizing articles and YouTube videos. It's useful for quickly understanding the key points of long content.

ChatGPT for DevOps: Introducing Kubiya.ai

It provides an AI-powered assistant that simplifies and automates complex DevOps tasks through conversational interfaces such as Slack, Microsoft Teams, and the command line (CLI).

-

It is designed for creating realistic human-like voices and music. This tool is notable for its use in content creation, enabling users to generate high-quality audio for entertainment and professional media production.

In conclusion, I hope you leave with a solid understanding of AI basics and an appreciation for its potential to transform our lives and society.


Written by

Gaurav Chandwani


B.Tech graduate with knowledge of OS and DSA, currently learning Python and web development.