The Kafka Journey

arnab paul


🚀 My Journey into the World of Kafka! 🚀

I am excited to share my recent deep dive into the world of Apache Kafka! 🎉 Kafka, a powerful distributed event streaming platform, is reshaping the way we handle real-time data. Here's a quick overview of what I've learned and accomplished:

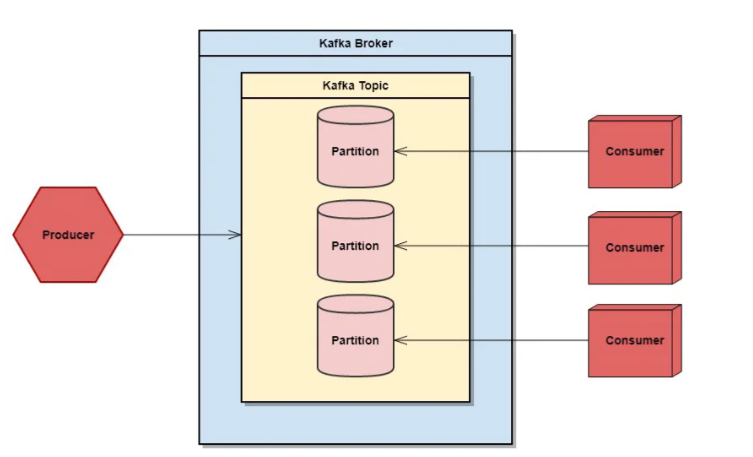

🔹 Understanding the Basics: Started with the fundamentals of Kafka: producers, consumers, brokers, topics, and partitions. Realized how Kafka decouples producers from consumers, which improves both scalability and fault tolerance.

🔹 Setting Up Kafka: Successfully installed and configured Kafka locally. Overcame initial hurdles with ZooKeeper and broker setup, gaining a solid understanding of Kafka’s architecture.

🔹 Producing and Consuming Messages: Wrote my first producer and consumer applications in JavaScript using KafkaJS. It was thrilling to see messages flow seamlessly between them!

🔹 Handling Kafka Errors: Debugged common errors such as `KafkaJSError: The producer is disconnected`. Learned how to handle disconnections and build robust error handling around them.

🔹 Creating Topics and Managing Data: Explored topic creation and data management strategies. Understood the importance of replication and partitioning in ensuring data durability and performance.

🔹 Advanced Kafka Features: Delved into Kafka Streams and Connect. Realized the immense potential of stream processing and integrating Kafka with other data systems.

🔹 Building a Scalable Chat Application: Applied my Kafka knowledge to build a scalable chat application. Implemented message queues to handle real-time messaging efficiently.
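To make the partitioning idea from the list above more concrete, here is a minimal sketch of how a message key can be mapped to a partition. It uses a simple FNV-1a-style string hash purely for illustration; Kafka's Java client hashes keys with murmur2, so real partition numbers will differ. The names `hashKey` and `pickPartition`, the room keys, and the partition count are all hypothetical.

```javascript
// Illustrative only: a simple 32-bit FNV-1a-style hash, NOT Kafka's murmur2.
function hashKey(key) {
  let hash = 0x811c9dc5; // FNV offset basis
  for (let i = 0; i < key.length; i++) {
    hash ^= key.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193); // FNV prime, 32-bit multiply
  }
  return hash >>> 0; // force an unsigned 32-bit result
}

// Map a key onto one of `numPartitions` partitions.
function pickPartition(key, numPartitions) {
  return hashKey(key) % numPartitions;
}

// Messages with the same key always land in the same partition,
// which is what preserves ordering per key (for example, per chat room).
const numPartitions = 3; // assumed partition count
for (const key of ['room-1', 'room-2', 'room-1']) {
  console.log(key, '->', pickPartition(key, numPartitions));
}
```

The point of the sketch is the property, not the hash: as long as the key and the partition count stay the same, the mapping is deterministic.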

Key Takeaways:

🔹 Event-Driven Architecture: Kafka’s event-driven approach is transformative for real-time data processing and microservices architectures.

🔹 Scalability and Reliability: Kafka's partitioned, replicated design is built for high throughput and low latency, making it well suited to large-scale applications.

🔹 Resilience: Understanding and handling errors in Kafka is crucial for building resilient data pipelines.
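As one way to picture the resilience takeaway, here is a minimal sketch of a retry wrapper around a producer send. It is not the code from my repository: `sendWithReconnect`, `maxAttempts`, and `delayMs` are hypothetical names, and the helper only assumes the producer exposes `connect()` and `send()`, as a KafkaJS producer does. The broker address and topic in the usage comment are assumptions too.

```javascript
// Hypothetical helper: retry a send, reconnecting the producer between attempts.
// maxAttempts and delayMs are illustrative defaults, not recommended values.
async function sendWithReconnect(producer, record, { maxAttempts = 3, delayMs = 200 } = {}) {
  let lastError;
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    try {
      // On a retry, try to re-establish the connection before sending again.
      if (attempt > 1) await producer.connect();
      return await producer.send(record);
    } catch (err) {
      lastError = err;
      if (attempt < maxAttempts) {
        // Wait a little longer after each failure (linear backoff).
        await new Promise((resolve) => setTimeout(resolve, delayMs * attempt));
      }
    }
  }
  // Every attempt failed: surface the last error to the caller.
  throw lastError;
}

// Usage with KafkaJS (assumes a broker at localhost:9092 and a topic named 'chat'):
// const { Kafka } = require('kafkajs');
// const producer = new Kafka({ clientId: 'demo', brokers: ['localhost:9092'] }).producer();
// await producer.connect();
// await sendWithReconnect(producer, { topic: 'chat', messages: [{ value: 'hello' }] });
```

KafkaJS also has its own `retry` settings on the client, so a wrapper like this is best seen as an application-level safety net rather than a replacement for them.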

Why Kafka Matters: In today's data-driven world, real-time processing is essential. Kafka enables businesses to handle vast streams of data efficiently, ensuring timely insights and actions. Whether it's real-time analytics, log aggregation, or microservices communication, Kafka is a game-changer.

Final Thoughts: Embarking on this Kafka journey has been incredibly rewarding. The skills and insights gained are invaluable in the rapidly evolving landscape of data engineering and stream processing. I am looking forward to leveraging Kafka in future projects and exploring its endless possibilities. If you're also working with Kafka or have any insights to share, I’d love to connect and discuss!

GitHub Link to my Kafka journey: https://github.com/arnab1656/KafkaApp

#ApacheKafka #DataEngineering #StreamProcessing #RealTimeData #LearningJourney
