Super fast CPU inference with ONNX and .NET

Kevin Loggenberg


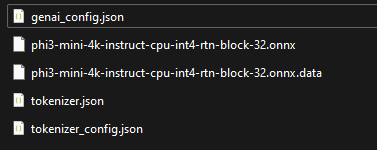

Files to download

These files can be found at this link. Thank you, Hugging Face.

As long as these files are all stored together in the location you specify in your code, you are good to go.

NB: I had to rename the ".json" files as shown above.
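Because everything has to sit in one folder, it can help to list that folder before loading the model. The following is a minimal sketch and not part of the original code: `ModelFolder` and `ListFiles` are hypothetical names, and the helper only reports what it finds rather than checking for particular file names.

```csharp
using System;
using System.IO;
using System.Linq;

public static class ModelFolder
{
    // Returns the names of the files directly inside modelPath, sorted
    // alphabetically. Throws if the folder itself does not exist.
    public static string[] ListFiles(string modelPath)
    {
        if (!Directory.Exists(modelPath))
        {
            throw new DirectoryNotFoundException($"Model folder not found: {modelPath}");
        }

        return Directory.GetFiles(modelPath)
            .Select(path => Path.GetFileName(path) ?? string.Empty)
            .OrderBy(name => name, StringComparer.OrdinalIgnoreCase)
            .ToArray();
    }
}
```

Printing the result of `ModelFolder.ListFiles(modelPath)` before constructing the model makes it easy to compare the folder contents against the file list in the screenshot above.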

Here's the code

I used System.Speech.Synthesis to make the console app speak the answer. System.Speech.Synthesis is about 15 years old, so it doesn't sound very good, but it works.

    using System;
    using System.Security;
    using System.Speech.Synthesis;
    using Microsoft.ML.OnnxRuntimeGenAI;

    internal class Program
    {
        private static readonly SpeechSynthesizer synthesizer = new SpeechSynthesizer();

        static void Main(string[] args)
        {
            // Folder that holds the ONNX model together with its config and tokenizer files
            string modelPath = @"D:\Models\onnx\cpu-int4-rtn-block-32";
            using var model = new Model(modelPath);
            using var tokenizer = new Tokenizer(model);

            var systemPrompt = "You are an AI assistant that helps people find information. Answer questions using a direct style. Do not share more information than is requested by the users.";

            // chat start
            Console.WriteLine("Ask your question. Type an empty string to Exit.");
            synthesizer.Rate = 1;

            // chat loop
            while (true)
            {
                Console.WriteLine();
                Console.Write("Q: ");
                var userQ = Console.ReadLine();
                if (string.IsNullOrEmpty(userQ))
                {
                    break;
                }

                // Clear the screen first, then show the Phi-3 response
                Console.Clear();
                Console.Write("Phi3: ");

                var fullPrompt = $"<|system|>{systemPrompt}<|end|><|user|>{userQ}<|end|><|assistant|>";
                var tokens = tokenizer.Encode(fullPrompt);

                using var generatorParams = new GeneratorParams(model);
                generatorParams.SetSearchOption("max_length", 2048);
                generatorParams.SetSearchOption("past_present_share_buffer", false);
                generatorParams.SetInputSequences(tokens);

                using var generator = new Generator(model, generatorParams);
                var speak = "";
                while (!generator.IsDone())
                {
                    generator.ComputeLogits();
                    generator.GenerateNextToken();

                    var outputTokens = generator.GetSequence(0).ToArray(); // Convert to array immediately
                    var newToken = new int[] { outputTokens[outputTokens.Length - 1] }; // Extract the last token into a new array
                    var output = tokenizer.Decode(newToken); // Decode only the new token

                    Console.Write(output); // Print the answer as it is generated
                    speak += output;       // Collect the full answer for speech
                }

                // Escape XML special characters so the answer cannot break the SSML markup
                var ssml = $@"
    <speak version='1.0' xmlns='http://www.w3.org/2001/10/synthesis' xml:lang='en-US'>
    <prosody pitch='+20%' rate='-10%' volume='x-loud' duration='2'>
    {SecurityElement.Escape(speak)}
    </prosody>
    </speak>";

                synthesizer.SpeakSsml(ssml);
                Console.WriteLine();
            }
        }
    }
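The answer text is dropped into SSML, which is XML, so characters such as `<` or `&` in a model response need escaping before `SpeakSsml` sees them. Below is a minimal sketch of a helper that builds the markup as a plain string, which makes it checkable without a speech engine. `SsmlBuilder` and `Build` are hypothetical names, and the default pitch and rate are simply the values used in the code above.

```csharp
using System.Security;

public static class SsmlBuilder
{
    // Wraps plain text in a <speak>/<prosody> envelope. XML special
    // characters in the text (<, >, &, quotes) are escaped first.
    public static string Build(string text, string pitch = "+20%", string rate = "-10%")
    {
        string safeText = SecurityElement.Escape(text) ?? string.Empty;

        return
            "<speak version='1.0' xmlns='http://www.w3.org/2001/10/synthesis' xml:lang='en-US'>" +
            $"<prosody pitch='{pitch}' rate='{rate}'>{safeText}</prosody>" +
            "</speak>";
    }
}
```

The returned string can be passed straight to `synthesizer.SpeakSsml(...)`.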

A big thank you to Microsoft for open sourcing some of their stuff. The original article can be found here.
