Paper Review: Reducing BGP Data Redundancy with MVP

Mingwei Zhang

Title: Measuring Internet Routing from the Most Valuable Points

Authors: Thomas Alfroy, Thomas Holterbach, Thomas Krenc, KC Claffy, Cristel Pelsser

Paper link: https://arxiv.org/abs/2405.13172

This paper introduces a novel method to quantify and minimize data redundancy among BGP vantage points (VPs). The authors implemented their algorithm in a system called Most Valuable Points (MVP) and demonstrated that MVP can reduce the number of vantage points needed for BGP data analysis, thereby reducing the volume of data that must be processed. The system is available online, but unfortunately, its live data query feature was unavailable as of June 25, 2024.

Problem: too much similar BGP data

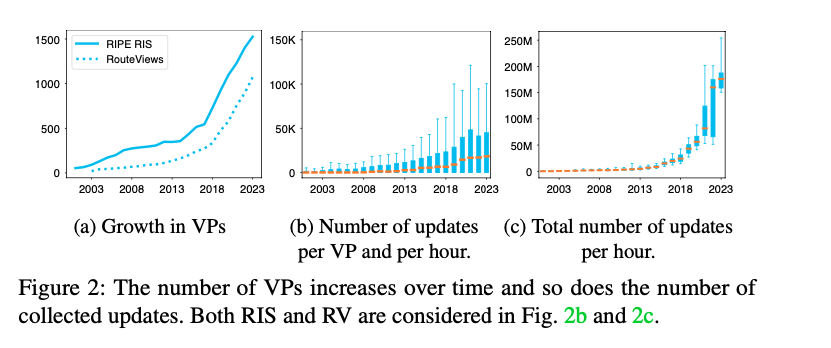

The core problem highlighted in this paper is that the most commonly used public BGP data archives (RIS and RouteViews) have so many collectors and vantage points (BGP peers) that they produce an excessive amount of BGP data. The data volume has grown significantly in recent years. That alone isn't inherently bad, but, as the authors argue, much of this data is redundant. The high volume may also be linked to the limited number of collectors used in some of the research studies the authors surveyed, potentially leading to suboptimal results and discoveries.

Solution: check redundancy and pick the best ones

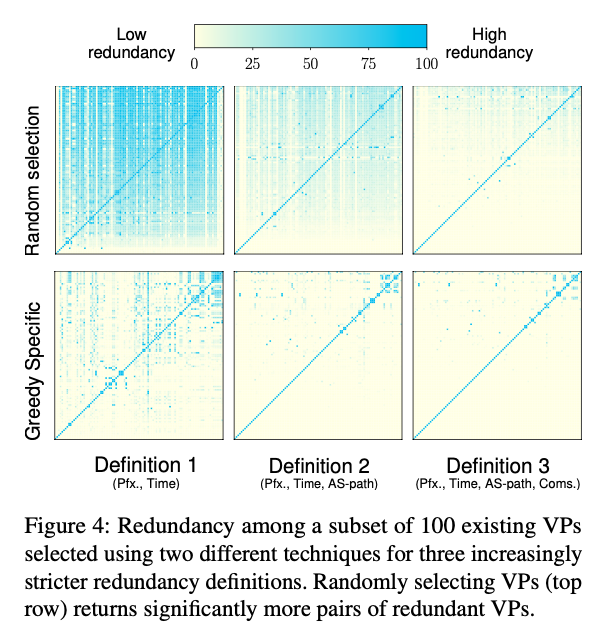

The authors propose a two-part solution: an algorithm that computes a pairwise data redundancy score for any two vantage points, and another algorithm that selects an optimized combination of vantage points.
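The paper's exact redundancy score is not reproduced here. As a minimal sketch, assume each VP has already been summarized as a numeric feature vector (the values below are made up) and treat the Euclidean distance between two VPs' vectors as an inverse redundancy measure: the closer two VPs are, the more redundant their data. The function names are hypothetical.

```python
import math
from itertools import combinations


def euclidean(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def pairwise_distances(features):
    """Return {(vp_a, vp_b): distance} for every unordered pair of VPs.

    A small distance means the two VPs observed similar routing data,
    i.e. one of them adds little on top of the other.
    """
    return {
        (a, b): euclidean(features[a], features[b])
        for a, b in combinations(sorted(features), 2)
    }


# Hypothetical per-VP feature vectors (made-up numbers for illustration only).
features = {
    "vp1": [10.0, 2.0, 0.0],
    "vp2": [10.0, 2.0, 1.0],
    "vp3": [0.0, 8.0, 5.0],
}
dist = pairwise_distances(features)
print(min(dist, key=dist.get))  # ('vp1', 'vp2')
```

In this toy data, vp1 and vp2 form the most redundant pair, so keeping both would add little information.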

The core idea is very succinctly summarized by the authors as:

MVP relies on a greedy algorithm that considers both data redundancy and its volume to build a set of the most valuable VPs. MVP first adds the VP with the lowest average Euclidean distance to all other VPs, and then greedily adds the VP that balances minimal redundancy with already selected VPs and minimal additional data volume that the VP brings.
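The greedy procedure in the quote can be sketched roughly as follows. This is a hedged illustration, not MVP's implementation: the feature vectors, the data volumes, and especially the trade-off score (distance to the nearest already-selected VP minus a hypothetical `volume_weight` times the candidate's volume) are assumptions chosen to make the two competing goals visible.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def greedy_select(features, volume, k, volume_weight=0.1):
    """Greedily pick up to k VPs.

    features: {vp: feature vector}; volume: {vp: data volume, any unit}.
    The scoring rule is a hypothetical stand-in, not MVP's exact formula.
    """
    vps = sorted(features)
    if not vps or k <= 0:
        return []
    if len(vps) == 1:
        return vps[:1]
    d = {(a, b): euclidean(features[a], features[b]) for a in vps for b in vps}

    # Step 1: start with the VP that has the lowest average distance to all others.
    def avg_dist(v):
        others = [u for u in vps if u != v]
        return sum(d[(v, u)] for u in others) / len(others)

    selected = [min(vps, key=avg_dist)]

    # Step 2: repeatedly add the VP that is least redundant with the selected set
    # (largest distance to its nearest selected VP), penalized by its data volume.
    while len(selected) < min(k, len(vps)):
        candidates = [v for v in vps if v not in selected]

        def score(v):
            nearest = min(d[(v, s)] for s in selected)
            return nearest - volume_weight * volume[v]

        selected.append(max(candidates, key=score))
    return selected


# Hypothetical one-dimensional features and volumes.
features = {"vp1": [0.0], "vp2": [1.0], "vp3": [10.0]}
print(greedy_select(features, {"vp1": 5, "vp2": 5, "vp3": 5}, k=2))    # ['vp2', 'vp3']
print(greedy_select(features, {"vp1": 5, "vp2": 5, "vp3": 100}, k=2))  # ['vp2', 'vp1']
```

With equal volumes, the sketch adds vp3, the VP least redundant with vp2. When vp3 is made expensive, the volume penalty outweighs its distance advantage and the cheaper but more redundant vp1 is picked instead.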

Unsurprisingly, MVP achieves its goal of reducing redundancy and data volume by selecting the best vantage points without sacrificing data coverage.

Some concerns

1. Consuming data from all collectors is not too bad

The main motivation for this work is that there is too much data to process from all vantage points. The number of vantage points has indeed grown quickly, as the authors mention, and we have heard from archive operators who want to slow down this expansion. However, the available bandwidth, computing power, and tooling for BGP data processing have also improved significantly over the years. I run several BGPKIT data pipelines that have processed all RIB dumps and updates from all collectors for years on a single VM (a powerful one, but still consumer-grade), all available online here. These pipelines have had no problems with the data volume, nor do they struggle to keep up with periodic processing. Using all available data instead of selecting a subset does not seem outrageous.

2. MRT files are archived per collector, not per vantage point

Of course, we cannot assume all users have enough resources to consume data from all collectors, nor should we assume the data collectors can keep up if consumers demand too much. So optimizing data coverage while reducing redundancy to minimize the required data volume is a valid goal.

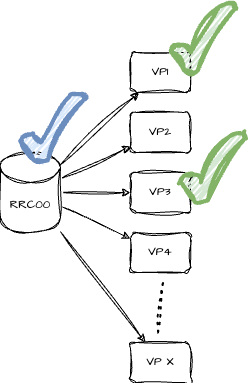

However, using fewer vantage points does not mean applications will use less data. The authors do not mention that BGP data from these public collectors is archived as files per collector rather than per vantage point. Fetching BGP data for one vantage point on a collector therefore costs the same as fetching data for all vantage points on that collector, so applying MVP at the vantage-point level does not necessarily reduce a project's data consumption.

For example, suppose rrc00 has X VPs and MVP chooses vp1 and vp3 as the optimal vantage points. The application still needs to download the RIB dump or updates for all of rrc00, and then add filtering logic to drop messages from every vantage point other than vp1 and vp3. Depending on the application logic, filtering out some VPs and processing the rest may cost about as much as processing all VPs.
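The per-collector cost argument can be illustrated with a toy model. This sketch uses a hypothetical, simplified element type (just a VP name and a prefix) rather than a real MRT parser, and the VP-to-collector mapping and prefixes (documentation ranges) are made up. It shows that the set of files to download depends only on which collectors host the selected VPs, and that filtering still scans every element in the collector's dump.

```python
from collections import namedtuple

# Hypothetical, simplified BGP element: the VP (peer) that sent it and the prefix.
Elem = namedtuple("Elem", ["vp", "prefix"])


def collectors_to_fetch(selected_vps, vp_to_collector):
    """Archives are per collector, so we must fetch every collector
    that hosts at least one selected VP."""
    return {vp_to_collector[vp] for vp in selected_vps}


def filter_to_vps(elems, selected_vps):
    """Scan every element of a collector's dump and keep only those from
    the selected VPs. Returns (kept elements, number of elements scanned)."""
    wanted = set(selected_vps)
    kept = [e for e in elems if e.vp in wanted]
    return kept, len(elems)


# Hypothetical example mirroring the rrc00 scenario in the text.
vp_to_collector = {"vp1": "rrc00", "vp2": "rrc00", "vp3": "rrc00"}
rrc00_dump = [
    Elem("vp1", "192.0.2.0/24"),
    Elem("vp2", "192.0.2.0/24"),
    Elem("vp3", "192.0.2.0/24"),
    Elem("vp2", "198.51.100.0/24"),
]

print(collectors_to_fetch(["vp1", "vp3"], vp_to_collector))         # {'rrc00'}
print(collectors_to_fetch(["vp1", "vp2", "vp3"], vp_to_collector))  # {'rrc00'}
kept, scanned = filter_to_vps(rrc00_dump, ["vp1", "vp3"])
print(len(kept), scanned)  # 2 4
```

Selecting two of the three VPs still requires the same single rrc00 file, and the filter reads all four elements to keep two.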

Conclusions

In conclusion, the paper "Measuring Internet Routing from the Most Valuable Points" improves BGP data analysis by using the MVP algorithm to reduce redundancy. Although current methods of archiving BGP data per collector instead of per vantage point may limit its immediate use, the paper's insights and techniques are valuable for future research. The algorithm for detecting redundancy is especially useful in practical applications, and the information on the growth of public collectors provides a clear view of the current BGP data collection landscape.

Written by

Mingwei Zhang

Senior System Engineer at Cloudflare, founder and maintainer of BGPKIT. Build tools and pipelines to watch BGP data across the Internet.