Implementing First-Person-View Movement in Godot using C++

Zacryon


GitHub repository and specific commit corresponding to this blog post:

https://github.com/Zacrain/a-fancy-tree commit hash: f1b6bd8.

One important feature in my endeavour to create a fancy tree walking-simulator-like game experience is to be able to walk from a first-person view, like in first-person shooters. But since this is not going to be a shooter (yet), it's just the first-person view movements I would like to have.

An important disclaimer: There may be better ways to implement such a feature. I haven't really looked into it, as I wanted to come up with something clever myself. So I don't claim that this is the way or even the best one. If you're interested in that, it might make sense to see how other people did it, make comparisons, and draw your own conclusions based on that.

Doing this myself might be reinventing the wheel, which is generally frowned upon in software development, but I want to handle most aspects of this project on my own. This doesn't mean coding an entire engine from scratch, but rather focusing on the parts that challenge me or that I'm interested in learning. So, my decisions on what to develop myself and where to rely on external knowledge might seem somewhat arbitrary. As this is a learning project for me, I think it will be fine.

Getting and Processing User Inputs

There are two kinds of inputs I'm intending to use: one for ground movement, the mere translational part, so to speak, and one for view orientation, the rotational part.

As I am currently working on and developing for PC, this means using keyboard inputs for ground movement (the classic WASD) and the mouse for orientation (dragging your mouse will change the view orientation, looking up-down and sideways). However, in the future, I want players to be able to set those input sources themselves, maybe even using a gamepad. That's where Godot's InputMap comes in handy. This allows us to define specific actions (like moving forward, left, right, back, jumping) independently of the specific input source (like a pressed key from the keyboard) as it handles a mapping between those actions and their sources. It's basically a key-value pairing (key in its general meaning in computer science, not meant as a key on your keyboard now). That way, we can decouple our code from specific mouse buttons or gamepad buttons or wherever those signals come from.
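To make the decoupling idea concrete, here is a minimal sketch of an action-to-input mapping in plain C++. It does not use Godot at all: the ActionMap class, its methods, and the integer input codes are hypothetical stand-ins chosen for illustration, and Godot's InputMap is certainly more elaborate than this.

```cpp
#include <cassert>
#include <string>
#include <unordered_map>
#include <unordered_set>

// Hypothetical stand-in for an input map: action name -> set of raw input codes.
// The integer codes are made up for this example; they are not Godot key codes.
class ActionMap {
public:
    // Binds one more raw input code to an action. An action may have several.
    void bind(const std::string &action, int input_code) {
        assert(!action.empty());
        bindings_[action].insert(input_code);
    }

    // True if any input bound to `action` is among the currently pressed inputs.
    bool is_action_pressed(const std::string &action,
                           const std::unordered_set<int> &pressed) const {
        const auto it = bindings_.find(action);
        if (it == bindings_.end())
            return false; // Unknown action: nothing is bound to it.
        for (int code : it->second)
            if (pressed.count(code) > 0)
                return true;
        return false;
    }

private:
    std::unordered_map<std::string, std::unordered_set<int>> bindings_;
};
```

Game code only ever asks for "move_forward"; whether that is a keyboard key or a gamepad button is decided by whoever fills in the bindings.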

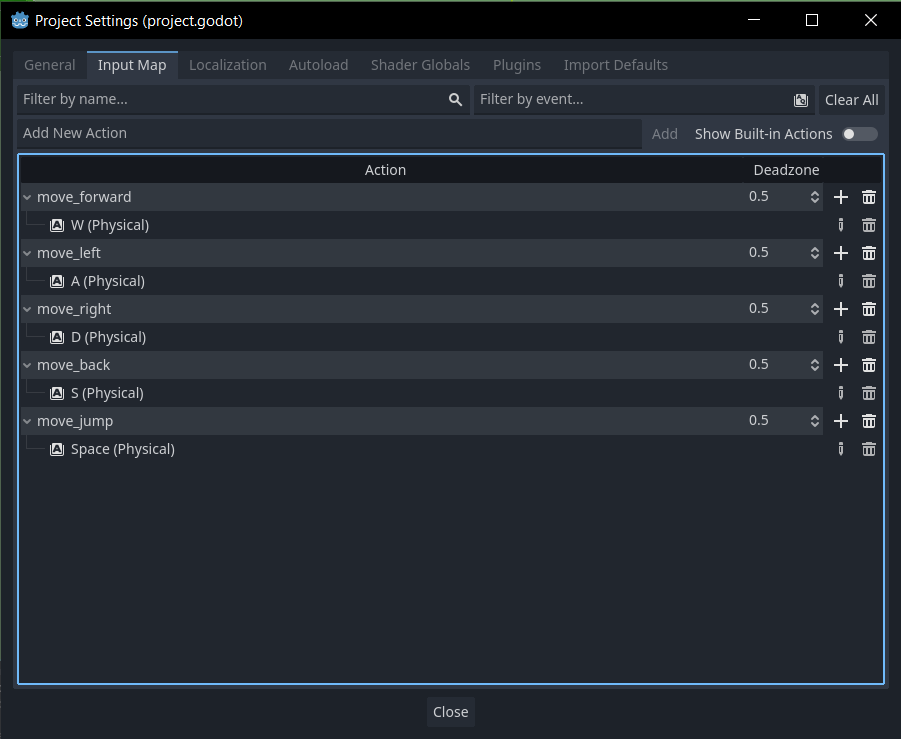

All we need is a name for our action, and the input can then be mapped arbitrarily. (For now, I mapped it in Godot's project settings. In the future, I'll add an options menu for players.) In my case, that looks like this:

I named the actions for movement move_forward, move_left, and so on. There is still something missing though: our view rotation using mouse movements. Sadly, it seems that Godot's input map does not support changes in the mouse axes. That might change in the future, but for now, getting the signal when you move your mouse is handled differently. I will detail that later on. First, I'll explain how I implemented the ground translation.

Since those action names are currently set once, I wanted to have them as a hardcoded property in the member variables of a class that will process these movement inputs. (At some point, I might make that dynamic and therefore omit hardcoding. For now, this will suffice.) So, I created a class called FPVPlayer, meaning "First Person View Player," and wanted to include some of Godot's own String objects in it.

How to String or How Not to String

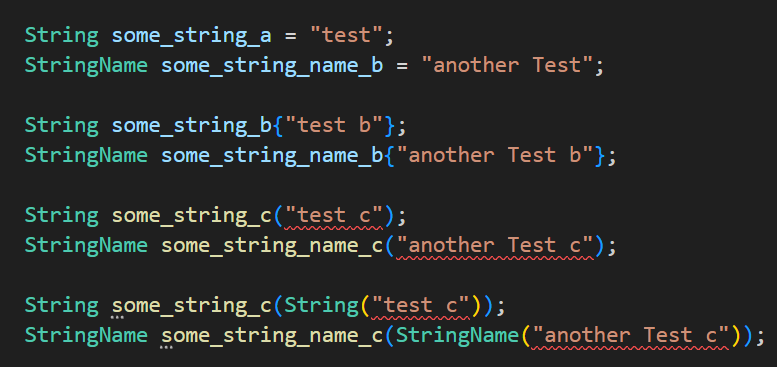

That's where I encountered minor issues. Godot's implementation of strings is not exactly the same as what you would expect from the C++ standard library's std::string class, but the problem I ran into looks like a matter of C++ syntax rather than of Godot. Specifically, initialising a String member variable inside the class definition using direct initialisation like:

String some_string("Test");

does not work. We'll get an error like expected a type specifier C/C++(79) from IntelliSense and expected identifier before string constant as well as expected ',' or '...' before string constant from the compiler. As far as I can tell, the cause is not a missing constructor but a C++ rule: a default member initialiser has to be written with curly braces or an equals sign, and a parenthesised one is parsed as the start of a member function declaration. That leaves us with two options: uniform initialisation and copy-initialisation. The same goes for Godot's StringName class, which I stumbled upon; it is a special kind of string. I'll say a few words about that later. So, as an overview, I made a screenshot of what is working, and what isn't:

As you can see, uniform initialisation using curly braces works as well as copy initialisation does. Direct initialisation, also with an anonymous, direct-initialised object as a parameter, does not.
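The same behaviour can be reproduced without Godot. The sketch below uses std::string as a stand-in for String and StringName, and the ActionNames struct is a hypothetical example, not the actual FPVPlayer class. It shows which forms are accepted for a default member initialiser and where parentheses are allowed again.

```cpp
#include <cassert>
#include <string>

// std::string stands in for Godot's String/StringName in this sketch.
struct ActionNames {
    // Uniform (brace) initialisation: accepted as a default member initialiser.
    std::string move_forward{"move_forward"};

    // Copy-initialisation: accepted as well.
    std::string move_back = "move_back";

    // Parentheses are not accepted in this position. The compiler reads the line
    // as the start of a member function declaration and rejects it:
    // std::string move_right("move_right");

    // In a constructor's member initialiser list, parentheses work again.
    std::string move_left;
    ActionNames() : move_left("move_left") {}
};
```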

String versus StringName

The StringName class implements so-called "string interning". String interning is an optimization method that makes operations on strings (like comparisons) very fast. This works, roughly speaking, by creating immutable, unique strings that are placed in memory only once and then referenced wherever needed. Comparison operations are then reduced to pointer equality checks, which avoids the O(n) worst-case comparison cost of typical strings.

The classic String doesn't come with that advantage but has other uses, like being mutable. Godot's String class comes with some optimizations but is no match for the interned StringName class in terms of comparison speed.
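As an illustration of the principle, here is a minimal interner in standard C++. The Interner class and the same_name() helper are hypothetical names for this sketch; Godot's StringName is implemented differently, so this only demonstrates why comparisons become pointer checks.

```cpp
#include <cassert>
#include <string>
#include <unordered_set>

// Hypothetical minimal interner; not how Godot implements StringName.
class Interner {
public:
    // Returns a pointer to the single stored copy of `text`.
    // Equal texts always yield the same pointer.
    const std::string *intern(const std::string &text) {
        // insert() leaves the set unchanged if an equal string already exists
        // and returns an iterator to the stored element either way.
        return &*pool_.insert(text).first;
    }

private:
    // Pointers to elements of an unordered_set stay valid when the set rehashes,
    // so the returned pointers remain usable for as long as the interner lives.
    std::unordered_set<std::string> pool_;
};

// Comparing two interned names is a single pointer comparison.
inline bool same_name(const std::string *a, const std::string *b) {
    assert(a != nullptr && b != nullptr);
    return a == b;
}
```

The cost of hashing and storing the text is paid once when a name is interned; every later comparison is O(1).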

And what do we need to check whether our action names are relevant for the current user input? Correct: we need to compare their names. So using StringName becomes an obvious choice for that, which is probably why Godot also enforces its use when processing inputs using methods like is_action_just_pressed(). This method allows us to check whether our action — as defined in our InputMap — was just pressed.

Having the method alone and knowing how to use Godot's string classes is a start, but that alone will not help us understand how we can use that kind of information to move our character. For that, we need some further definitions and concepts.

Coordinate Systems

There are entire textbooks about coordinate systems and the various kinds of fancy things you can do with them. I know because I'm a roboticist and have read such textbooks (and papers, and lecture slides and idk what else but it feels like there must be a third item on this list). I'll spare you that. If you must know (and you should know how to deal with them if you want to follow along), go get some good math resources on the topic.

So now just the basics:

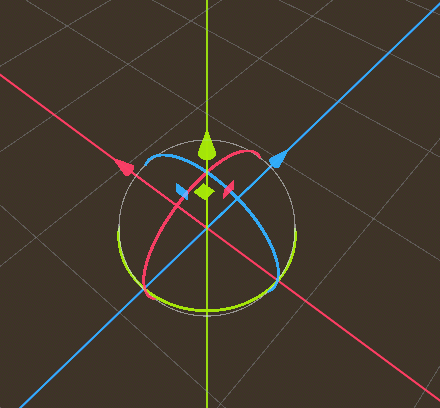

Godot's coordinate system, if I am not mistaken, is right-handed, with the axes defined as follows:

x-axis: "side" axis (positive x points to the right side, to be precise)

y-axis: "up" axis

z-axis: "backwards" axis
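If you want to check the handedness yourself, the cross product helps: in a right-handed system, x cross y yields +z. The following sketch uses a tiny hand-written Vec3 type (a stand-in, not Godot's Vector3), and the constant names are my own labels for the axis convention listed above.

```cpp
#include <cassert>

// Minimal stand-in for a 3D vector; not Godot's Vector3.
struct Vec3 {
    double x, y, z;
};

constexpr bool operator==(const Vec3 &a, const Vec3 &b) {
    return a.x == b.x && a.y == b.y && a.z == b.z;
}

// Standard cross product.
constexpr Vec3 cross(const Vec3 &a, const Vec3 &b) {
    return {a.y * b.z - a.z * b.y,
            a.z * b.x - a.x * b.z,
            a.x * b.y - a.y * b.x};
}

constexpr Vec3 RIGHT{1, 0, 0};    // +x
constexpr Vec3 UP{0, 1, 0};       // +y
constexpr Vec3 BACK{0, 0, 1};     // +z
constexpr Vec3 FORWARD{0, 0, -1}; // -z

// Right-handed: x cross y gives +z. This is checked at compile time.
static_assert(cross(RIGHT, UP) == BACK, "axes are not right-handed");
```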

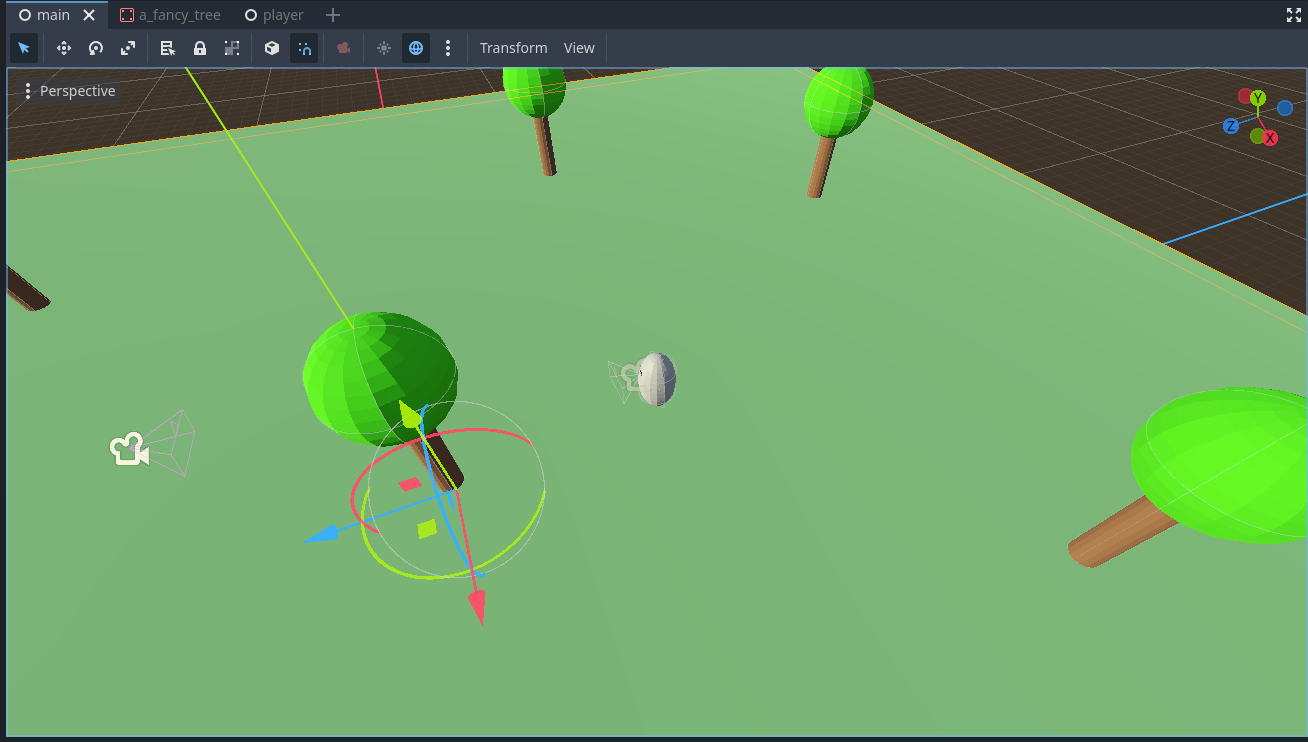

Here are some pictures:

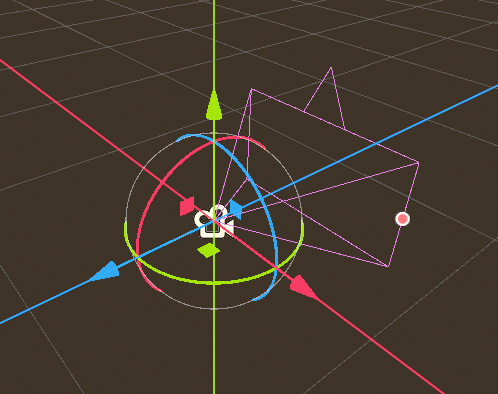

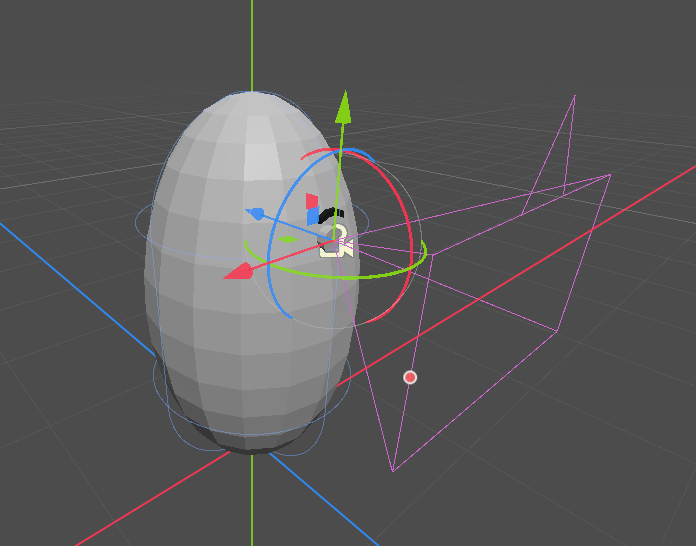

The red arrow points towards the positive x-direction. The green one is for the positive y-direction, and the blue arrow points towards the positive values of the z-axis.

(The circles are gizmos for rotations around the specific axis, and the small coloured squares are gizmos for translating an object.)

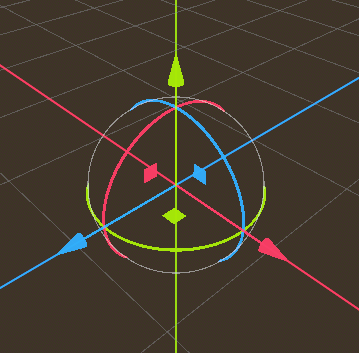

Another picture from the other side.

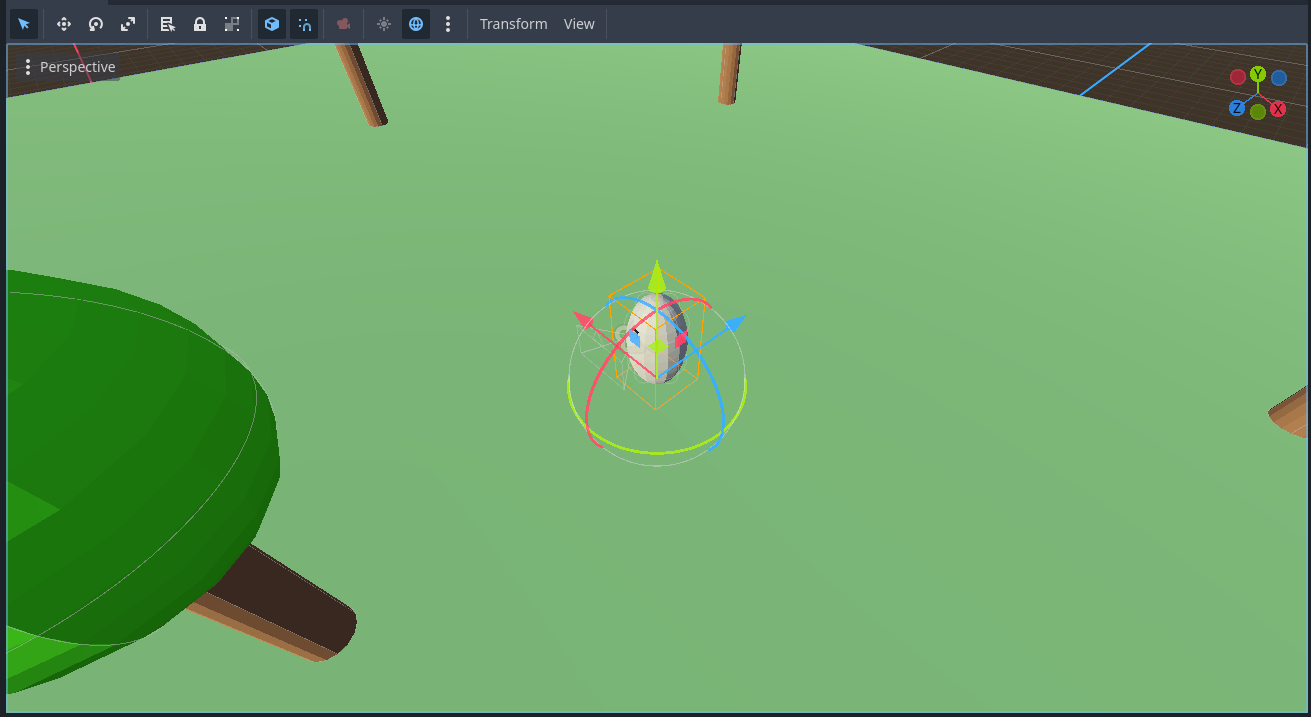

In general, you could use those axes as you like and define them on your own, although it is a good idea to have a convention which you follow strictly because this helps to avoid issues due to conflicting coordinate system conventions. At first, I was thinking of using the positive z-axis as the forward direction. But it seems that Godot handles it the other way around, which becomes apparent with the camera's coordinate system. See for yourself, a default instantiated Camera3D node, and the local coordinate system toggled on:

The z-axis (blue arrow) points "inside" the camera, not outside. I guess the intuition behind it is that objects further away from the camera have a smaller value (they also appear smaller in depth), while their z-value increases towards zero the closer they get to the camera. Conventions can truly be nasty, but that's a matter of preference. It's just important to stick to a common way of labeling axes and coordinate systems.

As the world coordinate system of Godot (the very basis and universal point of reference for every other object and their local coordinate systems in the game world) has already defined the y-axis as the up-axis, I decided to stick with Godot's coordinate convention.

So, all that's left to do is to put those coordinate systems to work and move our player object!

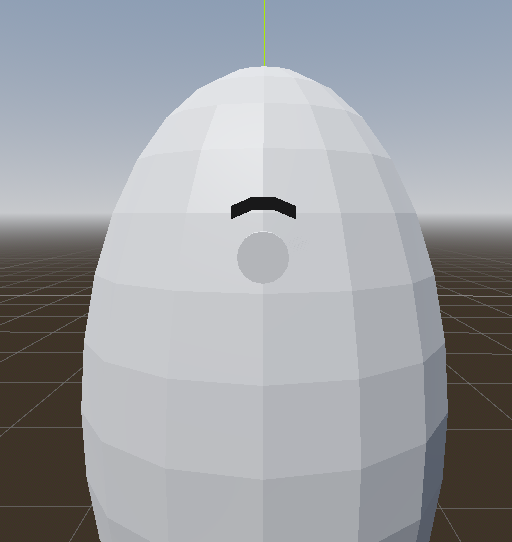

Wait, we don't have a player object yet. Or do we? Hmm... I see something, something round with one eye and a very fancy eyebrow. Can you see it too?

Meet Hobba

This is Hobba. I created them quickly in Blender; made from a stretched sphere, a cylinder for an eye, and a modified cube for an eyebrow. This is my placeholder player character object. At first, I wanted to give them two eyes, but after seeing them with one, I found it hilarious. (Also, it is more "realistic" now to just have one camera placed in the eye. Immersion, yeah! 😎) I don't really know why I called them Hobba, though. It just felt right.

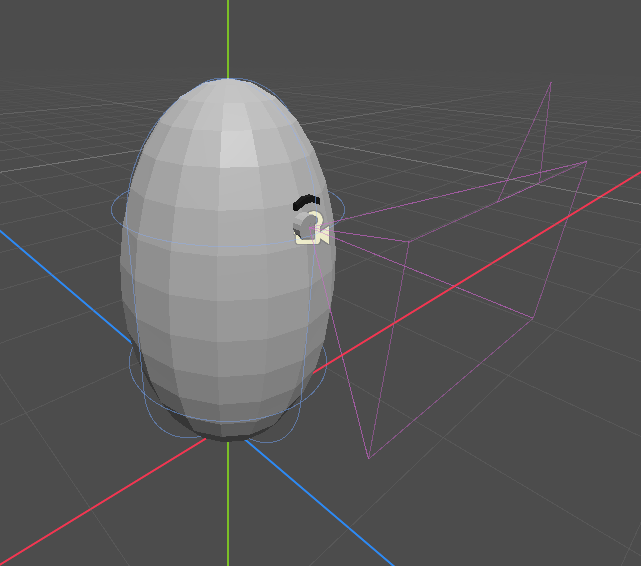

As you can see below, I attached a capsule-shaped collider around Hobba (which allows us to do collision detection and let Hobba bump into stuff as well as not fall through the ground) and placed a camera directly in their eye. (I assure you, they weren't hurt!)

Ground Movement Control

With our player character model in place and a basic understanding of input mappings and coordinate systems, let's get to the juicy stuff: coding the ground movement translational control.

Physics processing, which includes the movement of bodies, should be done in the _physics_process(double delta) method, which can be overridden by any class that inherits from Node. As my FPVPlayer class inherits from CharacterBody3D, this requirement is already fulfilled. (By the way, I placed that class as the root node in the Hobba scene.)

Here is the complete method; I'll explain it afterwards:

    void FPVPlayer::_physics_process(double delta) {
        // Reset movement. If no button is pressed, movement should stop and therefore be zero.
        move_direction.zero();

        // ***** Ground Movement & Jump *****

        // Reasoning for using += and -= below instead of a single = :
        // If the player presses two opposite keys (almost) simultaneously, they should cancel each other out instead of
        // having the last pressed key overwrite the previous one.
        if (input.is_action_pressed(action_name_move_forward))
            move_direction.z -= 1;
        if (input.is_action_pressed(action_name_move_back))
            move_direction.z += 1;
        if (input.is_action_pressed(action_name_move_left))
            move_direction.x -= 1;
        if (input.is_action_pressed(action_name_move_right))
            move_direction.x += 1;
        if (input.is_action_pressed(action_name_move_jump)) // bunny hop?
            move_direction.y += 1;

        // Normalize vector if inequal to zero. Otherwise we would destroy the universe. (Division by zero.)
        if (move_direction != Vec3_ZERO)
            move_direction.normalize();

        // Get the move direction related to the global coordinate system. This will make the succeeding computations
        // perform in the global coordinate system, which seems to be used by set_velocity() and move_and_slide();
        move_direction = to_global(move_direction) - get_transform().get_origin();

        // Fall back to floor when in air.
        if (!is_on_floor())
            target_vel.y -= (move_fall_speed * delta);
        else // Jump. Bunny hop if is_action_pressed() is used instead of is_action_just_pressed()
            target_vel.y = move_direction.y * move_jump_speed;

        // Set Ground movement velocity.
        target_vel.x = move_direction.x * move_speed;
        target_vel.z = move_direction.z * move_speed;

        set_velocity(target_vel);
        move_and_slide();
    }

The first thing to do is to reset the move_direction variable (which is a Vector3) as it stores information about the direction the player wants to move in. After that, several if-branches check which of our input actions was pressed. We get that information by calling the is_action_pressed() method of our Input object. By the way, that one is a global singleton (there is only one instance of that object for all of Godot), which I retrieve and set during the construction of the FPVPlayer class like this:

/** @brief Constructor, sets the singleton reference upon construction. */

FPVPlayer() : input(*Input::get_singleton()) {};

Where input (lower case 'i') is a member variable of my class, which will then hold a reference to the singleton. That one can be retrieved by calling Input::get_singleton().

The move direction vector is then set according to our desired movement direction. To go forward, the z-coordinate of the vector must be set to -1, and +1 for backward. Similarly, for left and right movement using the x-axis. I realised jumping as a single +1 along the y-axis.
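The accumulation logic can be tried out in isolation. The sketch below is plain C++ with a hand-written Vec3 and a hypothetical PressedActions struct in place of Godot's Input singleton. It only covers the ground part (x and z), whereas my method above also adds the jump along y before normalising.

```cpp
#include <cassert>
#include <cmath>

// Minimal stand-in for a 3D vector; not Godot's Vector3.
struct Vec3 {
    double x = 0, y = 0, z = 0;
};

// Hypothetical snapshot of which movement actions are currently pressed.
struct PressedActions {
    bool forward = false, back = false, left = false, right = false;
};

// Builds a unit-length ground direction in local coordinates (-z is forward).
// Opposite actions cancel each other out because they add and subtract.
Vec3 ground_direction(const PressedActions &p) {
    Vec3 d;
    if (p.forward) d.z -= 1;
    if (p.back)    d.z += 1;
    if (p.left)    d.x -= 1;
    if (p.right)   d.x += 1;

    // Normalise only if the vector is not zero, to avoid dividing by zero.
    const double len = std::sqrt(d.x * d.x + d.z * d.z);
    if (len > 0) {
        d.x /= len;
        d.z /= len;
    }
    return d;
}
```

Without the normalisation, pressing forward and right together would give a vector of length sqrt(2), so diagonal movement would be about 41% faster than straight movement.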

The resulting vector (if not zero) has to be normalized. (I defined the Vec3_ZERO constant myself, as it curiously seems to be missing in Godot's C++ API. This is curious because it has such constants for GDScript and C#.) Normalized means its length is exactly 1. This is important, as the movement speed towards the movement direction can then be easily scaled by the lines:

target_vel.x = move_direction.x * move_speed;

target_vel.z = move_direction.z * move_speed;

Where target_vel is again a Vector3 object and move_speed is just a double determining the translational velocity. There is some stuff in-between that needs more explanation:

In the end, I'm calling these two methods:

set_velocity(target_vel);

move_and_slide();

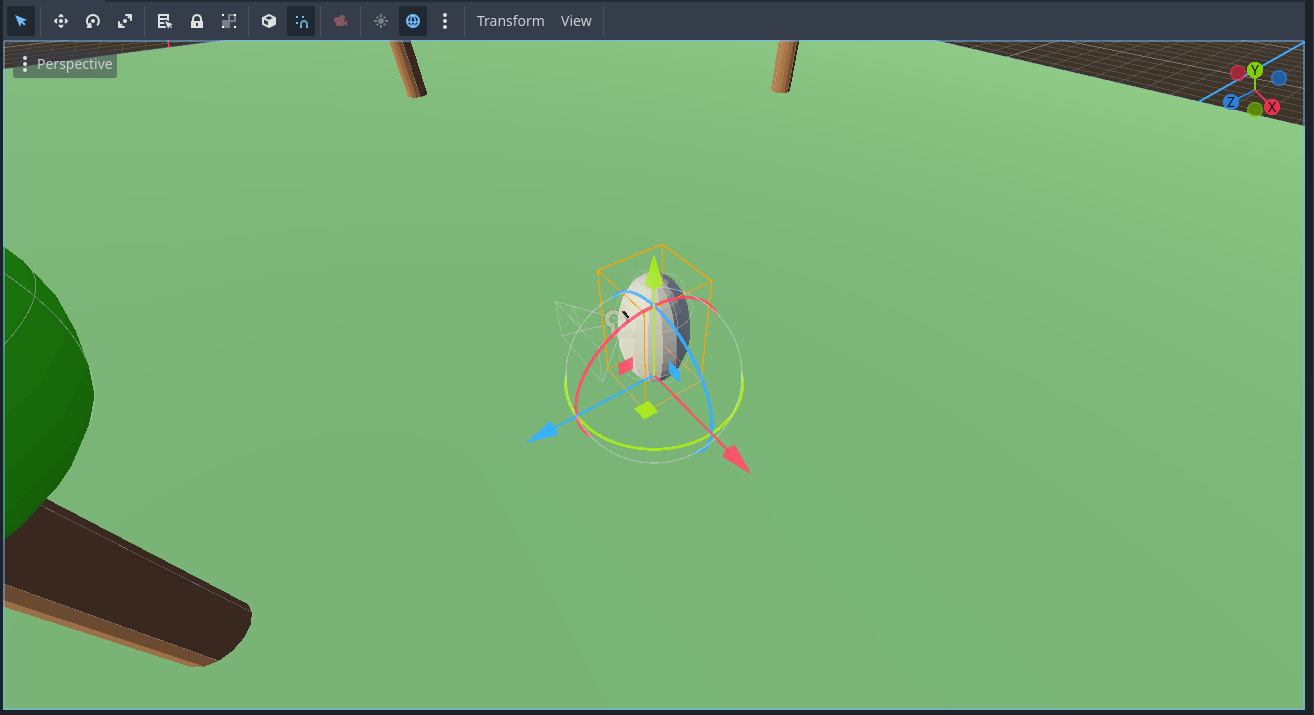

As far as I understand, those two methods operate in the global coordinate system, so in the world's frame of reference. This is problematic since the local coordinate system of our player character Hobba is always oriented such that their eye (the camera) looks towards the local negative z-direction and basically never changes. It just changes in relation to the world coordinates. I'll make this clearer with screenshots:

Here you see how the axes are directed in world coordinates. However, they are still displaced, as the true origin lies somewhere else, which is at coordinates (0,0,0):

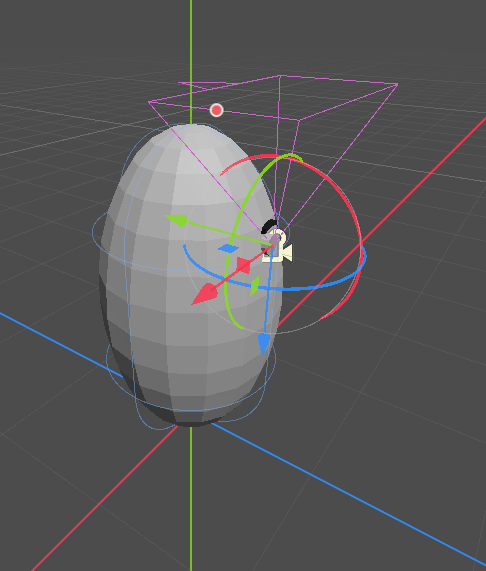

If we toggle the box symbol at the top bar right beside the magnet symbol, we can see the local coordinate system. As we can see now, the axes of Hobba's local coordinates are oriented (and translated) differently from the world coordinate system:

In this picture, it is essentially a 180° turn around the y-axis. In global coordinates, the blue arrow pointed to the bottom left of the screenshot. Now, it points to the upper-right of the picture. These rotation differences will change dynamically depending on how we, as players, move our character Hobba through the world.

(Oh and yes, I — temporarily! — placed some additional trees for visual pleasure and to have some further points of orientation when testing the controls. There will, of course, only be one fancy tree at the end of this project. 😁)

What we, or at least I, want to do is make the movement occur relative to how we are currently looking through Hobba's eye. This makes it feel more natural and just like players know it from other games with similar movement mechanics.

To achieve that, it is necessary to somehow "translate" the movement direction in the local frame of reference to the global frame of reference. The mathematical term for this is transform; we need to perform a coordinate transformation. Classically, we would use transformation matrices for that, which encode the relative translation and orientation between a successive chain of coordinate systems. For example, we have a chain of coordinate systems towards Hobba's camera node, starting from the world coordinate system: World frame → Hobba's frame → camera frame. Each transition is defined by its own transformation. Getting an overall transformation from one frame to another is then as simple as chaining those transformation matrices up using simple matrix multiplications.
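Here is a small sketch of such a chain, simplified to rotations about the y-axis plus a translation so that it fits in a few lines. The YawTransform type and compose() are hypothetical helpers for this illustration; Godot's Transform3D stores a full 3x3 basis instead of a single angle.

```cpp
#include <cassert>
#include <cmath>

// Minimal stand-in for a 3D vector; not Godot's Vector3.
struct Vec3 {
    double x, y, z;
};

// Simplified rigid transform: a rotation about the y-axis plus a translation.
struct YawTransform {
    double yaw;  // rotation about y in radians
    Vec3 origin; // position of this frame's origin, expressed in the parent frame

    // Rotates a direction from this frame into the parent frame.
    Vec3 rotate(const Vec3 &v) const {
        const double c = std::cos(yaw), s = std::sin(yaw);
        return {c * v.x + s * v.z, v.y, -s * v.x + c * v.z};
    }

    // Maps a point from this frame into the parent frame: rotate, then translate.
    Vec3 apply(const Vec3 &p) const {
        const Vec3 r = rotate(p);
        return {r.x + origin.x, r.y + origin.y, r.z + origin.z};
    }
};

// Chains two transforms. The result maps child coordinates directly into the
// frame that `parent` is expressed in. Rotations about the same axis add up.
YawTransform compose(const YawTransform &parent, const YawTransform &child) {
    return {parent.yaw + child.yaw, parent.apply(child.origin)};
}
```

Applying the composed transform once gives the same point as applying the camera-to-Hobba transform first and the Hobba-to-world transform second.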

We could, of course, do that. Godot already provides some methods for that, like to_global(), which takes a Vector3 as an argument. However, to_global() treats its argument as a point in space: it applies the translation between the coordinate systems as well as the rotation. A direction should only be rotated, not translated, so we would need to perform some additional computations. Technically, any vector can be seen as an arrow originating from the coordinate system's origin (0,0,0) towards a point, even if the vector should describe a direction between two points in space. (If you have difficulties understanding this, I strongly encourage you to learn more about linear algebra, as I don't intend to provide fully qualified mathematical lessons here.)

And I've found a relatively simple way of transforming our movement direction into the global coordinate system without heavily relying on matrix transformations, as most of the information we need is already present:

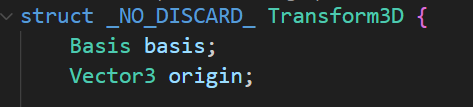

Each 3D node in Godot (and each child class of a Node3D) has a transform property, which holds information about the node's basis (the three vectors defining the directions and orientations of the local coordinate system) and its origin (the translation of the local coordinate system). Strictly speaking, transform is expressed relative to the parent node. As long as no ancestor node is moved or rotated, that is the same as being relative to the world coordinate system, which is what I assume here. This transform property is a Godot type called Transform3D and holds the members Basis basis and Vector3 origin.

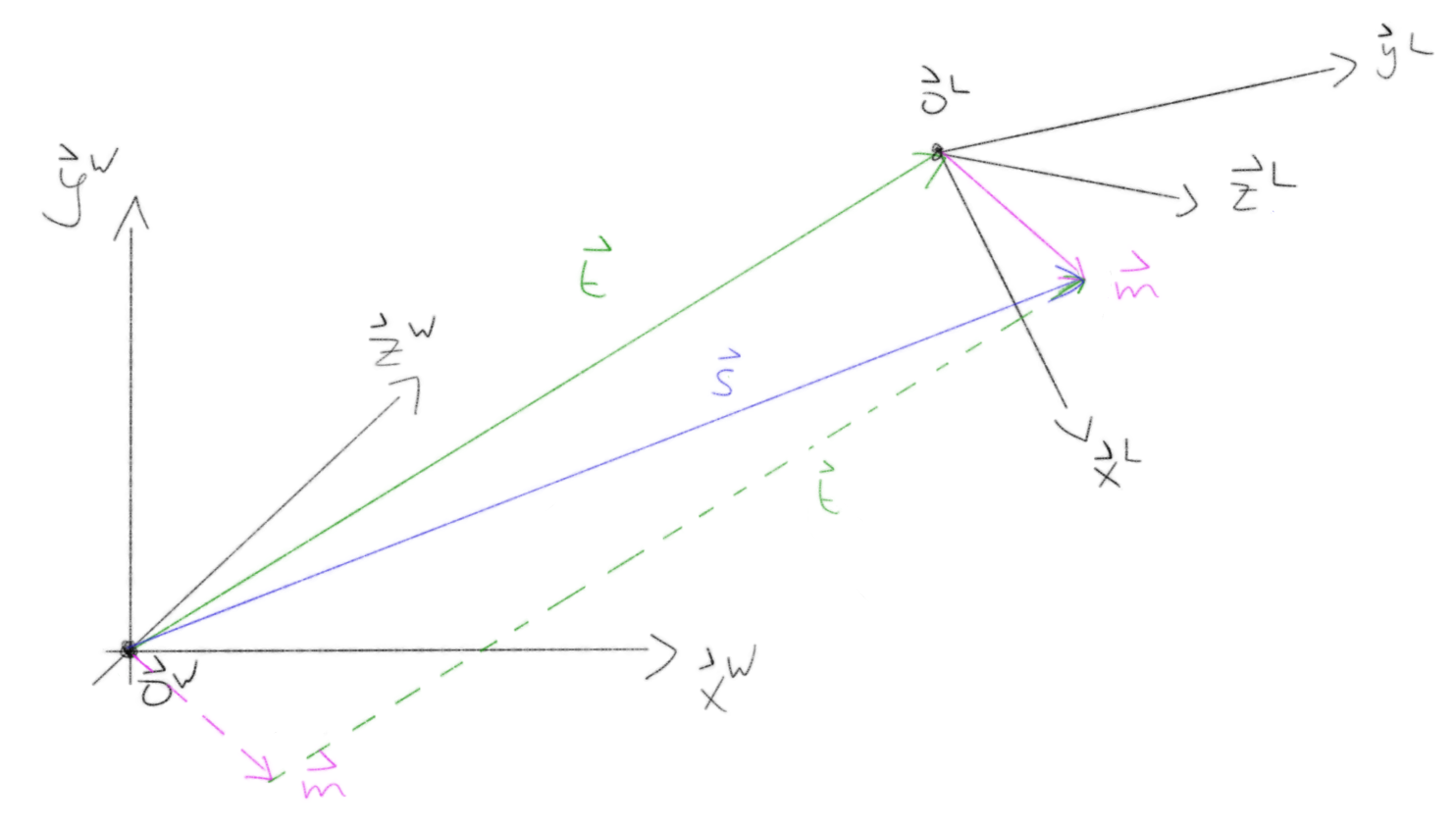

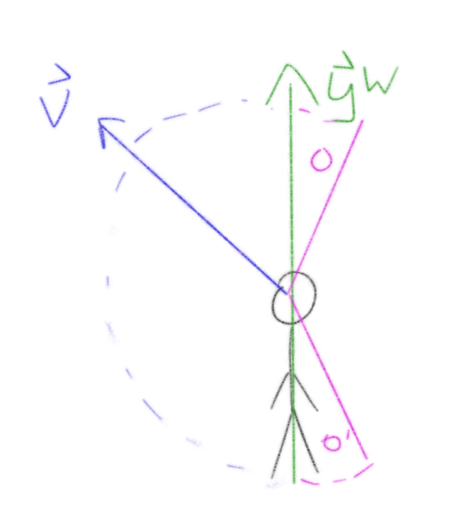

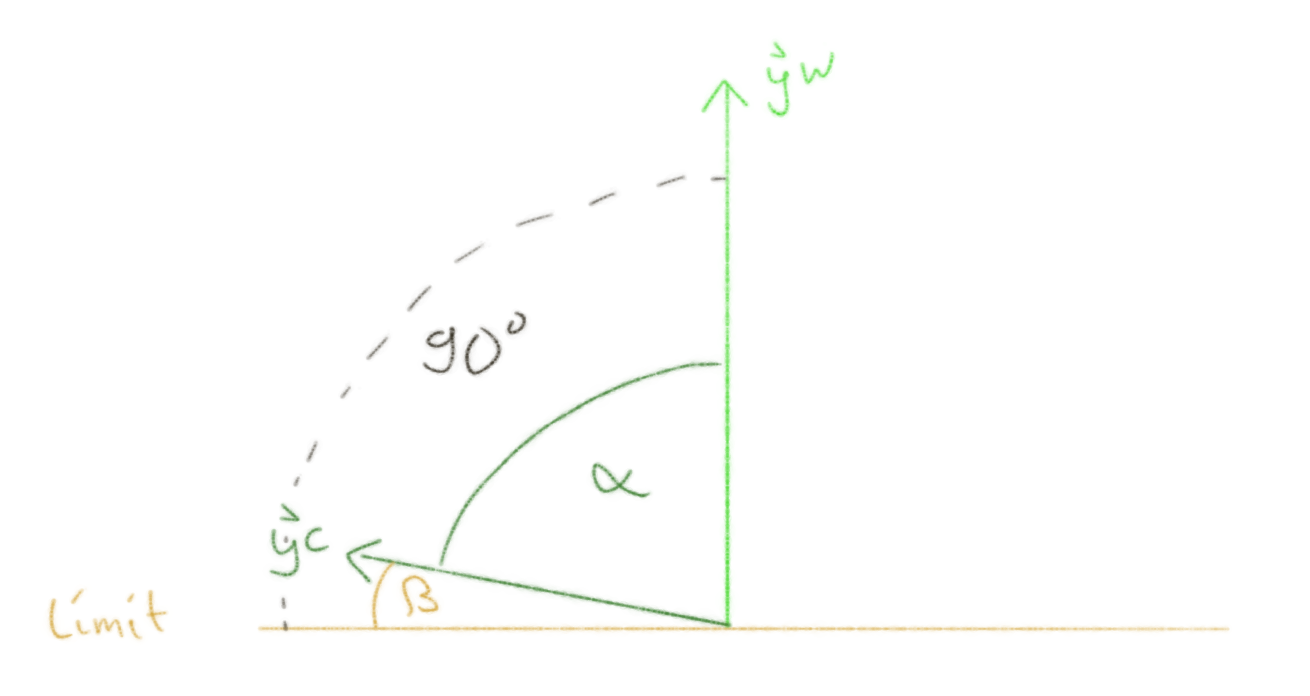

I'll illustrate what that means for us in a sketch:

Let's break this down. Afterwards, I'll explain how I utilized this:

On the left side, you see the world coordinate system with the axes x_W, y_W, and z_W, as well as the world origin point (and origin of the coordinate system) o_W. (The arrow-like lines above the variables are my way of marking them as vectors.) Technically, in this case, those axes and the origin point have the following values:

x_W = (1, 0, 0)

y_W = (0, 1, 0)

z_W = (0, 0, 1)

o_W = (0, 0, 0)

Those axes are the Basis basis of the world coordinate frame and o_W is the origin point Vector3 origin.

On the right side, there is another coordinate system. This is the local coordinate system of an object. For example, Hobba's local coordinate system. It is rotated and translated in some arbitrary way relative to the global coordinate frame.

Although Godot knows the specific values, we, from this visualisation alone, would have to compute them first. So we replace the entries of the basis vectors with variables marking the respective coordinate component:

x_L = (x_L_x, x_L_y, x_L_z)

y_L = (y_L_x, y_L_y, y_L_z)

z_L = (z_L_x, z_L_y, z_L_z)

o_L = (o_L_x, o_L_y, o_L_z)

(Sorry for the ugly text, I hope you can follow. The _x, _y and _z parts at the end just mark the respective axis coordinate of the vector.)

Again, the first three vectors form the basis, while o_L is the origin. But these are now expressed relative to the world coordinate system.

The green vector t points from the world's origin to the origin of our local coordinate frame. From that depiction, we can conclude that the origin of our local coordinate frame is actually the vector t! Meaning: o_L = t

The purple vector m describes our local desired movement direction as set by our player input keys.

The blue vector s points from the origin of the world coordinate frame to the point where our vector m is pointing in its local frame.

The vectors m and t are also drawn again using dashed lines and a parallel shift.

What we have is the vector m in its local coordinate system, as well as the basis and origin of both coordinate systems. What we now need to obtain is the vector m in the global coordinate system, so that we can safely use our set_velocity(target_vel) and move_and_slide() methods. The vector m, when shifted to the global coordinate system, is depicted by the dashed vector m. You might already have an idea of what we need to do in order to compute the values of the dashed vector m. If not, no worries, I'll tell you:

We can compute the vector m by subtracting the vector t from the blue vector s. When we do that, due to the rules of linear algebra, we get exactly our vector m expressed in global coordinates. And even better: we can retain its length and direction that way, even in our global coordinate system! This is because the dashed vector m and the solid vector m are exactly the same; the dashed one has merely been shifted in parallel so that it starts at the world origin.

Isn't math just magical? 🧙

We've got our m and we've got our t; all that's left to do is to compute our vector s and perform the subtraction to get the vector m shifted towards the global coordinate system. As mentioned before, a vector is essentially a line, pointing from the origin of a coordinate system to a specific point. In my sketch, you can see that s and the solid line m are pointing towards the same point in space, just starting from different origins. That means we can compute s by performing a coordinate transformation of vector m to global coordinates. In pseudocode:

m_from_global_origin = s - t

=> m_from_global_origin = to_global_coords(m) - t

And in C++ I have reused the variable move_direction by overwriting it as follows:

move_direction = to_global(move_direction) - get_transform().get_origin();

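You can see that I get the vector s from the sketch by calling to_global(move_direction) with the move direction in local coordinates as a parameter. With get_transform(), we retrieve the Transform3D object of our local coordinate system. We can access its origin vector (remember, o_L = t) by calling get_origin() on the returned object. One caveat: get_transform() is relative to the parent node, while to_global() works with the global transform. The two agree in my scene because Hobba's parent is not moved or rotated; if that ever changes, get_global_transform().get_origin() would be the matching choice.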
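The subtraction trick can be verified numerically outside of Godot. The sketch below uses a hand-written Vec3 and a hypothetical Frame type that only rotates about the y-axis. It shows that transforming m as a point and then subtracting the origin t gives the same result as applying only the rotation to m.

```cpp
#include <cassert>
#include <cmath>

// Minimal stand-in for a 3D vector; not Godot's Vector3.
struct Vec3 {
    double x, y, z;
};

Vec3 operator+(const Vec3 &a, const Vec3 &b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(const Vec3 &a, const Vec3 &b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }

// Hypothetical local frame: a rotation about y plus an origin t, both given in
// world coordinates. It plays the role of Hobba's transform in the sketch.
struct Frame {
    double yaw;  // rotation about y in radians
    Vec3 origin; // the vector t

    // Rotation only: what a pure direction needs.
    Vec3 rotate(const Vec3 &v) const {
        const double c = std::cos(yaw), s = std::sin(yaw);
        return {c * v.x + s * v.z, v.y, -s * v.x + c * v.z};
    }

    // Point transform, s = R * m + t. Stand-in for Node3D's to_global().
    Vec3 to_global(const Vec3 &p) const { return rotate(p) + origin; }
};

// The approach from the text: m_global = s - t = to_global(m) - t.
Vec3 direction_to_global(const Frame &frame, const Vec3 &m) {
    return frame.to_global(m) - frame.origin;
}
```

With a 180° turn around the y-axis, as in the screenshot above, the local forward direction (0, 0, -1) comes out as (0, 0, 1) in world coordinates, regardless of where the frame's origin is.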

The last part of the code that remains unexplained is how I've implemented jumping and falling. We detect whether our player character Hobba is currently touching the ground by calling is_on_floor(). If that's not the case, the target velocity in the y-direction is reduced by the configurable move_fall_speed times the frame delta, so move_fall_speed effectively acts as a downward acceleration. With physics processing running at 60 ticks per second, delta is 1/60 of a second. If Hobba is currently on the ground, the vertical velocity is set to the vertical movement direction multiplied by the jump speed, which starts a jump whenever the jump action is pressed:

    // Fall back to floor when in air.
    if (!is_on_floor())
        target_vel.y -= (move_fall_speed * delta);
    else // Jump. Bunny hop if is_action_pressed() is used instead of is_action_just_pressed()
        target_vel.y = move_direction.y * move_jump_speed;

Since I've used is_action_pressed() before when setting the movement directions, we can actually perform bunny hops by holding the jump button (which I've currently set to space). I like that; it's fun. If I change my mind, or you would like to do it differently, is_action_just_pressed() will help execute the jump just once at the moment the jump button is pressed. This means the movement direction for the jump will not be set when the player keeps the jump button pressed without releasing it first.

I might revisit this at some point in the future, as I would rather have global physics drag the player back towards the ground instead of computing the fall-velocity that way. Using the delta value like this might not be accurate as I'm pretty sure that the jump dynamics are non-linear, which is why a linear approximation is not really a good solution. Also, it would be nice to somehow retain the jump momentum. But for now that'll do.
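For what it's worth, reducing the velocity by a constant times delta on every tick is a simple Euler-style integration of a constant acceleration: the velocity changes linearly, but the height that results from it follows a roughly parabolic arc. The sketch below simulates this in plain C++. The names VerticalState, step_airborne() and simulate_peak_height() are hypothetical, the values are arbitrary, and floor handling is reduced to "stop when the height reaches zero".

```cpp
#include <cassert>

// Hypothetical vertical state of a character; units are meters and seconds.
struct VerticalState {
    double height;   // y position
    double velocity; // y velocity
};

// One physics tick while airborne. `fall_acceleration` plays the role of
// move_fall_speed in the method above.
VerticalState step_airborne(VerticalState s, double fall_acceleration, double delta) {
    assert(delta > 0);
    s.velocity -= fall_acceleration * delta; // velocity changes linearly over time
    s.height += s.velocity * delta;          // so the height follows a parabola
    return s;
}

// Simulates a jump starting on the ground and returns the highest point reached.
double simulate_peak_height(double jump_speed, double fall_acceleration, double delta) {
    assert(fall_acceleration > 0);
    VerticalState s{0.0, jump_speed};
    double peak = 0.0;
    while (true) {
        s = step_airborne(s, fall_acceleration, delta);
        if (s.height <= 0.0)
            break; // Back on the ground.
        if (s.height > peak)
            peak = s.height;
    }
    return peak;
}
```

For a jump speed of 5 m/s and a fall acceleration of 10 m/s², the exact peak of the parabola is 5² / (2 · 10) = 1.25 m. The simulation at 60 ticks per second lands slightly below that, and the gap shrinks as delta gets smaller.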

On a side note, I've declared virtually all variables for the movement controls in the header of my FPVPlayer class for performance reasons. This avoids reconstructing the variables and objects used for computing player movement in each frame. Since we run this method 60 times per second, this is a small but meaningful optimization. As we all know, even little things add up after a while.

Since it's exhausting for me and probably not that helpful to you, I will not explain every minor detail of my code and project decisions here. So, if you would like to get a complete picture of the code, scroll up to the very beginning of this blog post where I've put a link to my GitHub repository for this project and also the corresponding commit hash, so you'll be able to explore the repository in the state of this post yourself. But feel free to reach out to me here in the comments or via GitHub if you have any questions that you think I might be able to answer. :)

Respawn player

Since we are able to move now, Hobba might fall off the edge of my simple scene. In that case, it would be nice to respawn, which is as simple as teleporting Hobba back to the top of the plane. For that, I created another class, which I then set as the root node of the main scene. It is called MainNode, inherits from Godot's Node class, and the important bits happen in the _ready() method, which is called right after all nodes are instantiated in the scene tree, and in the _physics_process() method. Here are the code snippets:

    void MainNode::_ready() {
        // ... other stuff ...
        player_node_ptr = get_node<FPVPlayer>(player_node_path); // May become a nullptr!
    }

    void MainNode::_physics_process(double delta) {
        // TODO: preliminary polling. Use events / signals instead via colliders.

        // If a player node was found (!nullptr) check whether their position is below a certain threshold.
        // if so: reset position to somewhere on the map.
        if (player_node_ptr && player_node_ptr->get_position().y < -10) {
            player_node_ptr->set_position(teleport_pos);
        }
    }

When _ready() is called, I try to get a node pointer for my player character by utilizing the get_node<T>(const NodePath& p_path) method, where the template parameter is the type of the node I would like to retrieve (which is my FPVPlayer class) and the path is its relative path within the scene tree, which I made sure to set correctly. I intend to improve the way the node path is set, as this is currently hardcoded. It would be nice to just drop the node in the inspector of Godot's editor and issue a warning if it is not set correctly, similar to what we have with some of Godot's own nodes when they rely on specific child nodes.

In the _physics_process() method, I first make sure that the player node pointer is set (as it may become a nullptr) and then check whether its position in the y-direction is below a certain threshold, which means the player has fallen about 10 meters below the plane. I leverage short-circuit evaluation: if the pointer is a null pointer, the position check will not be performed.

If the check is performed, I reset the player's position to somewhere on the plane using the set_position() method and a Vector3 variable, which I've predefined with some position.

Currently, the main node actively polls 60 times per second, checking the player's position to see if they need to be reset. This is not ideal. It would be better to use an event system like Godot's signals. We could use colliders for this purpose. So, that is now on the to-do list for the future.

Orientation Control

Changing the orientation and view angles of the player is, unfortunately, not as straightforward as processing simple translational movement controls. This is partly because Godot currently doesn't seem to support input mappings for mouse axis movements. However, it's not impossible, but it took me a while longer to figure out. (Admittedly, I was thinking in the wrong direction in my first attempts and ended up in a dead end, which I should have foreseen. Anyway, I've solved it now.)

One reason I had to spend more time on this, as well as on the ground movement part, is that I find it harder to find appropriate C++ documentation for Godot. There is great documentation available for GDScript and C#. Although Godot itself is written in C++, the API documentation doesn't seem to have received the same attention. Many things are easily transferable, but some crucial commands are not even mentioned. Researching online and, most importantly, reading through the source code helped me overcome several obstacles, though it was a cumbersome endeavor.

But enough of the rambling and more of the juice!

Mouse movements are handled entirely differently in Godot. Instead of using the Input singleton as before, we need to code our own event handlers now. There is a whole hierarchy of input event handling in Godot, which is described here:

https://docs.godotengine.org/en/stable/tutorials/inputs/inputevent.html

Input events are passed from one level of this hierarchy to the next until one of them consumes the event. A level can also ignore the event, or act on it and still let it travel further down. Technically, we could also process our player's ground movements that way. (That might be an optimization step in the future. For now, due to the reliance on methods like move_and_slide(), keeping them in the physics processing method makes sense.)

Long story short, what we need to do is override our inherited _unhandled_input(const Ref<InputEvent> &event) event handler method. Here, we receive a general object of type InputEvent (wrapped in a Ref), cast it to a Ref<InputEventMouseMotion>, and then access and process the InputEventMouseMotion object's properties. This will provide all the information we need to turn our camera and character according to the user's mouse movements.

The typecast is performed when copying the reference of the general InputEvent object as follows.

void FPVPlayer::_unhandled_input(const Ref<InputEvent> &event) {

const Ref<InputEventMouseMotion> mouse_motion_event = event;

That's basically it. Now, after we make sure that the reference is_valid() (meaning it's not a nullptr), we can start to rotate the camera view in the horizontal and vertical directions.

On a side note, it's a bit confusing that Godot's C++ API calls these objects "references", as they behave like reference-counted smart pointers, roughly comparable to std::shared_ptr, rather than like C++ references.
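To illustrate the cast-then-check pattern without Godot, here is an analogy using std::shared_ptr and std::dynamic_pointer_cast. The Event, MouseMotionEvent and KeyEvent types are hypothetical stand-ins for InputEvent and its subclasses, and the comparison with Ref is only meant loosely.

```cpp
#include <cassert>
#include <memory>

// Hypothetical event hierarchy standing in for InputEvent and its subclasses.
struct Event {
    virtual ~Event() = default;
};

struct MouseMotionEvent : Event {
    double relative_x = 0.0;
    double relative_y = 0.0;
};

struct KeyEvent : Event {
    int key_code = 0;
};

// Returns the horizontal mouse delta, or 0 if the event is not a mouse motion.
double horizontal_delta(const std::shared_ptr<Event> &event) {
    // The cast yields an empty pointer when the dynamic type does not match,
    // comparable to a Ref whose is_valid() returns false.
    const auto motion = std::dynamic_pointer_cast<MouseMotionEvent>(event);
    if (!motion)
        return 0.0;
    return motion->relative_x;
}
```

Every kind of event goes through the same handler; the validity check after the cast is what filters out everything that is not a mouse motion.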

Horizontal View Rotation

Rotating the camera in our first-person view control horizontally means rotating the player object (Hobba) horizontally as well, since the camera and Hobba's eye should always look in the same horizontal direction. Since the camera is attached as a child node to Hobba, turning Hobba will turn the camera.

Depending on the desired look and feel, we can extend this in the future by decoupling the horizontal camera movement from the character body's rotation. You might have played games where you turned around, but the character only started moving their feet when you looked sufficiently far to the left or right.

Performing the horizontal rotation turned out to be just one line of code. (In my prototypes it was longer, but it can be beautifully condensed.)

    // Ensure that the underlying pointer of Ref is not nullptr.
    if (mouse_motion_event.is_valid()) {
        // Rotate player horizontally.
        // x-axis is the "side"-axis in view coords, thereby determines the rotation around y, the up axis in local coords.
        rotate_y(mouse_motion_event->get_relative().x * horizontal_rot_direction * horizontal_rot_speed);

The command rotate_y(...) will rotate our current object (which is FPVPlayer and in our case Hobba) around the y-axis, meaning we can turn left and right. The amount of rotation is given by an angle, and this angle is expressed in radians (a cool and fancy version of degrees).

The first part of computing that angle is to get the relative x component of our mouse movement. "Relative to what?", you might ask. According to Godot's documentation, it is relative to the previous mouse position, i.e. how far the mouse has moved since the last motion event. To keep receiving these movements without the cursor running into the edge of the screen, I set the mouse mode such that the cursor is hidden and captured at the center of the window. I've set this in the _ready() method of my MainNode class:

    void MainNode::_ready() {
        if (Engine::get_singleton()->is_editor_hint()) {
            // stuff
        } else {
            // more stuff

            // Hide mouse cursor and capture mouse in middle of screen.
            input.set_mouse_mode(Input::MouseMode::MOUSE_MODE_CAPTURED);
        }
    }

Make sure to make it conditional on where the node is currently running: the editor or the game. I forgot to do this at first, which led to my mouse cursor being captured at the center of Godot's editor screen, making it really hard to click something with the mouse because the cursor became invisible and reset to the screen center. As I just wanted that capturing behaviour within the game, and not in the editor, I put the line setting the mouse mode in the appropriate place in the else-branch.

You can read more about that in Godot's manual: https://docs.godotengine.org/en/stable/tutorials/inputs/mouse_and_input_coordinates.html

The value returned by get_relative().x is a distance in pixels (how far the mouse moved since the previous motion event) and not an angle in radians or degrees. This is unproblematic, as it's not necessary to reconstruct an accurate angle: we can scale this pixel difference with multiplicative factors tailored to our preferences. In other words, this is where what is commonly known as "mouse sensitivity" gets set. Two other parameters play a part in realising this. horizontal_rot_direction inverts or doesn't invert the direction of rotation; it is a simple 1 or -1 depending on the settings. This has the effect that, for example, when you move your mouse to the left, you will look to the left. But if that value is inverted, you will look to the right when you move your mouse to the left. You might know this from other games as "invert X-axis." With the variable horizontal_rot_speed, we can fine-tune the "sensitivity," i.e., the amount of rotation per mouse movement, or just "horizontal rotation speed" put differently.

Although this works perfectly fine for me, I already see an issue with this. Since the total difference in pixels is used, the rotation speed will significantly vary with different screen resolutions. For example, the pixel difference on the x-axis might become much larger on higher screen resolutions for the same mouse movement than on lower resolutions. This might become a nuisance for players and complicate things. Therefore, I think it's advisable to normalize this pixel difference depending on the screen resolution. (You'll basically get a percentage value by that and see to how many percent of the total screen width the mouse was moved in the x-direction.) This could help to get a uniform behaviour using the same horizontal rotation speed but on different screen resolutions. I'll put that to a test in the future.

After rotating around the y-axis, we need to do the following:

// Orthonormalize transform. It may get non-orthonormal with time due to floating point representation precision.

characterBody_transform = get_transform();

characterBody_transform.orthonormalize();

set_transform(characterBody_transform);

This is called orthonormalisation and will ensure that our coordinate system's basis remains normalised to a length of 1 per axis and that the axes stay perpendicular to each other. (You might remember the basis concepts from the section on ground movement control.) We have to do this because transformations of a coordinate system, like rotations, are not computed in a mathematically exact manner. We can represent floating point numbers only up to a certain precision. Even 64-bit floating point numbers (which is usually the size of a double) have (minor) imprecisions, and Godot's real_t is only a 32-bit float unless the engine and the bindings are built with double precision. Such imprecisions add up and can skew our coordinate system's bases. Quite literally, they may become skewed. This is bad for maintaining the correct movement behaviour of bodies. To compensate for that, we get the current Transform3D object of the FPVPlayer instance via get_transform(). The command orthonormalize() called upon the transform will perform the orthonormalisation. And with set_transform(), we write back our corrected transform.

You might think that these changes are so insignificant that this is totally unnecessary. I thought that as well, which is why I tested it. For that, I just added some temporary code, which printed error messages (I'll explain further down how to print to Godot's editor console using C++) whenever either the FPVPlayer instance or its Camera3D child node were no longer orthonormal. And it just took between a couple of seconds and up to about a minute until that was the case when I ran different tests.

So, even though it's an additional computational load, it is necessary. Thank Stroustrup for C++ being fast!
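To illustrate what such a repair step does, here is a small stand-alone sketch of a Gram-Schmidt orthonormalisation on three axis vectors. It does not use Godot's types; Vec3, Basis3, orthonormalized() and is_orthonormal() are hypothetical names for this example, and Godot's own orthonormalize() may differ in its details.

```cpp
#include <cmath>

struct Vec3 {
    double x, y, z;
};

inline double dot(const Vec3 &a, const Vec3 &b) {
    return a.x * b.x + a.y * b.y + a.z * b.z;
}

inline Vec3 sub(const Vec3 &a, const Vec3 &b) {
    return {a.x - b.x, a.y - b.y, a.z - b.z};
}

inline Vec3 scale(const Vec3 &a, double s) {
    return {a.x * s, a.y * s, a.z * s};
}

inline Vec3 normalized(const Vec3 &a) {
    return scale(a, 1.0 / std::sqrt(dot(a, a)));
}

// Three axis vectors of a coordinate system.
struct Basis3 {
    Vec3 x, y, z;
};

// Gram-Schmidt: keep the direction of x, then remove from y and z
// whatever part of them points along the already fixed axes.
inline Basis3 orthonormalized(Basis3 b) {
    b.x = normalized(b.x);
    b.y = normalized(sub(b.y, scale(b.x, dot(b.x, b.y))));
    b.z = sub(b.z, scale(b.x, dot(b.x, b.z)));
    b.z = normalized(sub(b.z, scale(b.y, dot(b.y, b.z))));
    return b;
}

// True if all axes have length 1 and are mutually perpendicular,
// up to the given tolerance.
inline bool is_orthonormal(const Basis3 &b, double eps) {
    return std::fabs(dot(b.x, b.x) - 1.0) < eps &&
           std::fabs(dot(b.y, b.y) - 1.0) < eps &&
           std::fabs(dot(b.z, b.z) - 1.0) < eps &&
           std::fabs(dot(b.x, b.y)) < eps &&
           std::fabs(dot(b.x, b.z)) < eps &&
           std::fabs(dot(b.y, b.z)) < eps;
}
```

A check like is_orthonormal() is also roughly what a temporary test as described above would do: feed it the current basis on every update and report once it returns false.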

Vertical View Rotation

The vertical view rotation proved to be more challenging. At first glance, you might think you can handle it the same way as the horizontal view rotation with just a simple line of code. However, if you have tested it up to this point, you might have noticed that you can rotate Hobba endlessly in a horizontal direction. But that's not what you typically see with vertical view mechanics. Without restricting vertical movement, we could rotate endlessly up or down and end up looking at our own feet from behind. In most games, the vertical view rotation is locked at the sky and the ground. You can't look "beyond the global y-axis" in a rotational manner.

I always hated that. In reality, you can lean back or forward a bit and look beyond such fixed points above or below you to a certain extent. So, what I wanted to achieve for the vertical view rotation was to allow a certain range of "overstretching" while still preventing endless vertical view rotations. Here is a quick sketch illustrating what I mean:

The green arrow is the global y-axis (the "up" axis). The blue arrow v is the view direction vector of the player. In most first-person-view games, this view direction can only be rotated within a 180° angle: from the feet to the head, indicated by the dashed blue half-circle. What I would like to be able to do is look beyond those 180° in the up and down directions. These are the pink lines with different angles. Ideally, this should be configurable.

Limiting the overall vertical rotation and allowing some freedom for this kind of overstretching can be achieved using two additional variables. One keeps track of our overall performed vertical view rotation, and the other encodes our allowed overstretching amount.

We start similarly to before by calculating the angle based on the player's mouse movement:

// y-axis is the "up"-axis in view coords, thereby determines the rotation around x, the side-axis in local coords.

vertical_rot_ang = mouse_motion_event->get_relative().y * vertical_rot_direction * vertical_rot_speed;

But unlike before, we don't rotate the view (our camera) yet. Instead, we first increment the variable that tracks the overall vertical rotation:

accumulated_vertical_rot += vertical_rot_ang;

We can use this accumulated value to ensure that our vertical rotation will not reach beyond the y-axis in the upward or downward direction, plus a possibly allowed overstretch amount. The values we need to compare it to are -90° and +90° (plus/minus the overstretch).

Where do these angles come from? From our camera. If you look at the camera's coordinate system, you see that at a level parallel to the ground (when looking forward, for example), the y-axis of the camera is parallel to the world's y-axis:

(Reminder: the green arrow is the positive y-direction.) If we now want to look up or down, this means a rotation around the x-axis of the camera's coordinate system. If we want to look at the sky, see for yourself how the camera's base is oriented:

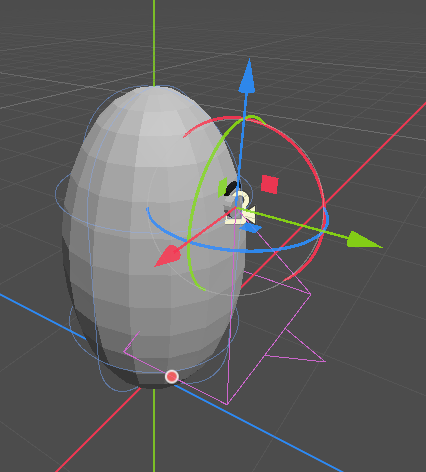

The y-axis now points "inside" Hobba, which means it was rotated by 90° around the x-axis. If we want to look at the ground, something similar becomes clear:

Now the y-axis points "out of Hobba's eye." As we can see, this is equivalent to a negative rotation around the x-axis by 90°, so -90° around x. That's where these limits come from. If we want to allow overstretch, we would add or subtract from these limits accordingly.

In radians, 90° equals half pi:

$$90° = {\pi \over 2} \textrm{rad}$$

With that in mind, we can perform our comparison and check whether the accumulated rotation, as determined by the player's mouse movement, would exceed our allowed limits:

if (accumulated_vertical_rot > Math_PI_HALFED + vertical_overstretch_up) {

//...

}

else if (accumulated_vertical_rot < -(Math_PI_HALFED + vertical_overstretch_down)) {

//...

}

In case it is not exceeding the limits, we can proceed as before and just:

camera_node_ptr->rotate_x(vertical_rot_ang);

Otherwise, we need to adjust the vertical_rot_ang by an amount that guarantees it will only rotate as far as the respective limit. For that, more mathemagics comes into play.

Consider a rendered frame before the player moved the mouse in a way that would exceed our limits, as shown in the sketch below:

Here, the light-green y-axis is from the world coordinate system, and the dark-green y-axis is from our camera coordinate system. The orange line marks our limits up to where the camera's y-axis is allowed to be rotated. The angle alpha represents the angle between the camera's and world's y-axes, while beta is the angle between the limit and the camera's y-axis. The dashed black lines indicate the 90-degree angle between the world's y-axis and the limiting line.

If the player now moves their mouse in a way that the camera's y-axis would lie below the limiting line (and therefore would have an angle of more than 90° to the world's y-axis), we need to modify the rotation such that the final rotation would move the camera's y-axis exactly onto the limiting line, but not beyond. And what is this angle we need to rotate by then? You can infer it from the sketch. Give it a try!

It's the angle beta. Computing that one is the crucial part now. We know the overall allowed angle is 90° (and possibly some added overstretch later on), but we do not know the angle alpha or the angle beta. And we require at least one of those. Luckily, Godot allows us to compute the angle between two vectors with the method angle_to(), which can be called on a Vector3. And, as we also know at this point, we can get the bases of our coordinate systems, whose columns are the axis vectors. Using that, we could easily compute the angle alpha by computing the angle from the camera's y-axis to the global y-axis or vice versa. And this is a valid approach. In code, this can look like this:

double angle_y_glob_to_y_loc =

get_global_basis().get_column(1).angle_to(camera_node_ptr->get_basis().get_column(1));

With get_global_basis(), we retrieve the player's basis expressed in global coordinates. As long as the player is only ever rotated around the y-axis, its y-axis coincides with the world's y-axis, and we can get a copy of it by get_column(1), which addresses the second column of the basis (using array-like indexing that starts at 0 for the first element). The angle_to() method will compute the angle to another vector. That vector will be the camera's y-axis, which we can retrieve in a similar manner by using our camera node pointer and calling get_basis().get_column(1) on it.

Technically, we would be done here. But I had the feeling that this computation could be optimized a bit. The angle_to() method seemed like a general method for general vectors, which is fine in principle. But since we are using orthonormal bases as our arguments, I suspected that this computation could be made more efficient by exploiting their properties.

Looking into Godot's C++ API code revealed the following for the angle computation method:

real_t Vector3::angle_to(const Vector3 &p_to) const {

return Math::atan2(cross(p_to).length(), dot(p_to));

}

Rewritten as a mathematical formula for two arbitrary vectors a and b:

$$\alpha = \textrm{atan2} (|\vec{a} \times \vec{b}|, \vec{a} \cdot \vec{b} )$$

Which means, we compute the following step by step:

1. The cross product between the two vectors.

2. The length of the cross product.

3. The dot product of the vectors.

4. The angle, using the atan2(y, x) function with the previously computed elements as its parameters.

Since the vectors in our coordinate systems' bases are orthonormal, they all have a length of exactly 1. For two unit vectors, the length of the cross product is simply the sine of the angle between them, and the dot product is its cosine. So atan2 is handed sin(α) and cos(α) and reconstructs α from both. But if the dot product alone already encodes the angle, can't we just use that and skip the computation of the cross product and its length?

Indeed we can. For our purposes we can utilise another formula for computing angles between vectors. You might remember this beautiful but mighty little thing here from school or linear algebra courses:

$$\alpha = \textrm{acos}\left( {\vec{a} \cdot \vec{b}} \over {|\vec{a}|\cdot |\vec{b}|} \right)$$

Due to the lengths of our basis vectors being 1, this simplifies to:

$$\alpha = \textrm{acos}\left( \vec{a} \cdot \vec{b} \right)$$

Our computation now has fewer steps:

1. Compute the dot product between the vectors.

2. Compute the inverse cosine function of the dot product.

Et voilà, we are done. Fewer (and less complex) computations mean faster code. On modern machines, the first approach shouldn't hurt much. But why not optimize if we can? Small things do add up after a while. And since this computation is performed on every frame of Godot's physical processing, it becomes especially important to keep the computational load between frames low.

In code, this now looks like this:

angle_y_glob_to_y_loc =

Math::acos(get_global_basis().get_column(1).dot(camera_node_ptr->get_basis().get_column(1)));
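The two formulas can be compared side by side in a stand-alone sketch without Godot types. Vec3 and the function names below are hypothetical. The clamp before std::acos is an addition that is not in the code above: rounding can push the dot product of two "unit" vectors slightly outside [-1, 1], where std::acos returns NaN. Note also that acos loses some precision for angles very close to 0 or π, so the shortcut trades a bit of robustness for fewer operations.

```cpp
#include <algorithm>
#include <cmath>

struct Vec3 {
    double x, y, z;
};

inline double dot(const Vec3 &a, const Vec3 &b) {
    return a.x * b.x + a.y * b.y + a.z * b.z;
}

inline Vec3 cross(const Vec3 &a, const Vec3 &b) {
    return {a.y * b.z - a.z * b.y,
            a.z * b.x - a.x * b.z,
            a.x * b.y - a.y * b.x};
}

inline double length(const Vec3 &a) {
    return std::sqrt(dot(a, a));
}

// General formula, same structure as Vector3::angle_to():
// works for vectors of any (non-zero) length.
inline double angle_atan2(const Vec3 &a, const Vec3 &b) {
    return std::atan2(length(cross(a, b)), dot(a, b));
}

// Shortcut that is only valid for unit-length vectors.
// The dot product is clamped to [-1, 1] so that rounding errors
// cannot make std::acos return NaN.
inline double angle_acos_unit(const Vec3 &a, const Vec3 &b) {
    const double d = std::max(-1.0, std::min(1.0, dot(a, b)));
    return std::acos(d);
}
```

For the world's up axis (0, 1, 0) and a unit vector tilted by 0.5 rad around the x-axis, both functions return 0.5 up to rounding. Both also return an unsigned angle between 0 and π, so the direction of the tilt has to come from elsewhere, here from the sign of the accumulated rotation.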

Having computed the angle alpha (from the sketch before) this way, adjusting the total allowed rotation beta is now as simple as subtracting alpha from +/-90° (plus the additional overstretch):

if (accumulated_vertical_rot > Math_PI_HALFED + vertical_overstretch_up) {

vertical_rot_ang = Math_PI_HALFED + vertical_overstretch_up - angle_y_glob_to_y_loc;

accumulated_vertical_rot = Math_PI_HALFED + vertical_overstretch_up;

}

else if (accumulated_vertical_rot < -(Math_PI_HALFED + vertical_overstretch_down)) {

vertical_rot_ang = -(Math_PI_HALFED + vertical_overstretch_down - angle_y_glob_to_y_loc);

accumulated_vertical_rot = -(Math_PI_HALFED + vertical_overstretch_down);

}

The accumulated rotation variable is also set to the limit.

As with the horizontal rotation, it's important to orthonormalize the camera basis after rotating as well:

// Orthonormalize transform. It may get non-orthonormal with time due to floating point representation precision.

camera_transform = camera_node_ptr->get_transform();

camera_transform.orthonormalize();

camera_node_ptr->set_transform(camera_transform);

And with this, the core of my first-person-view movement implementation is concluded. Yay! 🥳

Here is a little demo:

As you can see, it works perfectly. You can move around, bunny hop, and look up, down, and sideways. In the upward direction, I allowed an overstretch of 30°. When looking up and down, you'll also notice that the camera view clips with Hobba's 3D model. This might be improved in the future by using view layers or something similar, essentially telling the camera what it can and cannot see.

Don't be confused by the "loading" mouse cursor being visible. This was caused by my screen capture software. When I wasn't recording, the mouse cursor wasn't visible as intended.

In principle, it is also perfectly fine to use negative values for the overstretch. This would lead to a more drastic limitation in the up-down look direction, as even the full 180° would not be possible anymore. You wouldn't be able to look up or down that far, including directly up or down. There might be use cases for that. For example, if you use the FPVPlayer class for a vehicle like a car, you might not want the player to be able to look that far up.

Printing to Editor Console and Other Quirks

For the remaining part of this blog post, I want to tell you a few things about how we can print to Godot's editor console from our C++ code, briefly touch on the topic of getters and setters so that we can change some variables of the FPVPlayer class from the editor, and mention that it might make sense to change the float steps for changing values in the inspector window of the editor. Also, I will quickly say something about the two different cameras that are currently present in the scene.

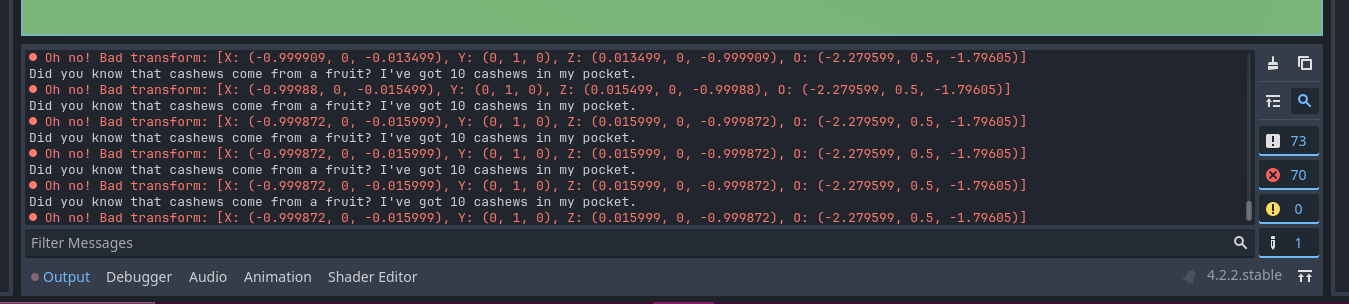

Printing to the Console

As every programmer knows, printing some output once in a while can be extremely useful. Luckily, we don't need to code that ourselves as the C++ API already provides us with the functionality. There is an (as far as I've seen undocumented) header which can be included:

#include <godot_cpp/variant/utility_functions.hpp>

In the utility functions header, we find several print methods, such as simple printing or printing as an error:

UtilityFunctions::print("Did you know that cashews come from a fruit? I've got ", 10, " cashews in my pocket.");

UtilityFunctions::printerr("Oh no! Bad transform: ", get_transform());

As you might know from print methods in other libraries, it is also possible to include variable values in the output, as I did in the example code snippet above.

In Godot, this is what our output looks like:

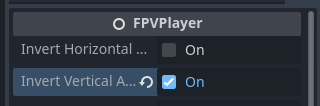

Getters & Setters

If you've completed the C++ GDExtension tutorial, you might already be familiar with how we "export" the member variables of our class to make them visible and changeable in the inspector. I've done exactly that for the following variables:

Invert horizontal axis.

Invert vertical axis.

Horizontal rotation speed.

Fall speed.

Jump speed.

Ground movement speed.

Vertical overstretch downwards.

Vertical overstretch upwards.

Vertical rotation speed.

Since almost each of these variables is a floating point number type, there is not much difference from the tutorial. A bit more interesting is the inversion of the rotation axes. Here we use Variant::BOOL. Even though the horizontal and vertical directions are of type double to avoid typecasts during the computation of the rotations, they can be interpreted as boolean values, with 1 and -1 corresponding to true and false respectively.

// Horizontal Rotation Direction

ClassDB::bind_method(D_METHOD("get_horizontal_rot_direction"), &FPVPlayer::get_horizontal_rot_direction);

ClassDB::bind_method(D_METHOD("set_horizontal_rot_direction", "invert"), &FPVPlayer::set_horizontal_rot_direction);

ClassDB::add_property("FPVPlayer", PropertyInfo(Variant::BOOL, "invert horizontal axis"),

"set_horizontal_rot_direction", "get_horizontal_rot_direction");

// Vertical Rotation Direction

ClassDB::bind_method(D_METHOD("get_vertical_rot_direction"), &FPVPlayer::get_vertical_rot_direction);

ClassDB::bind_method(D_METHOD("set_vertical_rot_direction", "invert"), &FPVPlayer::set_vertical_rot_direction);

ClassDB::add_property("FPVPlayer", PropertyInfo(Variant::BOOL, "invert vertical axis"),

"set_vertical_rot_direction", "get_vertical_rot_direction");

While the getters return the value as usual, the setters invert it:

inline void FPVPlayer::set_horizontal_rot_direction(const bool invert) {

horizontal_rot_direction = -horizontal_rot_direction;

}

inline void FPVPlayer::set_vertical_rot_direction(const bool invert) {

vertical_rot_direction = -vertical_rot_direction;

}

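These setters will be called each time the inversion value is changed. Since boolean values are represented as a simple checkbox in Godot's inspector, the setter is called each time we check or uncheck the box. Note that the setters shown above ignore their invert argument and flip the sign on every call, so the stored direction only stays in sync with the checkbox as long as each call corresponds to an actual change of the value.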
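If the setter should not depend on being called exactly once per change, one option is to derive the sign from the argument instead of toggling it. The following is a stand-alone sketch without Godot types; the class name RotDirection, its methods, and the convention that -1 means "inverted" are assumptions for illustration and not taken from the repository.

```cpp
// Hypothetical stand-in for the direction handling in FPVPlayer.
class RotDirection {
public:
    // Assumption: -1.0 means "inverted", +1.0 means "not inverted".
    // Calling this twice with the same value leaves the state unchanged.
    void set_inverted(bool invert) { direction_ = invert ? -1.0 : 1.0; }

    // Maps the stored factor back to the checkbox state.
    bool is_inverted() const { return direction_ < 0.0; }

    // Factor to multiply into the rotation angle.
    double factor() const { return direction_; }

private:
    double direction_ = 1.0;
};
```

The getter side matters as well in such a design: converting a stored 1 or -1 directly to bool would yield true in both cases, which is why the sketch compares against zero.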

Here is a screenshot of what the exported properties can look like in the inspector:

Regarding the float values, it might make sense to limit the possibilities to a certain range, or at least a minimum value, as I would not like to have negative speeds, for example.

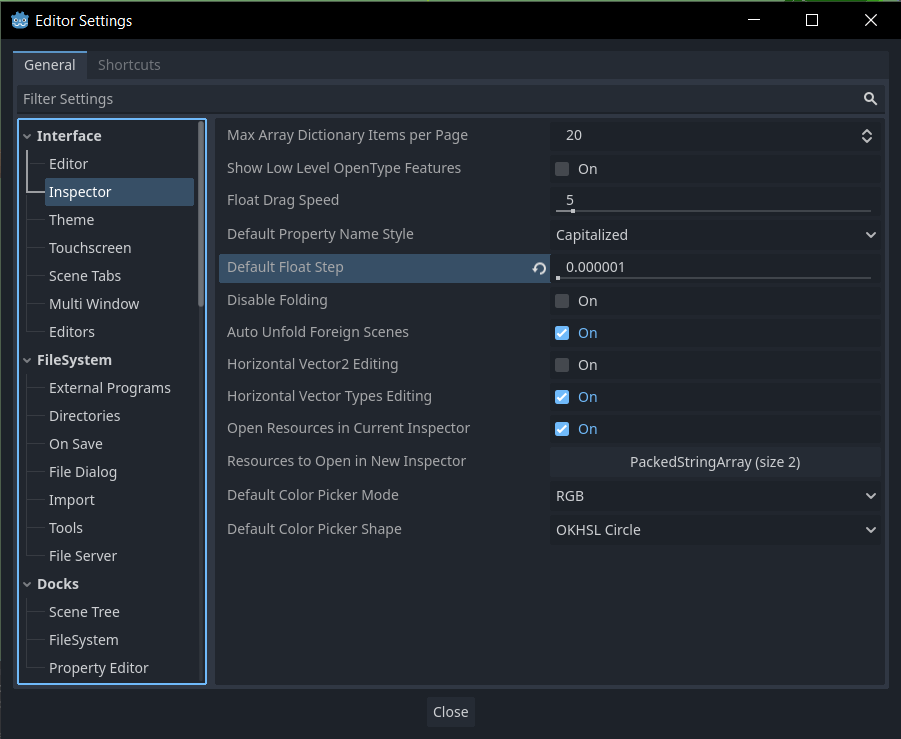

Inspector Float Steps

When I was trying to lower my "mouse sensitivity" in the horizontal and vertical directions, i.e., the horizontal and vertical rotation speeds, I found that Godot clamped the value to 0.001 when I tried to set it below that via the inspector. Luckily for me, someone else had similar problems and received help:

This behaviour is due to the float step configured in the inspector settings of the editor settings. I changed this to 0.000001 for more fine-grained control. That way, I could lower the (rather high) sensitivity of my mouse for the game.

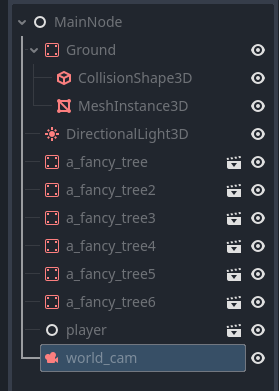

Multiple Cameras in Scene

Having multiple cameras in a Godot scene can become quite problematic. This is due to the following fact:

Only one camera can be active per viewport.

As per the documentation on the Camera3D node: https://docs.godotengine.org/en/stable/classes/class_camera3d.html

I now had two cameras in my scene: the one I set up when I first imported my fancy tree model, and the player view camera at Hobba's eye. This led to unexpected results when I wanted to test the first-person view camera: only the first camera I had added was used to render the scene.

So, after reading a bit about viewports and cameras in Godot, I found a solution for this. There is a checkbox called "Current" which makes the selected camera node the "current" node for rendering. I just disabled that for my first camera (not the one attached to Hobba) in the inspector.

Since the documentation on camera nodes also says:

Cameras register themselves in the nearest Viewport node (when ascending the tree).

I also placed the camera node below my player node (of type FPVPlayer) just to make sure:

Now it works. I didn't want to delete the world camera node yet, as I thought about using it at some point in the future for a start screen of the fancy tree game. The idea is that upon starting the game, the world camera node is used so that the tree is viewed. A menu can then be overlaid on top of that. And as soon as the player hits a button like "play," the world camera gets disabled, i.e., "current" will be unchecked, and the view switches to the camera attached to Hobba's eye.

That's it! This was a rather lengthy blog post again. Hopefully, you can get something out of it. For me, writing this down served as my own notes. I also discovered some minor improvements that could be made in the code while writing this. So, it was definitely useful to rethink my implementation while documenting it.

Next, I would like to add some sort of sky dome over the map so that we don't have to see the ugly grey background anymore. But this will only be a temporary solution as I would like to make an even better sky at some point in the future.

Until next time!

— Zacryon
