AMD Unveils ROCm 6.1.3 AI Software with Dual Slot Radeon PRO W7900

Spheron Network

AMD has unveiled its ROCm 6.1.3 software suite, featuring new AI enhancements and expanded support, alongside the introduction of the Radeon PRO W7900 Dual Slot GPU.

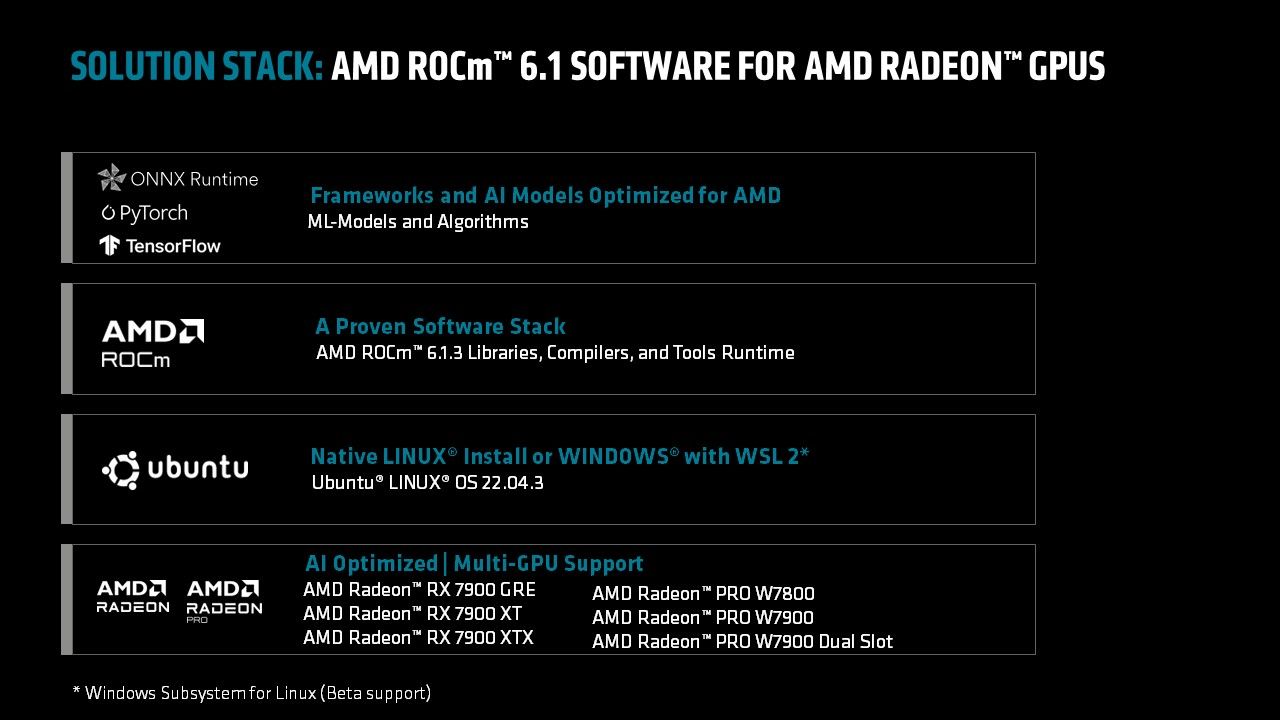

ROCm 6.1.3 Software Stack Adds Multi-GPU, TensorFlow, and WSL 2 Support

At Computex 2024, AMD announced the retail availability of the Radeon PRO W7900 Dual Slot GPU, priced at $3,499 USD. Aimed at AI-intensive workloads, it carries 48 GB of memory, which AMD positions as a way to speed up work with large language models (LLMs).

The Radeon PRO W7900 Dual Slot, as its name indicates, occupies two expansion slots, so a single workstation can accommodate up to four cards. It is powered by the Navi 31 XTX GPU, with 6,144 stream processors across 96 compute units. A 384-bit memory bus feeds 48 GB of GDDR6 memory for up to 864 GB/s of bandwidth, and 96 MB of Infinity Cache raises effective bandwidth further.
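The 864 GB/s figure follows from the memory interface: peak bandwidth is the bus width in bytes multiplied by the per-pin data rate. The minimal Python sketch below shows that arithmetic. The helper names are illustrative, and the 18 Gbps data rate is not stated above; it is simply what 864 GB/s implies for a 384-bit bus.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bytes per transfer times per-pin data rate."""
    bytes_per_transfer = bus_width_bits / 8  # a 384-bit bus moves 48 bytes per transfer
    return bytes_per_transfer * data_rate_gbps


def implied_data_rate_gbps(bandwidth_gbs: float, bus_width_bits: int) -> float:
    """Invert the formula: the per-pin data rate implied by a quoted bandwidth."""
    return bandwidth_gbs / (bus_width_bits / 8)


if __name__ == "__main__":
    rate = implied_data_rate_gbps(864, 384)  # Radeon PRO W7900 figures
    print(f"Implied GDDR6 data rate: {rate:.1f} Gbps per pin")
    print(f"Peak bandwidth at that rate: {peak_bandwidth_gbs(384, rate):.0f} GB/s")
```

The same relationship explains why the 256-bit Radeon PRO W7800 tops out at 576 GB/s with memory running at the same data rate.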

Key Features:

High Performance: Equipped with 96 compute units, 192 AI accelerators, and 96 ray accelerators, achieving up to 61.32 TFLOPS at peak single precision (FP32) and 122.64 TFLOPS at peak half precision (FP16).

Cost Efficiency: AMD claims up to 38% better performance per dollar than competing cards when running Llama 3 70B with 4-bit (Q4) quantization, and the 48 GB framebuffer can hold the entire 70-billion-parameter model on a single GPU.

Exceptional Memory: 48 GB of memory with error-correcting code (ECC) support protects data integrity, while the large capacity leaves headroom for multitasking and complex projects.

Desktop AI Capability: Up to four AMD Radeon PRO W7900 Dual Slot graphics cards can be installed in one system, adding significant AI performance to desktop IT infrastructure for sensitive and mission-critical projects.
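Whether a 70B model fits in one 48 GB framebuffer comes down to parameters times bits per weight. The sketch below is a rough estimate under stated assumptions: it uses about 4.5 bits per weight for a 4-bit quantization (the exact figure depends on the format, since per-block scale factors add overhead), and it counts weights only, ignoring the KV cache and activations that also consume memory during inference.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


GPU_MEMORY_GB = 48  # Radeon PRO W7900 / W7900 Dual Slot framebuffer

# Assumed effective widths: 16 bits for FP16 and ~4.5 bits for a 4-bit
# quantization once per-block scales are included (varies by format).
PRECISIONS = {"FP16": 16.0, "Q4 (assumed ~4.5 bits/weight)": 4.5}

if __name__ == "__main__":
    for label, bits in PRECISIONS.items():
        need = weight_memory_gb(70e9, bits)
        # Weights only: the KV cache and activations need additional headroom.
        verdict = "fits" if need < GPU_MEMORY_GB else "does not fit"
        print(f"Llama 3 70B, {label}: ~{need:.1f} GB of weights, {verdict} in {GPU_MEMORY_GB} GB")
```

At FP16 the weights alone need about 140 GB, so it is the 4-bit quantization (roughly 39 GB under this assumption) that brings the model within a single card's 48 GB, leaving several gigabytes for the KV cache.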

Alongside the GPU launch, AMD is releasing the ROCm 6.1.3 open software suite, which broadens support for consumer Radeon and workstation Radeon PRO graphics cards.

ROCm 6.1.3 Highlights

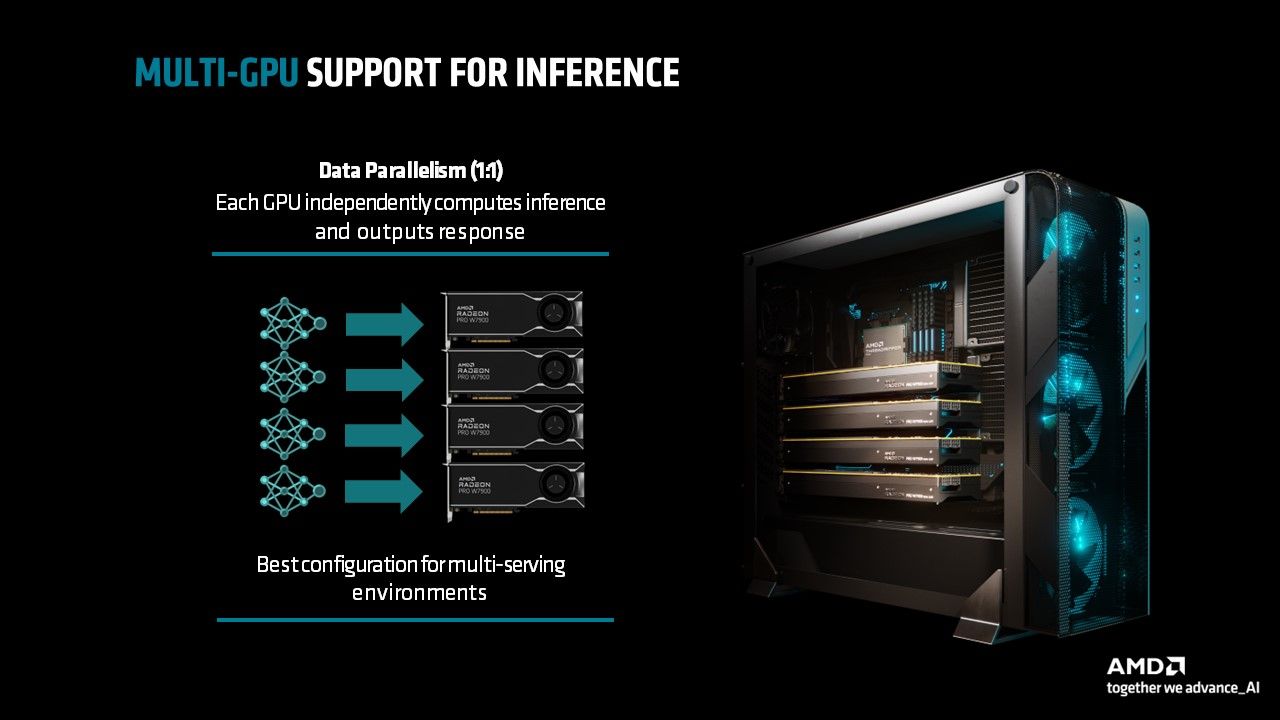

Multi-GPU Support: Makes it possible to build scalable AI desktops for multi-serving, multi-user environments.

WSL 2 Support: Beta support for Windows Subsystem for Linux 2, allowing ROCm workloads to run on Windows.

TensorFlow Framework Support: Expands options for AI development.

AMD states that ROCm 6.1.3 supports up to four compatible Radeon RX and Radeon PRO GPUs, including the Radeon RX 7900 XTX, RX 7900 XT, RX 7900 GRE, Radeon PRO W7900, PRO W7900 Dual Slot, and PRO W7800. These GPUs can be configured for independent inference computation, improving performance, scalability, and accessibility.
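One common way to run independent inference on each card is to start one process per GPU and restrict each process to a single device. ROCm's HIP runtime honors the `HIP_VISIBLE_DEVICES` environment variable (the counterpart of `CUDA_VISIBLE_DEVICES`), so a launcher only has to set it per worker. The sketch below assumes four GPUs; the worker script name `inference_worker.py` and the helper functions are hypothetical.

```python
import os
import subprocess
import sys


def worker_envs(num_gpus: int) -> list[dict[str, str]]:
    """Build one environment per GPU so each process sees exactly one device."""
    envs = []
    for gpu_index in range(num_gpus):
        env = dict(os.environ)
        # Inside the worker, the selected GPU is renumbered as device 0.
        env["HIP_VISIBLE_DEVICES"] = str(gpu_index)
        envs.append(env)
    return envs


def launch_workers(script: str, num_gpus: int = 4) -> list[subprocess.Popen]:
    """Start one independent inference process per GPU (the script is hypothetical)."""
    return [subprocess.Popen([sys.executable, script], env=env) for env in worker_envs(num_gpus)]


if __name__ == "__main__":
    for i, env in enumerate(worker_envs(4)):
        print(f"worker {i}: HIP_VISIBLE_DEVICES={env['HIP_VISIBLE_DEVICES']}")
    # launch_workers("inference_worker.py")  # hypothetical worker script
```

Because each process sees only its assigned card, a ROCm build of a framework such as PyTorch should address that card as device 0 inside the worker, and the four workers do not compete for the same GPU.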

Boost Your Desktop's ML Performance

Modern models are outgrowing typical hardware and software stacks that were not designed for AI, so ML engineers are looking for cost-effective ways to develop and train ML applications. With 24GB or 48GB of GPU memory, a local PC or workstation built around the latest high-end AMD Radeon 7000 series GPUs offers a powerful yet economical way to handle these growing ML workflows.

Advantages of AMD Radeon 7000 Series GPUs

Built on RDNA 3 Architecture: Offers over twice the AI performance per Compute Unit (CU) compared to the previous generation.

Enhanced AI Accelerators: Now featuring up to 192 AI accelerators.

Large GPU Memory: Available with 24GB (Radeon RX 7900 XTX) or 48GB (Radeon PRO W7900) of GPU memory, enough to handle large ML models.

Combined Memory Capacity: With up to 48GB per GPU, four GPUs provide up to 192GB of combined GPU memory for demanding AI workloads.

While PyTorch and ONNX remain popular choices, ROCm 6.1.3 now qualifies the TensorFlow framework, providing users with an additional option for AI development.

The table below summarizes the AMD Radeon PRO W7000 series graphics cards.

AMD Radeon PRO W7000 GPUs

| Graphics Card Name | GPU | Process Node | Compute Units | Stream Processors | Clock Speed (Peak) | VRAM | Memory Bandwidth | Memory Bus | Compute Rate (FP32) | TDP | Price (USD) | Launch Year |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| RADEON PRO W7900 | Navi 31 | 5nm + 6nm | 96 CU | 6,144 | ~2.5 GHz | 48 GB GDDR6 | 864 GB/s | 384-bit | 61.3 TFLOPS | 295W | $3,999 | 2023 |
| RADEON PRO W7900DS | Navi 31 | 5nm + 6nm | 96 CU | 6,144 | ~2.5 GHz | 48 GB GDDR6 | 864 GB/s | 384-bit | 61.3 TFLOPS | 295W | $3,499 | 2024 |
| RADEON PRO W7800 | Navi 31 | 5nm + 6nm | 70 CU | 4,480 | ~2.5 GHz | 32 GB GDDR6 | 576 GB/s | 256-bit | 45.2 TFLOPS | 260W | $2,499 | 2023 |
| RADEON PRO W7700 | Navi 32 | 5nm + 6nm | 48 CU | 3,072 | ~2.3 GHz | 16 GB GDDR6 | 576 GB/s | 256-bit | 28.3 TFLOPS | 190W | $999 | 2023 |
| RADEON PRO W7600 | Navi 33 | 6nm | 32 CU | 2,048 | ~2.5 GHz | 8 GB GDDR6 | 288 GB/s | 128-bit | 20 TFLOPS | 127W | $599 | 2023 |
| RADEON PRO W7500 | Navi 33 | 6nm | 28 CU | 1,792 | ~1.7 GHz | 8 GB GDDR6 | 176 GB/s | 128-bit | 12 TFLOPS | 69W | $429 | 2023 |
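The Compute Rate (FP32) column can be reproduced from the Stream Processors and Clock Speed (Peak) columns. The sketch below assumes the usual RDNA 3 peak-rate convention of 4 FP32 operations per stream processor per clock: a fused multiply-add counts as two operations, doubled by dual-issue, and peak figures assume dual-issue is always achieved. Because the table's clocks are rounded, the estimates land within a few percent of the quoted values. With a clock of about 2.495 GHz, the same formula gives the W7900's 61.32 TFLOPS, and the FP16 peak quoted earlier is double the FP32 one.

```python
# (card, stream processors, approximate peak clock in GHz, quoted FP32 TFLOPS)
# Values come from the table; clocks are the rounded "~" figures.
CARDS = [
    ("Radeon PRO W7900", 6144, 2.5, 61.3),
    ("Radeon PRO W7800", 4480, 2.5, 45.2),
    ("Radeon PRO W7700", 3072, 2.3, 28.3),
    ("Radeon PRO W7600", 2048, 2.5, 20.0),
    ("Radeon PRO W7500", 1792, 1.7, 12.0),
]

# Assumed peak-rate convention: one fused multiply-add (2 FLOPs) per stream
# processor per clock, doubled by RDNA 3 dual-issue, gives 4 FLOPs per clock.
FLOPS_PER_SP_PER_CLOCK = 4


def peak_fp32_tflops(stream_processors: int, clock_ghz: float) -> float:
    """Theoretical peak FP32 throughput in TFLOPS."""
    return stream_processors * FLOPS_PER_SP_PER_CLOCK * clock_ghz / 1000


if __name__ == "__main__":
    for name, sps, clock, quoted in CARDS:
        est = peak_fp32_tflops(sps, clock)
        print(f"{name}: estimated {est:.1f} TFLOPS vs quoted {quoted} TFLOPS")
```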
