Set up an AWS EKS cluster with a managed node group using custom launch templates

Saurabh Adhau


Introduction

Amazon Elastic Kubernetes Service (Amazon EKS) is a managed Kubernetes service offered by AWS. It lets you run Kubernetes applications without installing and operating your own Kubernetes control plane, and with managed node groups EKS also automates the provisioning and lifecycle management of worker nodes. EKS runs the control plane with high availability, integrates with AWS security services such as IAM, and automates tasks such as patching and version updates.

This tutorial demonstrates how to set up an EKS cluster with a managed node group that uses a custom launch template.

Prerequisites:

- AWS account
- Basic familiarity with AWS, Terraform, and Kubernetes
- Access to a server (for example, an Ubuntu machine) with Terraform installed

Let's write the Terraform code for provisioning the AWS EKS cluster. We will organize it into separate modules. The directory structure is shown below, followed by a script that creates all the files and directories in one go:

```text
.
├── README.md
├── main.tf
├── modules
│   ├── eks
│   │   ├── main.tf
│   │   ├── outputs.tf
│   │   └── variables.tf
│   ├── iam
│   │   ├── main.tf
│   │   └── outputs.tf
│   ├── security-group
│   │   ├── main.tf
│   │   ├── outputs.tf
│   │   └── variables.tf
│   └── vpc
│       ├── main.tf
│       ├── outputs.tf
│       └── variables.tf
├── provider.tf
├── terraform.tfvars
└── variables.tf

5 directories, 16 files
```

```bash
#!/bin/bash

# Create directories
mkdir -p modules/eks
mkdir -p modules/iam
mkdir -p modules/security-group
mkdir -p modules/vpc

# Create empty files
touch README.md
touch main.tf
touch provider.tf
touch terraform.tfvars
touch variables.tf

# Create files in modules/eks
touch modules/eks/main.tf
touch modules/eks/outputs.tf
touch modules/eks/variables.tf

# Create files in modules/iam
touch modules/iam/main.tf
touch modules/iam/outputs.tf

# Create files in modules/security-group
touch modules/security-group/main.tf
touch modules/security-group/outputs.tf
touch modules/security-group/variables.tf

# Create files in modules/vpc
touch modules/vpc/main.tf
touch modules/vpc/outputs.tf
touch modules/vpc/variables.tf

echo "Directory structure and files created successfully."
```

Save the above script to a file, for example create_directory_structure.sh, and make it executable:

```bash
chmod +x create_directory_structure.sh
```

Then run the script:

```bash
./create_directory_structure.sh
```

This creates the entire directory structure, with empty files, as shown above.

Step 1: Create the module for the VPC

- Create the modules/vpc/main.tf file and add the code below to it.

```hcl
# Creating VPC
resource "aws_vpc" "eks_vpc" {
  cidr_block           = var.vpc_cidr
  instance_tenancy     = "default"
  enable_dns_hostnames = true

  tags = {
    Name = "${var.cluster_name}-vpc"
    Env  = var.env
    Type = var.type
  }
}

# Creating Internet Gateway and attaching it to the VPC
resource "aws_internet_gateway" "eks_internet_gateway" {
  vpc_id = aws_vpc.eks_vpc.id

  tags = {
    Name = "${var.cluster_name}-igw"
    Env  = var.env
    Type = var.type
  }
}

# Using a data source to get all Availability Zones in the region
data "aws_availability_zones" "available_zones" {}

# Creating Public Subnet AZ1
resource "aws_subnet" "public_subnet_az1" {
  vpc_id                  = aws_vpc.eks_vpc.id
  cidr_block              = var.public_subnet_az1_cidr
  availability_zone       = data.aws_availability_zones.available_zones.names[0]
  map_public_ip_on_launch = true

  tags = {
    Name = "Public Subnet AZ1"
    Env  = var.env
    Type = var.type
  }
}

# Creating Public Subnet AZ2
resource "aws_subnet" "public_subnet_az2" {
  vpc_id                  = aws_vpc.eks_vpc.id
  cidr_block              = var.public_subnet_az2_cidr
  availability_zone       = data.aws_availability_zones.available_zones.names[1]
  map_public_ip_on_launch = true

  tags = {
    Name = "Public Subnet AZ2"
    Env  = var.env
    Type = var.type
  }
}

# Creating Route Table and adding the public route
resource "aws_route_table" "public_route_table" {
  vpc_id = aws_vpc.eks_vpc.id

  route {
    cidr_block = "0.0.0.0/0"
    gateway_id = aws_internet_gateway.eks_internet_gateway.id
  }

  tags = {
    Name = "Public Route Table"
    Env  = var.env
    Type = var.type
  }
}

# Associating Public Subnet in AZ1 with the route table
resource "aws_route_table_association" "public_subnet_az1_route_table_association" {
  subnet_id      = aws_subnet.public_subnet_az1.id
  route_table_id = aws_route_table.public_route_table.id
}

# Associating Public Subnet in AZ2 with the route table
resource "aws_route_table_association" "public_subnet_az2_route_table_association" {
  subnet_id      = aws_subnet.public_subnet_az2.id
  route_table_id = aws_route_table.public_route_table.id
}
```
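If you later expose Kubernetes Services through AWS load balancers, it is common to tag the public subnets so that the load-balancer integration can discover them. The following is a sketch of the AZ1 subnet with those tags added; the kubernetes.io/role/elb and kubernetes.io/cluster/<cluster-name> keys follow the EKS subnet-tagging convention, and whether you need them depends on how you expose your services. It would replace the public_subnet_az1 resource above, and the AZ2 subnet would get the same tags.

```hcl
# Sketch: public subnet in AZ1 with optional EKS load-balancer discovery tags.
# Replaces the aws_subnet "public_subnet_az1" block above; tag AZ2 the same way.
resource "aws_subnet" "public_subnet_az1" {
  vpc_id                  = aws_vpc.eks_vpc.id
  cidr_block              = var.public_subnet_az1_cidr
  availability_zone       = data.aws_availability_zones.available_zones.names[0]
  map_public_ip_on_launch = true

  tags = {
    Name = "Public Subnet AZ1"
    Env  = var.env
    Type = var.type

    # Marks the subnet as usable for internet-facing load balancers
    "kubernetes.io/role/elb" = "1"

    # Associates the subnet with this cluster ("shared" means other clusters may use it too)
    "kubernetes.io/cluster/${var.cluster_name}" = "shared"
  }
}
```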

- Create the modules/vpc/variables.tf file and add the code below to it.

```hcl
# Environment
variable "env" {
  type = string
}

# Type
variable "type" {
  type = string
}

# Stack name
variable "cluster_name" {
  type = string
}

# VPC CIDR
variable "vpc_cidr" {
  type    = string
  default = "10.0.0.0/16"
}

# CIDR of public subnet in AZ1
variable "public_subnet_az1_cidr" {
  type    = string
  default = "10.0.1.0/24"
}

# CIDR of public subnet in AZ2
variable "public_subnet_az2_cidr" {
  type    = string
  default = "10.0.2.0/24"
}
```
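Terraform variables can carry validation rules, which catch a malformed CIDR at plan time instead of at the AWS API. As a sketch (the validation block is an addition, not part of the original module), the vpc_cidr variable could look like this:

```hcl
# VPC CIDR, with a sketch of input validation
variable "vpc_cidr" {
  type    = string
  default = "10.0.0.0/16"

  validation {
    # cidrhost() errors on a malformed CIDR string, and can() turns that error into false
    condition     = can(cidrhost(var.vpc_cidr, 0))
    error_message = "The vpc_cidr value must be a valid CIDR block, for example 10.0.0.0/16."
  }
}
```

Related design option: with the default VPC CIDR, cidrsubnet(var.vpc_cidr, 8, 1) evaluates to 10.0.1.0/24 and cidrsubnet(var.vpc_cidr, 8, 2) to 10.0.2.0/24, matching the two subnet defaults, so the subnet CIDRs could be derived from vpc_cidr instead of being set separately.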

- Create the modules/vpc/outputs.tf file and add the code below to it.

```hcl
# VPC ID
output "vpc_id" {
  value = aws_vpc.eks_vpc.id
}

# ID of subnet in AZ1
output "public_subnet_az1_id" {
  value = aws_subnet.public_subnet_az1.id
}

# ID of subnet in AZ2
output "public_subnet_az2_id" {
  value = aws_subnet.public_subnet_az2.id
}

# Internet Gateway ID
output "internet_gateway" {
  value = aws_internet_gateway.eks_internet_gateway.id
}
```
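The module exposes each subnet ID separately, so callers have to assemble the list themselves. An optional addition (the public_subnet_ids output name is hypothetical) would expose both IDs as a single list:

```hcl
# Sketch: both public subnet IDs as one list (hypothetical output name)
output "public_subnet_ids" {
  value = [
    aws_subnet.public_subnet_az1.id,
    aws_subnet.public_subnet_az2.id,
  ]
}
```

A module that accepts a list of subnets could then receive module.vpc.public_subnet_ids directly, and adding a third AZ would only change the VPC module.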

Step 2: Create the module for the Security Group

- Create the modules/security-group/main.tf file and add the code below to it.

```hcl
# Create Security Group for the EKS worker nodes and kubectl server
resource "aws_security_group" "eks_security_group" {
  name   = "Worker node security group"
  vpc_id = var.vpc_id

  ingress {
    description = "All access"
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }

  egress {
    description = "Outbound access"
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }

  tags = {
    Name = "${var.cluster_name}-EKS-security-group"
    Env  = var.env
    Type = var.type
  }
}
```

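To keep this tutorial simple, the security group allows all inbound traffic from any IP address. This is convenient for testing but too permissive for production, where ingress should be limited to the ports and source ranges you actually need.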
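A tighter variant could allow all traffic only from inside the VPC (where the nodes, the kubectl server, and the EKS control-plane network interfaces live) and SSH only from an administrator's address range. This is a sketch under assumptions: vpc_cidr and admin_cidr are hypothetical new inputs for the module, and Services exposed through NodePorts would no longer be reachable from the internet.

```hcl
# Sketch only: a tighter version of the security group in modules/security-group/main.tf.
# vpc_cidr and admin_cidr are hypothetical new inputs for this module.
variable "vpc_cidr" {
  type        = string
  description = "CIDR of the VPC, e.g. 10.0.0.0/16 (the VPC module's default)"
}

variable "admin_cidr" {
  type        = string
  description = "Range allowed to SSH to the instances, e.g. 203.0.113.0/24"
}

resource "aws_security_group" "eks_security_group" {
  name   = "Worker node security group"
  vpc_id = var.vpc_id

  # Nodes, the kubectl server, and the EKS control-plane network interfaces
  # are all inside the VPC, so in-VPC traffic stays fully open
  ingress {
    description = "All traffic from inside the VPC"
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = [var.vpc_cidr]
  }

  # SSH (for example, to the kubectl server) only from the admin range
  ingress {
    description = "SSH from the admin range"
    from_port   = 22
    to_port     = 22
    protocol    = "tcp"
    cidr_blocks = [var.admin_cidr]
  }

  egress {
    description = "Outbound access"
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }

  tags = {
    Name = "${var.cluster_name}-EKS-security-group"
    Env  = var.env
    Type = var.type
  }
}
```

The root module would then pass the two new values when calling module "security_groups".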

- Create the modules/security-group/variables.tf file and add the code below to it.

```hcl
# VPC ID
variable "vpc_id" {
  type = string
}

# Environment
variable "env" {
  type = string
}

# Type
variable "type" {
  type = string
}

# Stack name
variable "cluster_name" {
  type = string
}
```

- Create the modules/security-group/outputs.tf file and add the code below to it.

```hcl
# EKS Security Group ID
output "eks_security_group_id" {
  value = aws_security_group.eks_security_group.id
}
```

Step 3: Create the module for the IAM Role

- Create the modules/iam/main.tf file and add the code below to it.

```hcl
# Creating IAM role for the EKS control plane (master)
resource "aws_iam_role" "master" {
  name = "EKS-Master"

  assume_role_policy = jsonencode({
    "Version" : "2012-10-17",
    "Statement" : [
      {
        "Effect" : "Allow",
        "Principal" : {
          "Service" : "eks.amazonaws.com"
        },
        "Action" : "sts:AssumeRole"
      }
    ]
  })
}

# Attaching AWS managed policies to the master role
resource "aws_iam_role_policy_attachment" "AmazonEKSClusterPolicy" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEKSClusterPolicy"
  role       = aws_iam_role.master.name
}

resource "aws_iam_role_policy_attachment" "AmazonEKSServicePolicy" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEKSServicePolicy"
  role       = aws_iam_role.master.name
}

resource "aws_iam_role_policy_attachment" "AmazonEKSVPCResourceController" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEKSVPCResourceController"
  role       = aws_iam_role.master.name
}

# Creating IAM role for the worker nodes
resource "aws_iam_role" "worker" {
  name = "ed-eks-worker"

  assume_role_policy = jsonencode({
    "Version" : "2012-10-17",
    "Statement" : [
      {
        "Effect" : "Allow",
        "Principal" : {
          "Service" : "ec2.amazonaws.com"
        },
        "Action" : "sts:AssumeRole"
      }
    ]
  })
}

# Creating IAM policy for the Cluster Autoscaler
resource "aws_iam_policy" "autoscaler" {
  name = "ed-eks-autoscaler-policy"

  policy = jsonencode({
    "Version" : "2012-10-17",
    "Statement" : [
      {
        "Action" : [
          "autoscaling:DescribeAutoScalingGroups",
          "autoscaling:DescribeAutoScalingInstances",
          "autoscaling:DescribeTags",
          "autoscaling:DescribeLaunchConfigurations",
          "autoscaling:SetDesiredCapacity",
          "autoscaling:TerminateInstanceInAutoScalingGroup",
          "ec2:DescribeLaunchTemplateVersions"
        ],
        "Effect" : "Allow",
        "Resource" : "*"
      }
    ]
  })
}

# Attaching policies to the worker role
resource "aws_iam_role_policy_attachment" "AmazonEKSWorkerNodePolicy" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEKSWorkerNodePolicy"
  role       = aws_iam_role.worker.name
}

resource "aws_iam_role_policy_attachment" "AmazonEKS_CNI_Policy" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEKS_CNI_Policy"
  role       = aws_iam_role.worker.name
}

resource "aws_iam_role_policy_attachment" "AmazonSSMManagedInstanceCore" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonSSMManagedInstanceCore"
  role       = aws_iam_role.worker.name
}

resource "aws_iam_role_policy_attachment" "AmazonEC2ContainerRegistryReadOnly" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEC2ContainerRegistryReadOnly"
  role       = aws_iam_role.worker.name
}

resource "aws_iam_role_policy_attachment" "x-ray" {
  policy_arn = "arn:aws:iam::aws:policy/AWSXRayDaemonWriteAccess"
  role       = aws_iam_role.worker.name
}

resource "aws_iam_role_policy_attachment" "s3" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonS3ReadOnlyAccess"
  role       = aws_iam_role.worker.name
}

resource "aws_iam_role_policy_attachment" "autoscaler" {
  policy_arn = aws_iam_policy.autoscaler.arn
  role       = aws_iam_role.worker.name
}

# Instance profile for the worker role
resource "aws_iam_instance_profile" "worker" {
  depends_on = [aws_iam_role.worker]
  name       = "EKS-worker-nodes-profile"
  role       = aws_iam_role.worker.name
}
```

The code above creates IAM roles for the EKS control plane (master) and the worker nodes, attaches the required policies to each, and creates an instance profile for the worker role.
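As an alternative to writing trust policies with jsonencode(), the AWS provider's aws_iam_policy_document data source builds the same JSON from HCL blocks, which some teams find easier to review. A sketch for the master role (the data source name eks_assume_role is hypothetical) that expresses the same trust policy:

```hcl
# Sketch: the cluster role's trust policy built with aws_iam_policy_document
data "aws_iam_policy_document" "eks_assume_role" {
  statement {
    effect  = "Allow"
    actions = ["sts:AssumeRole"]

    # Allow the EKS service to assume this role
    principals {
      type        = "Service"
      identifiers = ["eks.amazonaws.com"]
    }
  }
}

resource "aws_iam_role" "master" {
  name               = "EKS-Master"
  assume_role_policy = data.aws_iam_policy_document.eks_assume_role.json
}
```

The worker role would follow the same pattern with "ec2.amazonaws.com" as the identifier.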

- Create the modules/iam/outputs.tf file and add the code below to it.

```hcl
# IAM Worker Node Instance Profile
output "instance_profile" {
  value = aws_iam_instance_profile.worker.name
}

# IAM Role Master's ARN
output "master_arn" {
  value = aws_iam_role.master.arn
}

# IAM Role Worker's ARN
output "worker_arn" {
  value = aws_iam_role.worker.arn
}
```
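The worker_arn output only references the role, so Terraform may create the node group before the policy attachments exist, and may delete the attachments before the node group on destroy. One way to close that gap is an explicit depends_on on the output, so that anything reading module.iam.worker_arn also waits for the attachments. A sketch that would replace the worker_arn output above:

```hcl
# IAM Role Worker's ARN, published only after the core policies are attached.
# Resources that read this output (such as the EKS node group) then depend on
# the attachments as well, both on create and on destroy.
output "worker_arn" {
  value = aws_iam_role.worker.arn

  depends_on = [
    aws_iam_role_policy_attachment.AmazonEKSWorkerNodePolicy,
    aws_iam_role_policy_attachment.AmazonEKS_CNI_Policy,
    aws_iam_role_policy_attachment.AmazonEC2ContainerRegistryReadOnly,
  ]
}
```

The same pattern applies to master_arn and the AmazonEKSClusterPolicy attachment.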

Step 5: Create the module for the EKS

We will create an EKS cluster whose managed node group launches worker nodes from a custom AMI through a launch template.

In a production environment, you would typically have automation that builds the AMI for the worker nodes, but for this tutorial I created the custom AMI using Packer. You can create the AMI from here.

- Create the modules/eks/main.tf file and add the code below to it.

```hcl
# Creating EKS Cluster
resource "aws_eks_cluster" "eks" {
  name     = var.cluster_name
  role_arn = var.master_arn
  version  = var.cluster_version

  vpc_config {
    subnet_ids = [var.public_subnet_az1_id, var.public_subnet_az2_id]
  }

  tags = {
    Env  = var.env
    Type = var.type
  }
}

# Fetching the latest Ubuntu 20.04 (Focal) AMI ID for the kubectl server
data "aws_ami" "ubuntu_focal" {
  most_recent = true

  filter {
    name   = "name"
    values = ["ubuntu/images/hvm-ssd/ubuntu-focal-20.04-amd64-server-*"]
  }

  filter {
    name   = "virtualization-type"
    values = ["hvm"]
  }

  owners = ["099720109477"] # Canonical
}

# Creating kubectl server
resource "aws_instance" "kubectl-server" {
  ami                         = data.aws_ami.ubuntu_focal.id
  instance_type               = var.instance_size
  associate_public_ip_address = true
  subnet_id                   = var.public_subnet_az1_id
  vpc_security_group_ids      = [var.eks_security_group_id]

  tags = {
    Name = "${var.cluster_name}-kubectl"
    Env  = var.env
    Type = var.type
  }
}

# Creating Launch Template for Worker Nodes
resource "aws_launch_template" "worker-node-launch-template" {
  name = "worker-node-launch-template"

  # Maps a 20 GiB EBS volume at /dev/sdf
  block_device_mappings {
    device_name = "/dev/sdf"

    ebs {
      volume_size = 20
    }
  }

  image_id      = var.image_id
  instance_type = var.instance_size

  # With a custom AMI, EKS does not add its own bootstrap user data,
  # so each node calls bootstrap.sh itself to join the cluster
  user_data = base64encode(<<-EOF
    MIME-Version: 1.0
    Content-Type: multipart/mixed; boundary="==MYBOUNDARY=="

    --==MYBOUNDARY==
    Content-Type: text/x-shellscript; charset="us-ascii"

    #!/bin/bash
    /etc/eks/bootstrap.sh ${var.cluster_name}

    --==MYBOUNDARY==--
    EOF
  )

  vpc_security_group_ids = [var.eks_security_group_id]

  tag_specifications {
    resource_type = "instance"

    tags = {
      Name = "Worker-Nodes"
    }
  }
}

# Creating Worker Node Group
resource "aws_eks_node_group" "node-grp" {
  cluster_name    = aws_eks_cluster.eks.name
  node_group_name = "Worker-Node-Group"
  node_role_arn   = var.worker_arn
  subnet_ids      = [var.public_subnet_az1_id, var.public_subnet_az2_id]

  launch_template {
    name    = aws_launch_template.worker-node-launch-template.name
    version = aws_launch_template.worker-node-launch-template.latest_version
  }

  labels = {
    env = var.env
  }

  scaling_config {
    desired_size = var.worker_node_count
    max_size     = var.worker_node_count
    min_size     = var.worker_node_count
  }

  update_config {
    max_unavailable = 1
  }
}

locals {
  eks_addons = {
    "vpc-cni" = {
      version           = var.vpc-cni-version
      resolve_conflicts = "OVERWRITE"
    },
    "kube-proxy" = {
      version           = var.kube-proxy-version
      resolve_conflicts = "OVERWRITE"
    }
  }
}

# Creating the EKS Addons
resource "aws_eks_addon" "addons" {
  for_each = local.eks_addons

  cluster_name                = aws_eks_cluster.eks.name
  addon_name                  = each.key
  addon_version               = each.value.version
  resolve_conflicts_on_update = each.value.resolve_conflicts
}
```
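The node group above pins min, max, and desired size to the same value, while the IAM module already attaches a Cluster Autoscaler policy to the worker role. If you do run the Cluster Autoscaler, a common pattern is to give the group a range and tell Terraform to ignore desired_size, so the next apply does not undo the autoscaler's changes. A sketch that would replace the node group above (the 1/1/3 sizes are hypothetical, and labels are omitted for brevity):

```hcl
# Sketch: node group variant for use with the Kubernetes Cluster Autoscaler
resource "aws_eks_node_group" "node-grp" {
  cluster_name    = aws_eks_cluster.eks.name
  node_group_name = "Worker-Node-Group"
  node_role_arn   = var.worker_arn
  subnet_ids      = [var.public_subnet_az1_id, var.public_subnet_az2_id]

  launch_template {
    name    = aws_launch_template.worker-node-launch-template.name
    version = aws_launch_template.worker-node-launch-template.latest_version
  }

  # Hypothetical range; the autoscaler moves desired_size between min and max
  scaling_config {
    desired_size = 1
    min_size     = 1
    max_size     = 3
  }

  update_config {
    max_unavailable = 1
  }

  # Keep Terraform from resetting the size the autoscaler has chosen
  lifecycle {
    ignore_changes = [scaling_config[0].desired_size]
  }
}
```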

From the above code, you can see that I have created the launch template for the node group.

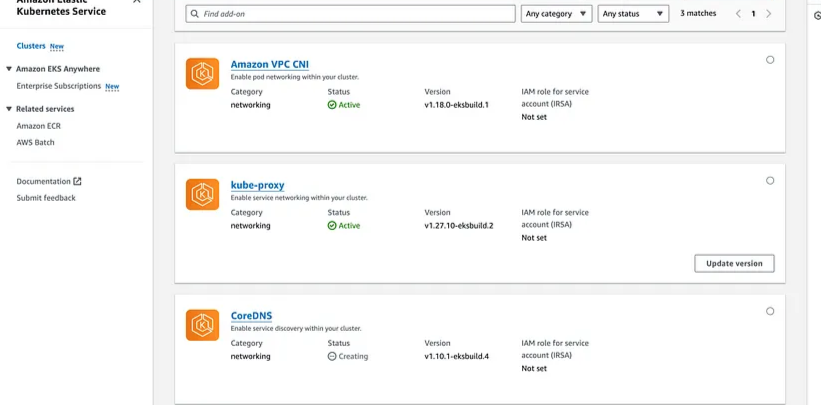

user_data— This config must be exactly set as shown, this is to make sure that during the node startup, it connects to the EKS control plane.I am also installing the VPC CNI & Kube Proxy add-ons.

I am also creating a separate server for Kubelet so that we can run all the commands from that server.
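Called with only the cluster name, bootstrap.sh looks up the API server endpoint and CA certificate at boot through the EKS API. A variant passes both values in directly with the script's --apiserver-endpoint and --b64-cluster-ca options. This sketch assumes the custom AMI is built from the Amazon Linux 2 EKS-optimized AMI scripts (which provide /etc/eks/bootstrap.sh); worker_user_data is a hypothetical local name.

```hcl
# Sketch: MIME user data that passes the cluster endpoint and CA to bootstrap.sh.
# In aws_launch_template.worker-node-launch-template, set:
#   user_data = base64encode(local.worker_user_data)
locals {
  worker_user_data = <<-EOF
    MIME-Version: 1.0
    Content-Type: multipart/mixed; boundary="==MYBOUNDARY=="

    --==MYBOUNDARY==
    Content-Type: text/x-shellscript; charset="us-ascii"

    #!/bin/bash
    /etc/eks/bootstrap.sh ${aws_eks_cluster.eks.name} \
      --apiserver-endpoint ${aws_eks_cluster.eks.endpoint} \
      --b64-cluster-ca ${aws_eks_cluster.eks.certificate_authority[0].data}

    --==MYBOUNDARY==--
  EOF
}
```

Heredocs do not process backslash escapes, so the trailing backslashes reach the shell unchanged and act as line continuations.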

- Create the modules/eks/variables.tf file and add the code below to it.

```hcl
# Environment
variable "env" {
  type        = string
  description = "Environment"
}

# Type
variable "type" {
  type        = string
  description = "Type"
}

# Stack name
variable "cluster_name" {
  type        = string
  description = "Project Name"
}

# Public subnet AZ1
variable "public_subnet_az1_id" {
  type        = string
  description = "ID of Public Subnet in AZ1"
}

# Public subnet AZ2
variable "public_subnet_az2_id" {
  type        = string
  description = "ID of Public Subnet in AZ2"
}

# Security Group
variable "eks_security_group_id" {
  type        = string
  description = "ID of EKS worker node's security group"
}

# Master ARN
variable "master_arn" {
  type        = string
  description = "ARN of master node"
}

# Worker ARN
variable "worker_arn" {
  type        = string
  description = "ARN of worker node"
}

# Worker Node & Kubectl instance size
variable "instance_size" {
  type        = string
  description = "Instance size for the worker nodes and the kubectl server"
}

# Node count
variable "worker_node_count" {
  type        = number
  description = "Worker node count"
}

# AMI ID
variable "image_id" {
  type        = string
  description = "AMI ID"
}

# Cluster Version
variable "cluster_version" {
  type        = string
  description = "Cluster Version"
}

# VPC CNI Version
variable "vpc-cni-version" {
  type        = string
  description = "VPC CNI Version"
}

# Kube Proxy Version
variable "kube-proxy-version" {
  type        = string
  description = "Kube Proxy Version"
}
```

- Create the modules/eks/outputs.tf file and add the code below to it.

```hcl
# EKS Cluster Name
output "aws_eks_cluster_name" {
  value = aws_eks_cluster.eks.name
}
```
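If other tooling needs to reach the cluster (for example, to generate a kubeconfig or to pass connection details to bootstrap.sh), the module could also export the API endpoint and the cluster CA certificate. A sketch with hypothetical output names, using attributes that aws_eks_cluster exports:

```hcl
# Sketch: extra cluster outputs (hypothetical names)

# HTTPS endpoint of the Kubernetes API server
output "cluster_endpoint" {
  value = aws_eks_cluster.eks.endpoint
}

# Base64-encoded certificate authority data for the cluster
output "cluster_certificate_authority_data" {
  value = aws_eks_cluster.eks.certificate_authority[0].data
}
```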

Step 6: Calling the modules

We are done creating all the modules. Now we need to call them from the root module to create the resources.

- Create the root main.tf file and add the code below to it.

```hcl
# Creating VPC
module "vpc" {
  source       = "./modules/vpc"
  cluster_name = var.cluster_name
  env          = var.env
  type         = var.type
}

# Creating security group
module "security_groups" {
  source       = "./modules/security-group"
  vpc_id       = module.vpc.vpc_id
  cluster_name = var.cluster_name
  env          = var.env
  type         = var.type
}

# Creating IAM resources
module "iam" {
  source = "./modules/iam"
}

# Creating EKS Cluster
module "eks" {
  source                = "./modules/eks"
  master_arn            = module.iam.master_arn
  worker_arn            = module.iam.worker_arn
  public_subnet_az1_id  = module.vpc.public_subnet_az1_id
  public_subnet_az2_id  = module.vpc.public_subnet_az2_id
  env                   = var.env
  type                  = var.type
  eks_security_group_id = module.security_groups.eks_security_group_id
  instance_size         = var.instance_size
  cluster_name          = var.cluster_name
  worker_node_count     = var.instance_count
  image_id              = var.ami_id
  cluster_version       = var.cluster_version
  vpc-cni-version       = var.vpc-cni-version
  kube-proxy-version    = var.kube-proxy-version
}
```

- Create the provider.tf file and add the code below to it.

```hcl
# Configure required providers
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0"
    }
  }
}

# Configure the AWS Provider (region comes from terraform.tfvars)
provider "aws" {
  region = var.region
}
```

Note: Change the region to match your environment. If you store Terraform state in an S3 backend, also set your own bucket and DynamoDB table names.
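The configuration above keeps state locally. If you want remote state, a minimal sketch is to add an S3 backend with DynamoDB locking to the existing terraform block in provider.tf. The bucket, key, and table names below are hypothetical placeholders; the bucket and table must exist beforehand, and backend blocks cannot reference variables, so the values are literals.

```hcl
# Sketch: provider.tf terraform block with an S3 backend added
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0"
    }
  }

  backend "s3" {
    bucket         = "my-terraform-state-bucket" # hypothetical bucket name
    key            = "eks/terraform.tfstate"     # path of the state object in the bucket
    region         = "ap-northeast-1"
    dynamodb_table = "terraform-state-lock"      # hypothetical; partition key must be LockID (String)
    encrypt        = true
  }
}
```

Recent Terraform releases also offer S3-native locking (use_lockfile) and deprecate dynamodb_table, so check the backend documentation for your Terraform version.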

- Create the root variables.tf file and add the code below to it.

```hcl
# Stack Name
variable "cluster_name" {
  type = string
}

# Worker node and kubectl server instance size
variable "instance_size" {
  type = string
}

# Region
variable "region" {
  type = string
}

# Environment
variable "env" {
  type    = string
  default = "Prod"
}

# Type
variable "type" {
  type    = string
  default = "Production"
}

# Worker node count
variable "instance_count" {
  type = number
}

# AMI ID
variable "ami_id" {
  type = string
}

# Cluster Version
variable "cluster_version" {
  type = string
}

# VPC CNI Version
variable "vpc-cni-version" {
  type        = string
  description = "VPC CNI Version"
}

# Kube Proxy Version
variable "kube-proxy-version" {
  type        = string
  description = "Kube Proxy Version"
}
```

- Create the terraform.tfvars file and add the code below to it.

```hcl
cluster_name       = "Prod-Cluster"
instance_count     = 1
instance_size      = "t2.micro"
region             = "ap-northeast-1"
cluster_version    = "1.27"
ami_id             = "ami-0595d6e81396a9efb"
vpc-cni-version    = "v1.18.0-eksbuild.1"
kube-proxy-version = "v1.27.10-eksbuild.2"
```

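Note: Change ami_id and region to match your account and environment. AMI IDs are Region-specific, so the AMI must exist in the Region you deploy to.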


Step 7: Initialize the Working Directory

Run terraform init in your working directory. This command downloads the required providers and modules and initializes the backend configuration.

Step 8: Generate a Terraform Execution Plan

Run terraform plan in the working directory to generate an execution plan. This plan outlines the actions Terraform will take to create, modify, or delete resources as defined in your configuration.

Step 9: Apply Terraform Configuration

Execute terraform apply in the working directory. This command applies the Terraform configuration and provisions all required AWS resources based on your defined infrastructure.

Step 10: Retrieve the Kubernetes Configuration

To obtain the Kubernetes configuration file for your cluster, run the following command:

```bash
aws eks update-kubeconfig --name <cluster-name> --region <region>
```

Replace <cluster-name> with your EKS cluster's name and <region> with the AWS region where your cluster is deployed.
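To avoid typing the cluster name and Region by hand, the root module could print the ready-made command after terraform apply. A sketch with a hypothetical output name, placed in the root main.tf (or a new root outputs.tf) and built from the EKS module's existing aws_eks_cluster_name output and the region variable:

```hcl
# Sketch: root-level output that prints the update-kubeconfig command for this cluster
output "update_kubeconfig_command" {
  value = "aws eks update-kubeconfig --name ${module.eks.aws_eks_cluster_name} --region ${var.region}"
}
```

After applying, terraform output -raw update_kubeconfig_command prints the command without surrounding quotes, ready to paste into a shell.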

Step 11: Verify Node Details

Run the following command to verify details about the nodes in your Kubernetes cluster:

```bash
kubectl get nodes
```

This command displays information about all nodes currently registered with your Kubernetes cluster.

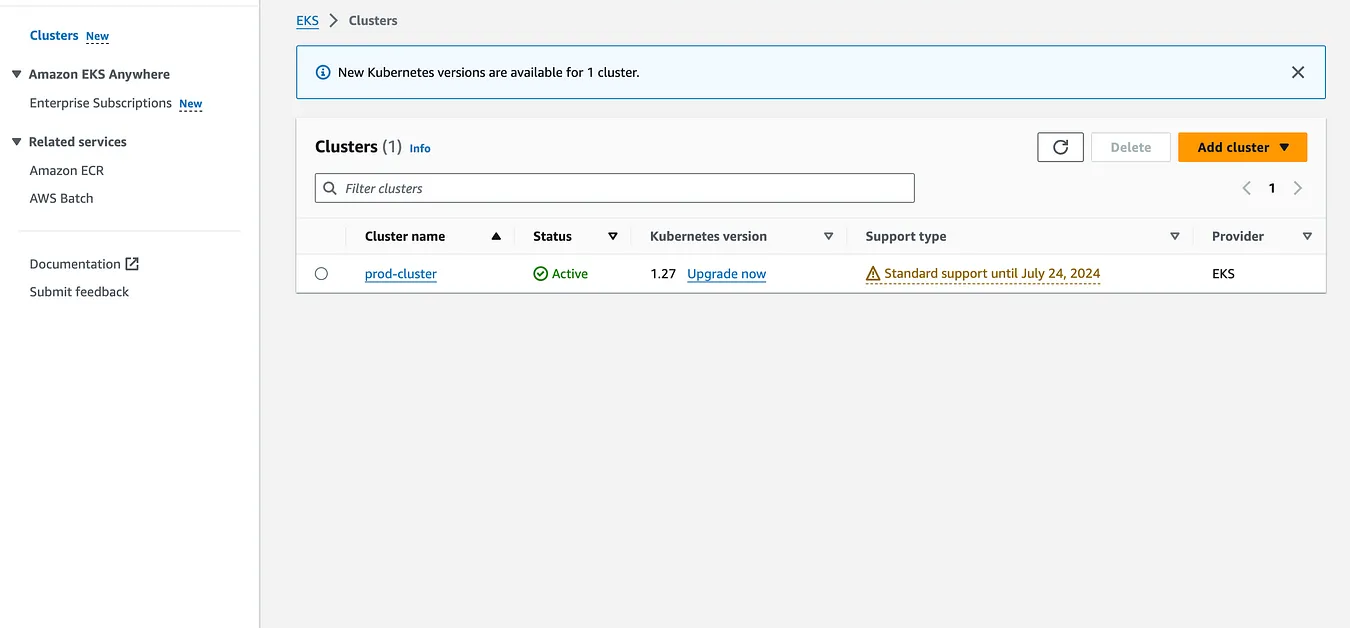

I installed the CoreDNS add-on from the EKS console.
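CoreDNS can also be managed with Terraform alongside the other add-ons, which keeps the whole cluster reproducible. A sketch for modules/eks, assuming a hypothetical coredns-version variable; the depends_on on the node group is there because CoreDNS pods need worker nodes to run on before the add-on can become healthy.

```hcl
# Sketch: CoreDNS managed by Terraform instead of the console (modules/eks)

# Hypothetical new variable; set it in terraform.tfvars via the root module
variable "coredns-version" {
  type        = string
  description = "CoreDNS add-on version"
}

resource "aws_eks_addon" "coredns" {
  cluster_name                = aws_eks_cluster.eks.name
  addon_name                  = "coredns"
  addon_version               = var.coredns-version
  resolve_conflicts_on_update = "OVERWRITE"

  # Wait for worker nodes so the CoreDNS pods can be scheduled
  depends_on = [aws_eks_node_group.node-grp]
}
```

To find versions compatible with your cluster, run aws eks describe-addon-versions --addon-name coredns --kubernetes-version 1.27.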

Code can be found at: https://github.com/Saurabh-DevOpsVoyager77/EKS-managed_node_group_Terraform_Custom_Templates.git

Conclusion

Amazon Elastic Kubernetes Service (Amazon EKS) simplifies running Kubernetes on AWS: it manages the control plane for you, and managed node groups automate the provisioning and lifecycle management of worker nodes. This tutorial demonstrated how to set up an EKS cluster with a managed node group that uses a custom launch template and AMI, all provisioned with modular Terraform code. With the infrastructure handled this way, teams can focus on application development, which makes EKS a good fit for cloud-native architectures and DevOps practices.
