Task Adaptation in Small Language Models: Fine-Tuning Phi-3

Pablo Salvador Lopez

Pablo Salvador Lopez

Small Language Models (SLMs) are gaining popularity due to their efficiency and speed. They are ideal for specialized tasks, especially in projects with limited resources. SLMs offer faster data processing, making them suitable for real-time applications and niche areas, and they can be fine-tuned easily for custom solutions.

🆚 Comparing Small and Large Language Models

The main difference between Small Language Models (SLMs) and Large Language Models (LLMs) is their training scale and capabilities. LLMs are trained on large amounts of data, allowing them to handle tasks that need a broad understanding of the world, complex reasoning, and advanced functions like orchestration and tool use. These models are ideal for applications where a wide-ranging grasp of context and creativity is important.

On the other hand, SLMs, such as Phi-3, excel in more specific roles. They are especially useful in situations that require precise language understanding within a limited domain. This makes them very effective for specialized tasks where extensive world knowledge is not as important.

When to Opt for SLMs:

Resource Efficiency: SLMs use fewer resources, making them perfect for projects with limited computational power or budget constraints.

Faster Inference: SLMs process data quickly, making them suitable for real-time applications, even on less powerful machines.

Domain-Specific Tasks: For niche areas, SLMs are practical and cost-effective. They can be fine-tuned more easily, providing custom solutions without a large investment.

One of the best SLM options today is Phi-3, "Tiny but mighty." So let's explore...

🌐The Phi-3 Universe

Microsoft released exciting updates to their Phi-3 model lineup, adding multimodal capabilities to small language models with impressive results. Here's the lowdown on the new features:

Phi-3-Vision (4.2 billion parameters, 128K context length): This multimodal marvel integrates language and vision seamlessly, making it perfect for interpreting real-world images, extracting text from visuals, and analyzing charts and diagrams.

Phi-3-Small (7 billion parameters, 128K and 8K context lengths): A versatile powerhouse in language, reasoning, coding, and math. It sets new benchmarks for performance, combining efficiency with cost-effectiveness.

Phi-3-Medium (14 billion parameters, 128K and 4K context lengths): Known for surpassing larger models in understanding and reasoning tasks, this model excels in complex language and coding challenges.

Phi-3-Mini (3.8 billion parameters, 128K and 4K context lengths): Perfect for long-context scenarios given its size, it excels in reasoning tasks. Available through MaaS, these models are easy to integrate into projects (accessed in regions like East US2 and Sweden Central on Azure AI Studio).

🤔 How to get access to Phi-3?

It is available on Microsoft Azure AI Studio, Hugging Face, and Ollama. For more details, check out Phi-3 Models & Availability across platforms.

👀 Why Fine-Tuning Phi-3?

When optimizing language models for specific scenarios, I always recommend starting with a structured approach:

Prompt Engineering: Begin with simple, clear prompts to direct the model's responses efficiently.

Retrieval Augmented Generation (RAG): Enhance these prompts with timely, external data for precision and relevance.

Fine-Tuning: Dive deeper to tailor models to particular tasks. This step is less common due to its complexity but incredibly effective.

Training a New Model: The most customized, resource-intensive option involves building a model from scratch to meet unique requirements.

However, with the release of Phi-3 and their improved scores and capabilities, fine-tuning might be your go-to option in these situations:

Efficiency on the Edge: Perfect for environments with limited computational power, these models are great for tasks on edge devices.

Instant Insights, Anywhere: Designed for quick, local inference, Phi-3 models are ideal for responsive offline applications.

Enhance agent architecture with domain-specific, low-latency agents. Multi-agent architecture allows you to create "conversation" specialized agents and low latency domain-specific tasks require fine-tuning Phi-3, which is definitely an option.

👨🔧Before to Start Fine-Tuning

Let's understand the training pipeline for a LLM/SLM at a high level. It involves three main steps: starting with pretraining, where the model learns to predict the next token in unlabeled text sequences using unsupervised learning from a large text corpus; moving to finetuning, where it undergoes supervised learning to improve its predictions based on labeled data for more accuracy in specific tasks; and ending with alignment, where advanced techniques like Proximal Policy Optimization further refine the model's responses to align closely with specific goals or standards. The output is a foundational language model (also known as pretrained) with a very large number of parameters (or "weights").

There are different approaches to fine-tuning

Supervised Fine-Tuning (SFT): Adapts models to new tasks using prompt-completion pairs, needing high-quality data.

Reinforcement Learning from Human Feedback (RLHF): Aligns models with human preferences through feedback, providing high steerability but requiring quality data.

Continual Pre-Training (CPT): Further pre-training with extra data, though it can be expensive and has limited use.

Why might you not want to do a full fine-tuning?

Full fine-tuning, where all model weights are updated, comes with several drawbacks:

Memory-Intensive Requirements: Managing large models and their associated data during training demands substantial memory, which can be prohibitive on standard hardware.

Catastrophic Forgetting: Full fine-tuning risks overwriting previously learned information, which can degrade the model’s performance on earlier tasks.

High Storage and Computational Costs: Maintaining multiple model versions for different tasks escalates both storage and computational expenses.

So, for practical reasons, full fine-tuning might not be the best idea..

All you need to know is Parameter Efficient Fine-Tuning (PEFT)

To tackle above challenges, researchers have developed PEFT methods. PEFT is a type of supervised fine-tuning that aims to improve model behavior without extensive retraining.

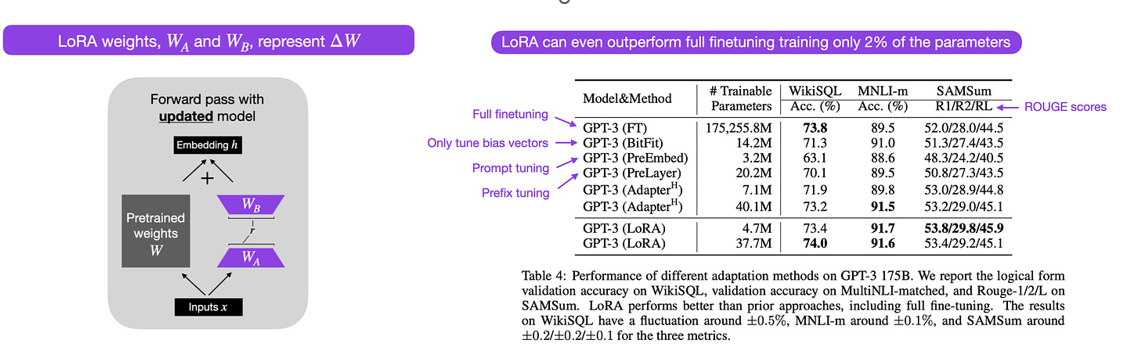

PEFT techniques focus on fine-tuning fewer parameters to maintain or even enhance the model's performance while using fewer resources. Two notable PEFT methods are Low-Rank Approximation (LoRA) and Prompt Tuning. These methods allow for efficient adaptation of LLMs to specific tasks, making them practical for various applications.

LoRA introduces a pair of rank decomposition matrices that modify a portion of the original model weights. These matrices are smaller and capture essential task-specific information without overhauling the entire parameter set.

https://arxiv.org/abs/2106.09685

Benefits:

Reduced Trainable Parameters: LoRA targets a minimal number of parameters, significantly cutting down memory usage and computational demands.

Efficient Inference: The smaller dimensions of the rank matrices ensure that inference remains swift, avoiding latency issues.

Selective Application: Primarily applied to attention layers, LoRA maximizes parameter efficiency without sacrificing performance.

🤖 How to Fine-Tune Phi-3 with Azure

When fine-tuning the Phi-3 model, Azure offers two pathways tailored to different needs and expertise levels: Cloud-Hosted Managed Services and Self-Managed Fine-Tuning. Each option has unique benefits, allowing you to choose the best fit for your project.

Azure's Cloud-Hosted Managed Services provide an easy way to fine-tune your models, ideal for teams wanting to streamline AI operations without managing infrastructure. It uses low-rank approximation (LoRA) for efficient fine-tuning, allowing you to focus on adjusting a subset.

This option is perfect for your team if you are looking for:

Fully Managed Services: Azure handles all the infrastructure, letting you focus solely on fine-tuning your models.

Scalability: Easily scale your operations up or down based on your needs, without worrying about hardware limitations.

Security and Compliance: Benefit from Azure's built-in security features, ensuring your data and models are protected to the highest standards.

Example: Check out the easy-to-use fine-tuning of Phi-3 in Azure AI Studio: Phi-3 Fine-Tuning Guide

Self-Managed Fine-Tuning: For teams that can manage their own infrastructure and prefer a hands-on approach, Self-Managed Fine-Tuning on Azure offers the flexibility to use open-source tools and frameworks.

This option is ideal for:

"White Box" LLMs Fine-Tuning: Efficiently fine-tune open-source or "white box" Large Language Models on small GPU clusters or even single GPU machines.

Advanced Techniques: Use Azure's powerful infrastructure to experiment with advanced fine-tuning methods, such as reinforcement learning, to enhance your models' capabilities.

Customization and Control: Have complete control over your fine-tuning environment, allowing for deep customization and optimization of your models and infrastructure.

Let's use Azure ML to manage your infrastructure remotely and use the peft library to apply QLoRA for fine-tuning. Check out the example here: azure-llm-fine-tuning/fine-tuning/1_training_mlflow.ipynb at main · daekeun-ml/azure-llm-fine-tuning (github.com)

Did you find it interesting? Subscribe to receive automatic alerts when I publish new articles and explore different series.

More quick how-to's in this series here: 📚🔧 Azure AI Practitioner: Tips and Hacks 💡

Explore my insights and key learnings on implementing Generative AI software systems in the world's largest enterprises. GenAI in Production 🧠

Join me to explore and analyze advancements in our industry shaping the future, from my personal corner and expertise in enterprise AI engineering. AI That Matters: My Take on New Developments 🌟

And... let's connect! We are one message away from learning from each other!

🔗 LinkedIn: Let’s get linked!

🧑🏻💻GitHub: See what I am building.

Subscribe to my newsletter

Read articles from Pablo Salvador Lopez directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Pablo Salvador Lopez

Pablo Salvador Lopez

As a seasoned engineer with extensive experience in AI and machine learning, I possess a blend of skills in full-stack data science, machine learning, and software engineering, complemented by a solid foundation in mathematics. My expertise lies in designing, deploying, and monitoring GenAI & ML enterprise applications at scale, adhering to MLOps/LLMOps and best practices in software engineering. At Microsoft, as part of the AI Global Black Belt team, I empower the world's largest enterprises with cutting-edge AI and machine learning solutions. I love to write and share with the AI community in an open-source setting, believing that the best part of our work is accelerating the AI revolution and contributing to the democratization of knowledge. I'm here to contribute my two cents and share my insights on my AI journey in production environments at large scale. Thank you for reading!