ElasticSearch on AWS EC2 using Terraform

Sagar Budhathoki


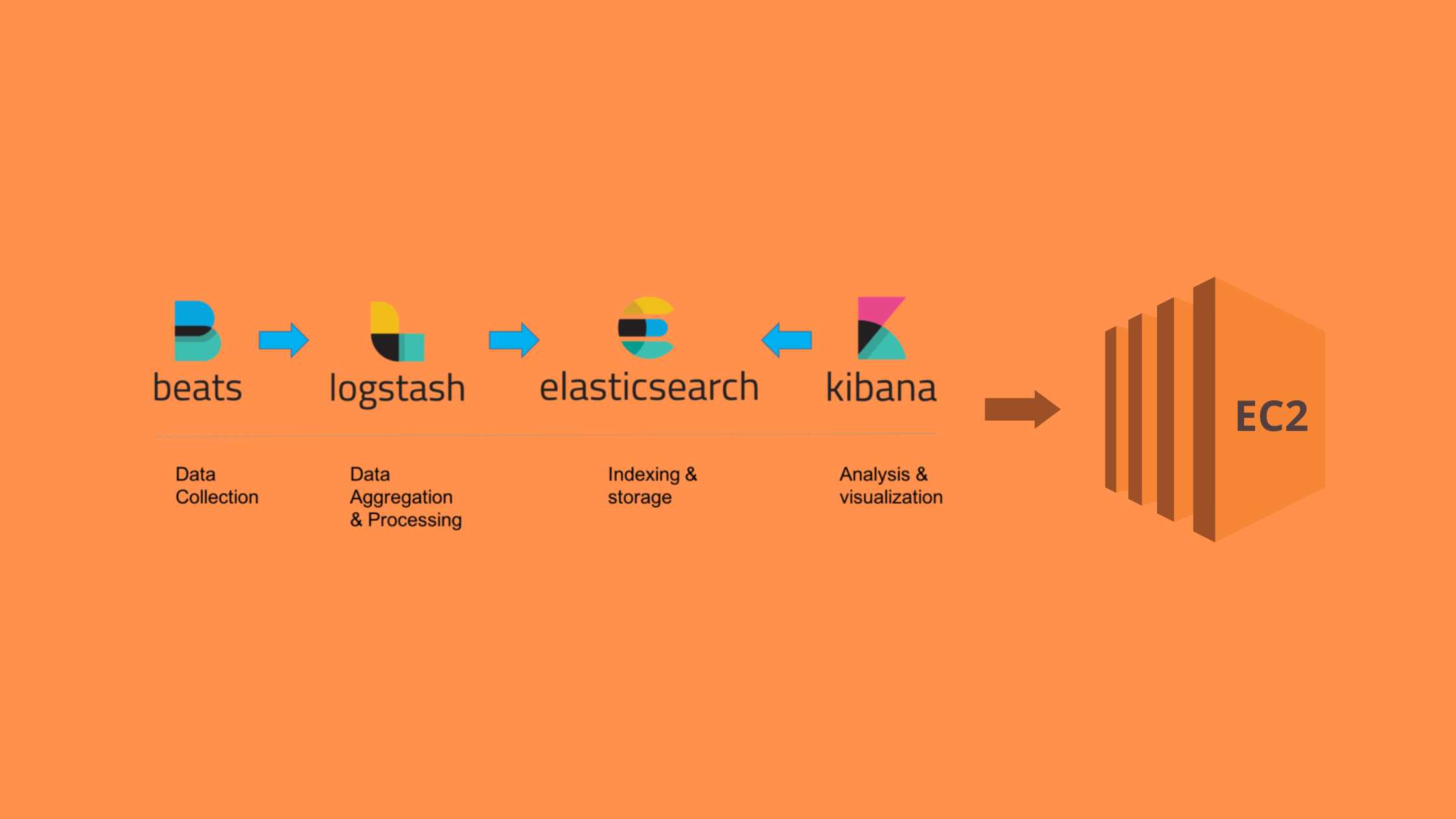

In this article, we’ll learn how to set up the Elastic Stack on AWS EC2. The Elastic Stack consists of ElasticSearch, Filebeat, LogStash, and Kibana (often called the ELK stack), which together bring all logs and traces into a single place. It is one of the most popular toolsets for storing and viewing logs.

In the Elastic Stack:

ElasticSearch is used to store data.

Filebeat ships the logs to ElasticSearch through LogStash.

LogStash filters the logs.

Kibana helps in visualizing the data and navigating the logs.

In this article, we’re going to deploy the Elastic Stack on AWS EC2 using Terraform.

Why run your own Elastic Stack on AWS EC2 instead of hosted services

We can run ElasticSearch on AWS either through Elastic Cloud or through Amazon OpenSearch Service (formerly Amazon Elasticsearch Service). Running our own ElasticSearch on AWS EC2 instead of a hosted service has the following advantages:

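Often cheaper than a managed service for the same capacity, since you pay for the EC2 instances and storage directly (in exchange for operating the cluster yourself)

Full control over configuration, accessibility, and visibility

Easy plugin installation

Direct access to the server logs

Freedom to make any configuration change

No restriction on the instance type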

PREREQUISITES

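Basic knowledge of AWS and Terraform

AWS credentials that Terraform can use

An SSH key pair saved as ~/.ssh/elasticsearch_keypair.pem and ~/.ssh/elasticsearch_keypair.pub (the code uses it to create the EC2 key pair and to connect to the instances)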
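The snippets in this article reference a region and several input variables (var.az_name, var.elastic_aws_ami, var.elastic_aws_instance_type, var.lambda_sg) that are never declared. Below is a minimal sketch of that scaffolding, assuming the ap-south-1 region (inferred from the ap-south-1a and ap-south-1b zones used later). The variable names come from the article; their types, defaults, and descriptions are assumptions.

```hcl
# Sketch: provider setup and the input variables the article's snippets rely on.
# The region and all defaults below are assumptions, not values from the article.
terraform {
  required_providers {
    aws = {
      source = "hashicorp/aws"
    }
    null = {
      source = "hashicorp/null" # for the null_resource provisioners
    }
    template = {
      source = "hashicorp/template" # only needed for data "template_file"
    }
  }
}

provider "aws" {
  # Credentials are read from the usual places (environment variables, ~/.aws/credentials, ...).
  region = "ap-south-1"
}

variable "az_name" {
  description = "Availability zones used for the subnets and instances"
  type        = list(string)
  default     = ["ap-south-1a", "ap-south-1b"]
}

variable "elastic_aws_ami" {
  description = "Amazon Linux AMI for the ElasticSearch nodes (the scripts use yum and ec2-user)"
  type        = string
}

variable "elastic_aws_instance_type" {
  description = "Instance type for the ElasticSearch nodes"
  type        = string
  default     = "t2.large"
}

variable "lambda_sg" {
  description = "Security group ID of a Lambda function that queries ElasticSearch"
  type        = string
}
```

Variables without a default must be supplied, for example in a terraform.tfvars file.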

Creating Elastic Stack on AWS EC2

Here we’ll create the Elastic Stack inside a VPC. Logs are written under /var/log. Filebeat, running on an EC2 instance, reads these logs and ships them to LogStash, which applies filters and forwards the results to ElasticSearch.

Finally, Kibana is configured to read from ElasticSearch, so the logs can be explored from the Kibana dashboard.

Configuration Set-Up (Important)

Each component must be installed on its EC2 server before it can be configured. For simplicity, we render each configuration file with Terraform’s template_file data source, copy it to the instance, and use it to replace the component’s default configuration file. This must be done before the component is started.
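The template_file data source comes from the hashicorp/template provider, which is deprecated and archived (it also has no builds for some newer platforms such as darwin_arm64). Terraform 0.12 and later include a built-in templatefile() function that renders the same .tpl files. The sketch below assumes the same template path and variables as data "template_file" "init_elasticsearch" further down; local.elasticsearch_configs is a hypothetical name.

```hcl
# Sketch: render the master-node configs with the built-in templatefile() function
# instead of the template_file data source. Template path and variables mirror
# data "template_file" "init_elasticsearch".
locals {
  elasticsearch_configs = [
    for i in range(2) : templatefile("${path.module}/elasticsearch/configs/elasticsearch_config.tpl", {
      cluster_name = "elasticsearch_cluster"
      node_name    = "node_${i}"
      node         = aws_instance.elastic_nodes[i].private_ip
      node1        = aws_instance.elastic_nodes[0].private_ip
      node2        = aws_instance.elastic_nodes[1].private_ip
      node3        = aws_instance.elastic_datanodes[0].private_ip
    })
  ]
}

# In null_resource "move_es_file", the file provisioner would then use:
#   content = local.elasticsearch_configs[count.index]
```

Unlike template_file, templatefile() needs no extra provider and accepts non-string values (lists, maps) as template variables.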

VPC and subnet set-up

# Basic setup: VPC, internet gateway, route table, and subnets

resource "aws_vpc" "elastic_stack_vpc" {
  cidr_block = "172.20.0.0/16"

  tags = {
    Name = "example-elasticsearch_vpc"
  }
}

resource "aws_internet_gateway" "elastic_stack_ig" {
  vpc_id = aws_vpc.elastic_stack_vpc.id

  tags = {
    Name = "example_elasticsearch_igw"
  }
}

resource "aws_route_table" "elastic_stack_rt" {
  vpc_id = aws_vpc.elastic_stack_vpc.id

  route {
    cidr_block = "0.0.0.0/0"
    gateway_id = aws_internet_gateway.elastic_stack_ig.id
  }

  tags = {
    Name = "example_elasticsearch_rt"
  }
}

resource "aws_main_route_table_association" "elastic_stack_rt_main" {
  vpc_id         = aws_vpc.elastic_stack_vpc.id
  route_table_id = aws_route_table.elastic_stack_rt.id
}

resource "aws_subnet" "elastic_stack_subnet" {
  # One /24 subnet per availability zone
  for_each = {
    "ap-south-1a" = cidrsubnet("172.20.0.0/16", 8, 10)
    "ap-south-1b" = cidrsubnet("172.20.0.0/16", 8, 20)
  }

  vpc_id            = aws_vpc.elastic_stack_vpc.id
  availability_zone = each.key
  cidr_block        = each.value

  tags = {
    Name = "elasticsearch_subnet_${each.key}"
  }
}
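The subnet map above hard-codes one CIDR per zone. If you prefer to derive it from the zone list, a sketch like the following reproduces the same two blocks (172.20.10.0/24 and 172.20.20.0/24) from var.az_name; local.vpc_cidr and local.subnet_cidrs are hypothetical names.

```hcl
# Sketch: build the AZ -> CIDR map from var.az_name instead of hard-coding it.
# netnum 10, 20, ... reproduces the article's CIDRs:
#   ap-south-1a -> cidrsubnet("172.20.0.0/16", 8, 10) = "172.20.10.0/24"
#   ap-south-1b -> cidrsubnet("172.20.0.0/16", 8, 20) = "172.20.20.0/24"
locals {
  vpc_cidr = "172.20.0.0/16"

  subnet_cidrs = {
    for i, az in var.az_name : az => cidrsubnet(local.vpc_cidr, 8, (i + 1) * 10)
  }
}

# aws_subnet.elastic_stack_subnet could then use:
#   for_each = local.subnet_cidrs
```

Adding a third zone to var.az_name would then add a third subnet (172.20.30.0/24) without touching the resource.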

Now, set up the ElasticSearch cluster:

ElasticSearch cluster set-up

In this section, we’ll set up an ElasticSearch cluster of two master nodes and one data node, spread across the two availability zones. The ElasticSearch security group opens port 9200 (the HTTP API) so that Kibana can reach the cluster; the rule’s range up to 9300 also covers the transport port that nodes use to talk to each other.

# ElasticSearch security group

resource "aws_security_group" "elasticsearch_sg" {
  vpc_id      = aws_vpc.elastic_stack_vpc.id
  description = "ElasticSearch Security Group"

  ingress {
    description = "SSH"
    cidr_blocks = ["0.0.0.0/0"]
    from_port   = 22
    protocol    = "tcp"
    to_port     = 22
  }

  # Without this rule the nodes cannot reach each other on 9300 and the cluster never forms
  ingress {
    description = "HTTP and transport traffic between ElasticSearch nodes"
    from_port   = 9200
    protocol    = "tcp"
    to_port     = 9300
    self        = true
  }

  ingress {
    description     = "Kibana and LogStash access to ElasticSearch"
    from_port       = 9200
    protocol        = "tcp"
    to_port         = 9300
    security_groups = [aws_security_group.kibana_sg.id, aws_security_group.logstash_sg.id]
  }

  # Only needed if you're using Lambda to access ES; remove this block otherwise.
  ingress {
    description     = "Lambda access to ElasticSearch"
    from_port       = 9200
    protocol        = "tcp"
    to_port         = 9300
    security_groups = [var.lambda_sg]
  }

  egress {
    description = "egress rules"
    from_port   = 0
    protocol    = "-1"
    to_port     = 0
    cidr_blocks = ["0.0.0.0/0"]
  }

  tags = {
    Name = "elasticsearch_sg"
  }
}

ElasticSearch master nodes:

# ElasticSearch master nodes

resource "aws_key_pair" "elastic_ssh_key" {
  key_name   = "elasticsearch_ssh"
  public_key = file("~/.ssh/elasticsearch_keypair.pub")
}

resource "aws_instance" "elastic_nodes" {
  count = 2

  ami                         = var.elastic_aws_ami
  instance_type               = var.elastic_aws_instance_type
  subnet_id                   = aws_subnet.elastic_stack_subnet[var.az_name[count.index]].id # one node per AZ
  vpc_security_group_ids      = [aws_security_group.elasticsearch_sg.id]
  key_name                    = aws_key_pair.elastic_ssh_key.key_name
  iam_instance_profile        = aws_iam_instance_profile.elastic_ec2_instance_profile.name
  associate_public_ip_address = true

  tags = {
    Name = "elasticsearch dev node-${count.index}"
  }
}
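The nodes reference aws_iam_instance_profile.elastic_ec2_instance_profile, which the article doesn’t define; presumably it lets the S3 backup script reach a bucket. A minimal sketch, assuming a hypothetical variable es_backup_bucket that holds the bucket name and a plain object read/write policy. Adjust the actions to whatever your backup script actually does.

```hcl
# Sketch: IAM role and instance profile for the ElasticSearch nodes.
# es_backup_bucket and the S3 actions are assumptions, not taken from the article.
variable "es_backup_bucket" {
  description = "Name of the S3 bucket used by s3_backup_script.sh (hypothetical)"
  type        = string
}

resource "aws_iam_role" "elastic_ec2_role" {
  name = "elasticsearch-ec2-role"

  # Allow EC2 instances to assume this role
  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Effect    = "Allow"
      Principal = { Service = "ec2.amazonaws.com" }
      Action    = "sts:AssumeRole"
    }]
  })
}

resource "aws_iam_role_policy" "elastic_s3_backup" {
  name = "elasticsearch-s3-backup"
  role = aws_iam_role.elastic_ec2_role.id

  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Effect   = "Allow"
        Action   = ["s3:ListBucket"]
        Resource = "arn:aws:s3:::${var.es_backup_bucket}"
      },
      {
        Effect   = "Allow"
        Action   = ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"]
        Resource = "arn:aws:s3:::${var.es_backup_bucket}/*"
      }
    ]
  })
}

# The name referenced by aws_instance.elastic_nodes and aws_instance.elastic_datanodes
resource "aws_iam_instance_profile" "elastic_ec2_instance_profile" {
  name = "elasticsearch-ec2-instance-profile"
  role = aws_iam_role.elastic_ec2_role.name
}
```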

data "template_file" "init_elasticsearch" {
  depends_on = [
    aws_instance.elastic_nodes
  ]

  count    = 2
  template = file("./elasticsearch/configs/elasticsearch_config.tpl")

  vars = {
    cluster_name = "elasticsearch_cluster"
    node_name    = "node_${count.index}"
    node         = aws_instance.elastic_nodes[count.index].private_ip
    node1        = aws_instance.elastic_nodes[0].private_ip
    node2        = aws_instance.elastic_nodes[1].private_ip
    node3        = aws_instance.elastic_datanodes[0].private_ip
  }
}

data "template_file" "init_backupscript" {
  depends_on = [
    aws_instance.elastic_nodes
  ]

  count    = 2
  template = file("./elasticsearch/configs/s3_backup_script.tpl")

  vars = {
    cluster_name = "elasticsearch_cluster"
    node         = aws_instance.elastic_nodes[count.index].private_ip
    node1        = aws_instance.elastic_nodes[0].private_ip
    node2        = aws_instance.elastic_nodes[1].private_ip
    node3        = aws_instance.elastic_datanodes[0].private_ip
  }
}
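The contents of elasticsearch_config.tpl aren’t shown. As a sketch of what such a template typically has to set for a 7.x cluster, the following builds one elasticsearch.yml per master node with yamlencode(). The setting keys are standard ElasticSearch 7.x settings; giving the master nodes both the master and data roles and using each node’s private IP as network.host are assumptions, and local.es_master_ips and local.elasticsearch_master_yml are hypothetical names.

```hcl
# Sketch: generate elasticsearch.yml for the master nodes without a .tpl file.
locals {
  es_master_ips = aws_instance.elastic_nodes[*].private_ip

  elasticsearch_master_yml = [
    for i, ip in local.es_master_ips : yamlencode({
      "cluster.name" = "elasticsearch_cluster"
      "node.name"    = "node_${i}"
      "node.roles"   = ["master", "data"] # assumption about the role split
      "network.host" = ip
      "http.port"    = 9200
      # Every node needs the addresses of the others to discover the cluster
      "discovery.seed_hosts" = concat(local.es_master_ips, aws_instance.elastic_datanodes[*].private_ip)
      # Must match the node.name values of the master-eligible nodes
      "cluster.initial_master_nodes" = [for j in range(length(local.es_master_ips)) : "node_${j}"]
    })
  ]
}
```

A file provisioner could then upload local.elasticsearch_master_yml[count.index] as its content. For the data node, node.roles would be ["data"] instead.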

resource "aws_eip" "elasticsearch_eip" {
  count    = 2
  instance = aws_instance.elastic_nodes[count.index].id
  vpc      = true # with AWS provider v5+, use domain = "vpc" instead

  tags = {
    Name = "elasticsearch-eip-${terraform.workspace}-${count.index + 1}"
  }
}

resource "null_resource" "move_es_file" {
  count = 2

  connection {
    type        = "ssh"
    user        = "ec2-user"
    private_key = file("~/.ssh/elasticsearch_keypair.pem")
    # Attaching the Elastic IP replaces the instance's original public IP,
    # so connect through the EIP address.
    host = aws_eip.elasticsearch_eip[count.index].public_ip
  }

  provisioner "file" {
    content     = data.template_file.init_elasticsearch[count.index].rendered
    destination = "elasticsearch.yml"
  }

  provisioner "file" {
    content     = data.template_file.init_backupscript[count.index].rendered
    destination = "s3_backup_script.sh"
  }
}

resource "null_resource" "start_es" {
  depends_on = [
    null_resource.move_es_file
  ]

  count = 2

  connection {
    type        = "ssh"
    user        = "ec2-user"
    private_key = file("~/.ssh/elasticsearch_keypair.pem")
    host        = aws_eip.elasticsearch_eip[count.index].public_ip # the EIP replaces the original public IP
  }

  provisioner "remote-exec" {
    inline = [
      "#!/bin/bash",
      "sudo yum update -y",
      "sudo yum install java-1.8.0 -y",
      "sudo rpm -i https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-7.16.1-x86_64.rpm",
      "sudo systemctl daemon-reload",
      "sudo systemctl enable elasticsearch.service",
      # ElasticSearch 7.11+ sizes the JVM heap automatically from the instance's RAM, and the
      # 7.16 jvm.options has no -Xms1g/-Xmx1g lines to edit. To pin the heap, write -Xms/-Xmx
      # into a *.options file under /etc/elasticsearch/jvm.options.d/ instead.
      "sudo rm /etc/elasticsearch/elasticsearch.yml",
      "sudo cp elasticsearch.yml /etc/elasticsearch/",
      "sudo systemctl start elasticsearch.service",
    ]
  }
}
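If you would rather pin the heap than rely on automatic sizing, one option is to derive it from the instance type’s memory. This sketch uses the AWS provider’s aws_ec2_instance_type data source (its memory_size attribute is in MiB), takes half the RAM, and caps the result at 26 GB, in line with Elastic’s advice to stay below the compressed-object-pointer threshold. local.es_heap_gb and local.es_heap_commands are hypothetical names; the commands would go into the remote-exec inline list before elasticsearch.service is started.

```hcl
# Sketch: compute a fixed heap size from the instance type's RAM.
data "aws_ec2_instance_type" "elastic" {
  instance_type = var.elastic_aws_instance_type
}

locals {
  # Half the RAM in GB, at least 1 GB, capped at 26 GB (memory_size is reported in MiB).
  es_heap_gb = max(1, min(floor(data.aws_ec2_instance_type.elastic.memory_size / 1024 / 2), 26))

  # ElasticSearch reads extra JVM flags from *.options files in jvm.options.d;
  # -Xms and -Xmx should be set to the same value.
  es_heap_commands = [
    "printf -- '-Xms${local.es_heap_gb}g\\n-Xmx${local.es_heap_gb}g\\n' | sudo tee /etc/elasticsearch/jvm.options.d/heap.options",
  ]
}

# Usage inside null_resource "start_es", for example:
#   inline = concat(
#     ["sudo rpm -i https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-7.16.1-x86_64.rpm"],
#     local.es_heap_commands,
#     ["sudo systemctl start elasticsearch.service"],
#   )
```

Sizing from RAM matters because the heap competes with the operating system’s file cache, which ElasticSearch also relies on; disk size has no bearing on it.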

# ElasticSearch data nodes setup

resource "aws_key_pair" "elastic_datanode_ssh_key" {
  key_name   = "elasticsearch_datanode_ssh"
  public_key = file("~/.ssh/elasticsearch_keypair.pub")
}

resource "aws_instance" "elastic_datanodes" {
  count = 1

  ami                         = var.elastic_aws_ami
  instance_type               = var.elastic_aws_instance_type
  subnet_id                   = aws_subnet.elastic_stack_subnet[var.az_name[count.index]].id
  vpc_security_group_ids      = [aws_security_group.elasticsearch_sg.id]
  key_name                    = aws_key_pair.elastic_ssh_key.key_name
  iam_instance_profile        = aws_iam_instance_profile.elastic_ec2_instance_profile.name
  associate_public_ip_address = true

  tags = {
    Name = "elasticsearch dev node-${count.index + 2}"
  }
}

data "template_file" "init_es_datanode" {
  depends_on = [
    aws_instance.elastic_datanodes
  ]

  count    = 1
  template = file("./elasticsearch/configs/elasticsearch_datanode_config.tpl")

  vars = {
    cluster_name = "elasticsearch_cluster"
    node_name    = "datanode_${count.index}"
    node         = aws_instance.elastic_datanodes[count.index].private_ip
    node1        = aws_instance.elastic_nodes[0].private_ip
    node2        = aws_instance.elastic_nodes[1].private_ip
    node3        = aws_instance.elastic_datanodes[0].private_ip
  }
}

data "template_file" "init_backupscript_datanode" {
  depends_on = [
    aws_instance.elastic_datanodes
  ]

  count    = 1
  template = file("./elasticsearch/configs/s3_backup_script.tpl")

  vars = {
    cluster_name = "elasticsearch_cluster"
    node         = aws_instance.elastic_datanodes[count.index].private_ip
    node1        = aws_instance.elastic_nodes[0].private_ip
    node2        = aws_instance.elastic_nodes[1].private_ip
    node3        = aws_instance.elastic_datanodes[0].private_ip
  }
}

# Uncomment the following if you want to attach an Elastic IP to your data nodes.
# If you do, connect to the nodes through the EIP, because it replaces the original public IP.

# resource "aws_eip" "elasticsearch_datanode_eip" {
#   count    = 1
#   instance = aws_instance.elastic_datanodes[count.index].id
#   vpc      = true
#
#   tags = {
#     Name = "elasticsearch-eip-datanode-${count.index + 1}"
#   }
# }

resource "null_resource" "move_es_datanode_file" {
  count = 1

  connection {
    type        = "ssh"
    user        = "ec2-user"
    private_key = file("~/.ssh/elasticsearch_keypair.pem")
    host        = aws_instance.elastic_datanodes[count.index].public_ip
  }

  provisioner "file" {
    content     = data.template_file.init_es_datanode[count.index].rendered
    destination = "elasticsearch_datanode.yml"
  }

  provisioner "file" {
    content     = data.template_file.init_backupscript_datanode[count.index].rendered
    destination = "s3_backup_script.sh"
  }
}

resource "null_resource" "start_es_datanodes" {
  depends_on = [
    null_resource.move_es_datanode_file
  ]

  count = 1

  connection {
    type        = "ssh"
    user        = "ec2-user"
    private_key = file("~/.ssh/elasticsearch_keypair.pem")
    host        = aws_instance.elastic_datanodes[count.index].public_ip
  }

  provisioner "remote-exec" {
    inline = [
      "#!/bin/bash",
      "sudo yum update -y",
      "sudo yum install java-1.8.0 -y",
      "sudo rpm -i https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-7.16.1-x86_64.rpm",
      "sudo systemctl daemon-reload",
      "sudo systemctl enable elasticsearch.service",
      # ElasticSearch 7.11+ sizes the JVM heap automatically; put explicit -Xms/-Xmx
      # overrides in a *.options file under /etc/elasticsearch/jvm.options.d/ if needed.
      "sudo rm /etc/elasticsearch/elasticsearch.yml",
      "sudo cp elasticsearch_datanode.yml /etc/elasticsearch/elasticsearch.yml",
      "sudo systemctl start elasticsearch.service"
    ]
  }
}

Set-up Kibana

resource "aws_security_group" "kibana_sg" {
  vpc_id = aws_vpc.elastic_stack_vpc.id

  ingress {
    description = "ingress rules"
    cidr_blocks = ["0.0.0.0/0"]
    from_port   = 22
    protocol    = "tcp"
    to_port     = 22
  }

  ingress {
    description = "ingress rules"
    cidr_blocks = ["0.0.0.0/0"]
    from_port   = 5601
    protocol    = "tcp"
    to_port     = 5601
  }

  egress {
    description = "egress rules"
    cidr_blocks = ["0.0.0.0/0"]
    from_port   = 0
    protocol    = "-1"
    to_port     = 0
  }

  tags = {
    Name = "kibana_security_group"
  }
}

Kibana:

# Kibana setup

resource "aws_instance" "kibana" {
  depends_on = [
    null_resource.start_es
  ]

  ami                         = "ami-0ed9277fb7eb570c9"
  instance_type               = "t2.small"
  subnet_id                   = aws_subnet.elastic_stack_subnet[var.az_name[0]].id
  vpc_security_group_ids      = [aws_security_group.kibana_sg.id]
  key_name                    = aws_key_pair.elastic_ssh_key.key_name
  associate_public_ip_address = true

  tags = {
    Name = "kibana"
  }
}

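data "template_file" "init_kibana" {
  depends_on = [
    aws_instance.kibana
  ]

  template = file("./configs/kibana_config.tpl")

  vars = {
    # Private IP: elasticsearch_sg admits Kibana by security group, which only matches traffic inside the VPC
    elasticsearch = aws_instance.elastic_nodes[0].private_ip
  }
}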
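kibana_config.tpl isn’t shown either. A minimal kibana.yml for Kibana 7.x needs little more than the listen address and the ElasticSearch URL; this sketch renders one with yamlencode() and points Kibana at the first master node’s private IP. local.kibana_yml is a hypothetical name.

```hcl
# Sketch: a minimal kibana.yml for Kibana 7.x, built in Terraform.
locals {
  kibana_yml = yamlencode({
    "server.port" = 5601
    # Listen on all interfaces so port 5601 (opened in kibana_sg) is reachable
    "server.host"         = "0.0.0.0"
    "elasticsearch.hosts" = ["http://${aws_instance.elastic_nodes[0].private_ip}:9200"]
  })
}

# null_resource "move_kibana_file" could then upload it with:
#   content = local.kibana_yml
```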

resource "null_resource" "move_kibana_file" {
  depends_on = [
    aws_instance.kibana
  ]

  connection {
    type        = "ssh"
    user        = "ec2-user"
    private_key = file("~/.ssh/elasticsearch_keypair.pem") # matches the elastic_ssh_key key pair
    host        = aws_instance.kibana.public_ip
  }

  provisioner "file" {
    content     = data.template_file.init_kibana.rendered
    destination = "kibana.yml"
  }
}

resource "null_resource" "install_kibana" {
  depends_on = [
    null_resource.move_kibana_file # kibana.yml must be uploaded first
  ]

  connection {
    type        = "ssh"
    user        = "ec2-user"
    private_key = file("~/.ssh/elasticsearch_keypair.pem")
    host        = aws_instance.kibana.public_ip
  }

  provisioner "remote-exec" {
    inline = [
      "sudo yum update -y",
      "sudo rpm -i https://artifacts.elastic.co/downloads/kibana/kibana-7.16.1-x86_64.rpm",
      "sudo rm /etc/kibana/kibana.yml",
      "sudo cp kibana.yml /etc/kibana/",
      "sudo systemctl daemon-reload",
      "sudo systemctl enable kibana.service",
      "sudo systemctl start kibana.service"
    ]
  }
}

Set-up LogStash

LogStash receives the logs sent by Filebeat, applies filters to them, and then sends them to ElasticSearch. Its security group therefore needs an inbound rule for port 5044, where the Beats input listens.

resource "aws_security_group" "logstash_sg" {
  vpc_id = aws_vpc.elastic_stack_vpc.id

  ingress {
    description = "ingress rules"
    cidr_blocks = ["0.0.0.0/0"]
    from_port   = 22
    protocol    = "tcp"
    to_port     = 22
  }

  ingress {
    description = "ingress rules"
    cidr_blocks = ["0.0.0.0/0"]
    from_port   = 5044
    protocol    = "tcp"
    to_port     = 5044
  }

  egress {
    description = "egress rules"
    cidr_blocks = ["0.0.0.0/0"]
    from_port   = 0
    protocol    = "-1"
    to_port     = 0
  }

  tags = {
    Name = "logstash_sg"
  }
}

LogStash:

resource "aws_instance" "logstash" {
  depends_on = [
    null_resource.install_kibana
  ]

  ami                         = "ami-04d29b6f966df1537"
  instance_type               = "t2.large"
  subnet_id                   = aws_subnet.elastic_stack_subnet[var.az_name[0]].id
  vpc_security_group_ids      = [aws_security_group.logstash_sg.id]
  key_name                    = aws_key_pair.elastic_ssh_key.key_name
  associate_public_ip_address = true

  tags = {
    Name = "logstash"
  }
}

data "template_file" "init_logstash" {
  depends_on = [
    aws_instance.logstash
  ]

  template = file("./logstash_config.tpl")

  vars = {
    # Private IP: traffic stays inside the VPC, where security-group rules apply
    elasticsearch = aws_instance.elastic_nodes[0].private_ip
  }
}
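Likewise, logstash_config.tpl isn’t shown. A minimal pipeline that accepts Beats connections on port 5044 and writes to ElasticSearch might look like the sketch below, rendered from an HCL heredoc. One detail applies to any .tpl file Terraform renders: Terraform treats %{ as the start of a template directive, so LogStash’s %{...} sprintf references must be written as %%{...}. local.logstash_conf is a hypothetical name.

```hcl
# Sketch: a minimal Beats -> ElasticSearch pipeline for LogStash.
# "%%{" renders as a literal "%{" for LogStash's sprintf date syntax.
locals {
  logstash_conf = <<-EOT
    input {
      beats {
        port => 5044
      }
    }

    output {
      elasticsearch {
        hosts => ["http://${aws_instance.elastic_nodes[0].private_ip}:9200"]
        index => "logstash-%%{+YYYY.MM.dd}"
      }
    }
  EOT
}

# null_resource "move_logstash_file" could then upload it with:
#   content = local.logstash_conf
```

Filters (grok, mutate, and so on) would go in a filter { } section between input and output.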

resource "null_resource" "move_logstash_file" {
  depends_on = [
    aws_instance.logstash
  ]

  connection {
    type        = "ssh"
    user        = "ec2-user"
    private_key = file("~/.ssh/elasticsearch_keypair.pem") # matches the elastic_ssh_key key pair
    host        = aws_instance.logstash.public_ip
  }

  provisioner "file" {
    content     = data.template_file.init_logstash.rendered
    destination = "logstash.conf"
  }
}

resource "null_resource" "install_logstash" {
  depends_on = [
    null_resource.move_logstash_file # logstash.conf must be uploaded first
  ]

  connection {
    type        = "ssh"
    user        = "ec2-user"
    private_key = file("~/.ssh/elasticsearch_keypair.pem")
    host        = aws_instance.logstash.public_ip
  }

  provisioner "remote-exec" {
    inline = [
      "sudo yum update -y && sudo yum install java-1.8.0-openjdk -y",
      "sudo rpm -i https://artifacts.elastic.co/downloads/logstash/logstash-7.16.1-x86_64.rpm",
      "sudo cp logstash.conf /etc/logstash/conf.d/logstash.conf",
      "sudo systemctl daemon-reload",
      "sudo systemctl enable logstash.service",
      "sudo systemctl start logstash.service"
    ]
  }
}

Filebeat Setup

Filebeat reads the log files under /var/log/ and sends them to LogStash on port 5044.

resource "aws_security_group" "filebeat_sg" {
  vpc_id = aws_vpc.elastic_stack_vpc.id

  ingress {
    description = "ingress rules"
    cidr_blocks = ["0.0.0.0/0"]
    from_port   = 22
    protocol    = "tcp"
    to_port     = 22
  }

  egress {
    description = "egress rules"
    cidr_blocks = ["0.0.0.0/0"]
    from_port   = 0
    protocol    = "-1"
    to_port     = 0
  }

  tags = {
    Name = "filebeat_sg"
  }
}

Filebeat:

resource "aws_instance" "filebeat" {
  depends_on = [
    null_resource.install_logstash
  ]

  ami                         = "ami-04d29b6f966df1537"
  instance_type               = "t2.large"
  subnet_id                   = aws_subnet.elastic_stack_subnet[var.az_name[0]].id
  vpc_security_group_ids      = [aws_security_group.filebeat_sg.id]
  key_name                    = aws_key_pair.elastic_ssh_key.key_name
  associate_public_ip_address = true

  tags = {
    Name = "filebeat"
  }
}

resource "null_resource" "move_filebeat_file" {
  depends_on = [
    aws_instance.filebeat
  ]

  connection {
    type        = "ssh"
    user        = "ec2-user"
    private_key = file("~/.ssh/elasticsearch_keypair.pem") # matches the elastic_ssh_key key pair
    host        = aws_instance.filebeat.public_ip
  }

  provisioner "file" {
    source      = "filebeat.yml"
    destination = "filebeat.yml"
  }
}
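Instead of uploading a static filebeat.yml and patching its kibana_ip and logstash_ip placeholders with sed, the file could be rendered in Terraform. This sketch assumes Filebeat’s log input reading /var/log/*.log, which matches the sample log created at the end of this article; local.filebeat_yml is a hypothetical name.

```hcl
# Sketch: render filebeat.yml in Terraform instead of patching placeholders with sed.
locals {
  filebeat_yml = yamlencode({
    "filebeat.inputs" = [{
      type    = "log"
      enabled = true
      paths   = ["/var/log/*.log"]
    }]
    # Ship events to the LogStash Beats input
    "output.logstash" = {
      hosts = ["${aws_instance.logstash.private_ip}:5044"]
    }
    # Used by Filebeat when loading its Kibana dashboards
    "setup.kibana" = {
      host = "${aws_instance.kibana.private_ip}:5601"
    }
  })
}

# The file provisioner would then use content = local.filebeat_yml instead of
# source = "filebeat.yml", and the two sed commands become unnecessary.
```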

resource "null_resource" "install_filebeat" {
  depends_on = [
    null_resource.move_filebeat_file
  ]

  connection {
    type        = "ssh"
    user        = "ec2-user"
    private_key = file("~/.ssh/elasticsearch_keypair.pem") # matches the elastic_ssh_key key pair
    host        = aws_instance.filebeat.public_ip
  }

  provisioner "remote-exec" {
    inline = [
      "sudo yum update -y",
      "sudo rpm -i https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-7.16.1-x86_64.rpm",
      # Fill in the placeholders with the private IPs (all instances share the VPC)
      "sudo sed -i 's@kibana_ip@${aws_instance.kibana.private_ip}@g' filebeat.yml",
      "sudo sed -i 's@logstash_ip@${aws_instance.logstash.private_ip}@g' filebeat.yml",
      "sudo rm /etc/filebeat/filebeat.yml",
      "sudo cp filebeat.yml /etc/filebeat/",
      "sudo systemctl enable filebeat.service",
      "sudo systemctl start filebeat.service"
    ]
  }
}

Now, you have successfully set up Elastic Stack on AWS EC2 using Terraform.

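Visit <public_ip_of_any_es_node>:9200/_cluster/health to see the ElasticSearch cluster status.

Visit <public_ip_of_any_es_node>:9200/_cat/nodes?v to list the ElasticSearch nodes.

Visit <public_ip_of_kibana_instance>:5601 to open Kibana.

Note that elasticsearch_sg does not open port 9200 to the internet, so the first two URLs only respond from your browser after you add an inbound rule for your own IP address. Alternatively, SSH into an ElasticSearch node and query the same paths with curl against the node’s private IP.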
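To avoid looking the addresses up in the AWS console, Terraform outputs can print these URLs at the end of terraform apply. A sketch; the output names are hypothetical, and the ElasticSearch URLs use the master nodes’ Elastic IPs, since those replace the instances’ original public IPs.

```hcl
# Sketch: print the check URLs after `terraform apply` (also available via `terraform output`).
output "elasticsearch_health_urls" {
  value = [for eip in aws_eip.elasticsearch_eip : "http://${eip.public_ip}:9200/_cluster/health?pretty"]
}

output "elasticsearch_nodes_urls" {
  value = [for eip in aws_eip.elasticsearch_eip : "http://${eip.public_ip}:9200/_cat/nodes?v"]
}

output "kibana_url" {
  value = "http://${aws_instance.kibana.public_ip}:5601"
}
```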

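After opening Kibana, go to Stack Management > Index Patterns and create an index pattern for the LogStash indices (for example, logstash-* if your LogStash output uses the default index name).

To check a component’s status and recent log lines, SSH into its instance and run: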

$ sudo systemctl status <component-name> -l

Then SSH into the Filebeat EC2 instance and add a sample .log file inside /var/log/. You can then search for its entries in the Kibana dashboard.

For example, run the following to write a sample log line, then look for the record in Kibana:

echo "echo 'This is a sample log for test' >> /var/log/test-log.log" | sudo bash

That’s it.

Conclusion

Congratulations, you have learned how to set up the Elastic Stack on AWS EC2 using Terraform.

Thank You!


Written by

Sagar Budhathoki


I am a Python/DevOps Engineer. Skilled with hands-on experience in Python frameworks, system programming, cloud computing, automating, and optimizing mission-critical deployments in AWS, leveraging configuration management, CI/CD, and DevOps processes. From Nepal.