Kubernetes Pod Anti-Affinity: A Beginner's Guide

Maxat Akbanov

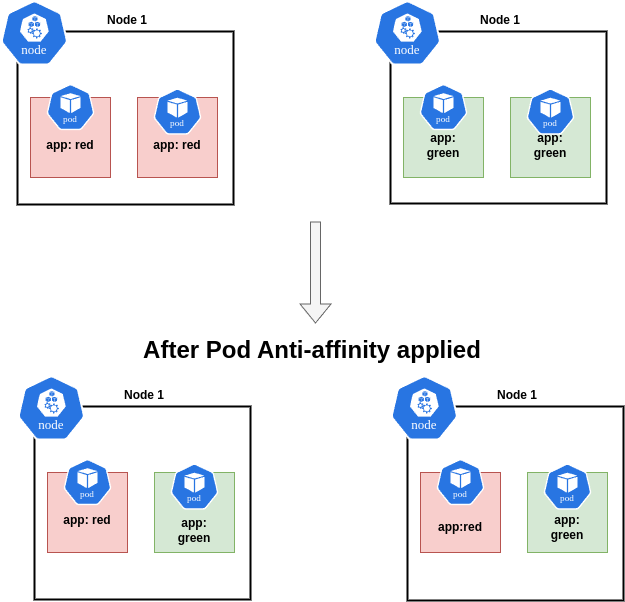

Pod anti-affinity is a Kubernetes feature that allows you to specify that certain pods should not be scheduled on the same nodes as other specified pods. This is useful for ensuring high availability and resilience by spreading out workloads across different nodes.

Let's imagine a scenario where you run multiple applications in a microservice architecture, and your cluster consists of several nodes hosting the application pods. The scheduler places pods on healthy nodes with adequate resources, but by default it does not guarantee that the pods of one application land on different nodes. All the pods from a particular deployment could therefore end up on the same node, and the application would fail entirely if that node went down. Pod anti-affinity lets you spread those pods across the nodes in the cluster.

Difference between Node Affinity and Pod Affinity/Anti-Affinity

Node Affinity and Inter-Pod Affinity/Anti-Affinity are Kubernetes features that help control the placement of pods within a cluster. However, they serve different purposes and operate based on different criteria.

Node Affinity

Node Affinity is used to control which nodes a pod can be scheduled on based on node labels. It allows you to specify rules that must be satisfied for a pod to be scheduled onto a node.

Key Points:

Based on Node Labels: Node Affinity uses the labels assigned to nodes to determine where pods can be scheduled.

Types of Affinity:

requiredDuringSchedulingIgnoredDuringExecution: The scheduler can't schedule the Pod unless the rule is met. This is a hard requirement.

preferredDuringSchedulingIgnoredDuringExecution: The scheduler tries to find a node that meets the rule. If a matching node is not available, the scheduler still schedules the Pod.

IgnoredDuringExecution means that if the node labels change after Kubernetes schedules the Pod, the Pod continues to run.

Example:

apiVersion: v1
kind: Pod
metadata:
  name: with-node-affinity
spec:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: topology.kubernetes.io/zone
            operator: In
            values:
            - antarctica-east1
            - antarctica-west1
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 1
        preference:
          matchExpressions:
          - key: another-node-label-key
            operator: In
            values:
            - another-node-label-value
  containers:
  - name: with-node-affinity
    image: registry.k8s.io/pause:2.0

Inter-Pod Affinity/Anti-Affinity

Inter-Pod Affinity and Pod Anti-Affinity control the placement of pods relative to other pods based on their labels. These features allow you to specify rules for placing pods in relation to other pods.

Key Points:

Based on Pod Labels: Pod Affinity/Anti-Affinity uses labels assigned to pods to determine placement.

Types of Affinity:

Inter-Pod Affinity: Schedules pods in the same topology domain (for example, the same node or zone) as other specified pods.

Pod Anti-Affinity: Keeps pods out of topology domains (for example, nodes or zones) that already run other specified pods.

Topology Key: Specifies the failure domain within which the affinity or anti-affinity rule applies (e.g., kubernetes.io/hostname for nodes, topology.kubernetes.io/zone for zones in the cloud).

❗ Pod anti-affinity requires nodes to be consistently labeled; in other words, every node in the cluster must have an appropriate label matching topologyKey. If some or all nodes are missing the specified topologyKey label, it can lead to unintended behavior.
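One way to check this labeling requirement is to ask the API server for nodes that lack the label. The sketch below wraps that query in a small shell function. The function name `nodes_missing_label` is hypothetical, and the sketch assumes kubectl is already pointed at the cluster you want to inspect.

```shell
# Hypothetical helper (not part of kubectl): print the nodes that do NOT
# carry the label key passed as the first argument.
# The '!key' form is the label-selector syntax for "this label key is absent".
# The '!' is kept in single quotes so an interactive shell does not treat it
# as history expansion.
nodes_missing_label() {
  kubectl get nodes -l '!'"$1" -o name
}
```

Calling `nodes_missing_label topology.kubernetes.io/zone` should print nothing when every node carries a zone label. Any node names it does print are the ones to label before relying on that topologyKey.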

Examples:

Inter-Pod Affinity:

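apiVersion: v1
kind: Pod
metadata:
  name: example-pod
spec:
  affinity:
    podAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchExpressions:
          - key: app
            operator: In
            values:
            - my-app
        topologyKey: "kubernetes.io/hostname"
  # Container added so the manifest is complete; the image is a placeholder.
  containers:
  - name: example-container
    image: nginx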

In this example, the pod will be scheduled on the same node as other pods with the label app=my-app.

Pod Anti-Affinity:

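apiVersion: v1
kind: Pod
metadata:
  name: example-pod
spec:
  affinity:
    podAntiAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchExpressions:
          - key: app
            operator: In
            values:
            - my-app
        topologyKey: "kubernetes.io/hostname"
  # Container added so the manifest is complete; the image is a placeholder.
  containers:
  - name: example-container
    image: nginx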

In this example, the pod will only be scheduled on a node that is not already running a pod with the label app=my-app.
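The anti-affinity rule can also be written in the soft form, preferredDuringSchedulingIgnoredDuringExecution, so the scheduler tries to keep the pods apart but still places the pod if no other node is available. The following is a minimal sketch that writes such a manifest to a file. The file name, pod name, and weight value are illustrative assumptions.

```shell
# Write a Pod manifest that uses the "preferred" (soft) form of anti-affinity.
# File name and pod name are assumptions made for this example.
cat > preferred-anti-affinity.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: example-pod-soft
  labels:
    app: my-app
spec:
  affinity:
    podAntiAffinity:
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 100   # allowed range is 1-100; higher means a stronger preference
        podAffinityTerm:
          labelSelector:
            matchExpressions:
            - key: app
              operator: In
              values:
              - my-app
          topologyKey: "kubernetes.io/hostname"
  containers:
  - name: my-app-container
    image: nginx
EOF
```

Note that the soft form nests the selector and topologyKey under podAffinityTerm and adds a weight, unlike the required form. The file can then be applied with `kubectl apply -f preferred-anti-affinity.yaml`.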

Summary of Differences

Node Affinity:

Focuses on the relationship between pods and nodes.

Uses node labels.

Controls which nodes a pod can be scheduled on.

Pod Affinity/Anti-Affinity:

Focuses on the relationship between pods.

Uses pod labels.

Controls placement of pods relative to other pods.

Can specify inter-affinity (co-location) or anti-affinity (separation).

Both Node Affinity and Pod Affinity/Anti-Affinity are powerful tools for managing pod placement and ensuring high availability, performance, and compliance with deployment policies in a Kubernetes cluster.

Use Cases for Pod Anti-Affinity

High Availability:

Ensure that replicas of a critical application are not placed on the same node to prevent a single point of failure.

Example: Deploying replicas of a web server across different nodes to ensure availability if one node goes down.

Resource Contention:

Prevent pods from competing for the same resources by spreading them across different nodes.

Example: Avoiding multiple CPU-intensive workloads on the same node to ensure they do not affect each other's performance.

Failure Isolation:

Reduce the risk of correlated failures by ensuring that similar pods are not scheduled on the same node.

Example: Distributing pods of a stateful application across different nodes to prevent losing data in case of a node failure.

Compliance and Security:

Enforce organizational policies that require certain types of workloads to be isolated from each other.

Example: Ensuring that test and production environments do not share the same nodes.

Example Scenario

Imagine you have a web application with multiple replicas (pods) and a database. You want to ensure that:

No two replicas of the web application are on the same node.

The database pod is not on the same node as any web application pod.

You would use pod anti-affinity rules to achieve this separation, thereby enhancing the resilience and performance of your application.
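A rough sketch of what those two rules could look like is shown below, written to a single file. All names here (the file `web-and-db.yaml`, the labels app=web and app=db, and the `redis` image standing in for the database) are assumptions made for illustration.

```shell
# Write both manifests to one file. Names, labels, and images are placeholders.
cat > web-and-db.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  replicas: 3
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      affinity:
        podAntiAffinity:
          # Rule 1: no two web replicas on the same node.
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchLabels:      # shorthand for a single-value "In" expression
                app: web
            topologyKey: "kubernetes.io/hostname"
      containers:
      - name: web
        image: nginx
---
apiVersion: v1
kind: Pod
metadata:
  name: db
  labels:
    app: db
spec:
  affinity:
    podAntiAffinity:
      # Rule 2: keep the database off any node that runs a web pod.
      requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchLabels:
            app: web
        topologyKey: "kubernetes.io/hostname"
  containers:
  - name: db
    image: redis
EOF
```

With three web replicas and one database pod, this layout needs at least four schedulable nodes. On a smaller cluster, some pods would remain Pending because both rules are hard requirements.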

Workshop Exercise: Pod Anti-Affinity with Minikube

This exercise demonstrates how to set up and observe pod anti-affinity rules in a Kubernetes cluster using Minikube. By following these steps, you can see how Kubernetes schedules pods across different nodes to ensure high availability and fault tolerance.

Prerequisites:

Minikube installed on your local machine.

kubectl installed and configured to interact with Minikube.

Step 1: Start Minikube

Start a Minikube cluster with multiple nodes to see the anti-affinity rules in action.

minikube start --nodes 6

Verify that the nodes are up and running:

kubectl get nodes

Step 2: Create a Namespace

Create a new namespace for the exercise:

kubectl create namespace anti-affinity-demo

Step 3: Define a Deployment with Anti-Affinity

Create a deployment configuration file with pod anti-affinity rules. Save the following YAML content to a file named deployment.yaml.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app
  namespace: anti-affinity-demo
spec:
  replicas: 3
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - my-app
            topologyKey: "kubernetes.io/hostname"
      containers:
      - name: my-app-container
        image: nginx
        ports:
        - containerPort: 80

Step 4: Apply the Deployment

Apply the deployment to your Minikube cluster:

kubectl apply -f deployment.yaml

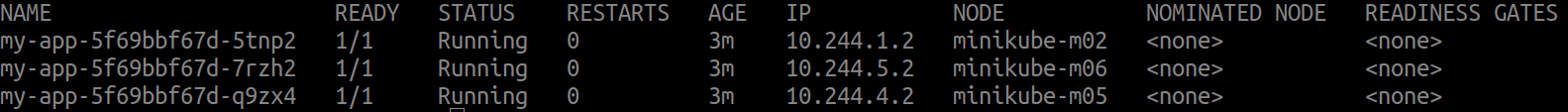

Step 5: Verify Pod Distribution

Check the status of the pods and verify their node placement:

kubectl get pods -o wide -n anti-affinity-demo

You should see that the pods are distributed across different nodes, adhering to the anti-affinity rule specified.
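If the wide output is hard to scan, the per-node count can be summarized instead. The helper below is a small sketch. The function name `pods_per_node` is hypothetical, and it relies only on kubectl's custom-columns output plus `sort` and `uniq`.

```shell
# Hypothetical helper: print how many pods of a namespace run on each node.
# $1 is the namespace. Pods that are not yet scheduled have no node name
# and are grouped on their own line.
pods_per_node() {
  kubectl get pods -n "$1" --no-headers \
    -o custom-columns=NODE:.spec.nodeName | sort | uniq -c
}
```

Running `pods_per_node anti-affinity-demo` after Step 4 should list three different nodes with a count of 1 each if the anti-affinity rule was honored.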

Step 6: Inspect Node and Pod Details

Inspect the pods to verify the labels and affinity rules:

# Describe a pod

kubectl describe pod <pod-name> -n anti-affinity-demo

# Get the yaml output

kubectl get pod <pod-name> -n anti-affinity-demo -o yaml

Replace <pod-name> with the name of one of your pods.

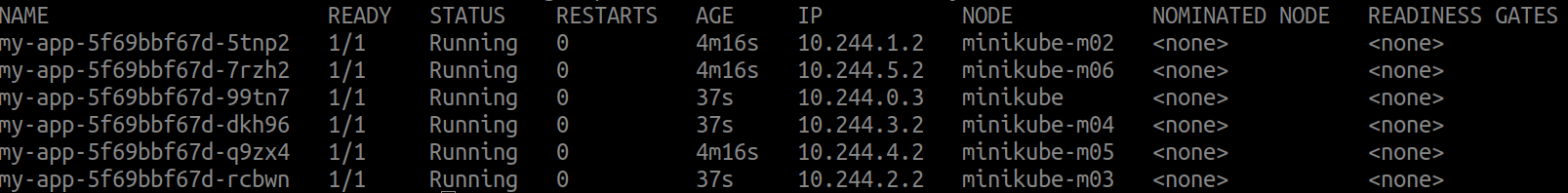

Step 7: Observe Anti-Affinity in Action

To observe the anti-affinity rules in action, try scaling the deployment up or down and see how Kubernetes schedules the pods.

# Scale up the deployment

kubectl scale deployment my-app --replicas=6 -n anti-affinity-demo

# Verify pod distribution again

kubectl get pods -o wide -n anti-affinity-demo

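Because the rule is a hard requirement, each new pod must land on a node that does not already run a my-app pod. With the six nodes from Step 1, the six replicas should end up one per node; if you request more replicas than there are eligible nodes, the extra pods stay in the Pending state.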
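To watch the hard rule refuse a placement, one option is to ask for more replicas than the cluster has nodes and then list the pods the scheduler could not place. The helper name `pending_pods` below is hypothetical. The sketch assumes the six-node cluster from Step 1, with all six nodes accepting workloads.

```shell
# Hypothetical helper: list pods in a namespace that are still Pending,
# i.e. pods the scheduler has not been able to place on any node.
# $1 is the namespace.
pending_pods() {
  kubectl get pods -n "$1" --field-selector=status.phase=Pending
}
```

For example, after `kubectl scale deployment my-app --replicas=7 -n anti-affinity-demo`, running `pending_pods anti-affinity-demo` should show one pod. `kubectl describe pod` on that pod should report a scheduling failure in its events, although the exact wording depends on the Kubernetes version.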

Cleanup

After completing the exercise, clean up the resources:

# Delete the deployment

kubectl delete deployment my-app -n anti-affinity-demo

# Delete the namespace

kubectl delete namespace anti-affinity-demo

# Stop Minikube

minikube stop
