Day 12/40 Days of K8s: Exploring Kubernetes Daemonset, Job and Cronjob

Gopi Vivek Manne

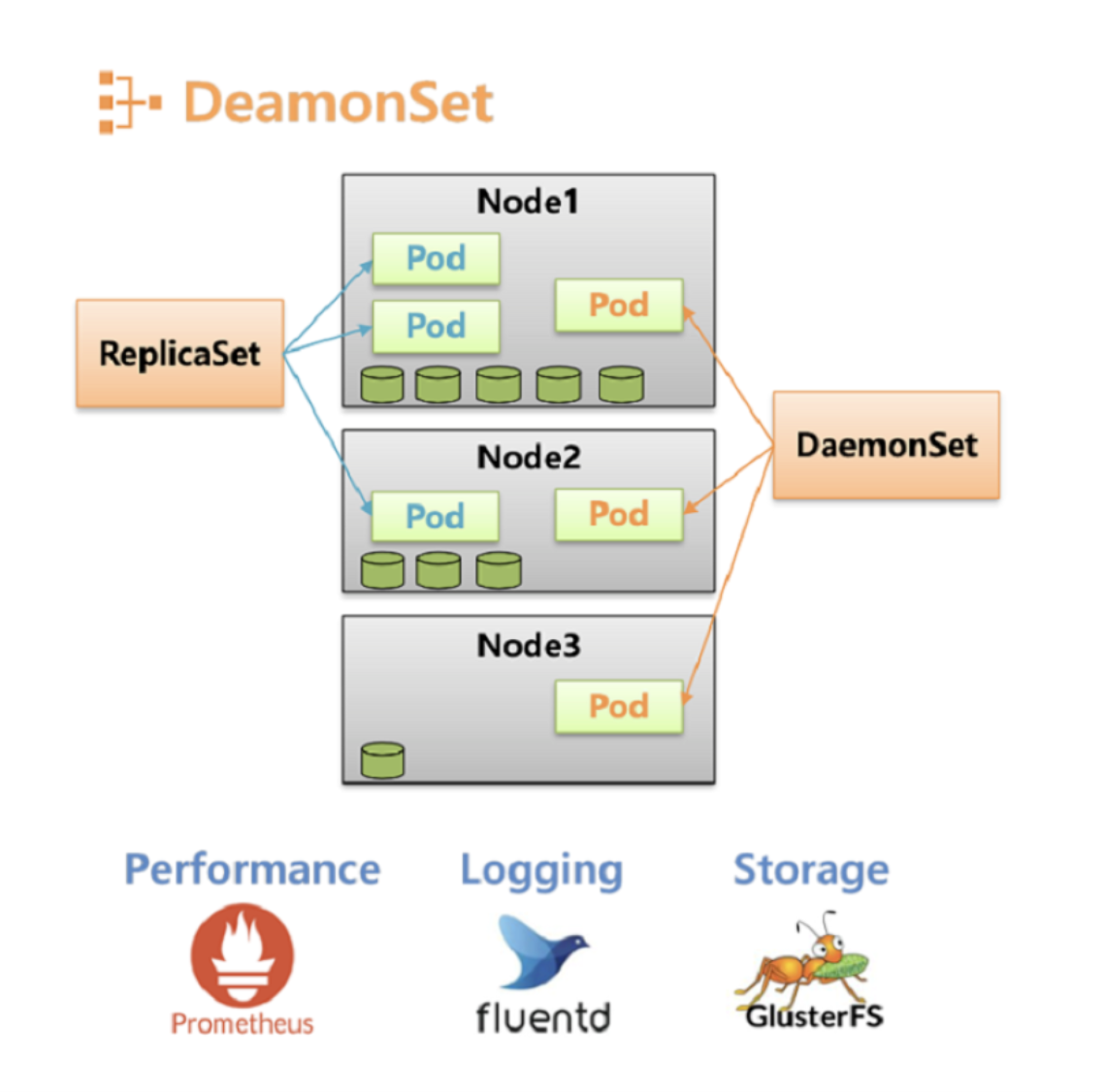

🚀 Deployments vs DaemonSets

Deployments

- In a Deployment, we specify the number of replicas, such as 3, and Kubernetes creates and maintains that many pods across the available nodes. The Deployment controller (through the ReplicaSet it manages) keeps the specified number of replicas running, while the scheduler decides which node each pod lands on based on node availability, resource constraints, taints, tolerations, and other factors.
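For comparison with the DaemonSet manifest later in this post, here is a minimal sketch of a Deployment that asks for 3 replicas. The name `nginx-deploy` is a hypothetical one chosen for illustration; the image and labels simply mirror the nginx example used in this post.

```yaml
# Illustrative Deployment; the name "nginx-deploy" is hypothetical.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deploy
  labels:
    env: demo
spec:
  replicas: 3              # desired pod count; the scheduler picks the nodes
  selector:
    matchLabels:
      env: demo
  template:
    metadata:
      labels:
        env: demo
    spec:
      containers:
        - name: nginx
          image: nginx
          ports:
            - containerPort: 80
```

The key difference is the `replicas` field: a Deployment needs it, whereas a DaemonSet has no such field because its pod count follows the number of eligible nodes.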

DaemonSets

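A DaemonSet runs one copy of a pod on every eligible node, ensuring consistent deployment across the cluster. When a node joins the cluster, the DaemonSet controller adds a pod to it; when a node is removed, the DaemonSet pod on that node is cleaned up, while the DaemonSet itself remains.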

🌟 Use Cases for DaemonSets

- Monitoring agents
- Logging agents
- Networking components (e.g., kube-proxy, CNI plugins)

💡 Note: DaemonSets ensure continuous operation on each node, ideal for node-specific tasks.

DaemonSet YAML:

    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: nginx-ds
      labels:
        env: demo
    spec:
      selector:
        matchLabels:
          env: demo
      template:
        metadata:
          labels:
            env: demo
          name: nginx
        spec:
          containers:
            - name: nginx
              image: nginx
              ports:
                - containerPort: 80

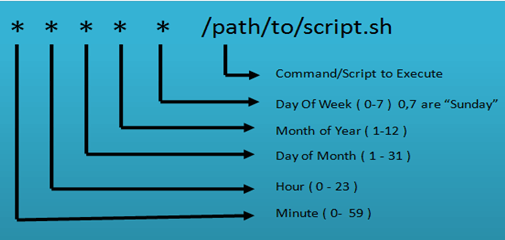

🕒 CronJobs

- A CronJob in Kubernetes creates Jobs on a repeating schedule. It allows you to execute tasks at specified times, dates, or intervals, similar to the cron utility in Unix/Linux systems.
- It uses cron syntax with five fields: `* * * * *` (minute, hour, day of month, month, day of week).

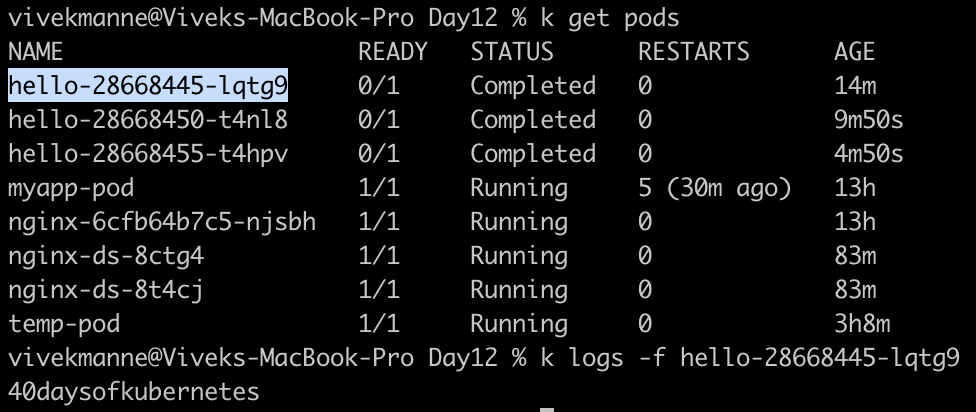

TASK: Create a CronJob object in Kubernetes that prints "40daysofkubernetes" every 5 minutes, using the busybox image.

CronJob YAML:

    # Runs every 5 minutes: spins up a busybox container that prints "40daysofkubernetes"
    apiVersion: batch/v1
    kind: CronJob
    metadata:
      name: hello
    spec:
      schedule: "*/5 * * * *"
      jobTemplate:
        spec:
          template:
            spec:
              containers:
                - name: hello
                  image: busybox:1.28
                  imagePullPolicy: IfNotPresent
                  command: ["sh", "-c", "echo '40daysofkubernetes'"]
              restartPolicy: OnFailure

Each time the schedule fires, the CronJob creates a new Job, which in turn creates a pod. Once the container finishes successfully, the pod is marked as Completed.

Examples:

- Every minute on Saturdays (the minute and hour fields are both wildcards): `* * * * 6`
- Every Saturday at 11:00 PM: `0 23 * * 6`
- Every 5 minutes: `*/5 * * * *`
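To show how one of these expressions plugs into a manifest, here is a minimal sketch of a CronJob that runs every Saturday at 11:00 PM. The name `weekly-hello` and the echoed message are hypothetical, chosen only for illustration; everything else follows the same shape as the CronJob in the task above.

```yaml
# Illustrative CronJob; the name "weekly-hello" and the message are hypothetical.
apiVersion: batch/v1
kind: CronJob
metadata:
  name: weekly-hello
spec:
  # minute=0, hour=23, any day of month, any month, day of week=6 (Saturday)
  schedule: "0 23 * * 6"
  jobTemplate:
    spec:
      template:
        spec:
          containers:
            - name: hello
              image: busybox:1.28
              command: ["sh", "-c", "echo 'weekly run'"]
          restartPolicy: OnFailure
```

Only the `schedule` string changes between these examples; the `jobTemplate` describes the Job that gets created at each scheduled time.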

🌟 Jobs

A Job runs a task once and ensures it completes successfully, retrying if the pod fails. Typical examples are a step in an installation script, a database backup, or any other task that needs to run once and finish.

Job YAML:

    apiVersion: batch/v1
    kind: Job
    metadata:
      name: hello-world
    spec:
      template:
        spec:
          containers:
            - name: hello
              image: busybox
              command: ["echo", "Hello, World!"]
          restartPolicy: Never
      backoffLimit: 4

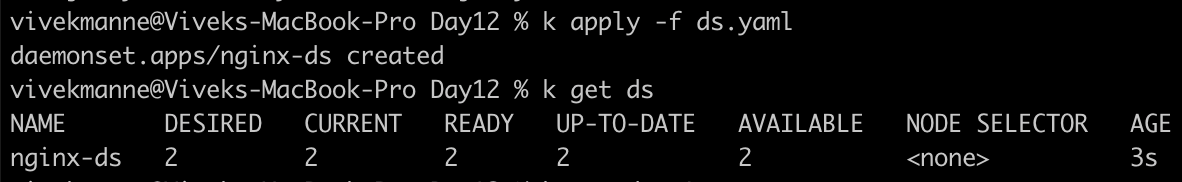

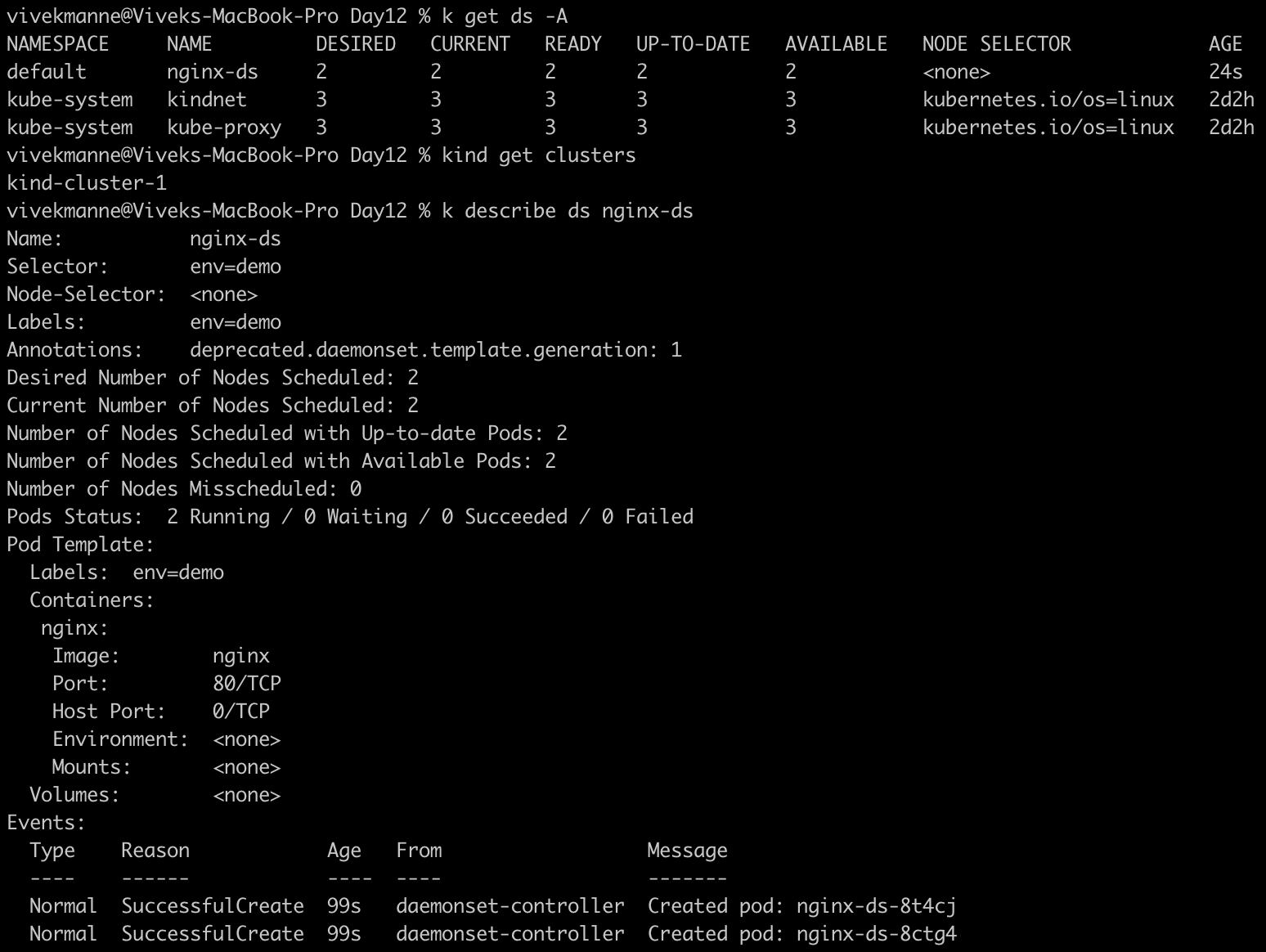

🔍 Verifying Workloads

Check the kube-proxy DaemonSet:

    kubectl get ds kube-proxy -n kube-system

NOTE: A DaemonSet without a matching toleration runs on each worker node but not on the master (control plane) node, because of the taint on it.

💁 Key Points:

- kube-proxy: A data plane component that runs in the kube-system namespace. It runs on all nodes, including the master, since its DaemonSet is typically configured to tolerate node taints, and it handles Service-to-pod traffic routing across the cluster.
- nginx-ds DaemonSet: Runs only on worker nodes, not on master nodes, because it has no toleration for the master taint. This keeps application workloads separate from control plane components.
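If a DaemonSet should also run on the master node, one option is to add a toleration to its pod template. The sketch below is an assumption-heavy illustration, not part of the original demo: the name `nginx-ds-all-nodes` and the `env: demo-all` label are hypothetical, and the taint key `node-role.kubernetes.io/control-plane` is what recent kubeadm-based clusters commonly use (older clusters may use a different key, so check with `kubectl describe node`).

```yaml
# Sketch only: the name and label are hypothetical, and the taint key is an
# assumption about how the control plane node is tainted in your cluster.
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: nginx-ds-all-nodes
  labels:
    env: demo-all
spec:
  selector:
    matchLabels:
      env: demo-all
  template:
    metadata:
      labels:
        env: demo-all
    spec:
      tolerations:
        # Allows scheduling onto nodes carrying this NoSchedule taint
        - key: node-role.kubernetes.io/control-plane
          operator: Exists
          effect: NoSchedule
      containers:
        - name: nginx
          image: nginx
          ports:
            - containerPort: 80
```

A separate label is used here so the selector does not overlap with the nginx-ds DaemonSet from earlier.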

Commands to View DaemonSets:

To get the DaemonSets from all namespaces:

    kubectl get ds -A

To view the DaemonSets specifically from the kube-system namespace:

    kubectl get ds -n kube-system

By understanding Kubernetes resources such as DaemonSets, CronJobs, and Jobs, we can effectively manage various types of tasks and ensure our cluster operates smoothly across different scenarios.

#Kubernetes #DaemonSet #Cronjobs #Jobs #40DaysofKubernetes #CKASeries

