Difference between Bagging and Boosting

Meemansha Priyadarshini

In this article, you will learn about ensemble learning, the difference between two popular ensemble techniques, bagging and boosting, and why ensemble learning is important for machine learning tasks.

Ensemble learning combines the predictions of multiple models (often called base learners) to solve regression and classification problems. The idea is that a group of learners, combined well, usually produces better results than any single one of them.

1. Bagging

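In bagging (short for bootstrap aggregating), multiple models are trained independently of each other, so they can be arranged and trained in parallel. Each model is trained on a different bootstrap sample of the training data, that is, a random sample of the same size drawn with replacement. The models are usually of the same type, for example decision trees.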
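
A minimal Python sketch of bootstrap sampling, using only the standard library. The toy dataset of ten row indices and the helper name `bootstrap_sample` are hypothetical, chosen just for illustration. Because each sample has the same size as the dataset and is drawn with replacement, some rows appear more than once while others (the out-of-bag rows) are left out.

```python
import random

def bootstrap_sample(data, rng):
    """Hypothetical helper: draw len(data) items uniformly at random, with replacement."""
    return [rng.choice(data) for _ in range(len(data))]

rng = random.Random(42)     # fixed seed so the samples are reproducible
dataset = list(range(10))   # toy dataset: 10 row indices (assumed for illustration)

# One bootstrap sample per model (m1, m2, m3)
samples = [bootstrap_sample(dataset, rng) for _ in range(3)]
for i, s in enumerate(samples, start=1):
    # Rows that were never drawn are "out-of-bag" for this model
    print(f"m{i}:", sorted(s), "out-of-bag:", sorted(set(dataset) - set(s)))
```

Each model would then be trained only on its own sample, which is what makes the models differ from one another.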

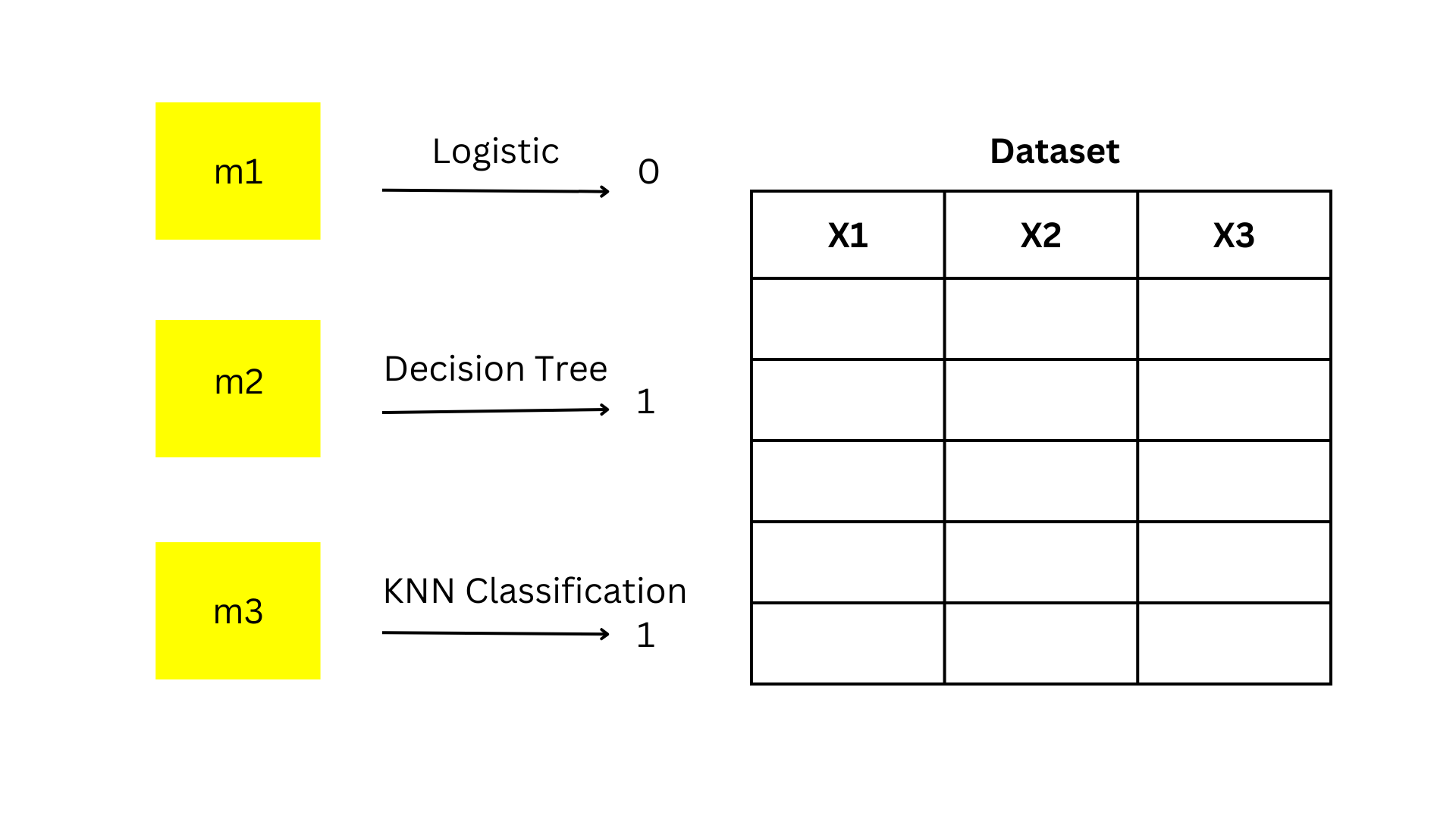

Let's understand this with the help of a classification problem. The diagram above shows three models, m1, m2 and m3, each trained on its own bootstrap sample of the dataset. To classify a new data point, every model makes its own prediction, and the predictions of all the models are then combined into one final output.

Suppose each model outputs a class label, 0 or 1. We aggregate these outputs by majority voting: the label predicted by most of the models becomes the final prediction. In the example above, the majority output is 1.

Thus, the majority vote is 1. Training models on bootstrap samples and aggregating their outputs in this way is called bootstrap aggregating, or bagging.
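
The voting step can be sketched in a few lines of Python. The outputs 1, 0 and 1 for m1, m2 and m3 are assumed values chosen to match the majority described above (the figure's exact outputs may differ), and `majority_vote` is a hypothetical helper.

```python
from collections import Counter

def majority_vote(predictions):
    """Return the class label predicted by the largest number of models."""
    # most_common(1) returns [(label, count)] for the most frequent label
    return Counter(predictions).most_common(1)[0][0]

# Assumed outputs of m1, m2 and m3 for one data point
outputs = {"m1": 1, "m2": 0, "m3": 1}
print(majority_vote(list(outputs.values())))  # → 1
```

With two classes, using an odd number of models guarantees that the vote can never end in a tie.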

In the case of regression, the models output numbers instead of labels, so we take the mean of all the models' predictions.
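
For regression, the aggregation step is just an average. The three predictions below are made-up numbers for illustration.

```python
from statistics import mean

# Made-up predictions of three regression models for one data point
predictions = [2.5, 3.0, 3.5]

# Bagging for regression: the final prediction is the mean of the models' outputs
final_prediction = mean(predictions)
print(final_prediction)  # → 3.0
```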

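Random Forest is a well-known example of bagging. It trains many decision trees on bootstrap samples and, in addition, lets each tree consider only a random subset of the features at every split.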
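
To tie the bagging steps together, here is a small from-scratch sketch in pure Python. It is not Random Forest: to keep it short, each base learner is a one-feature decision stump rather than a full decision tree, and there is no random feature selection. The toy data and all function names (`fit_stump`, `fit_bagging`, `predict_bagging`) are assumptions made for this example.

```python
import random
from collections import Counter

def fit_stump(xs, ys):
    """Fit a one-feature decision stump: predict `left` if x <= t, else `right`."""
    best = None
    for t in sorted(set(xs)):
        for left, right in ((0, 1), (1, 0)):
            errors = sum((left if x <= t else right) != y for x, y in zip(xs, ys))
            if best is None or errors < best[0]:
                best = (errors, t, left, right)
    _, t, left, right = best
    return lambda x: left if x <= t else right

def fit_bagging(xs, ys, n_models, rng):
    """Train n_models stumps, each on its own bootstrap sample of the rows."""
    n = len(xs)
    models = []
    for _ in range(n_models):
        idx = [rng.randrange(n) for _ in range(n)]  # sample row indices with replacement
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return models

def predict_bagging(models, x):
    """Aggregate the stumps' predictions by majority vote."""
    votes = [m(x) for m in models]
    return Counter(votes).most_common(1)[0][0]

# Toy data (assumed): class 0 for x < 10, class 1 for x >= 10
xs = list(range(20))
ys = [0] * 10 + [1] * 10
models = fit_bagging(xs, ys, n_models=15, rng=random.Random(0))
print(predict_bagging(models, 2), predict_bagging(models, 17))
```

Because the stumps see different bootstrap samples, they place their thresholds slightly differently, and the vote smooths out those differences.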

2. Boosting

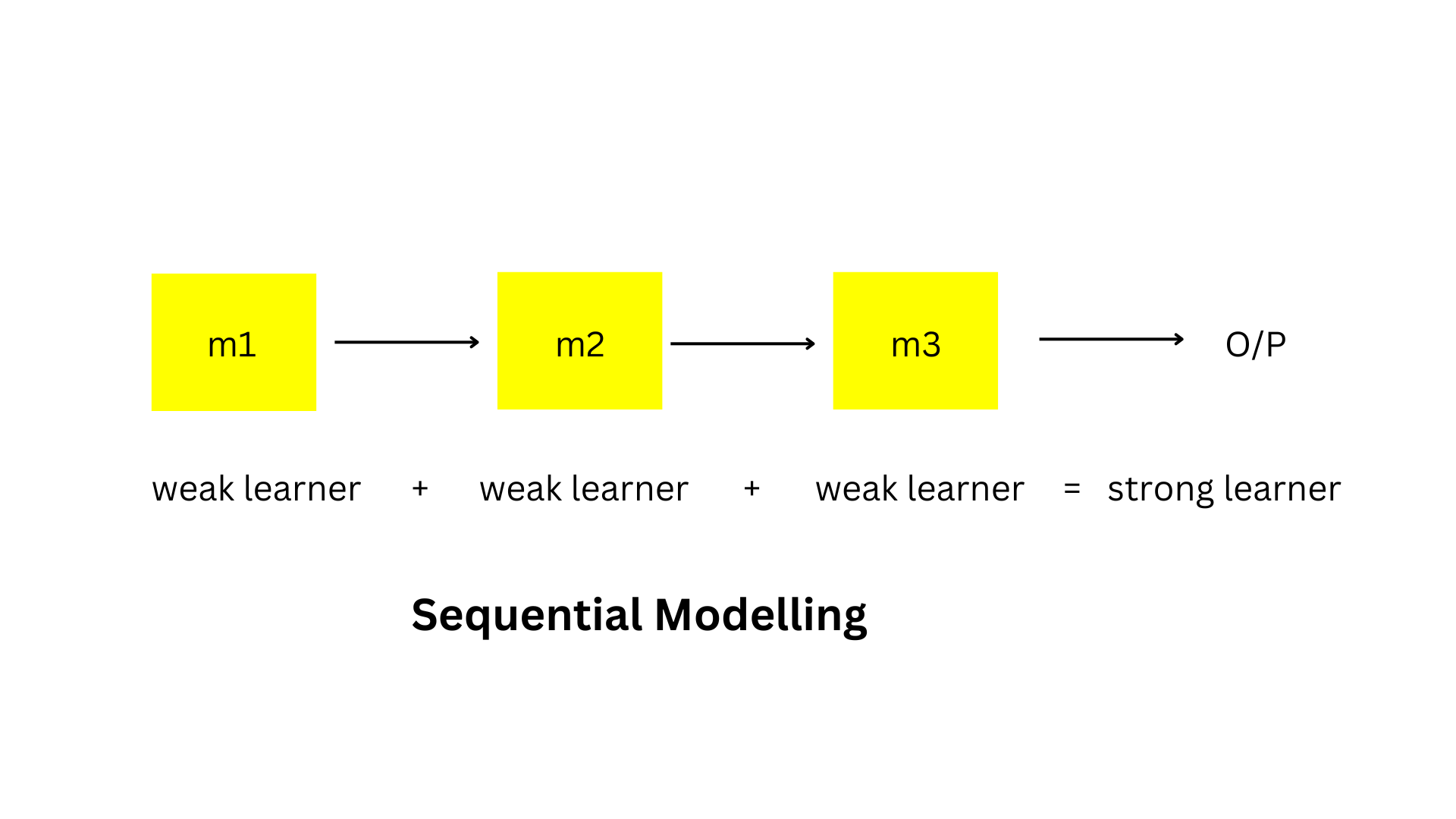

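In boosting, the models are arranged sequentially: each new model is trained to correct the errors made by the models before it. Each model is a weak learner (only slightly better than random guessing), and all of them are combined, usually through a weighted vote or weighted sum, to form a strong learner.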
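
As a sketch of the sequential idea, below is a compact version of AdaBoost, one of the boosting algorithms named in this article, written in pure Python. It assumes labels of -1/+1 and one-feature decision stumps as weak learners; the function names and the six-point toy dataset are made up for illustration. After each round, misclassified points receive larger weights so the next stump concentrates on them, and each stump's vote is weighted by `alpha`, which grows as its weighted error shrinks. No single stump can classify this dataset perfectly, but in this run three rounds of boosting classify all six points correctly.

```python
import math

def fit_weighted_stump(xs, ys, w):
    """Pick the stump (threshold t, sign s) with the lowest weighted error.
    The stump predicts s if x <= t, else -s; labels are -1/+1."""
    best = None
    for t in sorted(set(xs)):
        for s in (1, -1):
            err = sum(wi for x, y, wi in zip(xs, ys, w) if (s if x <= t else -s) != y)
            if best is None or err < best[0]:
                best = (err, t, s)
    return best

def adaboost(xs, ys, n_rounds):
    n = len(xs)
    w = [1.0 / n] * n              # start with uniform sample weights
    ensemble = []                  # list of (alpha, t, s)
    for _ in range(n_rounds):
        err, t, s = fit_weighted_stump(xs, ys, w)
        err = max(err, 1e-10)      # avoid division by zero for a perfect stump
        alpha = 0.5 * math.log((1 - err) / err)  # weight of this stump's vote
        ensemble.append((alpha, t, s))
        # Raise the weights of misclassified points, lower the others, renormalise
        w = [wi * math.exp(-alpha * y * (s if x <= t else -s))
             for x, y, wi in zip(xs, ys, w)]
        total = sum(w)
        w = [wi / total for wi in w]
    return ensemble

def predict(ensemble, x):
    """Weighted vote of all stumps."""
    score = sum(alpha * (s if x <= t else -s) for alpha, t, s in ensemble)
    return 1 if score >= 0 else -1

xs = [0, 1, 2, 3, 4, 5]
ys = [1, 1, -1, -1, 1, 1]
model = adaboost(xs, ys, n_rounds=3)
print([predict(model, x) for x in xs])  # → [1, 1, -1, -1, 1, 1]
```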

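Gradient Boosting, AdaBoost and XGBoost are some examples of boosting. AdaBoost increases the weights of misclassified training examples before training the next model, while gradient boosting (and XGBoost, an optimized implementation of it) fits each new model to the remaining errors of the current ensemble, more precisely to the negative gradient of the loss. Because later learners concentrate on the examples that earlier ones got wrong, boosting algorithms can perform well on imbalanced datasets.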
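
Gradient boosting can be sketched the same way. For the squared-error loss ½(y − F(x))², the negative gradient with respect to the prediction F(x) is simply the residual y − F(x), so the sketch below fits a regression stump to the current residuals in every round and adds it, scaled by a learning rate, to the running prediction. It is a minimal illustration under those assumptions (pure Python, one feature, squared error, made-up data, hypothetical function names), not how XGBoost or any particular library implements it.

```python
def fit_regression_stump(xs, residuals):
    """Find the split x <= t minimising squared error; each side predicts its mean."""
    best = None
    for t in sorted(set(xs))[:-1]:   # skip the largest value so the right side is never empty
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        sse = sum((r - lm) ** 2 for r in left) + sum((r - rm) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, t, lm, rm)
    _, t, lm, rm = best
    return lambda x: lm if x <= t else rm

def gradient_boost(xs, ys, n_rounds, learning_rate):
    """Requires at least two distinct x values."""
    base = sum(ys) / len(ys)         # round 0: predict the mean of the targets
    stumps = []

    def predict(x):
        return base + learning_rate * sum(s(x) for s in stumps)

    for _ in range(n_rounds):
        # Negative gradient of the squared error = current residuals
        residuals = [y - predict(x) for x, y in zip(xs, ys)]
        stumps.append(fit_regression_stump(xs, residuals))
    return predict

xs = list(range(10))
ys = [x * x for x in xs]             # made-up regression targets

def mse(model):
    return sum((y - model(x)) ** 2 for x, y in zip(xs, ys)) / len(xs)

for rounds in (0, 5, 50):
    print(rounds, round(mse(gradient_boost(xs, ys, rounds, 0.1)), 2))
```

The small learning rate (shrinkage) means each stump corrects only part of the remaining error, which is one common way to keep boosting from overfitting.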

Importance of Ensemble learning

Improves accuracy: By combining multiple models, ensemble learning often achieves higher accuracy and robustness than a single model. It can take advantage of the strengths of each model, leading to better predictive performance.

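Reduces overfitting: An overfit model has low bias and high variance. Bagging mainly reduces variance, because averaging many models trained on different samples smooths out their individual fluctuations, which reduces overfitting. Boosting mainly reduces bias by combining weak learners; it can itself overfit if it runs for too many rounds, so it is usually paired with a small learning rate or early stopping.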
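
The variance-reduction effect of averaging can be made concrete. Assume, as a simplification, that the predictions of the B models at a point x each have variance σ² and that every pair of them has correlation ρ. The variance of their average is then:

```latex
\operatorname{Var}\!\left(\frac{1}{B}\sum_{b=1}^{B}\hat f_b(x)\right)
  = \frac{1}{B^2}\left(B\sigma^2 + B(B-1)\rho\sigma^2\right)
  = \frac{\sigma^2}{B} + \frac{B-1}{B}\,\rho\sigma^2
  = \rho\sigma^2 + \frac{1-\rho}{B}\,\sigma^2
```

With independent models (ρ = 0) the variance falls to σ²/B, but the ρσ² term does not shrink no matter how many models are added. This is why Random Forest tries to decorrelate its trees by considering only a random subset of features at each split.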

Improves flexibility: Ensemble methods can be used for many machine learning tasks, such as classification, regression, and even finding patterns in unlabeled data, i.e. unsupervised learning.

Increases stability: Ensemble learning makes predictions more reliable. If one model makes a mistake, the other models in the group can outvote or average out that mistake, as long as their errors are not strongly correlated. The result is more consistent and dependable outcomes.
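
Under a strong simplifying assumption, the "other models can correct it" effect can be quantified. If n binary classifiers make independent errors and each is correct with probability p, the majority vote is correct whenever more than half of them are, which is a binomial tail probability. The sketch below uses a hypothetical helper, `majority_accuracy`; with p = 0.7, three voters are correct with probability 0.784 and five with about 0.837.

```python
from math import comb

def majority_accuracy(p, n):
    """Probability that a majority of n independent classifiers, each correct
    with probability p, is correct (binary labels, n odd)."""
    k_min = n // 2 + 1  # smallest number of correct votes that wins the vote
    return sum(comb(n, k) * p**k * (1 - p)**(n - k) for k in range(k_min, n + 1))

for n in (1, 3, 5, 11):
    print(n, round(majority_accuracy(0.7, n), 3))
```

Real models trained on the same data tend to make correlated errors, so actual gains are smaller than this idealized calculation. Note also that if p is below 0.5, majority voting makes the ensemble worse than a single model.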

In many machine learning competitions, the winning solutions often use ensemble methods. This suggests that combining multiple models is a very effective way to achieve top results.

Written by

Meemansha Priyadarshini

I am a passionate technical writer with a strong foundation in programming, machine learning, and deep learning. My background in innovation engineering and my expertise in advanced AI technologies make me adept at explaining complex technical concepts in a clear and engaging manner. I have a keen eye for detail, strong research skills, and a commitment to producing high-quality content.