Content Engine for PDF Document Comparison using Retrieval Augmented Generation Techniques with Large Language Models locally with Streamlit

Debanjan Chakraborty

Debanjan Chakraborty

Introduction

In today's data-driven world, accessing relevant information quickly and accurately from vast collections of documents is crucial. Whether for research, enterprise documentation, legal searches, or knowledge management, the ability to efficiently query and retrieve information from PDFs is a game-changer. The Content-Engine project addresses this need by combining advanced natural language processing (NLP) techniques and retrieval-augmented generation (RAG) methods with a user-friendly Streamlit interface. This blog post will guide you through the setup, key features, and technical underpinnings of the Content-Engine, making it easy to understand and use for your own document management needs.

Overview

The Content-Engine is a sophisticated, modular system designed to enable efficient querying and retrieval of information from PDF documents. It leverages state-of-the-art NLP models and embedding techniques to provide a seamless interface for interacting with document collections. The project is scalable and flexible, making it suitable for a wide range of applications, from academic research to enterprise document management.

Prerequisites

Before getting started, ensure you have the following installed on your system:

Ollama: Download and install from this link.

Python: Ensure Python is installed and updated to the latest version.

Virtualenv: Install using

pip install virtualenvif not already installed.

Additionally, you will need to install the required NLP models:

ollama pull nomic-embed-text

ollama pull mistral

Getting Started

Follow these steps to set up the Content-Engine:

Clone the Repository:

git clone https://github.com/hydracsnova13/Content-Engine.gitAdd PDF Files: Place your PDF files in the

datafolder of the cloned directory.Set Up Virtual Environment:

virtualenv <environment_name> source <environment_name>/bin/activate # On Windows, use <environment_name>\Scripts\activateInstall Dependencies: Navigate to the directory with the code files and install the required packages:

pip install -r requirements.txt

Running the Project

Populate Chroma Database: Run the script to add document clusters to the Chroma database:

python populate_database.pyRun the Streamlit App: Start the Streamlit app to begin querying and retrieving document information:

streamlit run app.py

Technical Explanations

Requirements

The requirements.txt file lists all the necessary packages needed for the project. These include:

pypdflangchainchromadbpytestboto3streamlitaiohttpnltktiktokentransformerstorch

These packages provide the foundational tools for PDF processing, embedding generation, database management, and web interface creation.

Embedding Function

The get_embedding_function.py script defines the embedding function used in the project.

Key snippet:

from langchain_community.embeddings.ollama import OllamaEmbeddings

def get_embedding_function():

embeddings = OllamaEmbeddings(model="nomic-embed-text")

return embeddings

Concepts and Theory:

Embeddings: Transform text into numerical vectors that capture semantic meaning. This project uses the "nomic-embed-text" model for generating high-quality embeddings.

OllamaEmbeddings: A class for creating embedding functions with the specified model, ensuring accurate and meaningful document representations.

Query Processing

The query_data.py script handles querying and retrieval of relevant document contexts using embeddings.

Key snippets:

- Preprocess Text:

def preprocess_text(text):

import re

from nltk.corpus import stopwords

from string import punctuation

text = re.sub(f"[{re.escape(punctuation)}]", "", text)

words = text.split()

stop_words = set(stopwords.words('english'))

filtered_words = [word for word in words if word.lower() not in stop_words]

condensed_text = " ".join(filtered_words)

return condensed_text

Concepts and Theory:

- Text Preprocessing: Involves cleaning and normalizing text to improve the quality of subsequent processing steps. This includes removing punctuation and stopwords to focus on the most significant words.

- Summarize Text using GPT-2:

def summarize_text_gpt2(text):

from transformers import GPT2LMHeadModel, GPT2Tokenizer

tokenizer = GPT2Tokenizer.from_pretrained("gpt2")

model = GPT2LMHeadModel.from_pretrained("gpt2")

inputs = tokenizer.encode("Summarize: " + text, return_tensors="pt", max_length=1024, truncation=True)

summary_ids = model.generate(inputs, max_length=300, min_length=50, length_penalty=2.0, num_beams=4, early_stopping=True)

summary = tokenizer.decode(summary_ids[0], skip_special_tokens=True)

return summary

Concepts and Theory:

- Summarization: The process of condensing a document into a shorter version while preserving its main ideas. This project uses GPT-2, a large language model, for generating summaries.

- Query and Retrieve Relevant Documents:

async def query_rag(query_text: str, top_k: int = 5):

embedding_function = get_embedding_function()

from langchain_community.vectorstores import Chroma

db = Chroma(persist_directory="chroma", embedding_function=embedding_function)

results = db.similarity_search_with_score(query_text, k=top_k)

context_parts = [doc.page_content[:200] for doc, score in results]

context_text = "\n\n---\n\n".join(context_parts).replace("\n", " ").replace("\t", " ")

condensed_context = preprocess_text(context_text)

summarized_context = summarize_text_gpt2(condensed_context)

return summarized_context

Concepts and Theory:

- Retrieval-Augmented Generation (RAG): Combines information retrieval and text generation to produce responses that are both contextually relevant and informative. It retrieves relevant documents and generates a coherent summary.

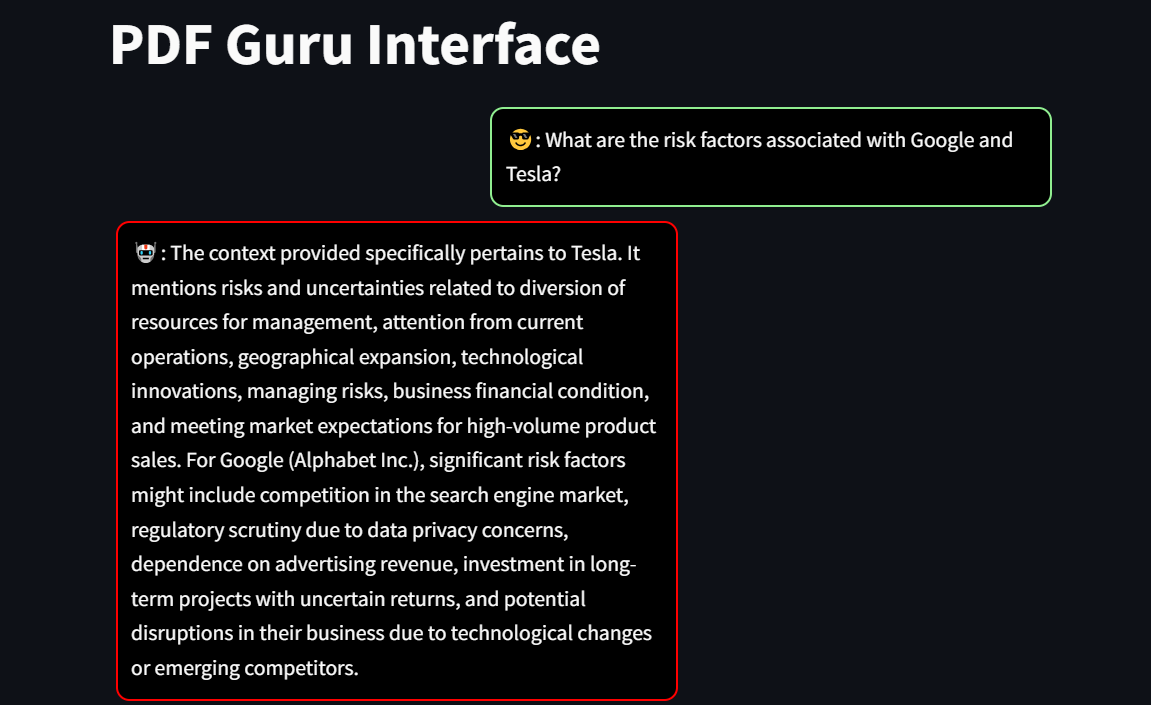

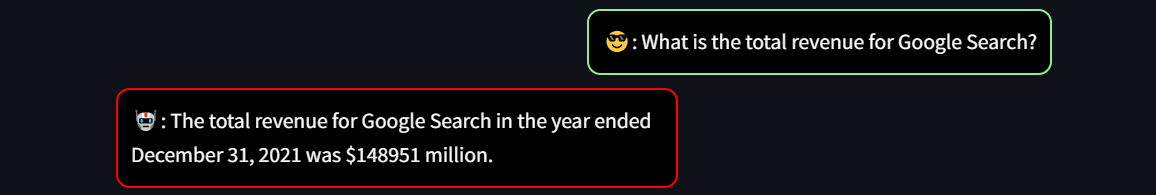

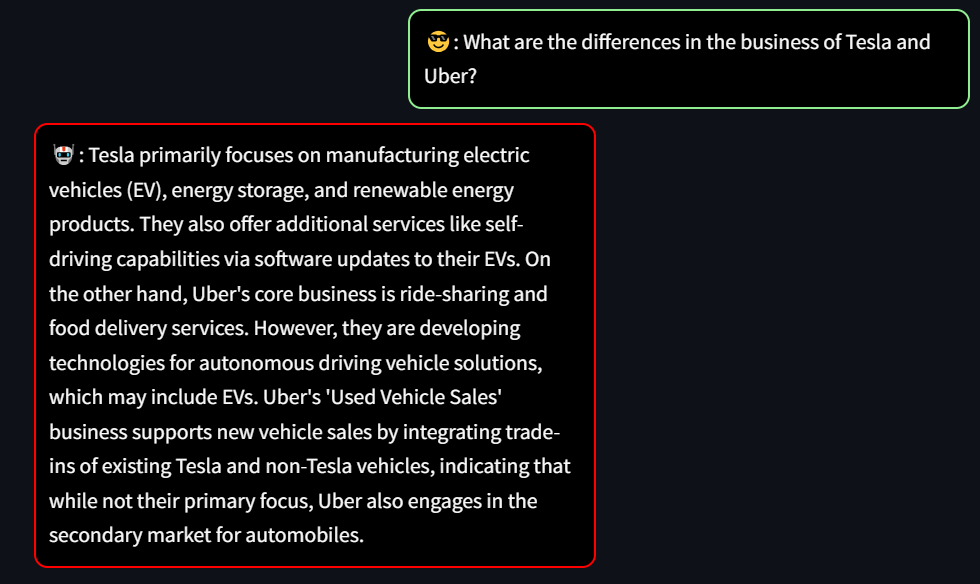

Streamlit Interface

The app.py script contains the Streamlit application for querying PDFs.

Key snippets:

- Invoke Model Asynchronously:

async def invoke_model_async(prompt):

import aiohttp

import json

url = "http://localhost:11500/api/generate"

headers = {"Content-Type": "application/json"}

data = {"model": "mistral", "prompt": prompt}

json_data = json.dumps(data)

response_text = ""

async with aiohttp.ClientSession(connector=aiohttp.TCPConnector(ssl=False)) as session:

async with session.post(url, headers=headers, data=json_data) as response:

async for line in response.content:

line_data = line.decode('utf-8')

import re

match = re.search(r'"response":"(.*?)"', line_data)

if match:

chunk_text = match.group(1).replace("\\n", "\n").replace("\\t", "\t")

response_text += chunk_text

return response_text

Concepts and Theory:

- Asynchronous Processing: Improves responsiveness and performance by allowing the program to handle other tasks while waiting for a response from the model.

- Main Function:

def main():

import streamlit as st

st.title("PDF Guru Interface")

if 'chat_history' not in st.session_state:

st.session_state.chat_history = []

if 'processing' not in st.session_state:

st.session_state.processing = False

query_text = st.text_area("Message:", key=f"message_input_{len(st.session_state.chat_history)}", disabled=st.session_state.processing, height=80)

if st.button("Send", disabled=st.session_state.processing):

query_text = query_text.strip()

if query_text:

st.session_state.processing = True

context_text = get_condensed_context(query_text)

prompt = f"""

Answer the question based only on the following context in an optimal manner. Ensure the response does not exceed 500 characters:

{context_text}

---

Answer the question based on the above context: {query_text}

"""

async def run_query():

response = await invoke_model_async(prompt)

st.session_state.chat_history.append(f"😎: {query_text}")

st.session_state.chat_history.append(f"🤖: {response}")

st.session_state.processing = False

st.rerun()

try:

import asyncio

asyncio.get_running_loop()

asyncio.create_task(run_query())

except RuntimeError:

asyncio.run(run_query())

st.rerun()

if __name__ == "__main__":

main()

Concepts and Theory:

- Interactive User Interface: Uses Streamlit to create a simple, interactive web application for querying documents. The interface includes a chat-like history to display user queries and model responses.

Database Population

The populate_database.py script populates the Chroma database with document chunks from PDFs.

Key snippets:

- Load Documents:

def load_documents():

from langchain_community.document_loaders import PyPDFDirectoryLoader

document_loader = PyPDFDirectoryLoader("data")

documents = document_loader.load()

return documents

Concepts and Theory:

- Document Loading: Uses

PyPDFDirectoryLoaderto read and load PDF documents from a specified directory.

- Split Documents:

def split_documents(documents):

from langchain.text_splitter import RecursiveCharacterTextSplitter

text_splitter = RecursiveCharacterTextSplitter(

chunk_size=800,

chunk_overlap=80,

length_function=len,

is_separator_regex=False,

)

chunks = text_splitter.split_documents(documents)

return chunks

Concepts and Theory:

- Text Splitting: Breaks down large documents into smaller, manageable chunks, which improves the efficiency of processing and querying.

- Add to Chroma:

def add_to_chroma(chunks):

from langchain_community.vectorstores import Chroma

db = Chroma(persist_directory="chroma", embedding_function=get_embedding_function())

chunks_with_ids = calculate_chunk_ids(chunks)

existing_items = db.get(include=[])

existing_ids = set(existing_items["ids"])

new_chunks = [chunk for chunk in chunks_with_ids if chunk.metadata["id"] not in existing_ids]

if new_chunks:

from concurrent.futures import ThreadPoolExecutor, as_completed

with ThreadPoolExecutor(max_workers=4) as executor:

futures = [executor.submit(add_batch_to_chroma, db, new_chunks[i:i + 50], i//50 + 1) for i in range(0, len(new_chunks), 50)]

for future in as_completed(futures):

future.result()

Concepts and Theory:

- Database Management: Manages document embeddings in the Chroma database, ensuring efficient storage and retrieval. Uses multithreading for parallel processing to enhance performance.

Conclusion

The Content-Engine project represents a significant advancement in the field of document management and retrieval. By combining powerful NLP models with an intuitive user interface, it offers an unparalleled solution for accessing and interacting with document collections. Whether for academic, corporate, or legal purposes, the Content-Engine provides a reliable and efficient platform to meet the demanding needs of information retrieval in today's data-driven world.

Subscribe to my newsletter

Read articles from Debanjan Chakraborty directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by