How to run Gemini Flash with MindsDB

Lê Đức Minh

Introduction

Machine learning has emerged as an indispensable tool for businesses and organizations in the age of data-driven decision-making. MindsDB, an open-source platform, provides a straightforward approach to creating machine learning models, allowing users to harness the potential of AI without requiring substantial technical experience.

In this post, we give a brief introduction to MindsDB and then get hands-on by integrating Gemini, a robust LLM developed by Google, with the MindsDB environment.

Let’s dive in!

MindsDB

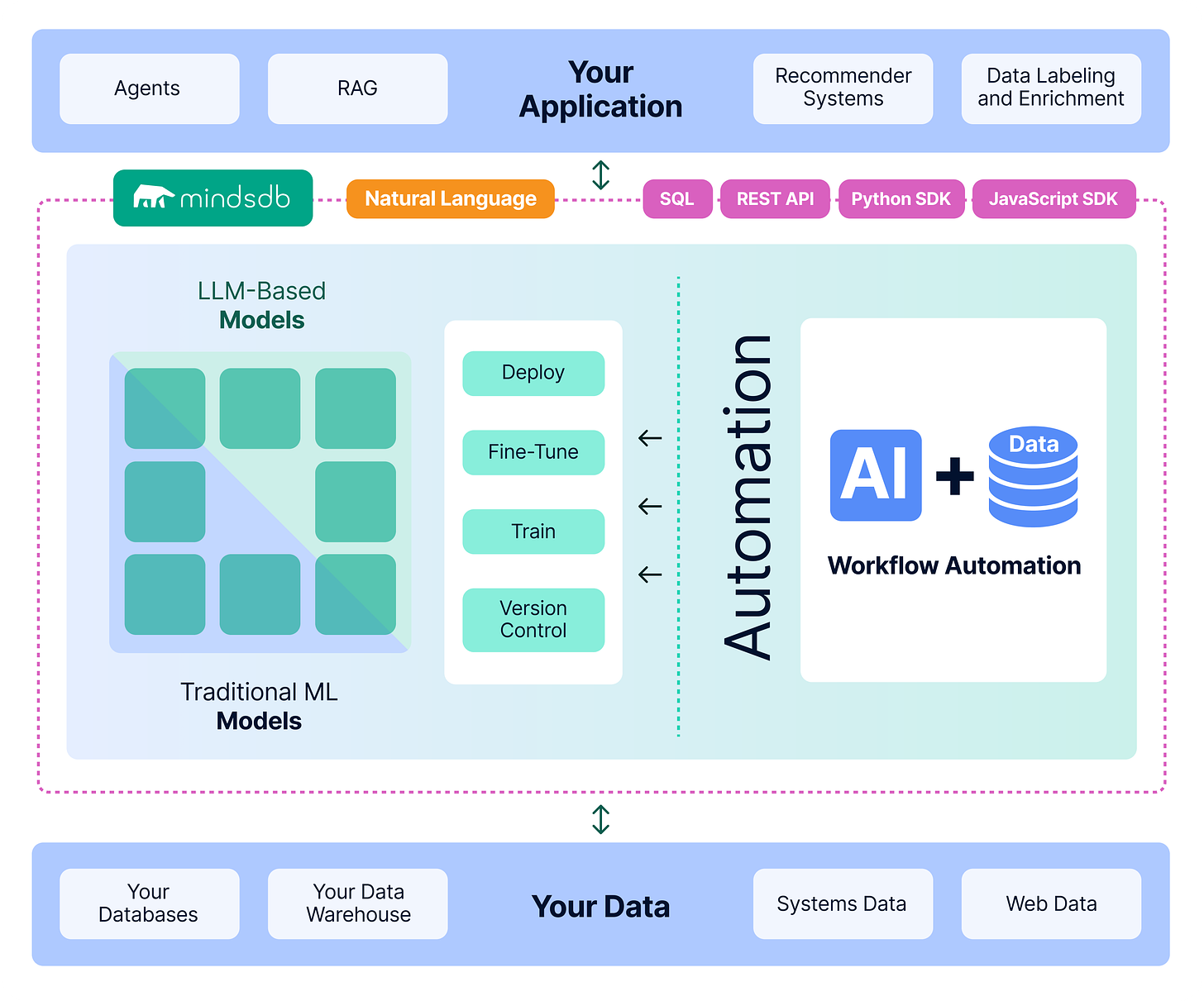

MindsDB connects with a wide range of data sources, including databases, vector stores, and applications, as well as major AI/ML frameworks, including AutoML tools and LLMs. By connecting data and AI, it allows for the intuitive deployment and automation of AI systems.

Personally, I believe this strategy is really ambitious. With approximately 200 data source and AI/ML framework integrations, MindsDB lets developers access enterprise data quickly and securely while tailoring AI to their specific requirements. It is similar to other well-known frameworks such as LangChain and LlamaIndex, except that it concentrates on data sources. In addition, its cloud service provides free access to a variety of LLMs, such as ChatGPT and Mixtral. As a result, it covers most of what a user would need when working with LLMs.

Code Implementation

Install MindsDB and the MindsDB SDK for Python

MindsDB provides Docker images tailored to different use cases, each containing specific integrations and dependencies. Here’s how to set up MindsDB using Docker:

1. Install MindsDB

Run the following command to create a Docker container with MindsDB:

docker run --name mindsdb_container -p 47334:47334 -p 47335:47335 mindsdb/mindsdb

Explanation:

- docker run: Spins up a Docker container.
- --name mindsdb_container: Assigns a name to the container.
- -p 47334:47334: Publishes port 47334 to access the MindsDB GUI and HTTP API.
- -p 47335:47335: Publishes port 47335 to access the MindsDB MySQL API.
- mindsdb/mindsdb: Specifies the Docker image provided by MindsDB.

2. Managing the Container

Once the container is created, you can use the following commands:

- docker stop mindsdb_container: Stops the container.
- docker start mindsdb_container: Restarts a stopped container.

Optionally, you can run the container in detached mode using the -d flag:

docker run --name mindsdb_container -d -p 47334:47334 -p 47335:47335 mindsdb/mindsdb

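3. Persisting Data

To persist models and configurations on the host machine, create a data directory and mount it to the container. Docker does not allow two containers to share a name, so if a container called mindsdb_container already exists from an earlier step, remove it first with docker rm mindsdb_container: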

mkdir mdb_data

docker run --name mindsdb_container -p 47334:47334 -p 47335:47335 -v $(pwd)/mdb_data:/root/mdb_storage mindsdb/mindsdb

Explanation:

- -v $(pwd)/mdb_data:/root/mdb_storage: Maps the host machine's mdb_data folder to /root/mdb_storage inside the container.

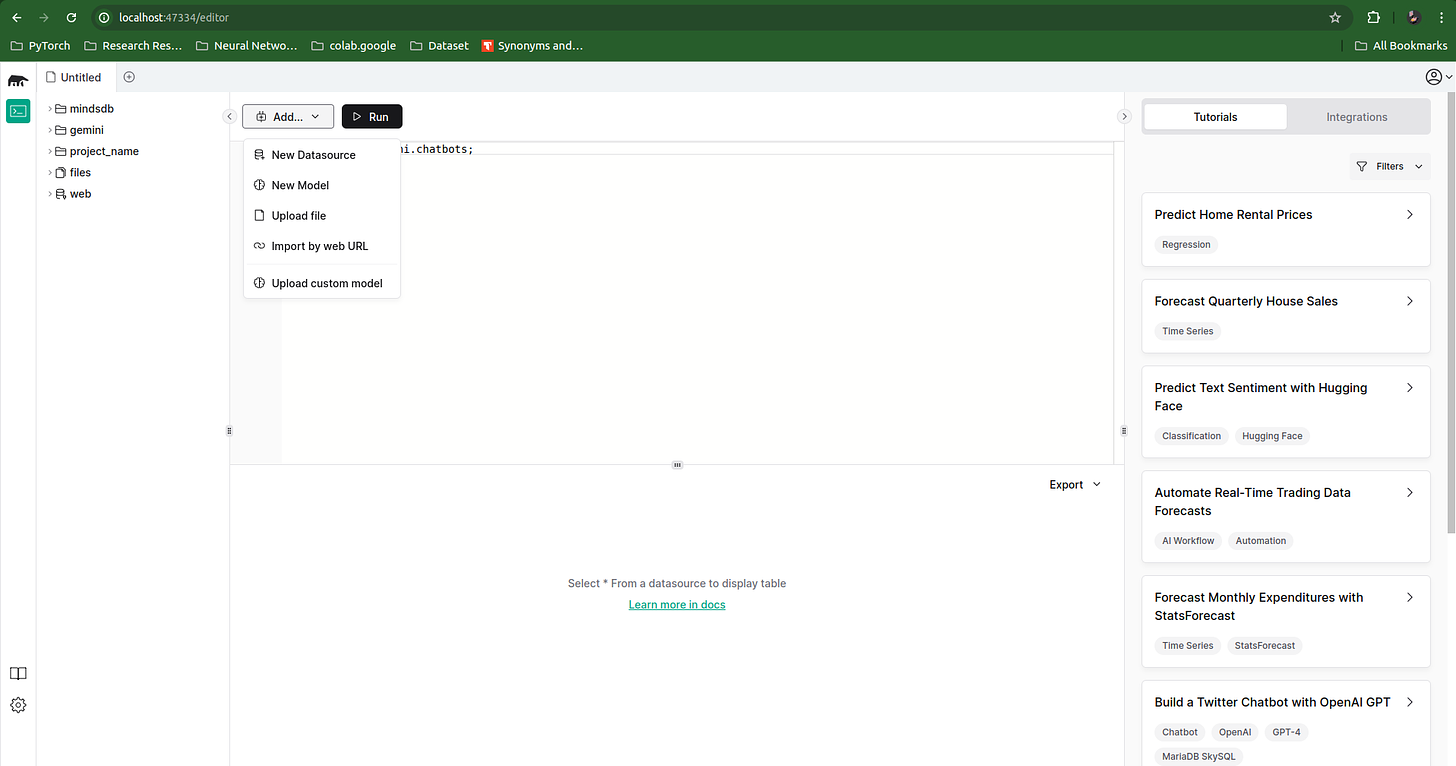

Once the MindsDB container is running, we can open its UI at http://localhost:47334/editor
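If the editor page does not load, it can help to confirm that the two published ports are actually accepting connections. The following is a minimal sketch using only the Python standard library; the helper names port_is_open and report_mindsdb_ports are hypothetical, and the check only proves that something is listening on the port, not that MindsDB has finished starting up.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


# Ports published by the docker run command used in this post.
MINDSDB_PORTS = {"HTTP API / GUI": 47334, "MySQL API": 47335}


def report_mindsdb_ports(host: str = "127.0.0.1") -> dict:
    """Check each published port and return a name -> reachable mapping."""
    return {name: port_is_open(host, port) for name, port in MINDSDB_PORTS.items()}


for name, ok in report_mindsdb_ports().items():
    print(f"{name} (port {MINDSDB_PORTS[name]}): {'reachable' if ok else 'not reachable'}")
```

If a port shows as not reachable, docker ps is a quick way to check whether the container is running and which ports it publishes.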

To install the Python SDK, along with python-dotenv (used below to load the API key from a .env file), run this command in your terminal:

pip install mindsdb_sdk python-dotenv

Bridging Gemini and MindsDB

To keep things simple, we will concentrate on how to combine these parts rather than on all the amazing features that MindsDB has to offer (those will be covered in a different post). Bridging Gemini and MindsDB takes three steps:

1. Create an ml_engine.
2. Create an ml_agent that uses the ml_engine as its core.
3. Ready to use!

First, we import the necessary libraries and connect to the MindsDB server.

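import os

from dotenv import load_dotenv
import mindsdb_sdk

# Load GEMINI_API_KEY from a local .env file into the environment
load_dotenv()

# Connect to the local MindsDB HTTP API
server = mindsdb_sdk.connect('http://127.0.0.1:47334')

# Create the project, or fetch it if it already exists
try:
    project = server.create_project('gemini')
except Exception:
    project = server.get_project('gemini')

print(server.list_projects())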
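The try/except above treats every failure of create_project as "the project already exists", which can hide unrelated problems such as a connection error. One alternative is to look the project up by name first. This is a sketch only: get_or_create_project is a hypothetical helper, and it assumes the objects returned by server.list_projects() expose a name attribute.

```python
def get_or_create_project(server, name: str):
    """Return the project called `name`, creating it only if it is missing.

    `server` is expected to be the object returned by mindsdb_sdk.connect().
    """
    # Assumption: each listed project object has a `.name` attribute.
    existing = {project.name for project in server.list_projects()}
    if name in existing:
        return server.get_project(name)
    return server.create_project(name)


# Usage, assuming the `server` connection created earlier:
# project = get_or_create_project(server, "gemini")
```

With this approach, an unexpected error from the server surfaces as an exception instead of silently falling through to get_project.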

After that, we create an ml_engine so that we can easily reuse it later:

# Create an ml_engine
server.ml_engines.create(
    name="google_gemini_engine",
    handler="google_gemini",
    connection_data={
        "google_gemini_api_key": os.getenv("GEMINI_API_KEY")
    }
)
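Note that os.getenv returns None when GEMINI_API_KEY is missing, so a forgotten .env entry would only show up later as a less obvious error. A small guard can fail early with a clearer message. The helper name require_env is hypothetical; the sketch assumes the key is stored in .env as a line of the form GEMINI_API_KEY=your-key.

```python
import os


def require_env(name: str) -> str:
    """Return the value of an environment variable, or fail with a clear message."""
    value = os.getenv(name, "").strip()
    if not value:
        raise RuntimeError(
            f"{name} is not set; add a line like {name}=your-key to .env "
            "or export it in your shell before creating the engine."
        )
    return value


# Usage, assuming load_dotenv() has already run:
# connection_data = {"google_gemini_api_key": require_env("GEMINI_API_KEY")}
```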

With the engine in place, we use it as the core of our Gemini agent. In this experiment, we chose gemini-1.5-flash and defined our own prompt template:

project.models.create(
    name='gemini_agent',
    predict="completion",
    engine='google_gemini_engine',
    prompt_template="You are a helpful AI assistant. Your task is to answer this {{question}}",
    column='question',
    model_name='models/gemini-1.5-flash',
)
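To make the role of the {{question}} placeholder concrete, here is a purely local illustration of how such a template can be filled from an input row. This is not MindsDB's internal implementation, which may differ; render_prompt is a hypothetical helper written only to show the idea of column-based substitution.

```python
import re

# Matches placeholders such as {{question}} or {{ question }}.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render_prompt(template: str, row: dict) -> str:
    """Replace each {{column}} placeholder with the matching value from row."""
    def substitute(match: re.Match) -> str:
        column = match.group(1)
        if column not in row:
            raise KeyError(f"no value supplied for placeholder '{column}'")
        return str(row[column])

    return PLACEHOLDER.sub(substitute, template)


template = "You are a helpful AI assistant. Your task is to answer this {{question}}"
print(render_prompt(template, {"question": "What is your name?"}))
```

The point is that the keys of the dictionary passed at prediction time should match the placeholder names used in the template.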

When everything is done, we can retrieve the model from the project and interact with it.

agent = project.models.get(name='gemini_agent')
result = agent.predict({"question": "What is your name?"})
print(result.completion.iloc[-1])

"""
"I am Gemini, a multimodal AI language model developed by Google. I don't have a name, as I am not a person."
"""

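The same question can also be asked in SQL, for example from the MindsDB editor, by selecting from the model as if it were a table. The sketch below only builds the query string; build_predict_sql is a hypothetical helper, and doubling single quotes follows standard SQL escaping, which is assumed rather than verified to be accepted by the MindsDB parser.

```python
def build_predict_sql(project_name: str, model_name: str, question: str) -> str:
    """Build a SELECT statement that asks the model a single question."""
    # Assumption: single quotes are escaped by doubling, as in standard SQL.
    escaped = question.replace("'", "''")
    return (
        f"SELECT question, completion "
        f"FROM {project_name}.{model_name} "
        f"WHERE question = '{escaped}';"
    )


sql = build_predict_sql("gemini", "gemini_agent", "What's your name?")
print(sql)

# Usage, assuming the `project` object created earlier:
# rows = project.query(sql).fetch()
```

Only use a helper like this with trusted input; it is meant for experimenting, not for handling arbitrary user-supplied text.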
Reference

- MindsDB. MindsDB - Platform for Customizing AI. URL: https://docs.mindsdb.com/what-is-mindsdb
