Real-Time Chat App: Kubernetes Ingress on AWS with React and Node.js

Sprasad Pujari

This post walks through a two-tier project that uses Kubernetes Ingress on AWS, developed in Visual Studio Code. The project is a real-time chat application with a React frontend and a Node.js backend that uses Socket.IO for real-time communication.

Project: Real-Time Chat Application with Kubernetes Ingress on AWS

Project Architecture:

chat-app/

│

├── backend/

│ ├── node_modules/

│ ├── .env

│ ├── Dockerfile

│ ├── package.json

│ └── server.js

│

├── frontend/

│ ├── node_modules/

│ ├── public/

│ ├── src/

│ │ ├── App.js

│ │ ├── index.js

│ │ └── ... (other React files)

│ ├── .env

│ ├── Dockerfile

│ └── package.json

│

├── k8s/

│ ├── backend-deployment.yaml

│ ├── backend-service.yaml

│ ├── frontend-deployment.yaml

│ ├── frontend-service.yaml

│ ├── ingress.yaml

│ └── cluster-issuer.yaml

│

└── README.md

Tier 1: React Frontend

Tier 2: Node.js Backend with Socket.IO

AWS Services: EKS, ECR, Route 53

Additional Components: Kubernetes Ingress, Cert-Manager for SSL

Let's go through this step-by-step:

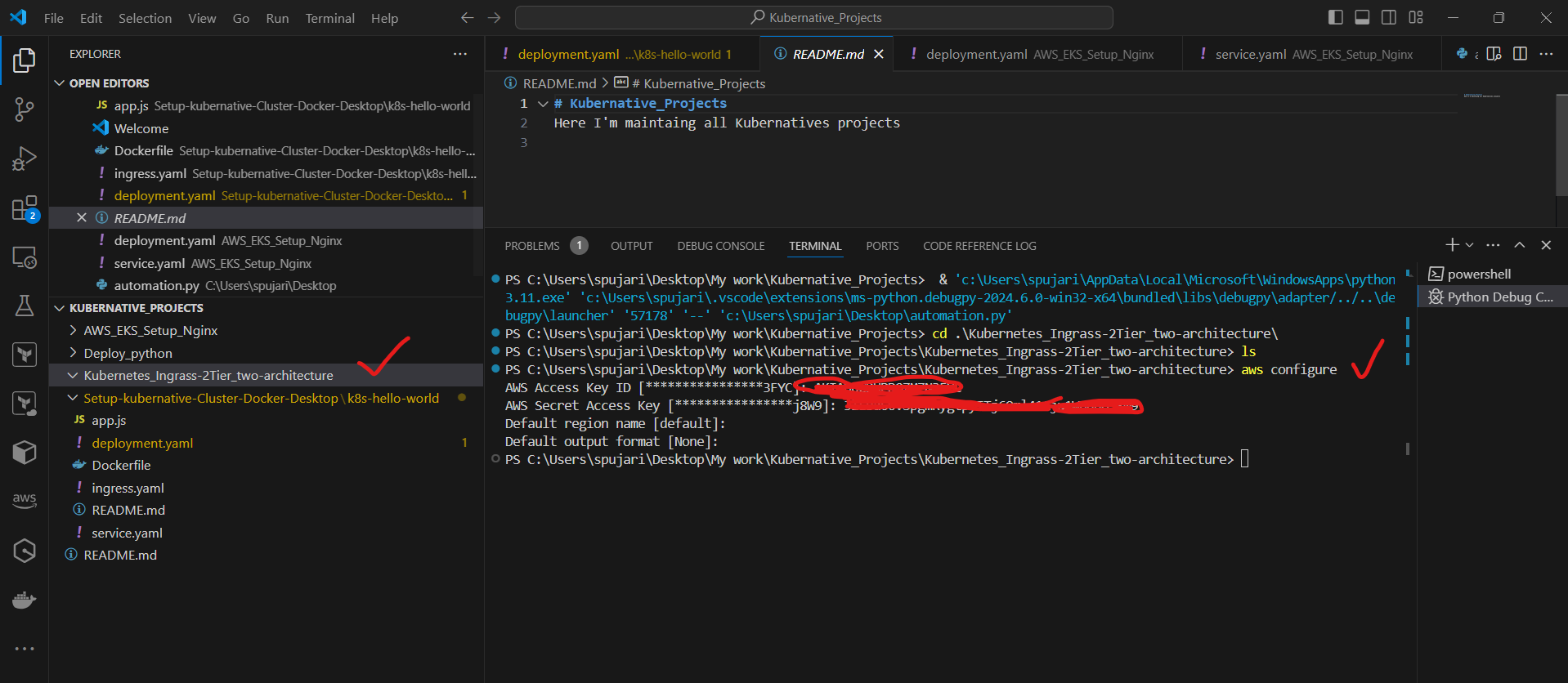

1. Set up the development environment:

a. Install required tools:

Visual Studio Code: https://code.visualstudio.com/

Node.js and npm: https://nodejs.org/

AWS CLI: https://aws.amazon.com/cli/

eksctl: https://eksctl.io/

b. Configure AWS CLI:

aws configure

Enter your AWS Access Key ID, Secret Access Key, and preferred region.

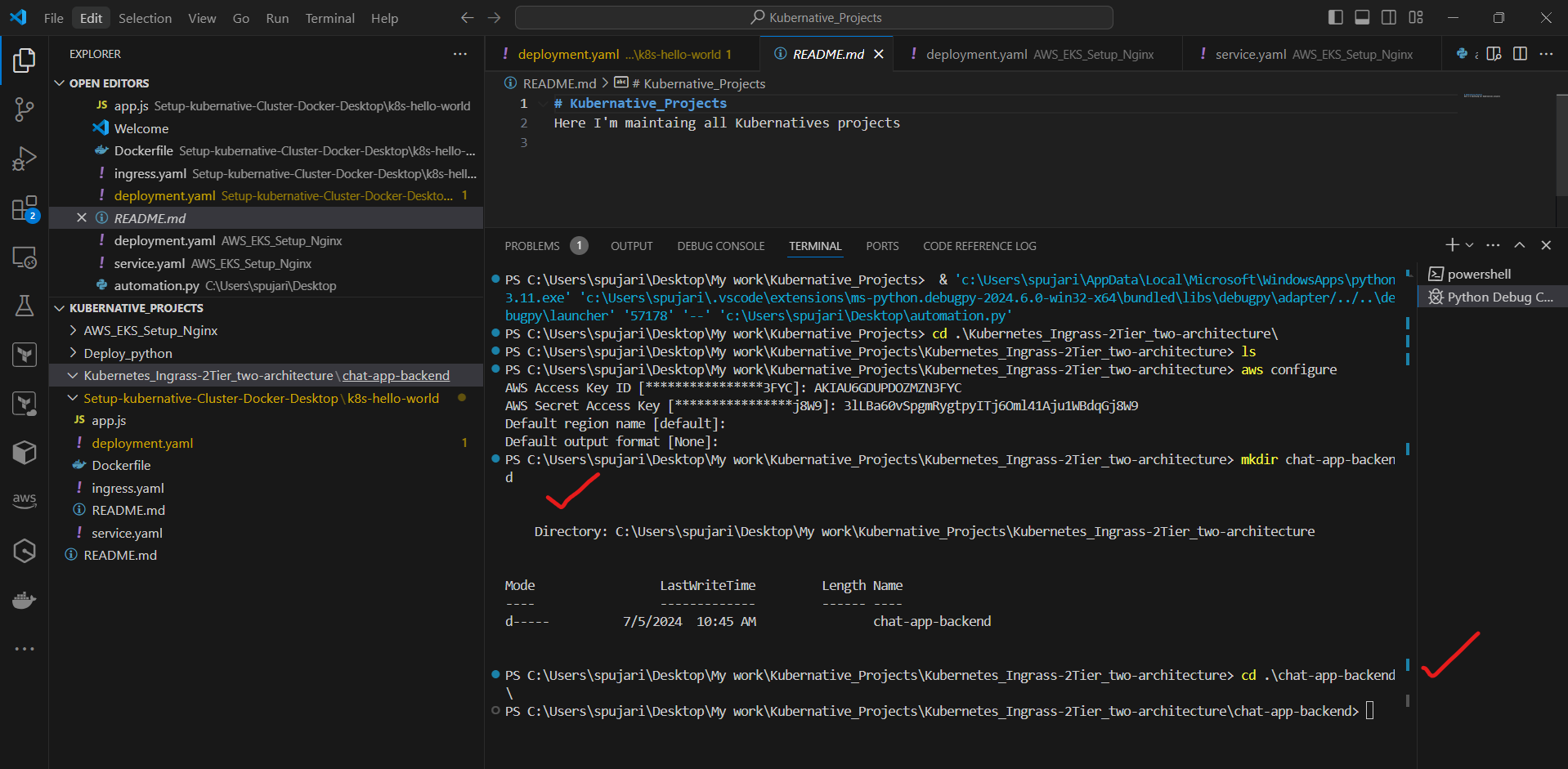

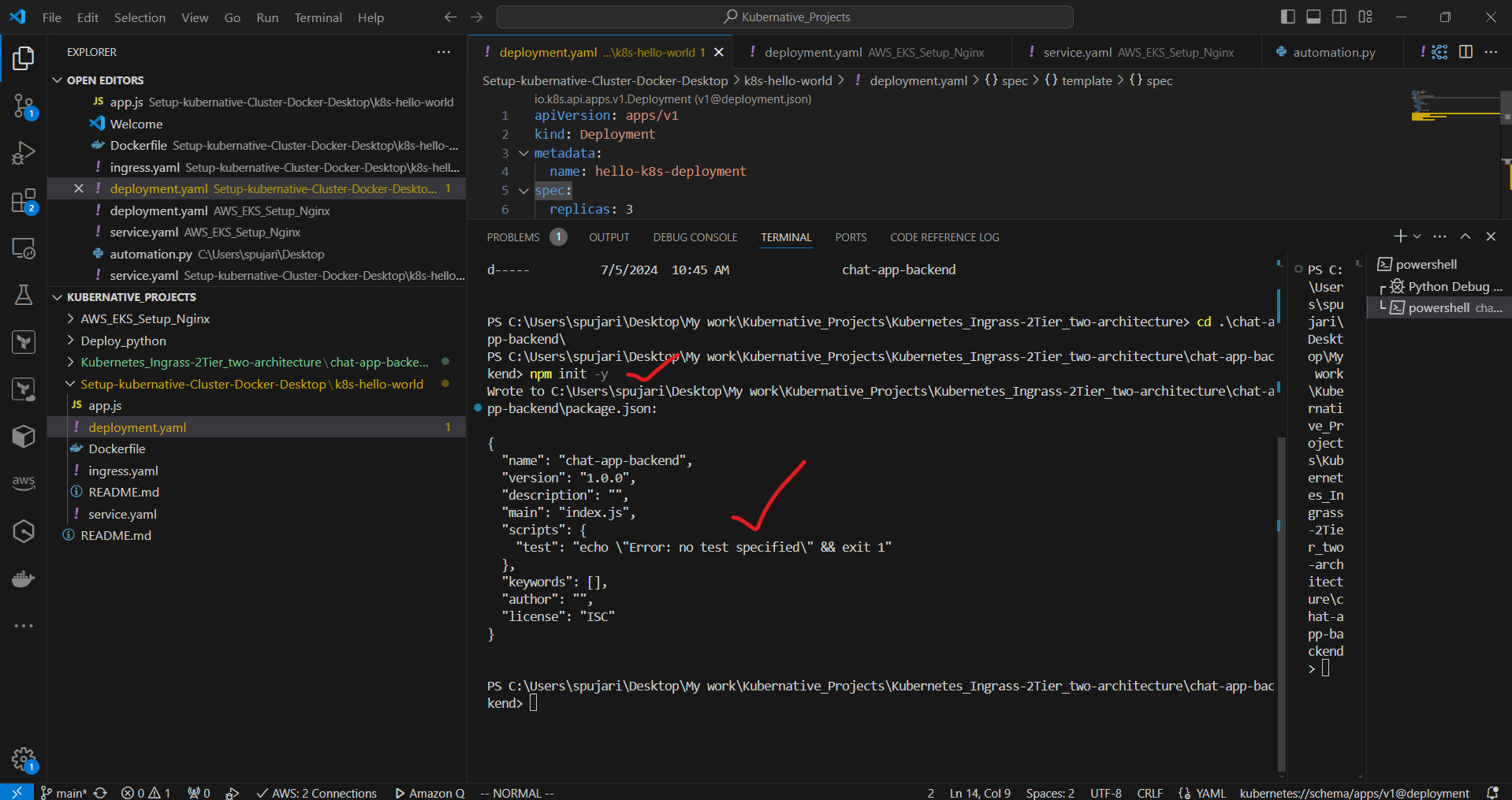

2. Create the backend application:

a. Create a new directory for the backend:

mkdir chat-app-backend

cd chat-app-backend

b. Initialize a new Node.js project:

npm init -y

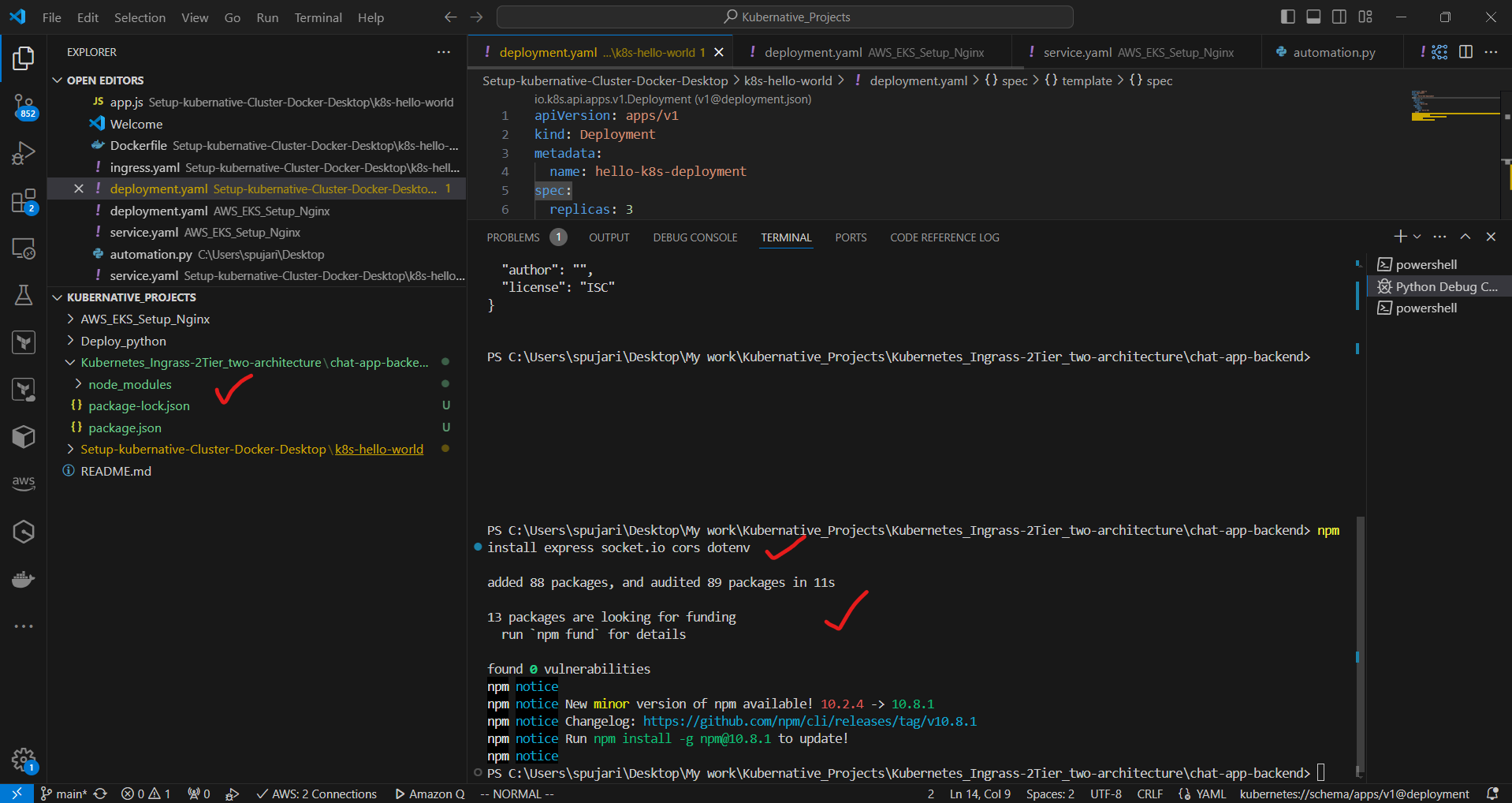

c. Install dependencies:

npm install express socket.io cors dotenv

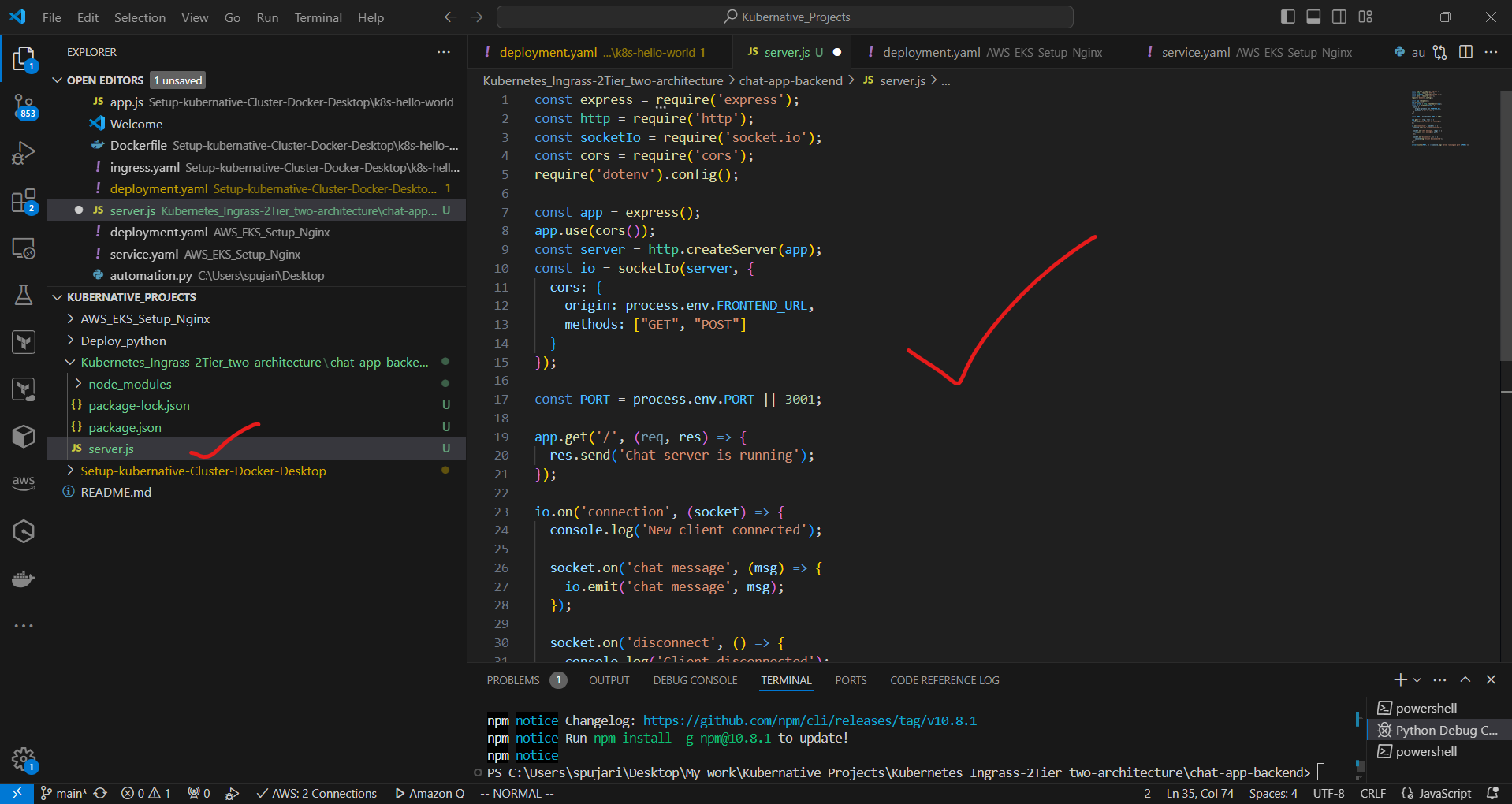

d. Create a new file server.js:

const express = require('express');
const http = require('http');
const socketIo = require('socket.io');
const cors = require('cors');
require('dotenv').config(); // load PORT and FRONTEND_URL from .env

const app = express();
app.use(cors());

const server = http.createServer(app);

// Attach Socket.IO to the HTTP server and allow the frontend origin
const io = socketIo(server, {
  cors: {
    origin: process.env.FRONTEND_URL,
    methods: ["GET", "POST"]
  }
});

const PORT = process.env.PORT || 3001;

// Simple check that the server is up
app.get('/', (req, res) => {
  res.send('Chat server is running');
});

io.on('connection', (socket) => {
  console.log('New client connected');

  // Relay each incoming message to every connected client
  socket.on('chat message', (msg) => {
    io.emit('chat message', msg);
  });

  socket.on('disconnect', () => {
    console.log('Client disconnected');
  });
});

server.listen(PORT, () => console.log(`Server running on port ${PORT}`));
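The server relays every 'chat message' with io.emit, which delivers it to all connected clients, including the sender. The fan-out idea can be sketched without any dependencies as follows. ChatHub, connect, and broadcast are hypothetical names used only for illustration; this is a stand-in, not the Socket.IO API.

```javascript
// Stand-in for the Socket.IO server: NOT the Socket.IO API, only the fan-out idea.
class ChatHub {
  constructor() {
    this.clients = new Set(); // one delivery callback per connected client
  }

  // Register a client; returns a function that disconnects it.
  connect(deliver) {
    this.clients.add(deliver);
    return () => this.clients.delete(deliver);
  }

  // Mirrors io.emit('chat message', msg): every connected client gets the
  // message, including the one that sent it.
  broadcast(msg) {
    for (const deliver of this.clients) deliver(msg);
  }
}

const hub = new ChatHub();
const aliceInbox = [];
const bobInbox = [];
const disconnectAlice = hub.connect((msg) => aliceInbox.push(msg));
hub.connect((msg) => bobInbox.push(msg));

hub.broadcast('hello');        // both inboxes receive it
disconnectAlice();
hub.broadcast('anyone here?'); // only Bob is still connected

console.log(aliceInbox); // [ 'hello' ]
console.log(bobInbox);   // [ 'hello', 'anyone here?' ]
```

In the real server, Socket.IO keeps track of the connected sockets for you, so the connect and disconnect bookkeeping above is already handled.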

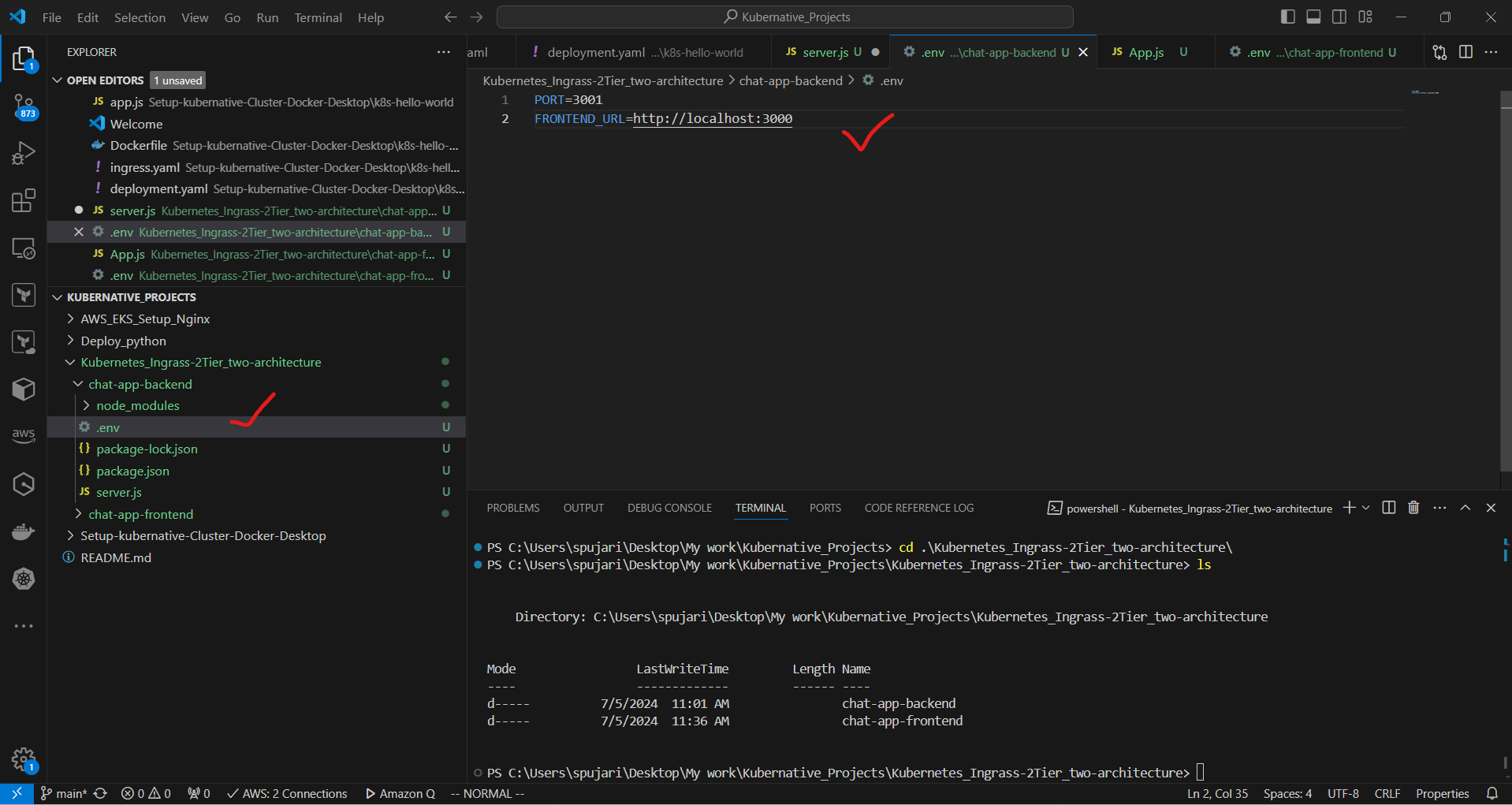

e. Create a .env file:

PORT=3001

FRONTEND_URL=http://localhost:3000
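Because the CORS origin comes straight from FRONTEND_URL, a typo in .env only shows up later as a blocked browser request. A minimal sketch of validating both settings at startup might look like this. loadConfig and frontendOrigin are hypothetical names, not part of the original server.js.

```javascript
// Hypothetical helper: validate backend settings before starting the server.
// env is a plain object such as process.env.
function loadConfig(env) {
  const port = Number(env.PORT || 3001); // same default as server.js
  if (!Number.isInteger(port) || port < 1 || port > 65535) {
    throw new Error(`Invalid PORT: ${env.PORT}`);
  }
  if (!env.FRONTEND_URL) {
    throw new Error('FRONTEND_URL is required');
  }
  // new URL() throws on malformed input; .origin drops any path or trailing
  // slash, which is the form a CORS origin check compares against.
  const frontendOrigin = new URL(env.FRONTEND_URL).origin;
  return { port, frontendOrigin };
}

console.log(loadConfig({ PORT: '3001', FRONTEND_URL: 'http://localhost:3000' }));
// → { port: 3001, frontendOrigin: 'http://localhost:3000' }
```

You could call loadConfig(process.env) right after require('dotenv').config() and pass frontendOrigin as the cors origin option.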

3. Create the frontend application:

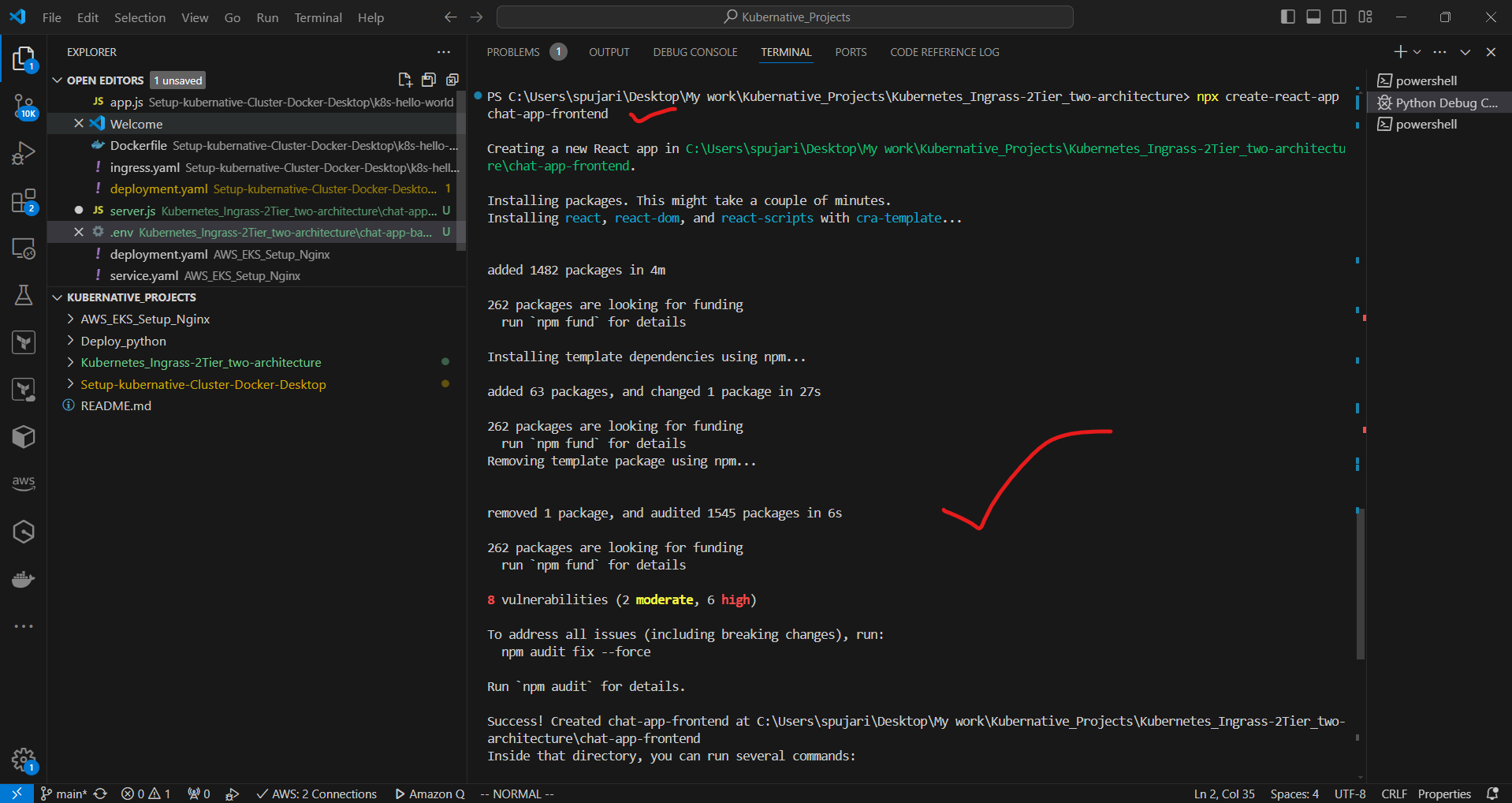

a. Create a new React app:

npx create-react-app chat-app-frontend

cd chat-app-frontend

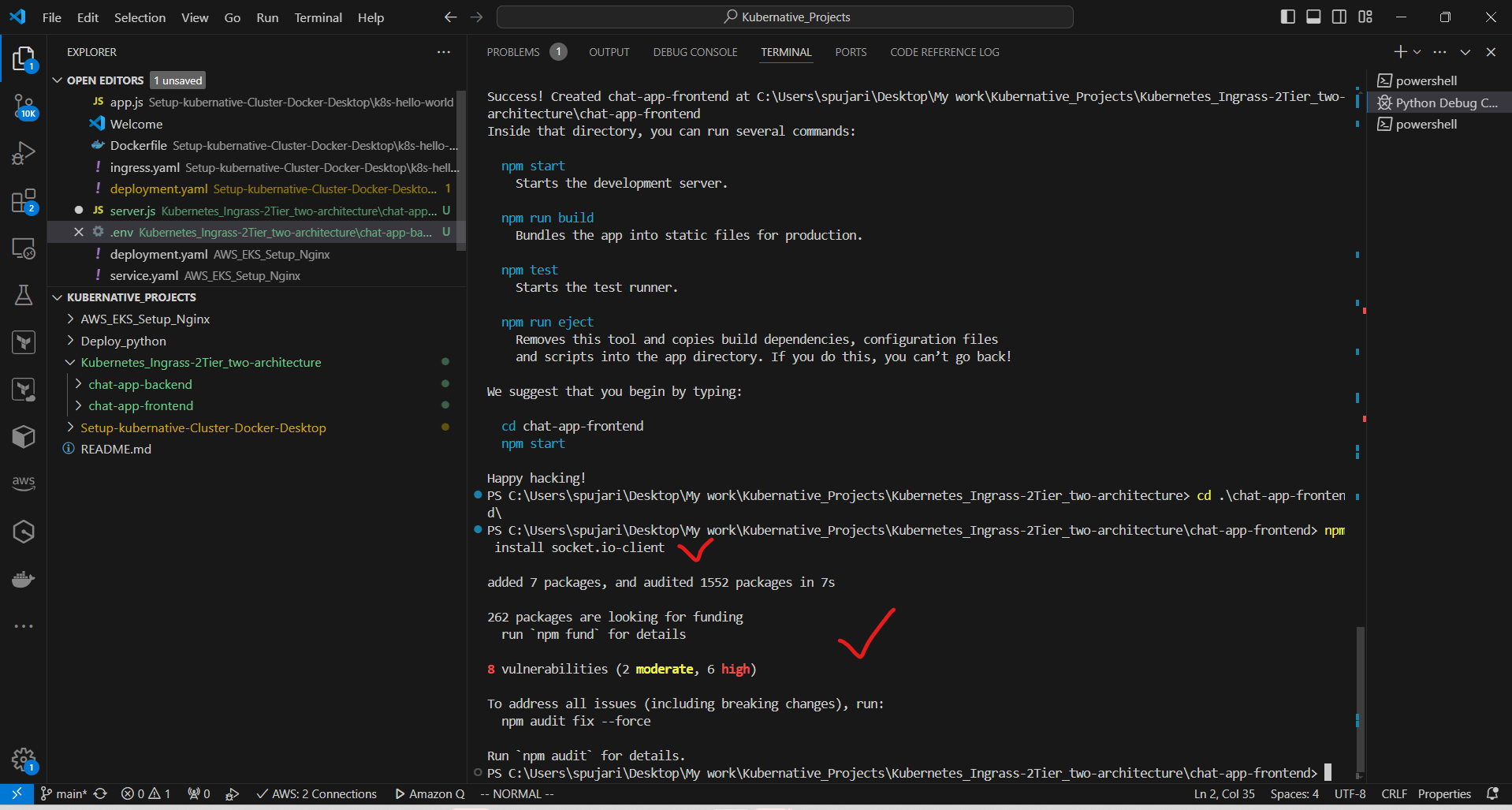

b. Install dependencies:

npm install socket.io-client

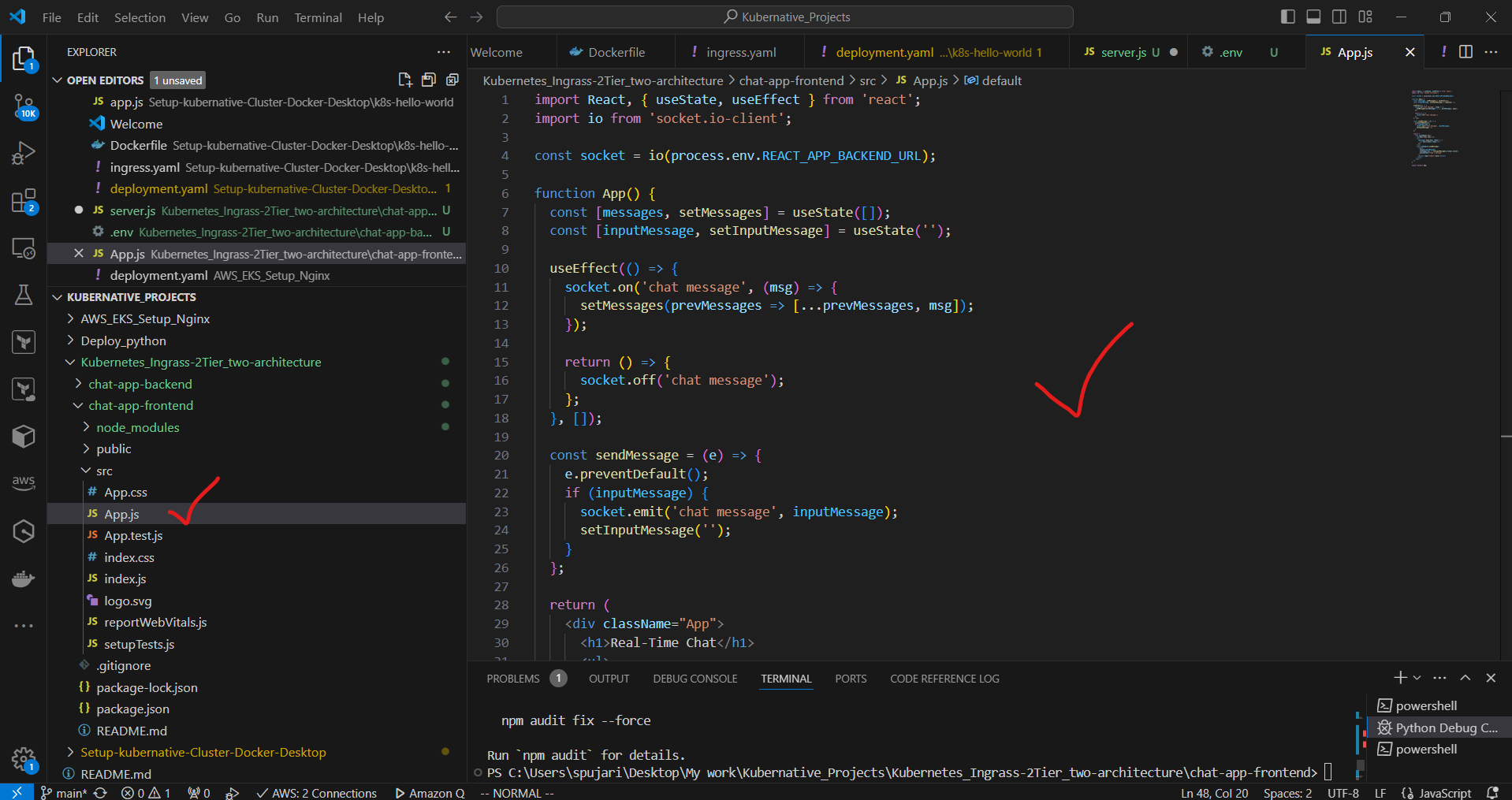

c. Replace the contents of src/App.js:

import React, { useState, useEffect } from 'react';
import io from 'socket.io-client';

// Connect once, when the module loads
const socket = io(process.env.REACT_APP_BACKEND_URL);

function App() {
  const [messages, setMessages] = useState([]);
  const [inputMessage, setInputMessage] = useState('');

  useEffect(() => {
    // Append every broadcast message to the list
    socket.on('chat message', (msg) => {
      setMessages(prevMessages => [...prevMessages, msg]);
    });

    // Remove the listener when the component unmounts
    return () => {
      socket.off('chat message');
    };
  }, []);

  const sendMessage = (e) => {
    e.preventDefault();
    if (inputMessage) {
      socket.emit('chat message', inputMessage);
      setInputMessage('');
    }
  };

  return (
    <div className="App">
      <h1>Real-Time Chat</h1>
      <ul>
        {messages.map((msg, index) => (
          <li key={index}>{msg}</li>
        ))}
      </ul>
      <form onSubmit={sendMessage}>
        <input
          value={inputMessage}
          onChange={(e) => setInputMessage(e.target.value)}
          placeholder="Type a message"
        />
        <button type="submit">Send</button>
      </form>
    </div>
  );
}

export default App;

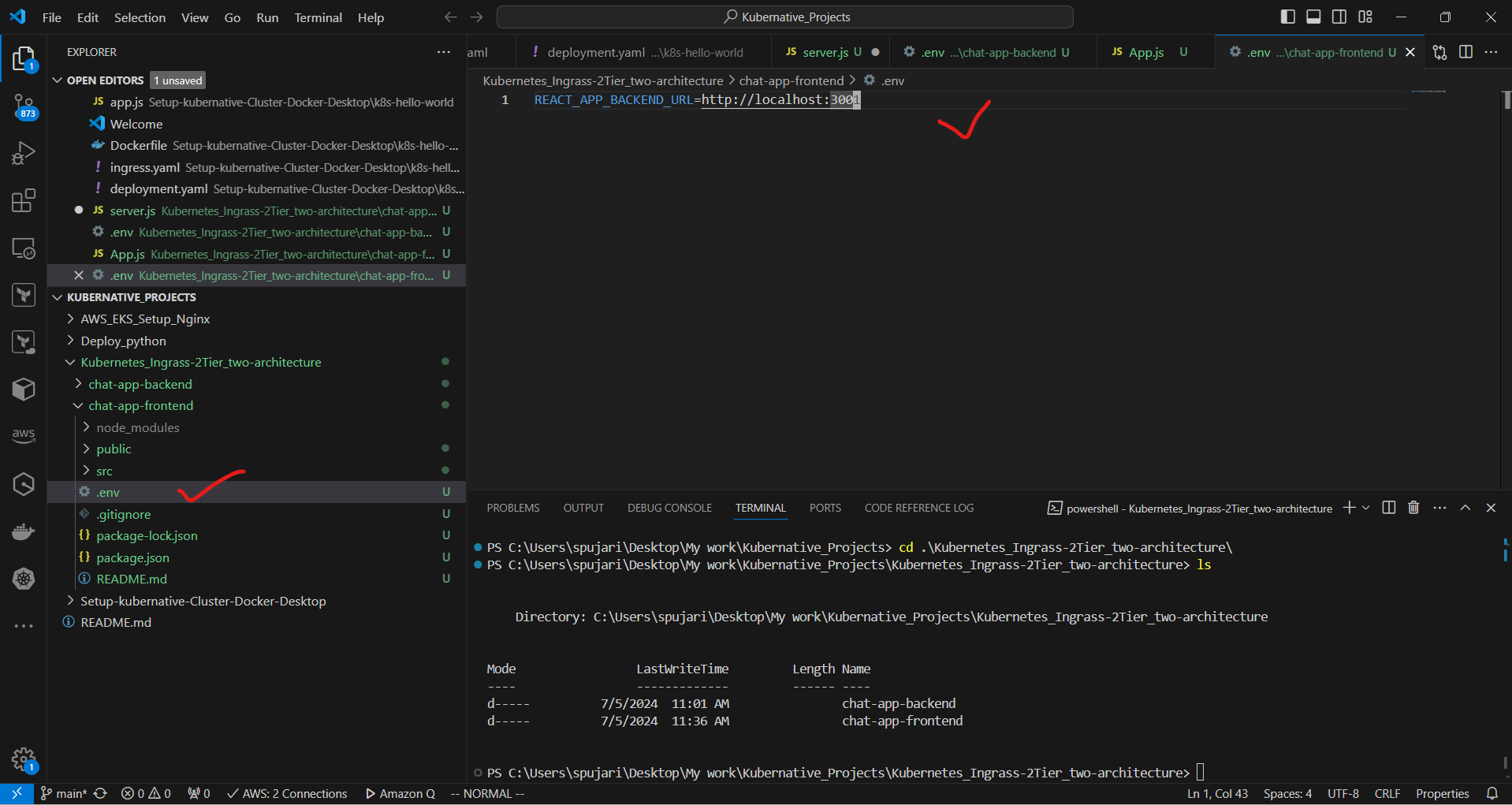

d. Create a .env file in the frontend directory:

REACT_APP_BACKEND_URL=http://localhost:3001
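Keep in mind that Create React App inlines REACT_APP_* variables at build time, so whatever value is present when npm run build runs is what ships in the bundle. One possible way to avoid shipping a localhost URL is to fall back to the page's own origin when the variable is unset, which fits a setup where the same host serves both the frontend and /socket.io. The sketch below assumes that setup; resolveBackendUrl is a hypothetical helper, not part of the original App.js.

```javascript
// Hypothetical helper, not part of the original App.js.
// env:        object holding build-time variables (process.env in a CRA build)
// pageOrigin: origin of the page serving the app (window.location.origin in a browser)
function resolveBackendUrl(env, pageOrigin) {
  const configured = (env.REACT_APP_BACKEND_URL || '').trim();
  // Prefer the configured URL; otherwise connect back to the serving host.
  // Trailing slashes are stripped so the result is in a consistent form.
  return (configured || pageOrigin).replace(/\/+$/, '');
}

console.log(resolveBackendUrl({ REACT_APP_BACKEND_URL: 'http://localhost:3001' }, 'http://localhost:3000'));
// → http://localhost:3001
console.log(resolveBackendUrl({}, 'https://your-domain.com'));
// → https://your-domain.com
```

In the browser this would be used as io(resolveBackendUrl(process.env, window.location.origin)).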

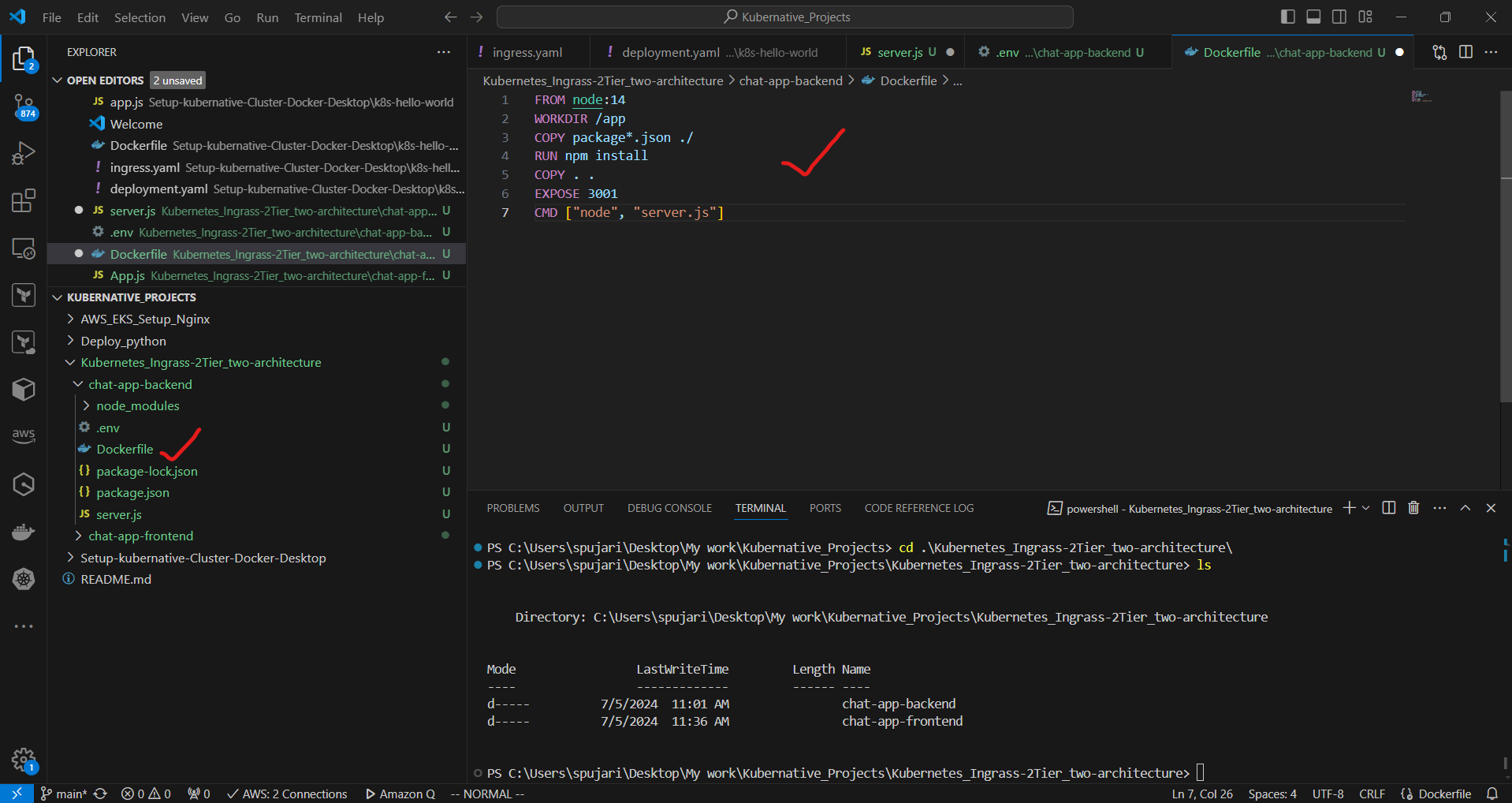

4. Containerize the applications:

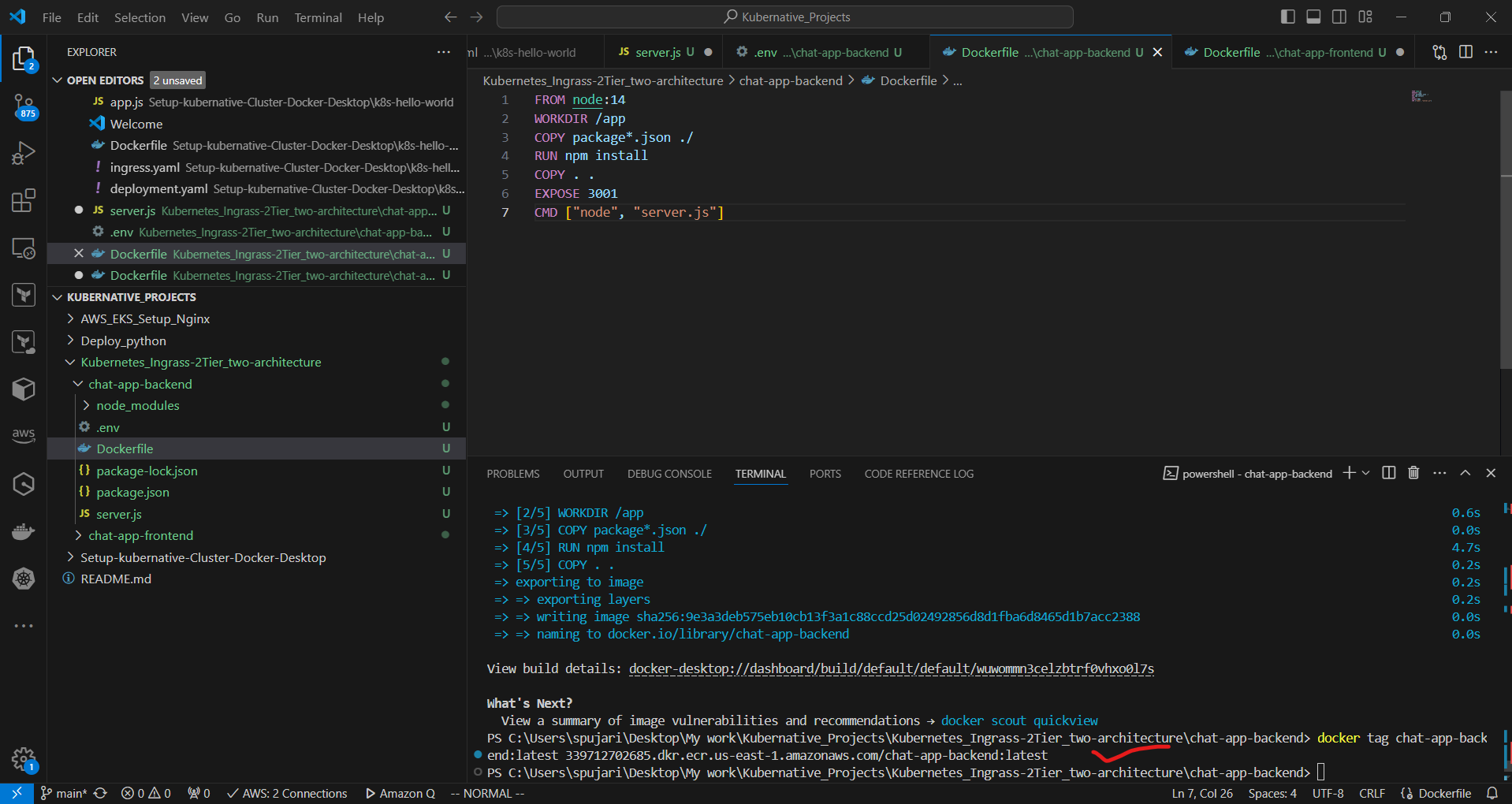

a. In the backend directory, create a Dockerfile:

FROM node:14

WORKDIR /app

COPY package*.json ./

RUN npm install

COPY . .

EXPOSE 3001

CMD ["node", "server.js"]

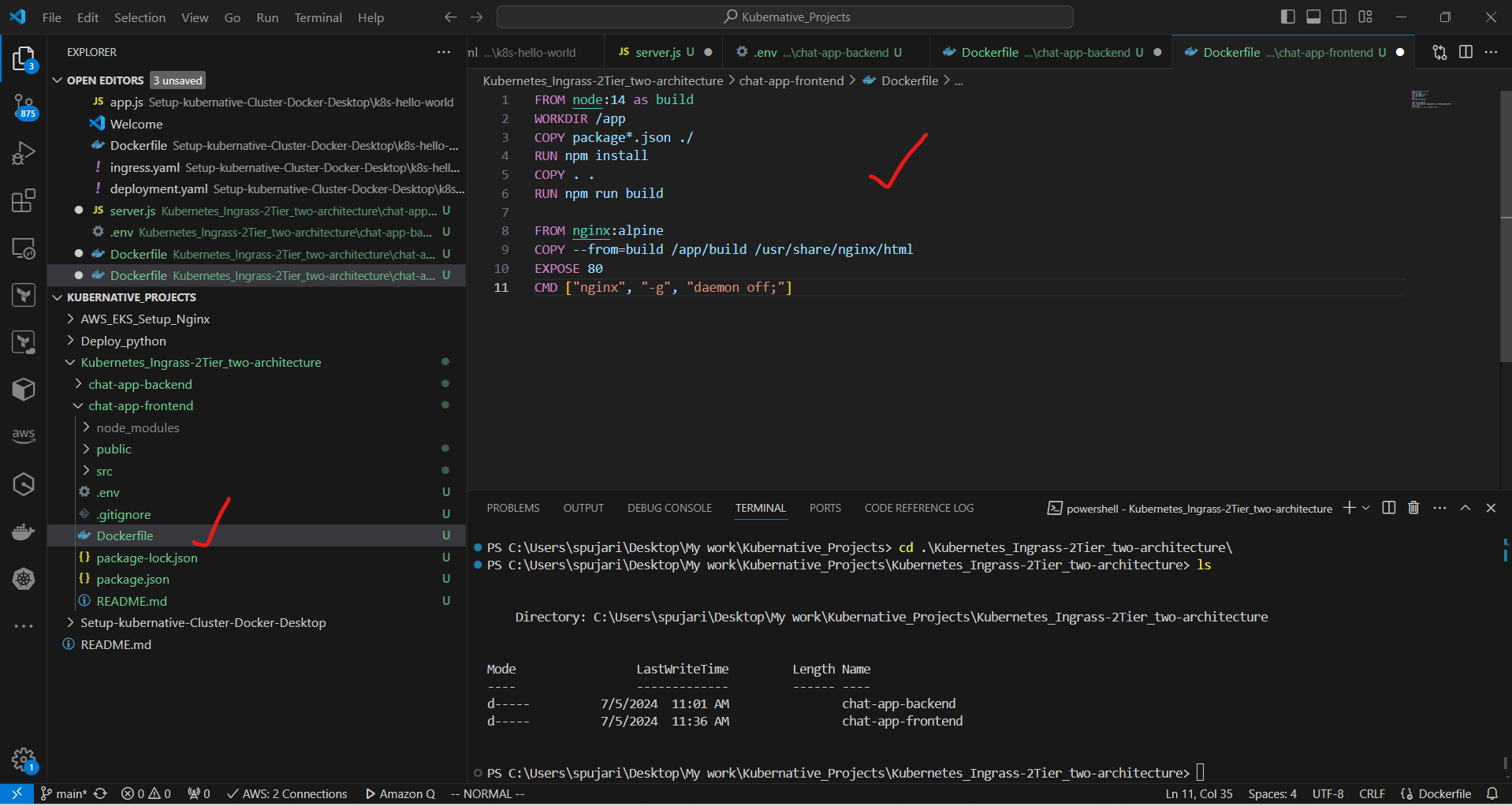

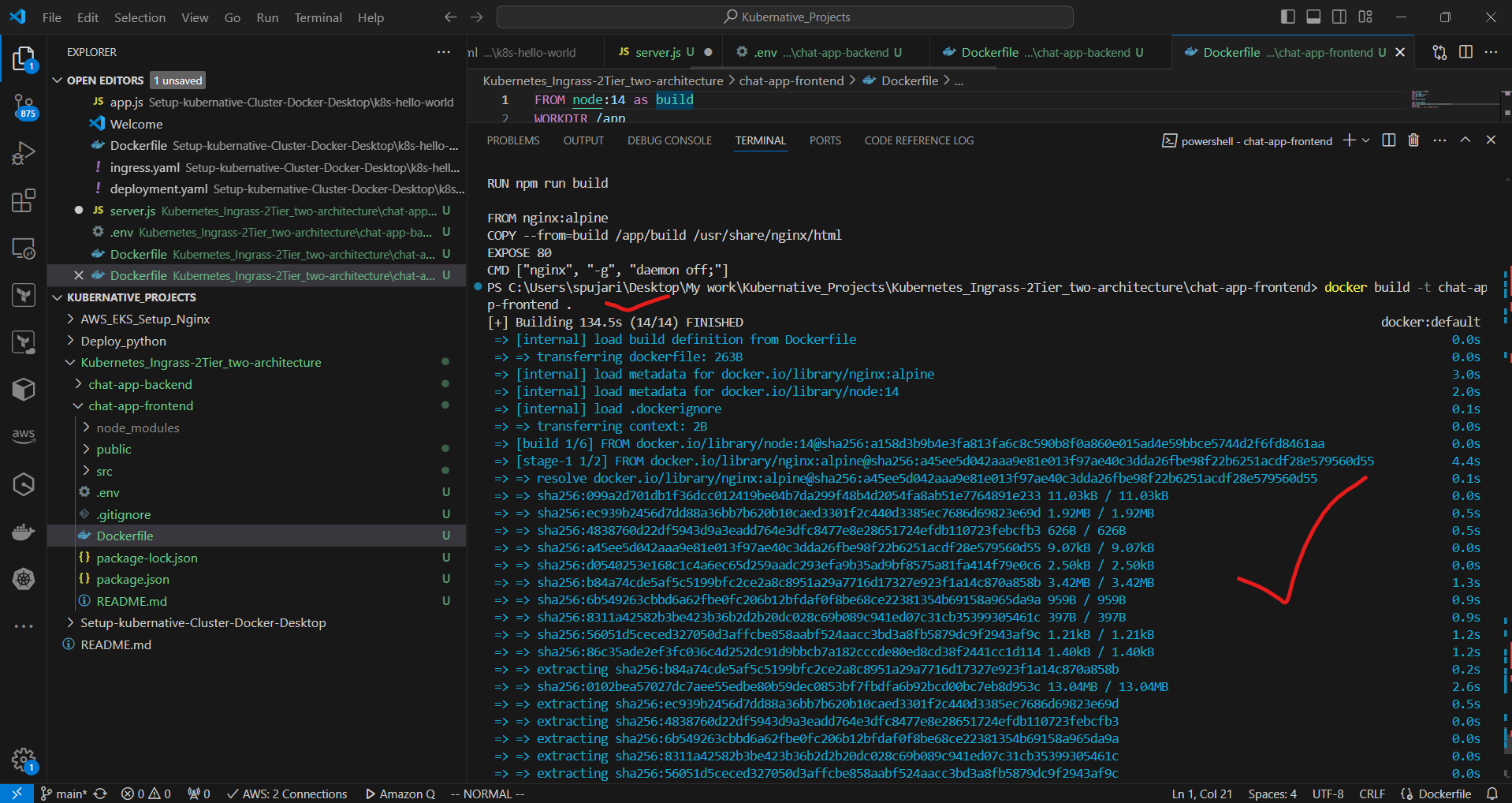

b. In the frontend directory, create a Dockerfile:

FROM node:14 as build

WORKDIR /app

COPY package*.json ./

RUN npm install

COPY . .

RUN npm run build

FROM nginx:alpine

COPY --from=build /app/build /usr/share/nginx/html

EXPOSE 80

CMD ["nginx", "-g", "daemon off;"]

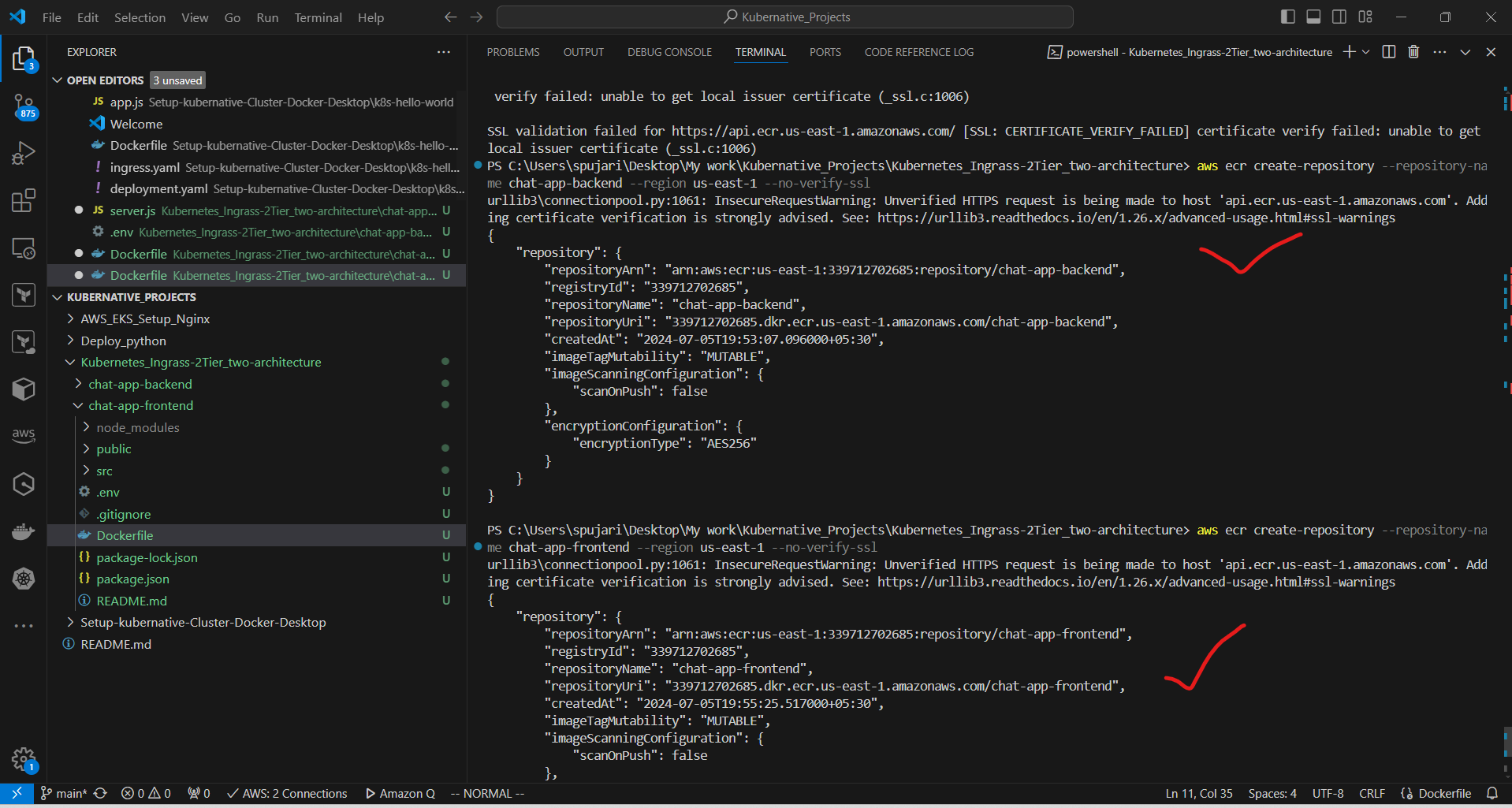

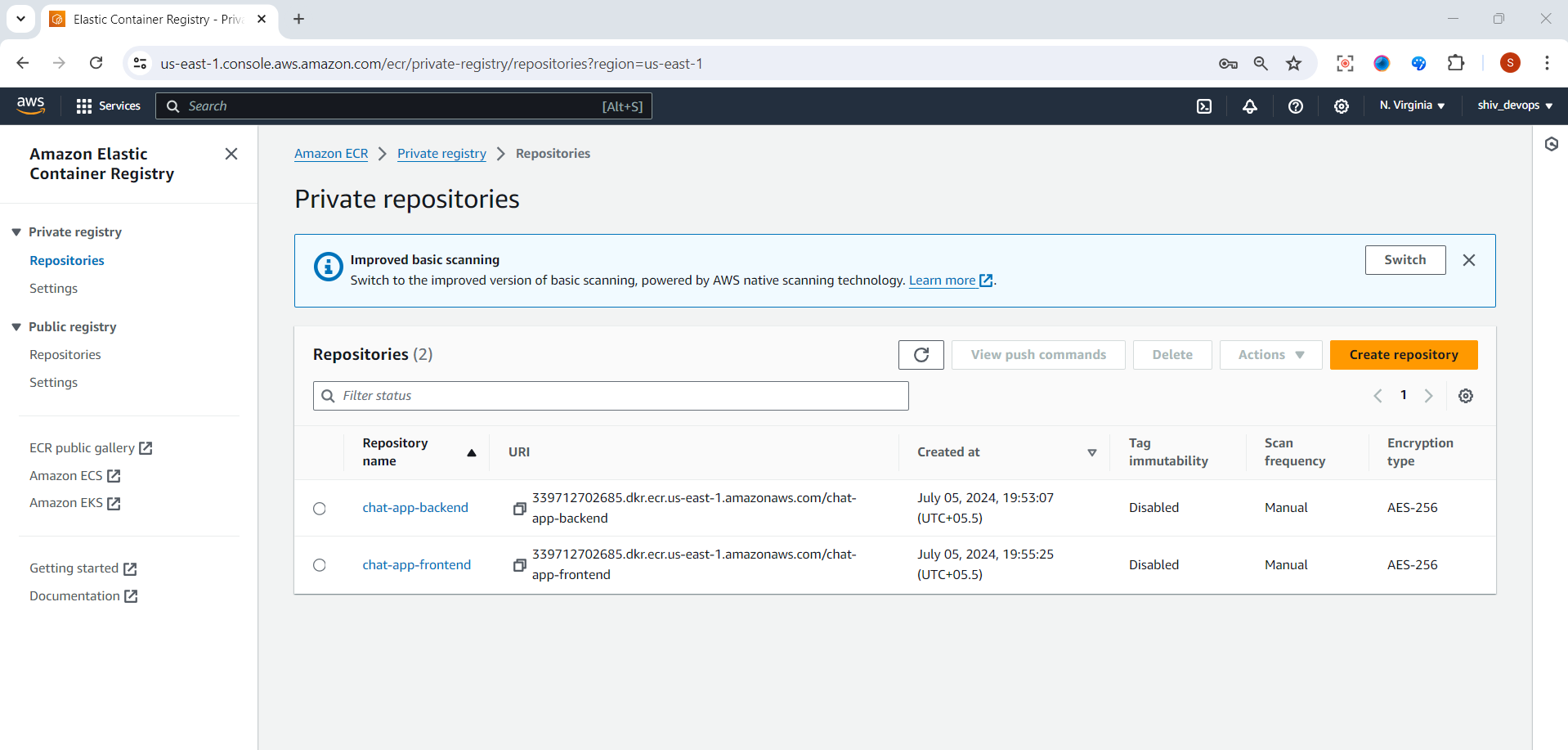

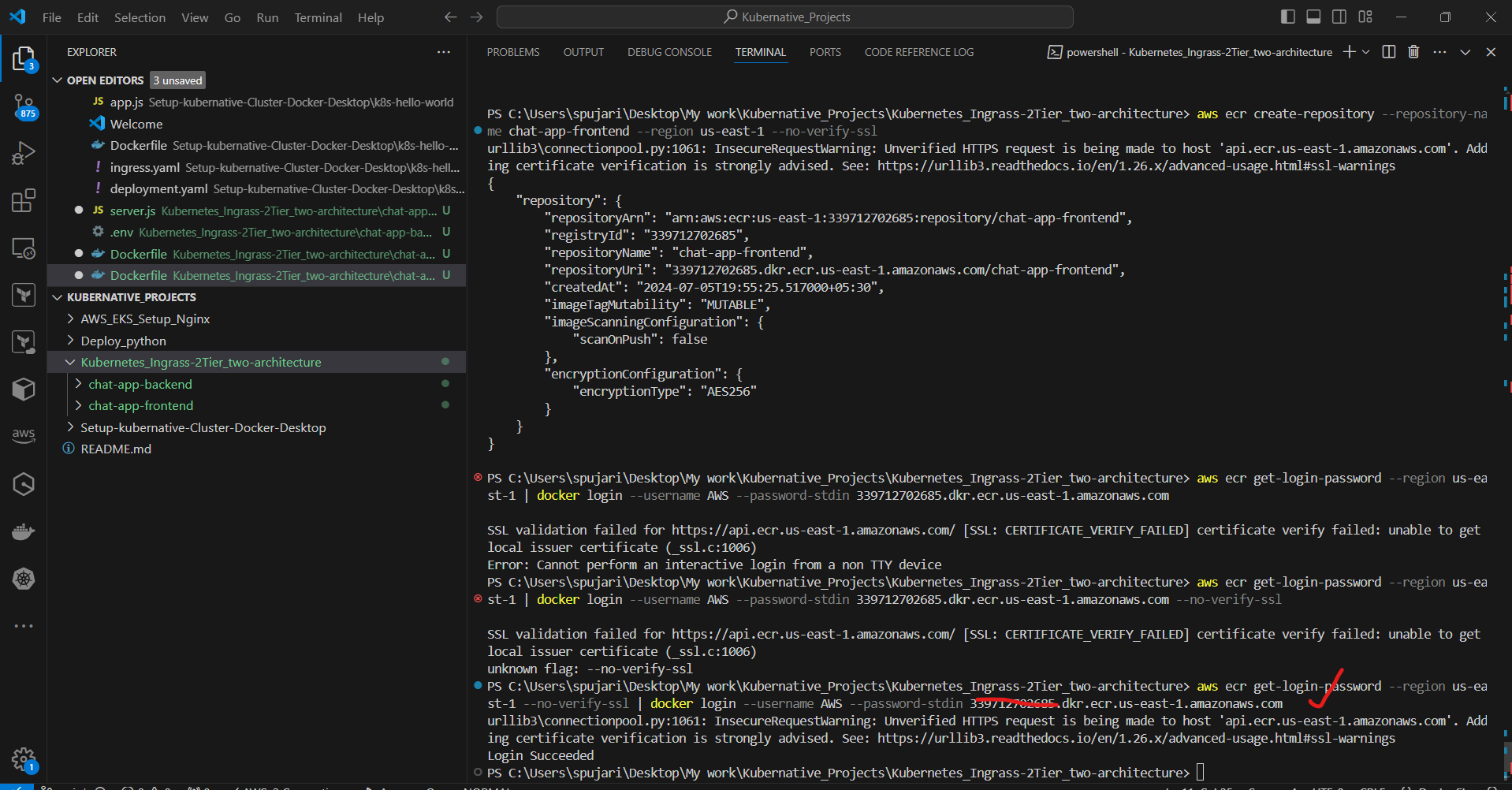

5. Set up Amazon ECR repositories:

a. Create repositories:

aws ecr create-repository --repository-name chat-app-backend

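/* If the command above fails, specify the region explicitly. Note that --no-verify-ssl disables TLS certificate verification, so use it only as a last resort. */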

aws ecr create-repository --repository-name chat-app-backend --region us-east-1 --no-verify-ssl

aws ecr create-repository --repository-name chat-app-frontend

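/* If the command above fails, specify the region explicitly. Note that --no-verify-ssl disables TLS certificate verification, so use it only as a last resort. */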

aws ecr create-repository --repository-name chat-app-frontend --region us-east-1 --no-verify-ssl

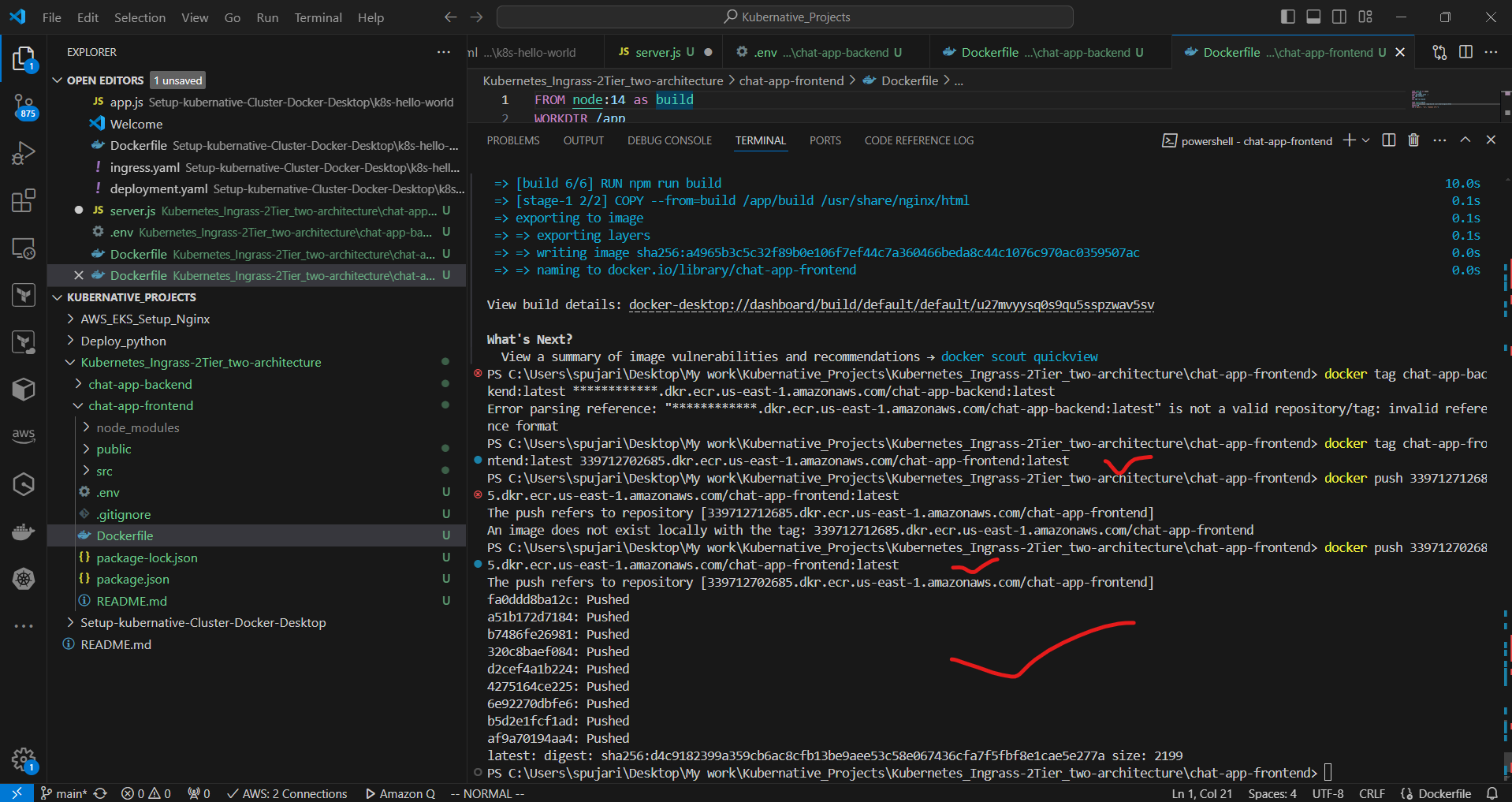

b. Build and push Docker images:

# Login to ECR

aws ecr get-login-password --region us-east-1 --no-verify-ssl | docker login --username AWS --password-stdin 339712702685.dkr.ecr.us-east-1.amazonaws.com

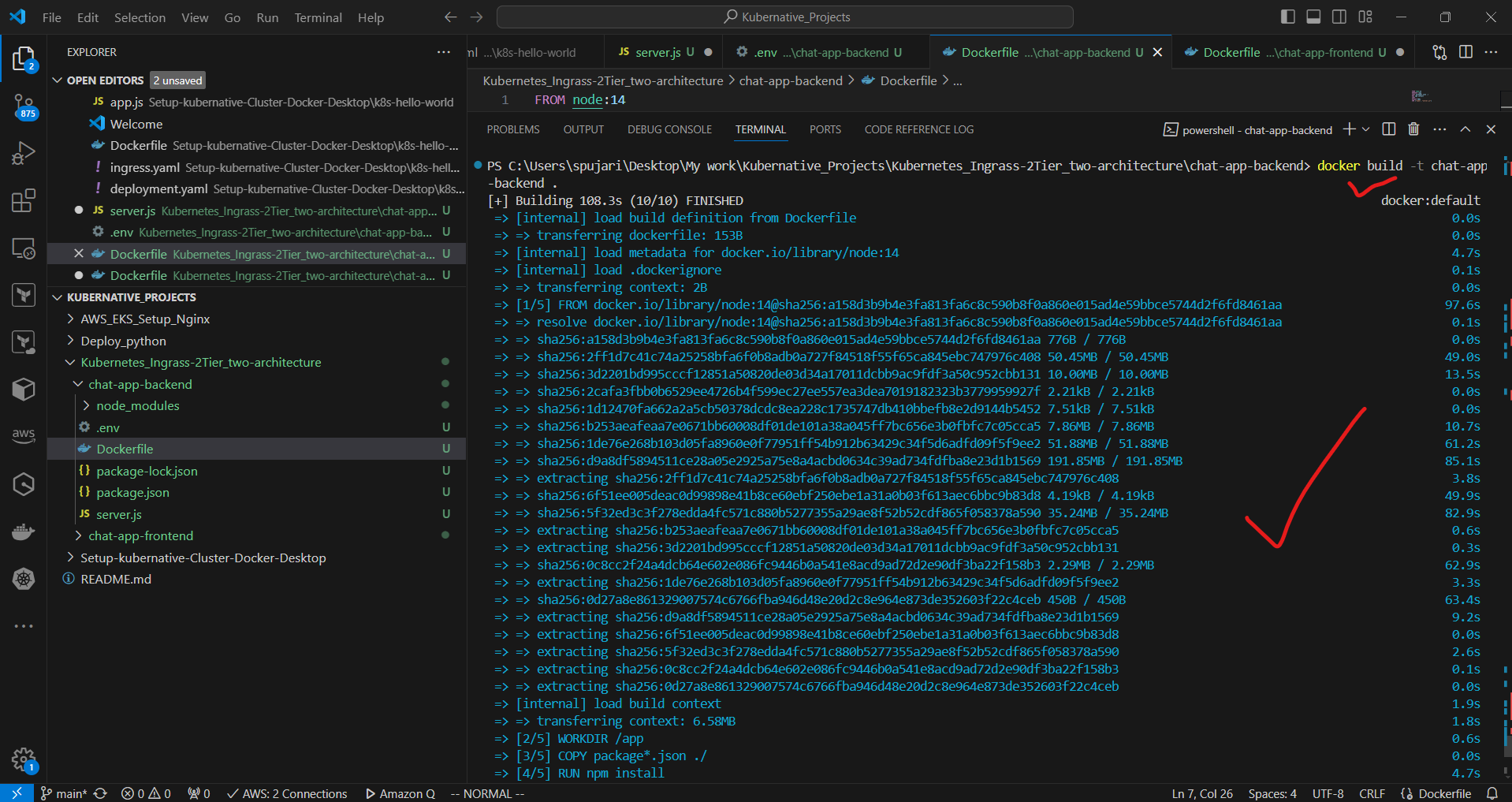

# Build and push backend

docker build -t chat-app-backend ./chat-app-backend

docker tag chat-app-backend:latest ************.dkr.ecr.us-east-1.amazonaws.com/chat-app-backend:latest

This command does the following:

It gives your local Docker image chat-app-backend:latest a new tag that points to your Amazon ECR repository.

The new tag includes:

Your AWS account ID: ***********

The ECR domain: dkr.ecr.us-east-1.amazonaws.com

The repository name: chat-app-backend

The image tag: latest
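The four parts listed above always combine in the same order, so the full image reference can be assembled mechanically. Here is a small sketch; ecrImageUri is a hypothetical helper and 123456789012 is a placeholder account ID, not a real one.

```javascript
// Hypothetical helper that assembles an ECR image reference from its parts.
function ecrImageUri({ accountId, region, repository, tag = 'latest' }) {
  if (!/^\d{12}$/.test(accountId)) {
    throw new Error('AWS account IDs are 12 digits');
  }
  // <account>.dkr.ecr.<region>.amazonaws.com/<repository>:<tag>
  return `${accountId}.dkr.ecr.${region}.amazonaws.com/${repository}:${tag}`;
}

console.log(ecrImageUri({
  accountId: '123456789012', // placeholder
  region: 'us-east-1',
  repository: 'chat-app-backend',
}));
// → 123456789012.dkr.ecr.us-east-1.amazonaws.com/chat-app-backend:latest
```

Generating the reference from one place helps keep the account ID identical across the docker tag, docker push, and Deployment image lines.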

docker push 339712702685.dkr.ecr.us-east-1.amazonaws.com/chat-app-backend:latest

Now build and push the frontend:

# Build and push frontend

docker build -t chat-app-frontend ./chat-app-frontend

docker tag chat-app-frontend:latest 339712702685.dkr.ecr.us-east-1.amazonaws.com/chat-app-frontend:latest

docker push 339712702685.dkr.ecr.us-east-1.amazonaws.com/chat-app-frontend:latest

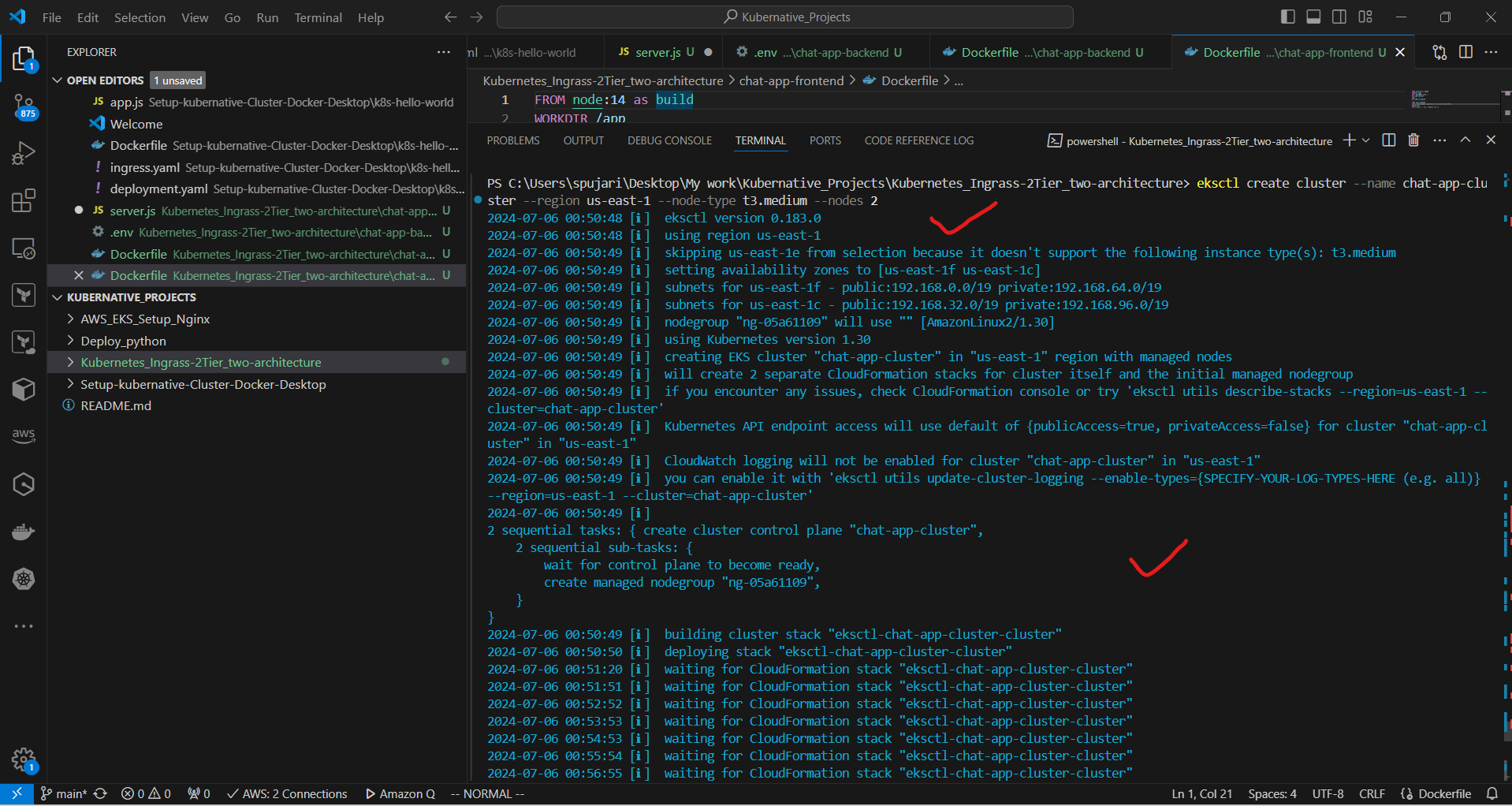

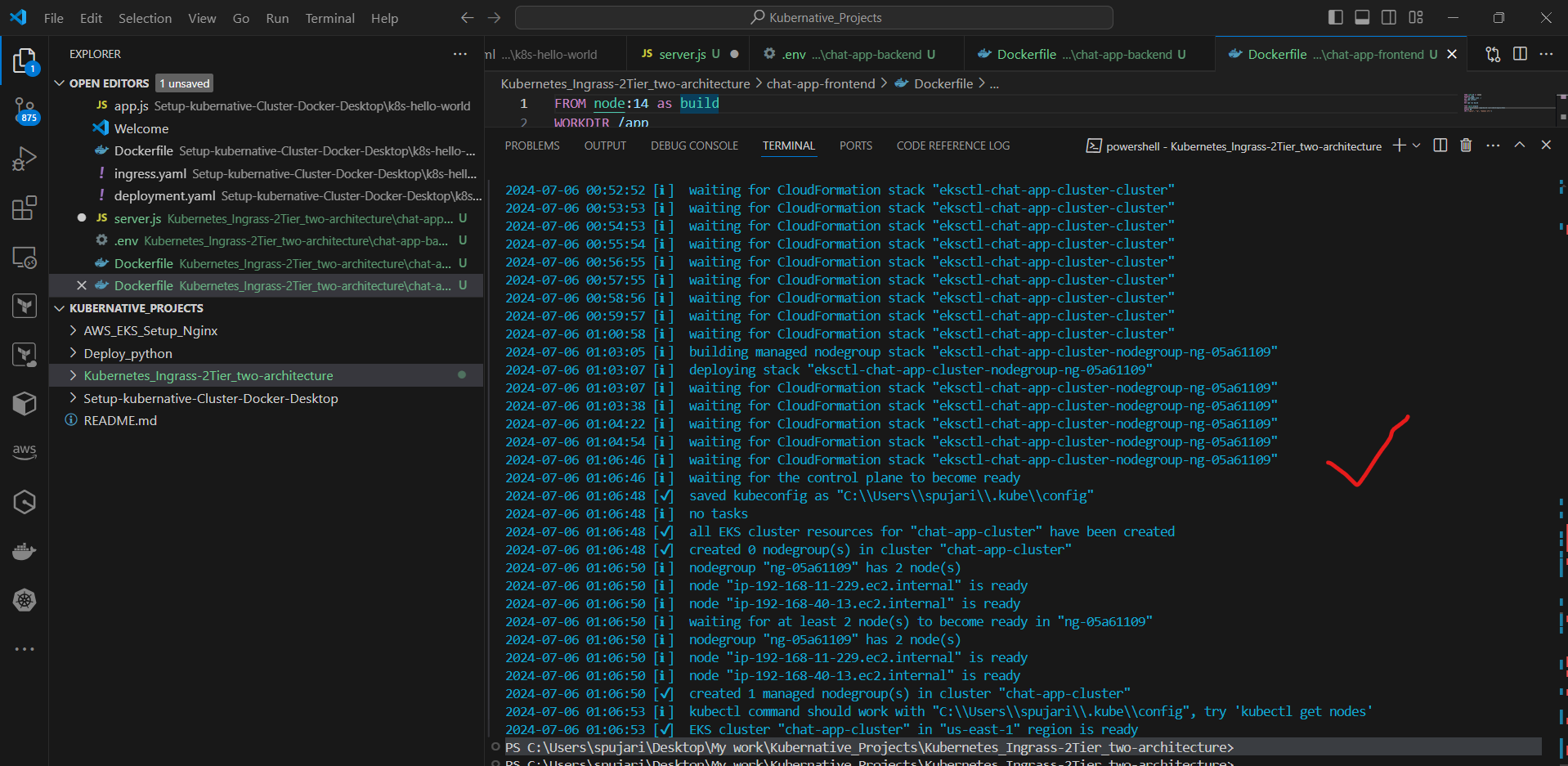

6. Create an Amazon EKS cluster:

a. Create the cluster:

eksctl create cluster --name chat-app-cluster --region us-east-1 --node-type t3.medium --nodes 2

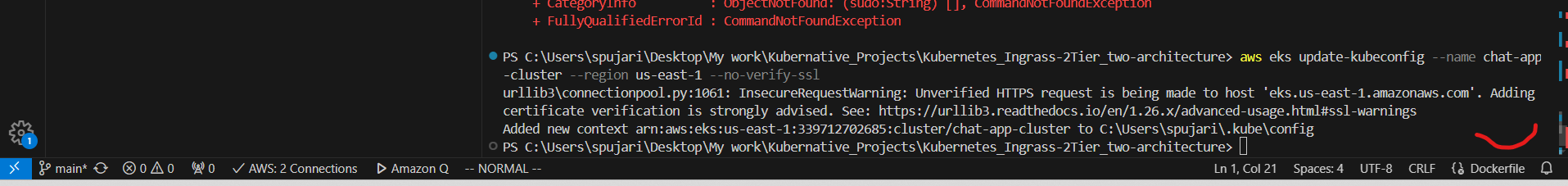

b. Update kubeconfig:

aws eks update-kubeconfig --name chat-app-cluster --region us-east-1 --no-verify-ssl

7. Deploy the application to EKS:

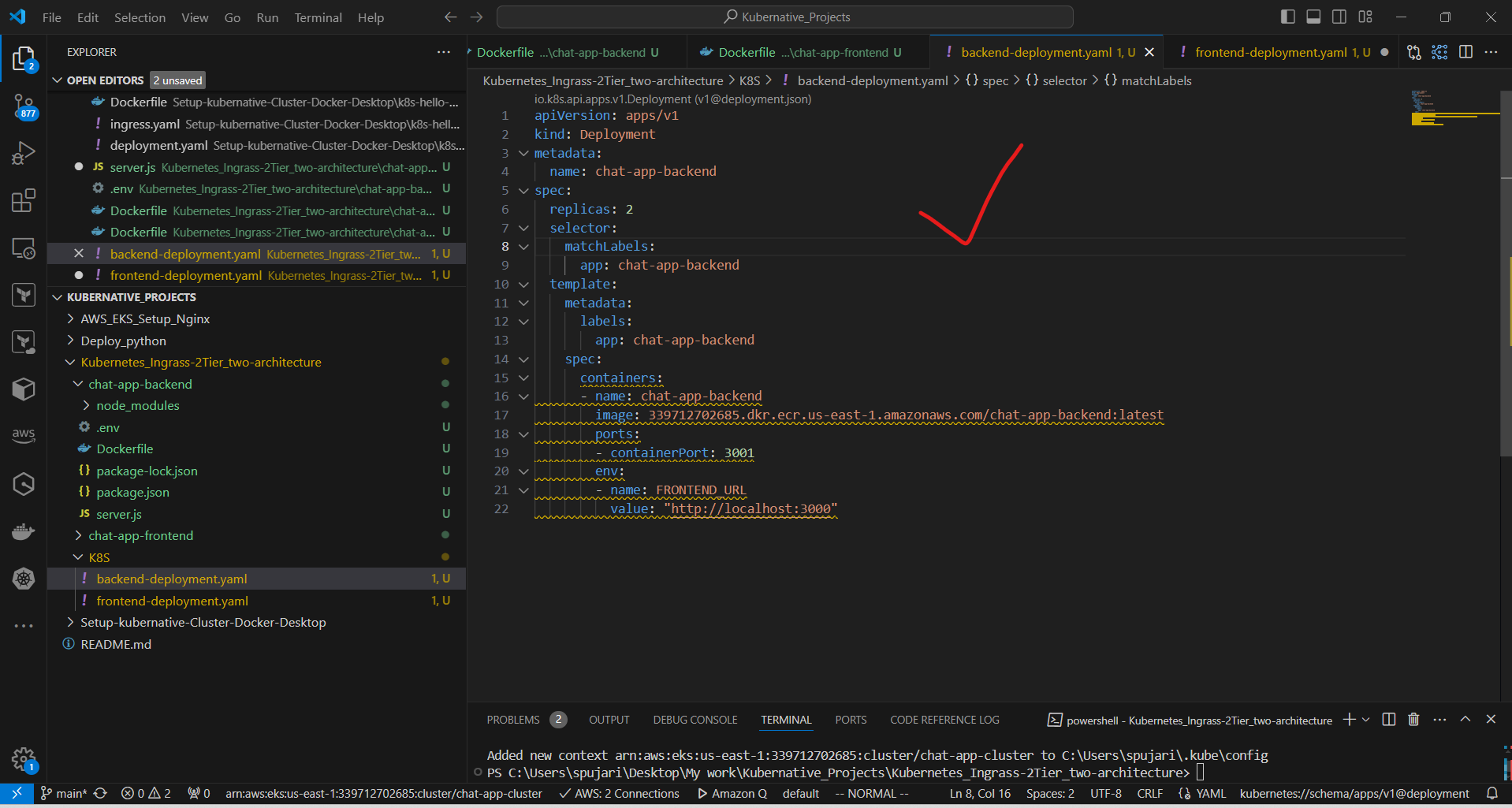

a. Create backend-deployment.yaml:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: chat-app-backend
spec:
  replicas: 2
  selector:
    matchLabels:
      app: chat-app-backend
  template:
    metadata:
      labels:
        app: chat-app-backend
    spec:
      containers:
        - name: chat-app-backend
          image: 339712702685.dkr.ecr.us-east-1.amazonaws.com/chat-app-backend:latest
          ports:
            - containerPort: 3001
          env:
            - name: FRONTEND_URL
              value: "http://localhost:3000"

Key changes:

The image field now points to your ECR repository URL (account ID 339712702685, region us-east-1).

Notes:

The FRONTEND_URL environment variable is still set to "http://localhost:3000". Replace it with your actual frontend domain, for example "https://your-domain.com".

Make sure you've created the ECR repository named "chat-app-backend" and pushed your image to it.

The containerPort is set to 3001. Ensure this matches the port your application is listening on.

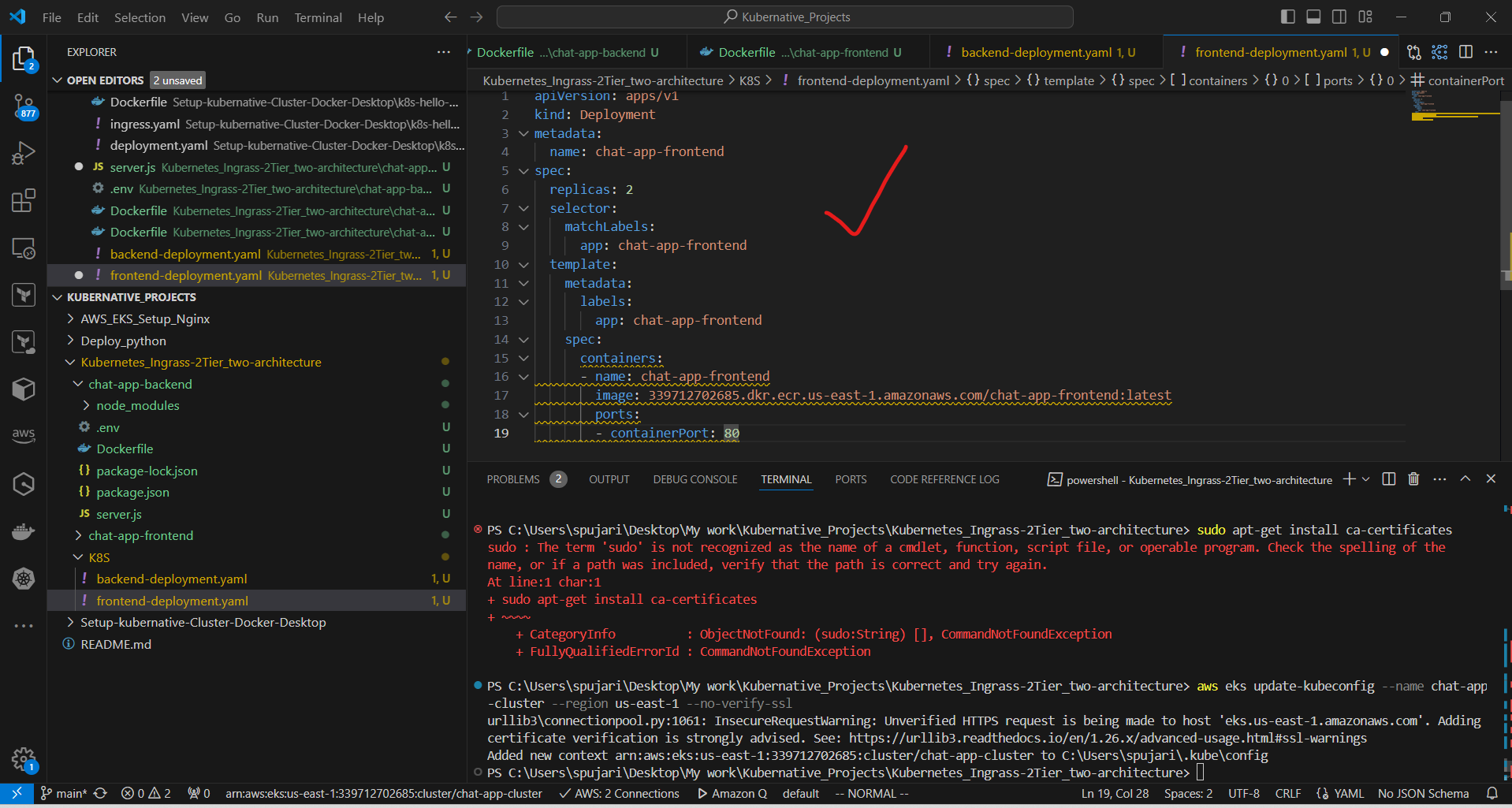

b. Create frontend-deployment.yaml:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: chat-app-frontend
spec:
  replicas: 2
  selector:
    matchLabels:
      app: chat-app-frontend
  template:
    metadata:
      labels:
        app: chat-app-frontend
    spec:
      containers:
        - name: chat-app-frontend
          image: 339712702685.dkr.ecr.us-east-1.amazonaws.com/chat-app-frontend:latest
          ports:
            - containerPort: 80

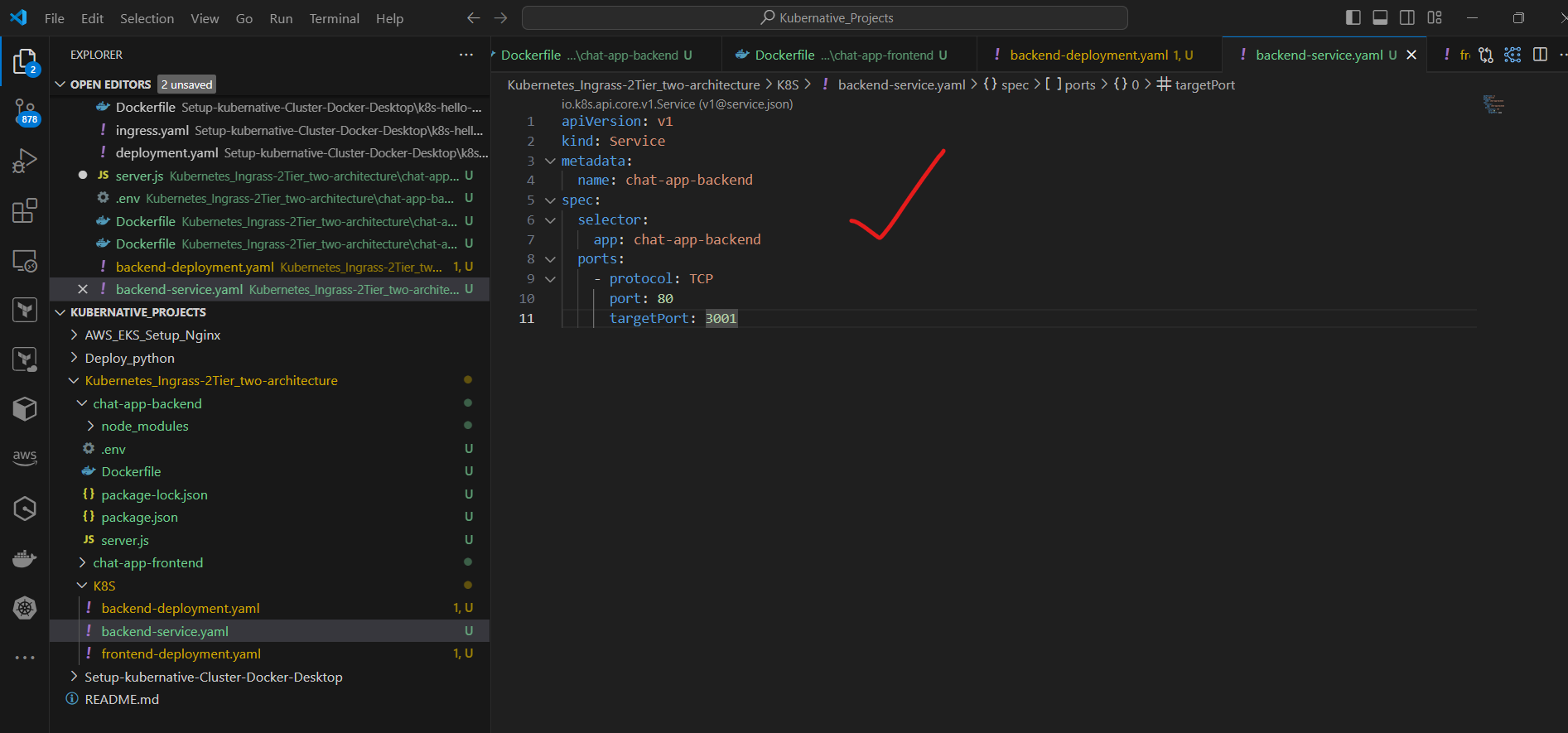

c. Create backend-service.yaml:

apiVersion: v1
kind: Service
metadata:
  name: chat-app-backend
spec:
  selector:
    app: chat-app-backend
  ports:
    - protocol: TCP
      port: 80
      targetPort: 3001

This Service:

Is named "chat-app-backend"

Selects pods with the label app: chat-app-backend

Exposes port 80 inside the cluster (no type is set, so it defaults to ClusterIP)

Routes traffic to port 3001 on the selected pods (your backend application port)
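The selection and port-mapping behaviour described above can be modelled in a few lines. This is a simplified sketch, not the Kubernetes API: resolveEndpoints is a hypothetical function and the pod IPs are made up for illustration.

```javascript
// Simplified model of a Service: only the label selection and port mapping.
function resolveEndpoints(service, pods) {
  const selector = Object.entries(service.selector);
  return pods
    // a pod is selected when it carries every label in the selector
    .filter((pod) => selector.every(([key, value]) => pod.labels[key] === value))
    // traffic sent to service.port is forwarded to targetPort on each pod
    .map((pod) => `${pod.ip}:${service.targetPort}`);
}

const backendService = {
  selector: { app: 'chat-app-backend' },
  port: 80,
  targetPort: 3001,
};

const pods = [
  { ip: '10.0.1.5', labels: { app: 'chat-app-backend' } },  // made-up IPs
  { ip: '10.0.1.6', labels: { app: 'chat-app-frontend' } },
  { ip: '10.0.2.7', labels: { app: 'chat-app-backend' } },
];

console.log(resolveEndpoints(backendService, pods));
// → [ '10.0.1.5:3001', '10.0.2.7:3001' ]
```

If a Deployment's pod labels and the Service selector drift apart, the list comes back empty, which is the usual cause of a Service with no endpoints.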

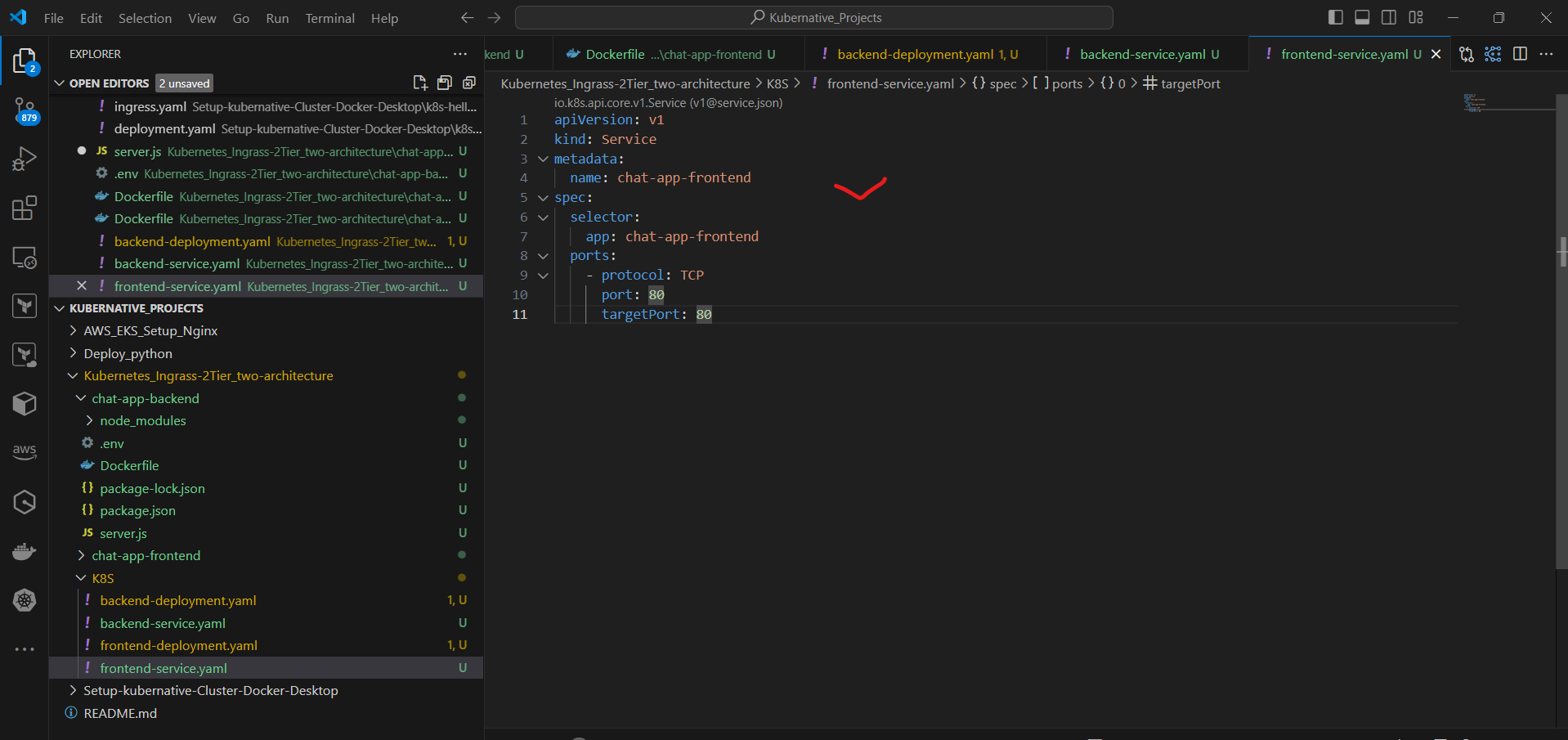

d. Create frontend-service.yaml:

apiVersion: v1
kind: Service
metadata:
  name: chat-app-frontend
spec:
  selector:
    app: chat-app-frontend
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80

This Service:

Is named "chat-app-frontend"

Selects pods with the label app: chat-app-frontend

Exposes port 80 inside the cluster (no type is set, so it defaults to ClusterIP)

Routes traffic to port 80 on the selected pods

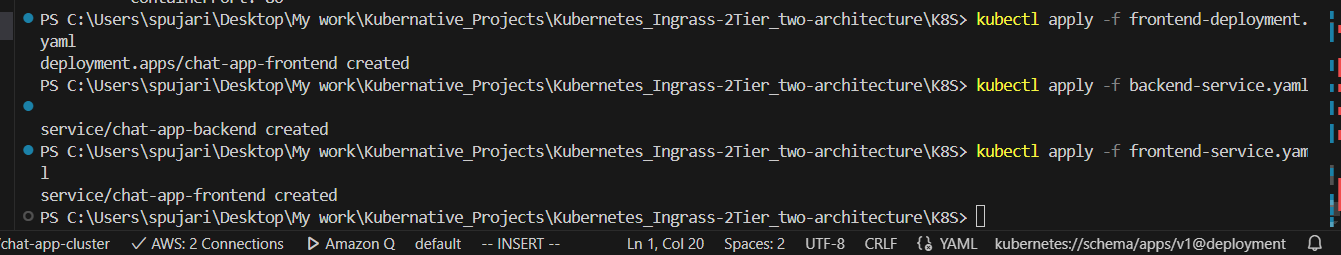

e. Apply the configurations:

kubectl apply -f backend-deployment.yaml

kubectl apply -f frontend-deployment.yaml

kubectl apply -f backend-service.yaml

kubectl apply -f frontend-service.yaml

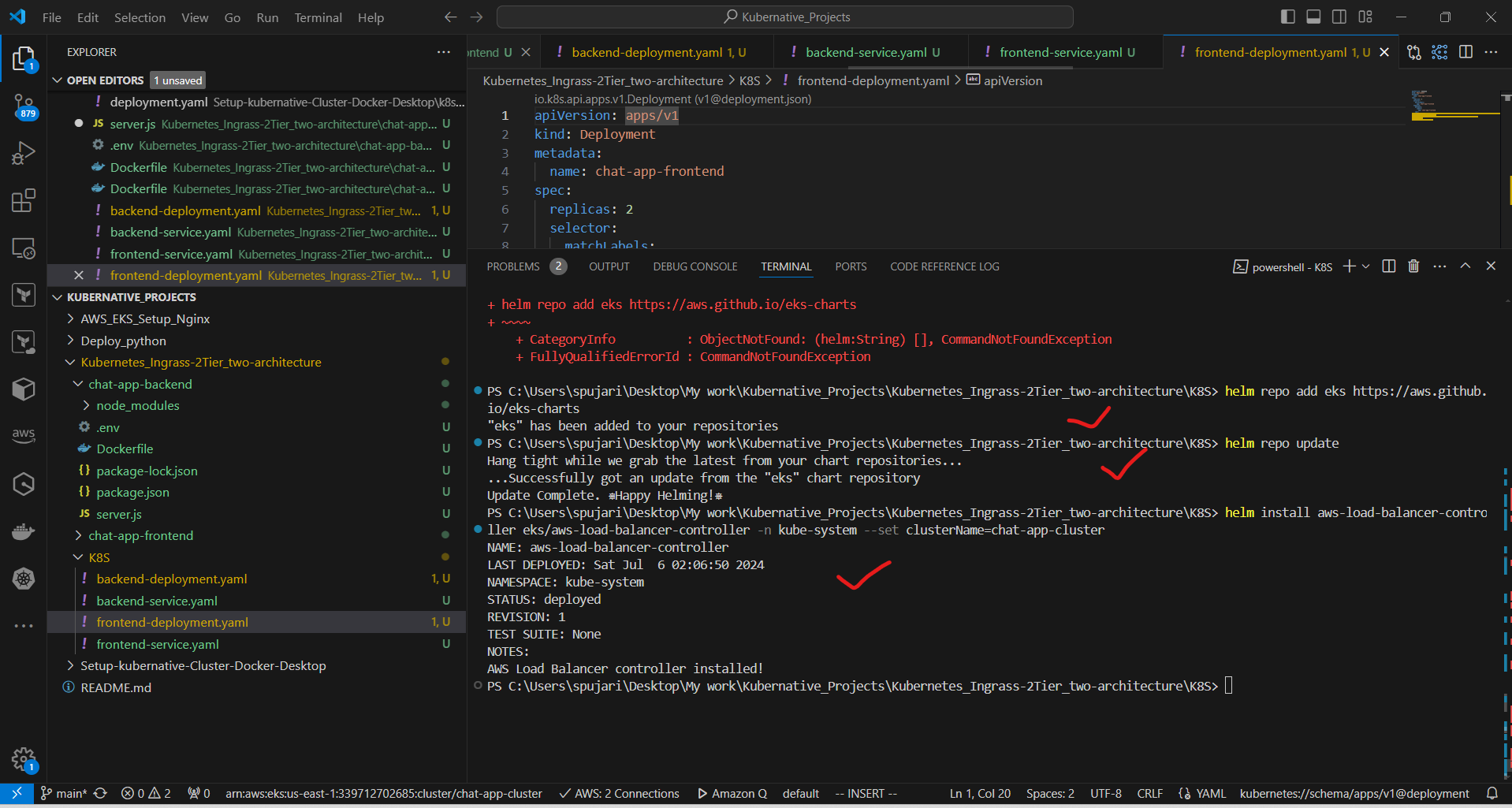

8. Set up Kubernetes Ingress:

a. Install AWS Load Balancer Controller:

kubectl apply -k "github.com/aws/eks-charts/stable/aws-load-balancer-controller//crds?ref=master"

helm repo add eks https://aws.github.io/eks-charts

helm repo update

helm install aws-load-balancer-controller eks/aws-load-balancer-controller -n kube-system --set clusterName=chat-app-cluster

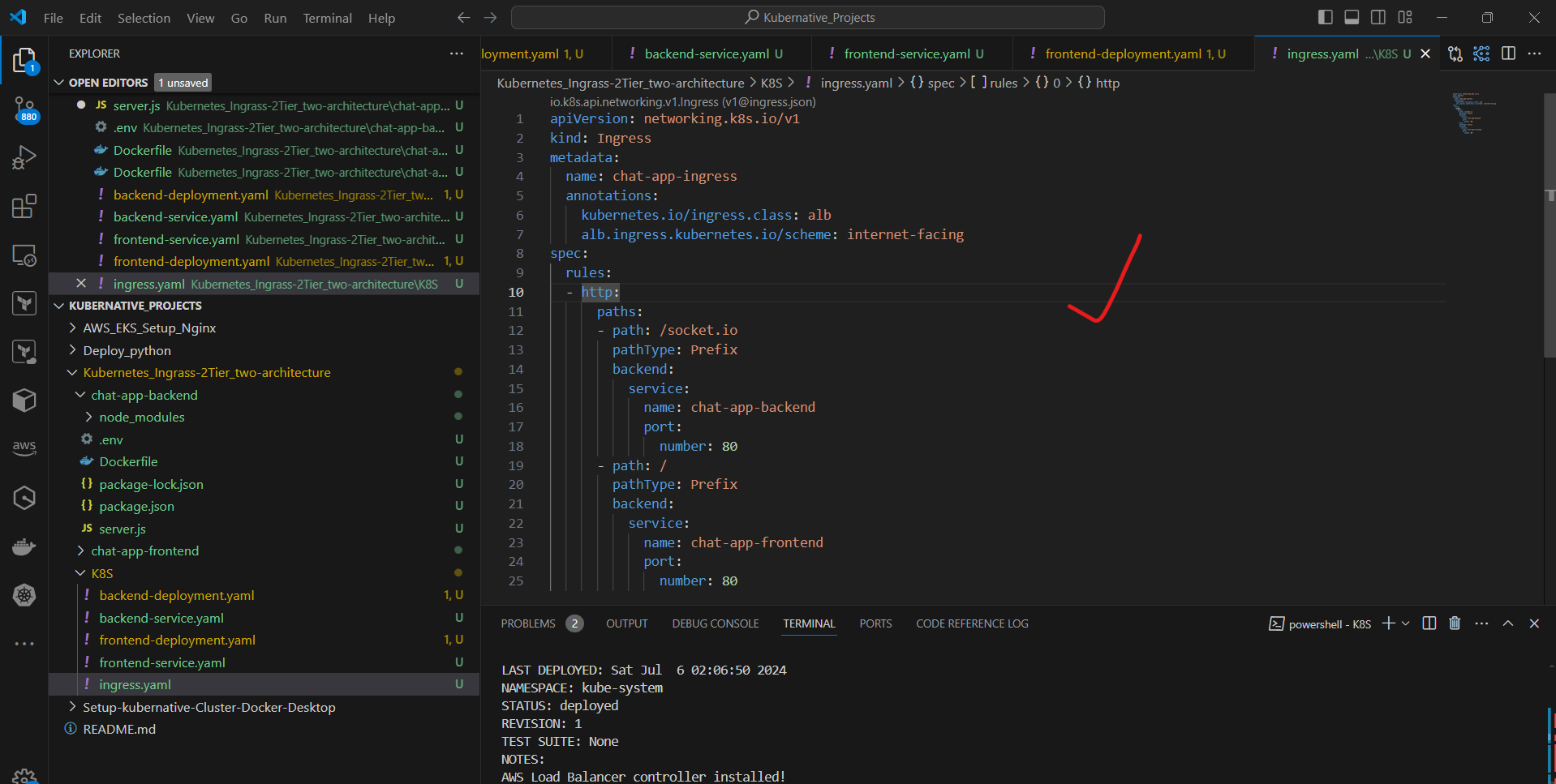

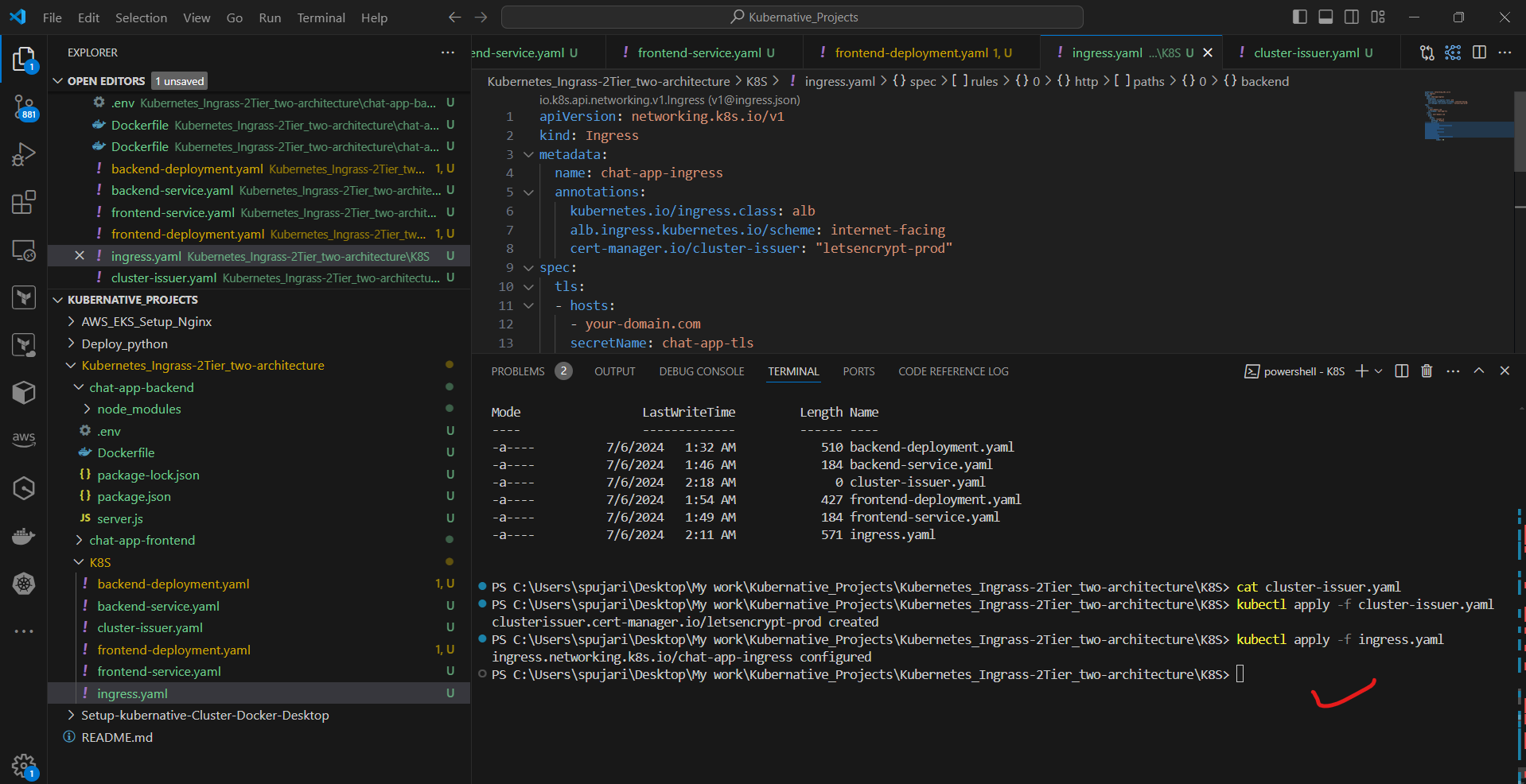

b. Create ingress.yaml:

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: chat-app-ingress
  annotations:
    kubernetes.io/ingress.class: alb
    alb.ingress.kubernetes.io/scheme: internet-facing
    # The Services above are ClusterIP, so register pod IPs as targets
    # (the default "instance" target type needs NodePort Services)
    alb.ingress.kubernetes.io/target-type: ip
spec:
  rules:
    - http:
        paths:
          - path: /socket.io
            pathType: Prefix
            backend:
              service:
                name: chat-app-backend
                port:
                  number: 80
          - path: /
            pathType: Prefix
            backend:
              service:
                name: chat-app-frontend
                port:
                  number: 80

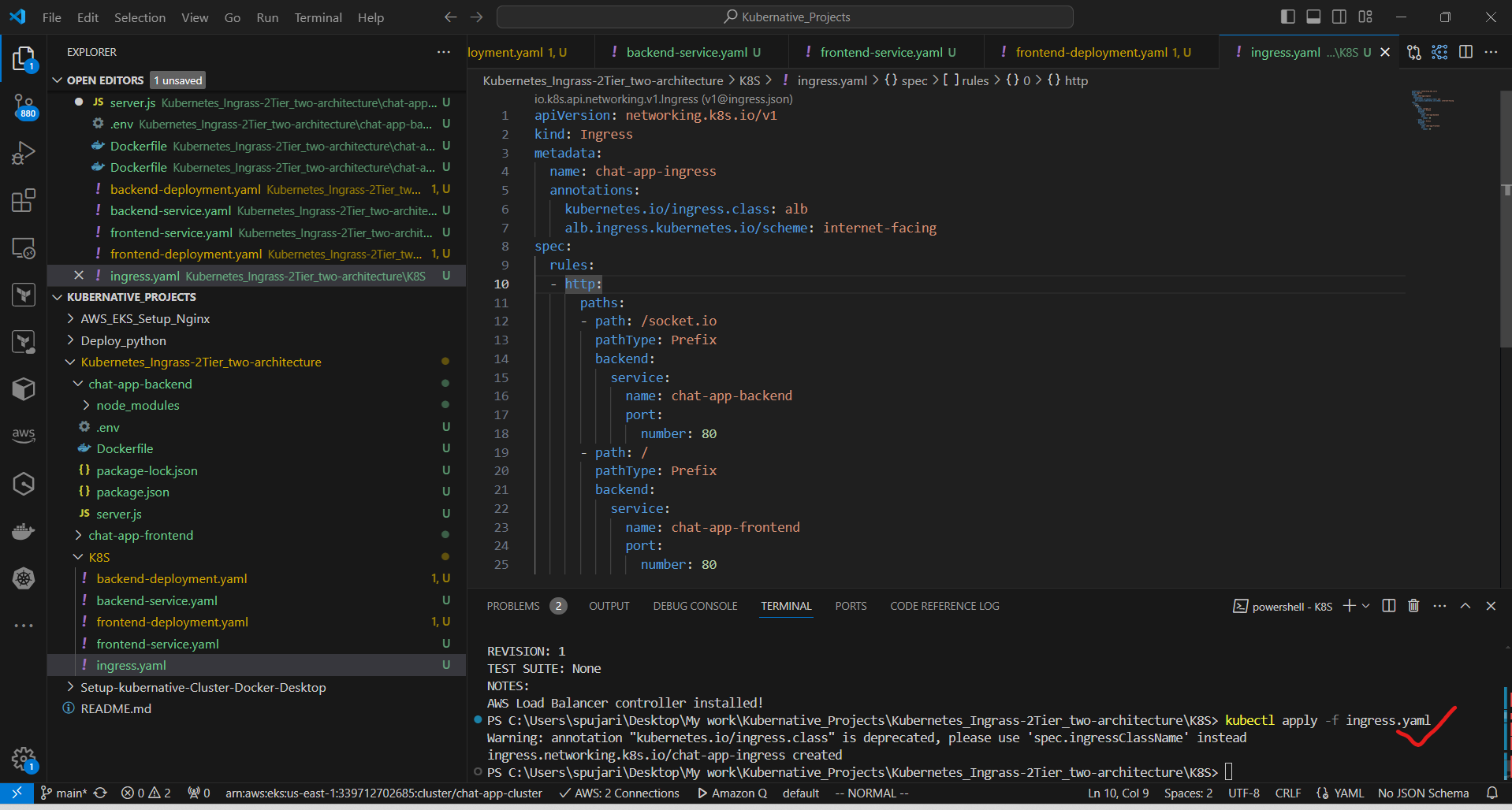

c. Apply the Ingress configuration:

kubectl apply -f ingress.yaml
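To see which Service a request ends up at, it helps to model the two Prefix rules. The sketch below follows the Prefix semantics of the Kubernetes Ingress specification (match on whole path segments, longest match wins); a given controller may evaluate its rules differently, so treat it as an approximation. matchesPrefix and routeRequest are hypothetical names.

```javascript
// Prefix matching on whole path segments: /socket.io matches /socket.io and
// /socket.io/..., but not /socket.iox.
function matchesPrefix(rulePath, requestPath) {
  if (rulePath === '/') return true; // "/" matches every path
  const rule = rulePath.replace(/\/+$/, '');
  return requestPath === rule || requestPath.startsWith(rule + '/');
}

// Pick the Service for a request; the longest matching rule path wins.
function routeRequest(rules, requestUrl) {
  const path = requestUrl.split('?')[0]; // the query string plays no part in matching
  const matches = rules
    .filter((rule) => matchesPrefix(rule.path, path))
    .sort((a, b) => b.path.length - a.path.length);
  return matches.length > 0 ? matches[0].service : null;
}

const rules = [
  { path: '/socket.io', service: 'chat-app-backend' },
  { path: '/', service: 'chat-app-frontend' },
];

console.log(routeRequest(rules, '/socket.io/?EIO=4&transport=polling')); // chat-app-backend
console.log(routeRequest(rules, '/static/js/main.js'));                  // chat-app-frontend
console.log(routeRequest(rules, '/socket.iox'));                         // chat-app-frontend
```

This is why the Socket.IO client can connect to the same host that serves the React app: only requests under /socket.io reach the backend Service.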

9. Configure DNS and SSL:

a. Install cert-manager:

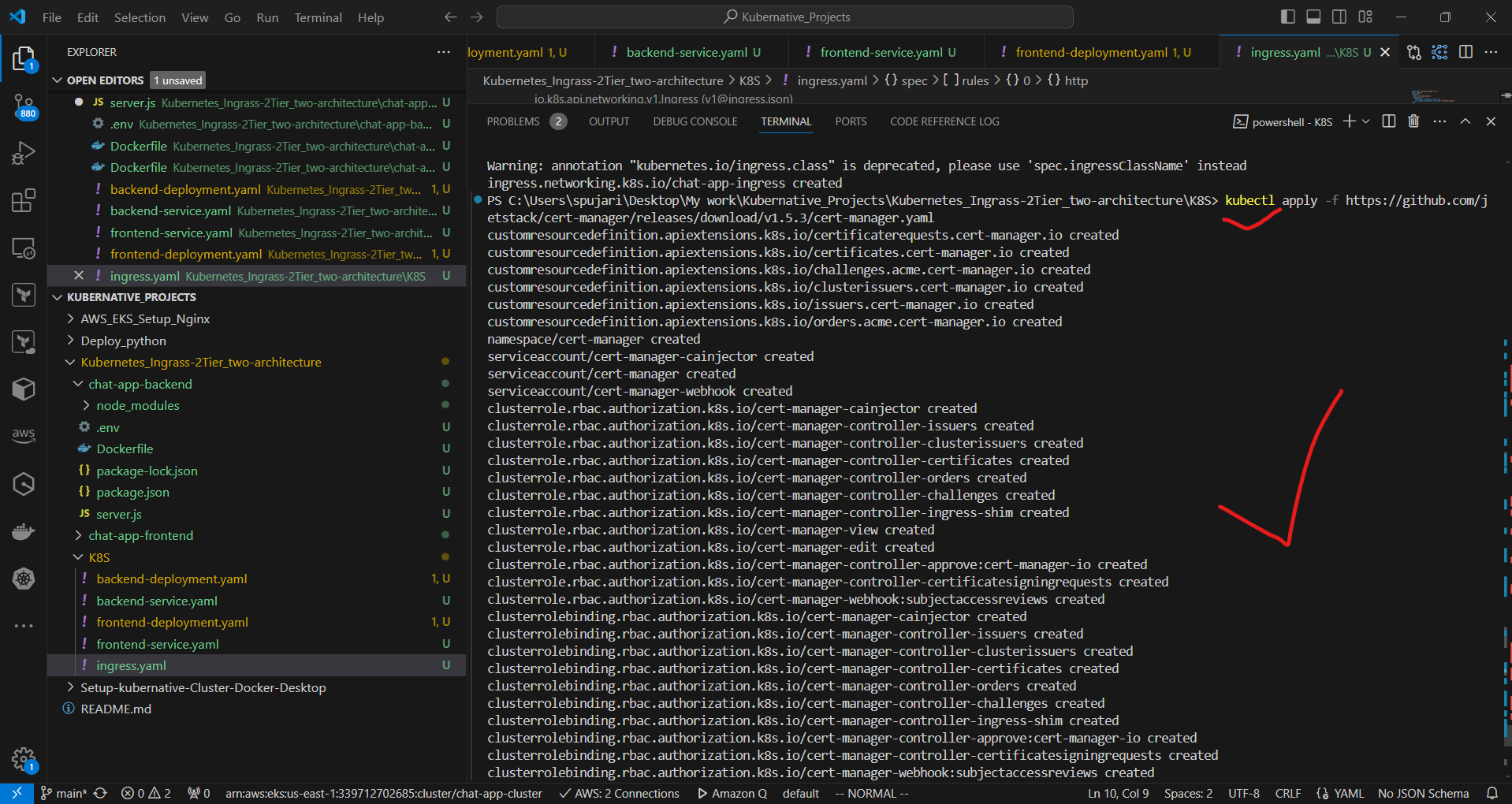

kubectl apply -f https://github.com/jetstack/cert-manager/releases/download/v1.5.3/cert-manager.yaml

This command uses kubectl to apply a Kubernetes manifest file. Here's a breakdown of what it does:

kubectl apply: This command is used to apply configurations to a Kubernetes cluster.

-f: This flag specifies that the following argument is a file or URL containing the configuration to apply.

The URL points to a YAML file hosted on GitHub, specifically version 1.5.3 of cert-manager's manifest.

Cert-manager is a popular Kubernetes add-on that automates the management and issuance of TLS certificates from various issuing sources.

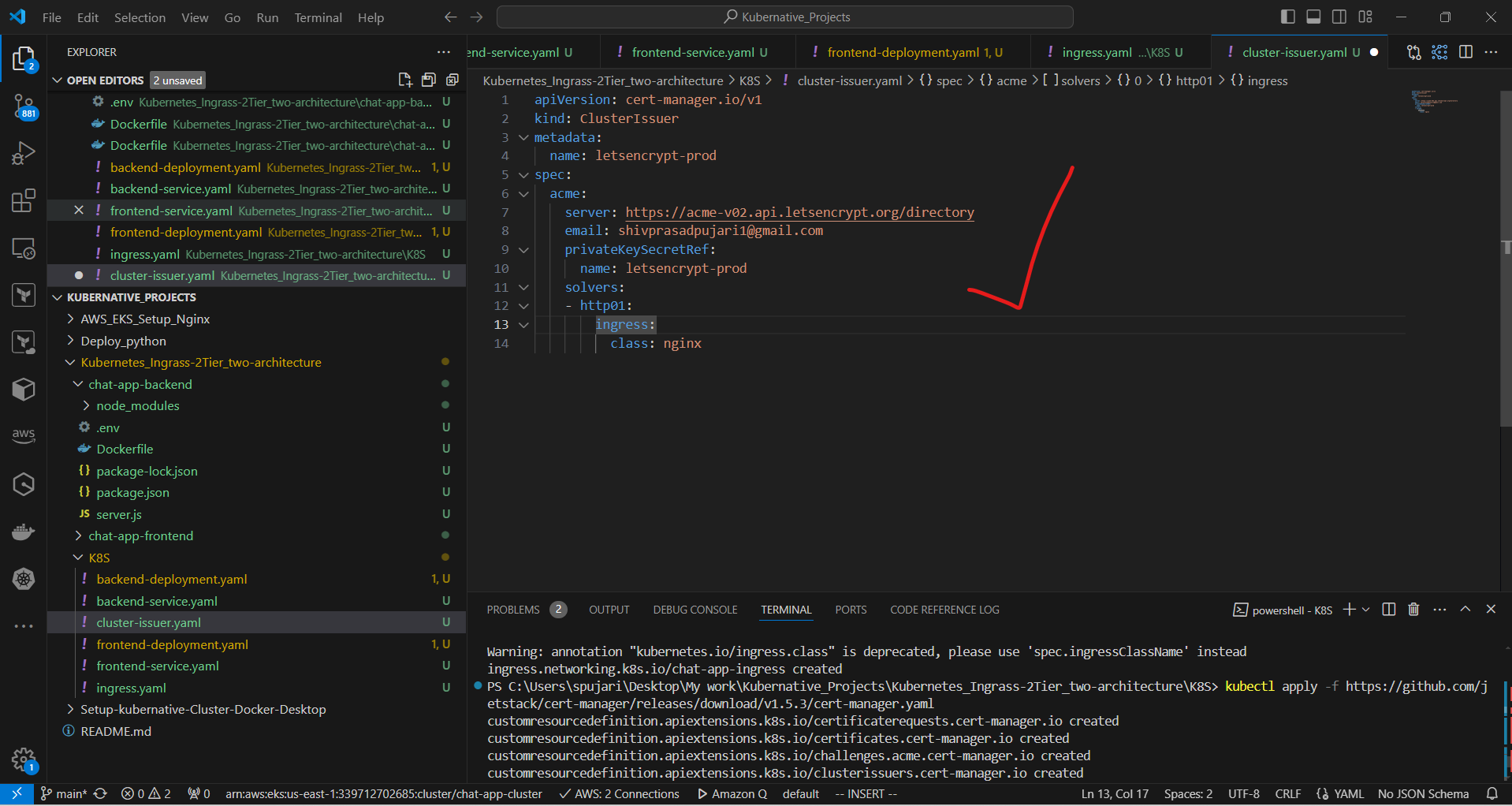

b. Create a ClusterIssuer for Let's Encrypt: Create cluster-issuer.yaml:

apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-prod
spec:
  acme:
    server: https://acme-v02.api.letsencrypt.org/directory
    email: your-email@example.com
    privateKeySecretRef:
      name: letsencrypt-prod
    solvers:
      - http01:
          ingress:
            class: nginx

kind: ClusterIssuer creates a ClusterIssuer resource, which cert-manager uses to issue certificates.

metadata.name names the ClusterIssuer "letsencrypt-prod".

spec.acme.server configures the ACME protocol to use Let's Encrypt's production server. Replace "your-email@example.com" with your actual email address.

privateKeySecretRef specifies the Secret where cert-manager stores the ACME account's private key.

solvers configures the HTTP-01 challenge solver, which uses an NGINX ingress to answer ACME challenges. This assumes an NGINX ingress controller is running in the cluster, whereas the Ingress in this walkthrough uses the alb class.

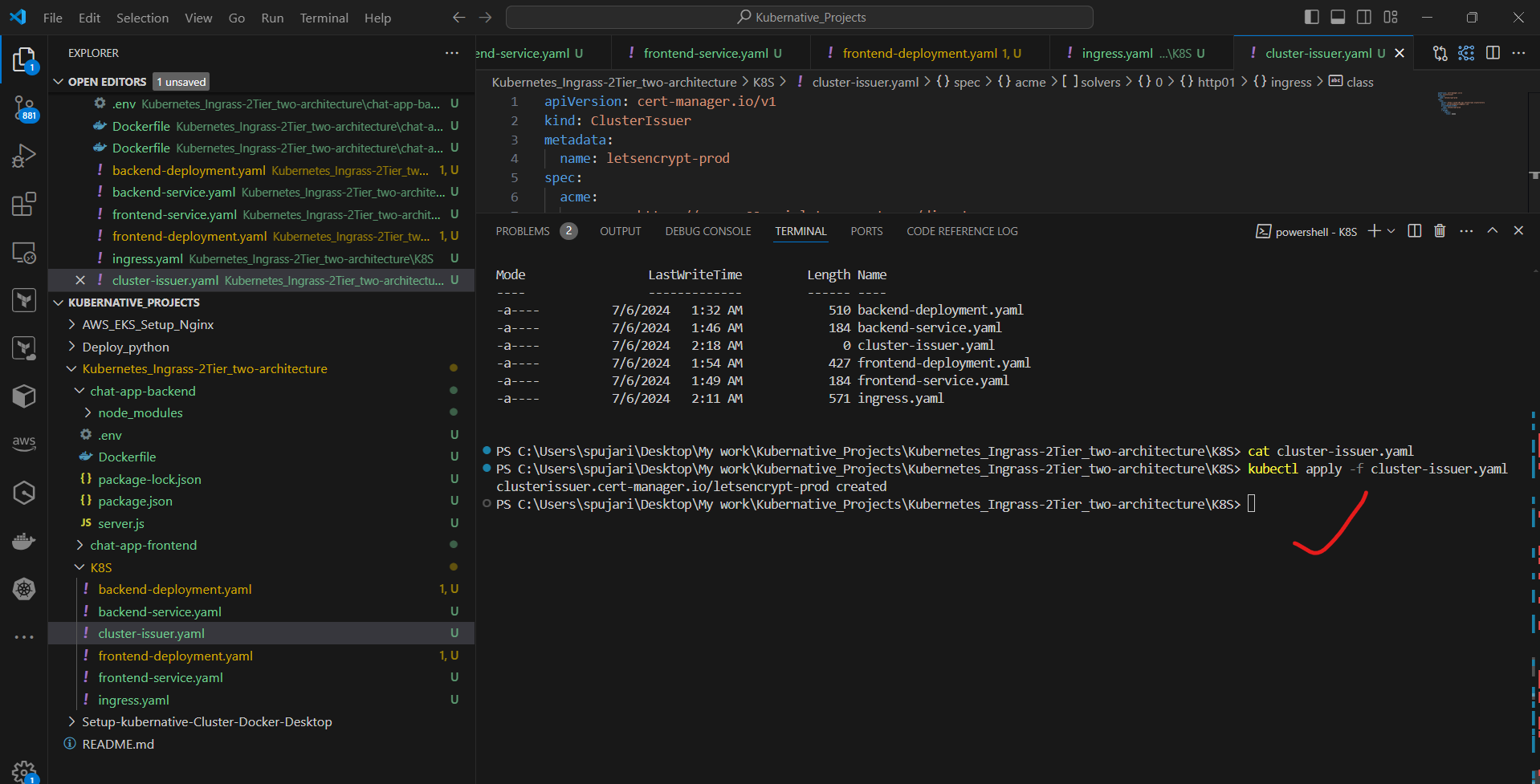

c. Apply the ClusterIssuer:

kubectl apply -f cluster-issuer.yaml

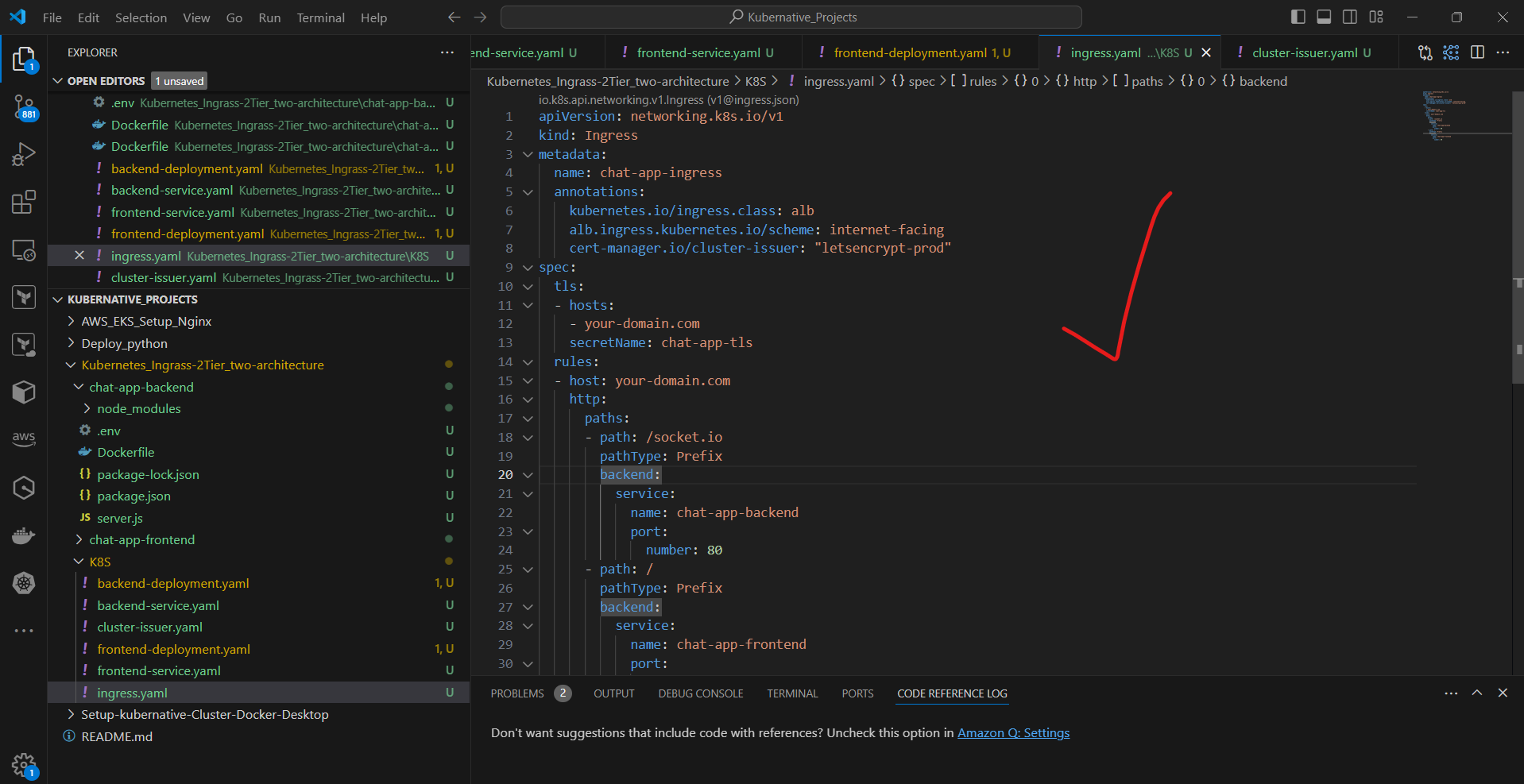

d. Update ingress.yaml to use TLS:

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: chat-app-ingress
  annotations:
    kubernetes.io/ingress.class: alb
    alb.ingress.kubernetes.io/scheme: internet-facing
    # The Services above are ClusterIP, so register pod IPs as targets
    # (the default "instance" target type needs NodePort Services)
    alb.ingress.kubernetes.io/target-type: ip
    cert-manager.io/cluster-issuer: "letsencrypt-prod"
spec:
  tls:
    - hosts:
        - your-domain.com
      secretName: chat-app-tls
  rules:
    - host: your-domain.com
      http:
        paths:
          - path: /socket.io
            pathType: Prefix
            backend:
              service:
                name: chat-app-backend
                port:
                  number: 80
          - path: /
            pathType: Prefix
            backend:
              service:
                name: chat-app-frontend
                port:
                  number: 80

e. Apply the updated Ingress:

kubectl apply -f ingress.yaml

Alternative to Route 53 setup:

Instead of using Route 53, you can directly use the Application Load Balancer (ALB) DNS name provided by AWS:

a. After applying your Ingress configuration, retrieve the ALB DNS name:

kubectl get ingress chat-app-ingress -o jsonpath='{.status.loadBalancer.ingress[0].hostname}'

b. Use this DNS name to access your application directly, e.g., http://your-alb-dns-name

c. For SSL, you can use a service like Cloudflare as a reverse proxy:

Add your domain to Cloudflare

Create a CNAME record pointing to your ALB DNS name

Enable Cloudflare's SSL/TLS encryption

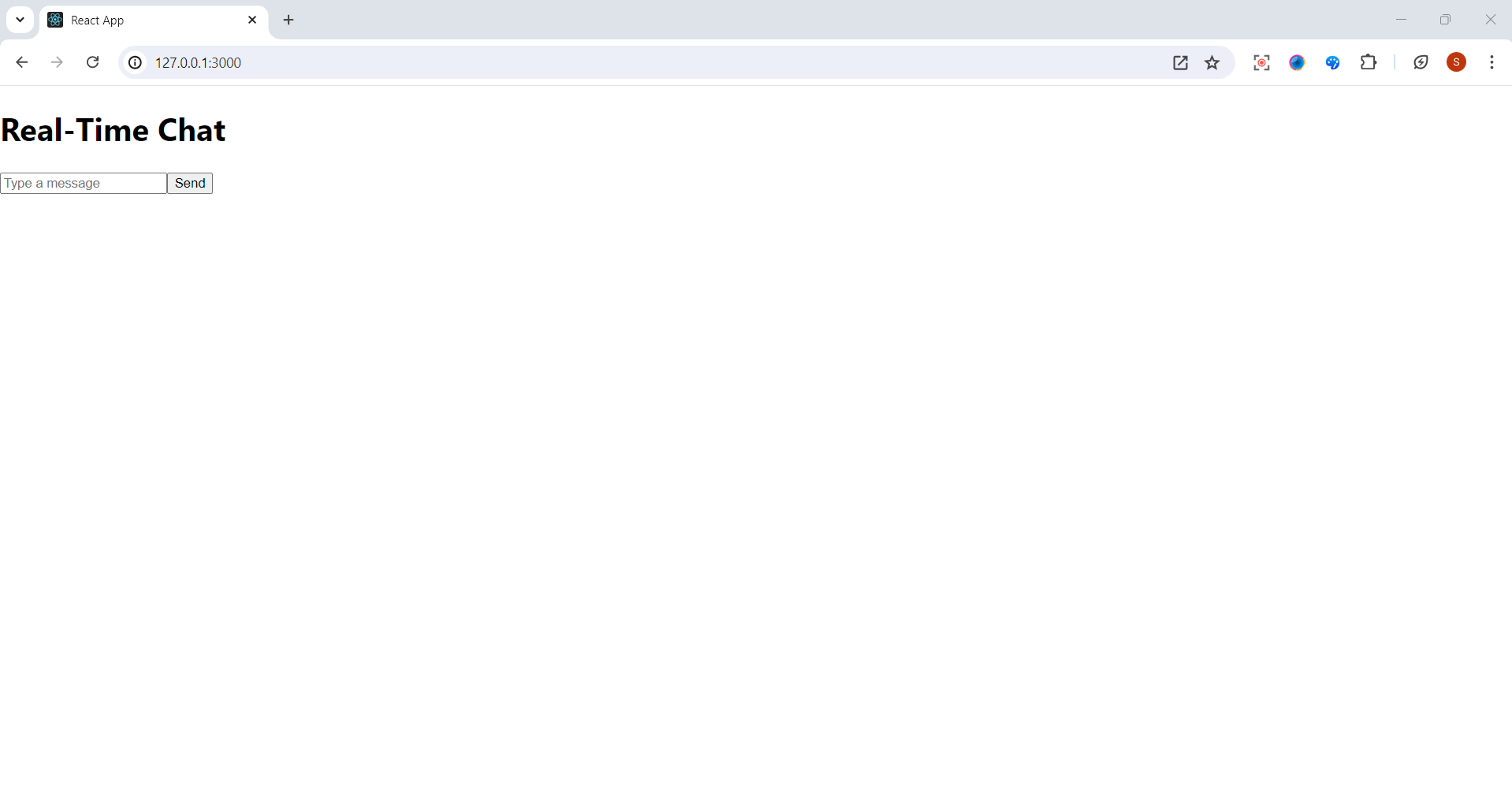

10. Alternative testing approach:

a. Use port-forwarding to test locally:

# For backend

kubectl port-forward service/chat-app-backend 3001:80

# For frontend

kubectl port-forward service/chat-app-frontend 3000:80

b. Access the application at http://localhost:3000 in your browser

c. Use tools like Postman or curl to test the backend API endpoints

d. For multi-device testing, use a tool like ngrok to expose your local port to the internet temporarily

In the next phase, we will look at the continuous deployment setup:

Instead of GitHub Actions, you can use AWS CodePipeline:

a. Create an AWS CodeCommit repository for your project

b. Set up AWS CodeBuild with buildspec.yml to build and push Docker images to ECR

c. Use AWS CodeDeploy with appspec.yml to deploy to EKS:

Create a CodeDeploy application and deployment group

Define deployment configuration to update EKS deployments

d. Create an AWS CodePipeline that ties these stages together:

Source stage: CodeCommit

Build stage: CodeBuild

Deploy stage: CodeDeploy

This approach keeps everything within the AWS ecosystem and can be more tightly integrated with your EKS cluster.

This project shows a two-tier application running behind Kubernetes Ingress on AWS. It demonstrates real-time functionality with WebSockets, containerization, Kubernetes deployment, and secure HTTPS access through a custom domain. The separation of frontend and backend allows for independent scaling and maintenance of each tier.

Thank you for joining me on this journey through the world of cloud computing! Your interest and support mean a lot to me, and I'm excited to continue exploring this fascinating field together. Let's stay connected and keep learning and growing as we navigate the ever-evolving landscape of technology.

LinkedIn Profile: https://www.linkedin.com/in/prasad-g-743239154/

Feel free to reach out to me directly at spujari.devops@gmail.com. I'm always open to hearing your thoughts and suggestions, as they help me improve and better cater to your needs. Let's keep moving forward and upward!

If you found this blog post helpful, please consider showing your support by giving it a round of applause👏👏👏. Your engagement not only boosts the visibility of the content, but it also lets other DevOps and Cloud Engineers know that it might be useful to them too. Thank you for your support! 😀

Thank you for reading and happy deploying! 🚀

Best Regards,

Sprasad
