Day 13/40 Days of K8s: Static Pods, Manual Scheduling, Labels, and Selectors in Kubernetes

Gopi Vivek Manne

❓🚀 Pod Scheduling in Kubernetes

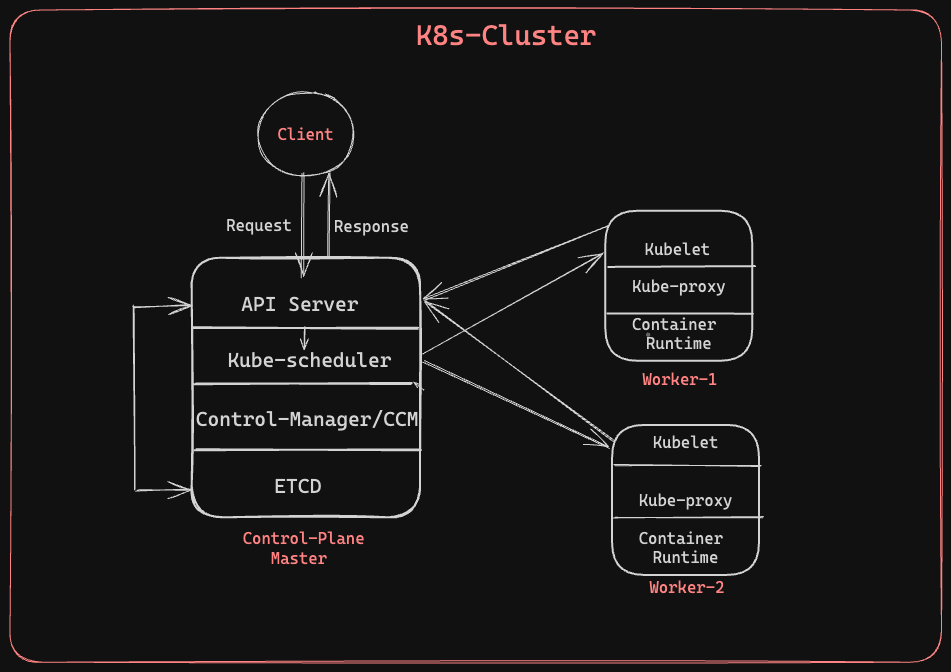

In a multi-node Kubernetes (K8s) cluster, pod scheduling involves several steps and components. When a user executes an imperative command to create a pod, the following process takes place:

API Server Interaction: The command interacts with the API server, which acts as the entry point to the cluster.

Scheduler: After authentication and authorization, the API server stores the new pod, which has no node assigned yet. The scheduler, a control plane component on the master node, watches the API server for such pods and decides which node each one should run on, based on factors like node resource availability, node affinity rules, and taints and tolerations.

Kubelet: The scheduler records its decision through the API server by binding the pod to the chosen node. The kubelet, an agent on each worker node, sees the pod assigned to its node, starts the containers, and reports the status back to the API server, which in turn serves it to the client.
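The flow above can be observed from the command line. This is a minimal sketch, assuming kubectl is already configured for the cluster; pod_node and pod_events are hypothetical helper names, not kubectl features.

```shell
# Hypothetical helper: print the node a pod is bound to.
# The output is empty while the pod is still waiting for the scheduler.
pod_node() {
  kubectl get pod "$1" -o jsonpath='{.spec.nodeName}'
}

# Hypothetical helper: list the events recorded for a pod,
# which include the scheduler's decision and the kubelet's actions.
pod_events() {
  kubectl get events --field-selector "involvedObject.name=$1"
}
```

For example, pod_node redis prints the chosen node once a pod named redis has been scheduled.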

The scheduler must be running continuously, typically as a pod. But...

1. What if the scheduler pod goes down? 🤔

2. Who manages the scheduler pod? 🤔

3. Where is the configuration for control plane pods like the scheduler located? 🤔

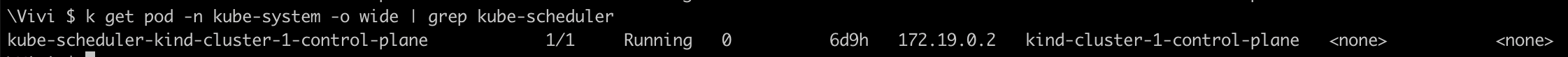

In a production-grade Kubernetes cluster, such as one built with kops, control plane components typically run as pods on the master VM (with a managed service such as EKS, the provider operates the control plane for you). In our case, however, we have set up a kind cluster with one master and two worker nodes, and kind runs each node as a container. So the scheduler runs inside the control plane node's container.

🚀 Control Plane Components as Static Pods

In kubeadm-based clusters, including kind, control plane components like the scheduler, API server, and controller manager (CM) run as static pods. The kubelet is responsible for managing these static pods and ensuring they are running.

On a full-blown K8s cluster, we would SSH into the master VM to inspect these pods and their manifests.

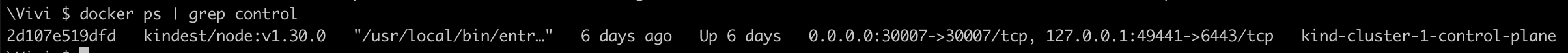

For a kind cluster, we exec into the node containers instead:

- Look for the running containers
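Since each kind node is a Docker container, this step can be sketched as follows. The container name kind-cluster-1-control-plane is an assumption based on kind's naming convention and the worker node name used in this post; the function names are hypothetical.

```shell
# Assumed container name; override CONTROL_PLANE if your cluster is named differently.
CONTROL_PLANE="${CONTROL_PLANE:-kind-cluster-1-control-plane}"

# List the names of the running containers (the kind nodes show up here).
list_node_containers() {
  docker ps --format '{{.Names}}'
}

# Open an interactive shell inside the control plane node container.
enter_control_plane() {
  docker exec -it "$CONTROL_PLANE" bash
}
```

Calling list_node_containers first confirms the actual container names before enter_control_plane is used.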

All the control plane pod manifests are stored at /etc/kubernetes/manifests/. The kubelet monitors this directory and keeps a control plane pod running for every manifest it finds there.

Let's remove kube-scheduler.yaml from this directory now and verify whether the scheduler is still running. If it is not, the rest of the control plane remains operational, but new pods will not be scheduled.
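A minimal sketch of this step, meant to be run inside the control plane node. MANIFEST_DIR defaults to the manifests directory named in this post; BACKUP_DIR and the function names are hypothetical choices for illustration.

```shell
# Directory the kubelet watches for static pod manifests.
MANIFEST_DIR="${MANIFEST_DIR:-/etc/kubernetes/manifests}"
# Hypothetical location to park the manifest while the scheduler is stopped.
BACKUP_DIR="${BACKUP_DIR:-/tmp}"

# Show which static pod manifests are currently present.
list_static_pod_manifests() {
  ls "$MANIFEST_DIR"
}

# Move the scheduler manifest out; the kubelet then stops the scheduler pod.
stop_scheduler() {
  mv "$MANIFEST_DIR/kube-scheduler.yaml" "$BACKUP_DIR/"
}
```

Moving the file rather than deleting it keeps the original manifest available for restoring later.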

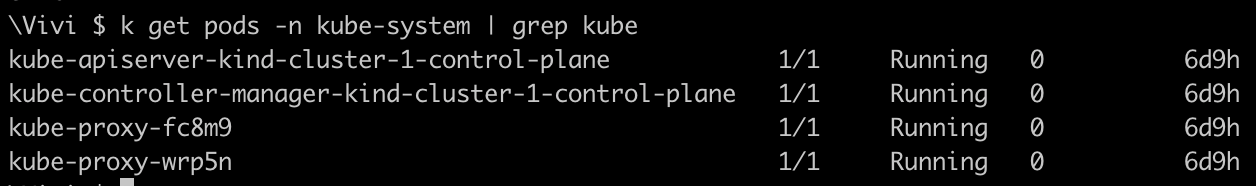

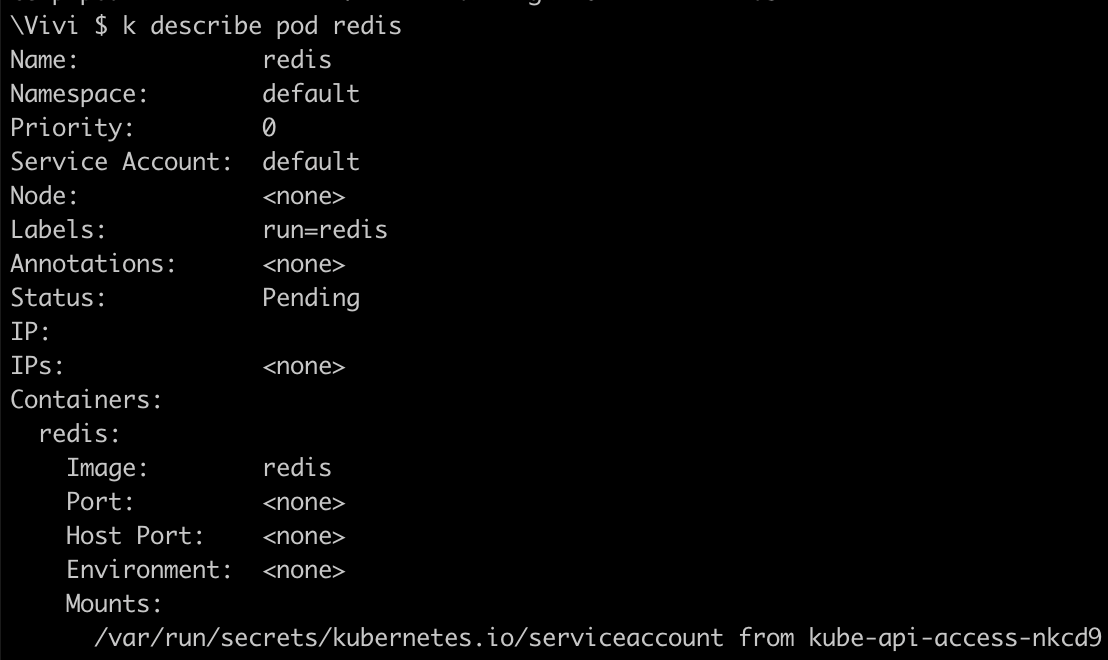

As you can see above, the kube-scheduler pod is not running. Now, let's create a redis pod and check whether it gets scheduled onto any node. With the scheduler down, we expect it to stay in the Pending state.
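A sketch of that check, assuming kubectl points at the kind cluster; create_and_check_redis is a hypothetical helper name.

```shell
# Create the redis pod, then print its phase.
# With the scheduler stopped, the phase is expected to stay "Pending".
create_and_check_redis() {
  kubectl run redis --image=redis
  kubectl get pod redis -o jsonpath='{.status.phase}'
}
```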

Now, move the YAML back to /etc/kubernetes/manifests/. The kubelet finds the manifest again and restarts the scheduler pod, so scheduling resumes.
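Restoring the manifest could look like the sketch below, run inside the control plane node. BACKUP_DIR (wherever the manifest was moved to) and the function names are hypothetical; the component=kube-scheduler label is an assumption based on the manifest that kubeadm generates.

```shell
# Directory the kubelet watches for static pod manifests.
MANIFEST_DIR="${MANIFEST_DIR:-/etc/kubernetes/manifests}"
# Hypothetical location the manifest was moved to earlier.
BACKUP_DIR="${BACKUP_DIR:-/tmp}"

# Move the manifest back; the kubelet notices it and starts the scheduler pod again.
start_scheduler() {
  mv "$BACKUP_DIR/kube-scheduler.yaml" "$MANIFEST_DIR/"
}

# Check the scheduler pod from a machine with kubectl access.
scheduler_status() {
  kubectl get pods -n kube-system -l component=kube-scheduler
}
```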

Manually schedule the pod: By specifying

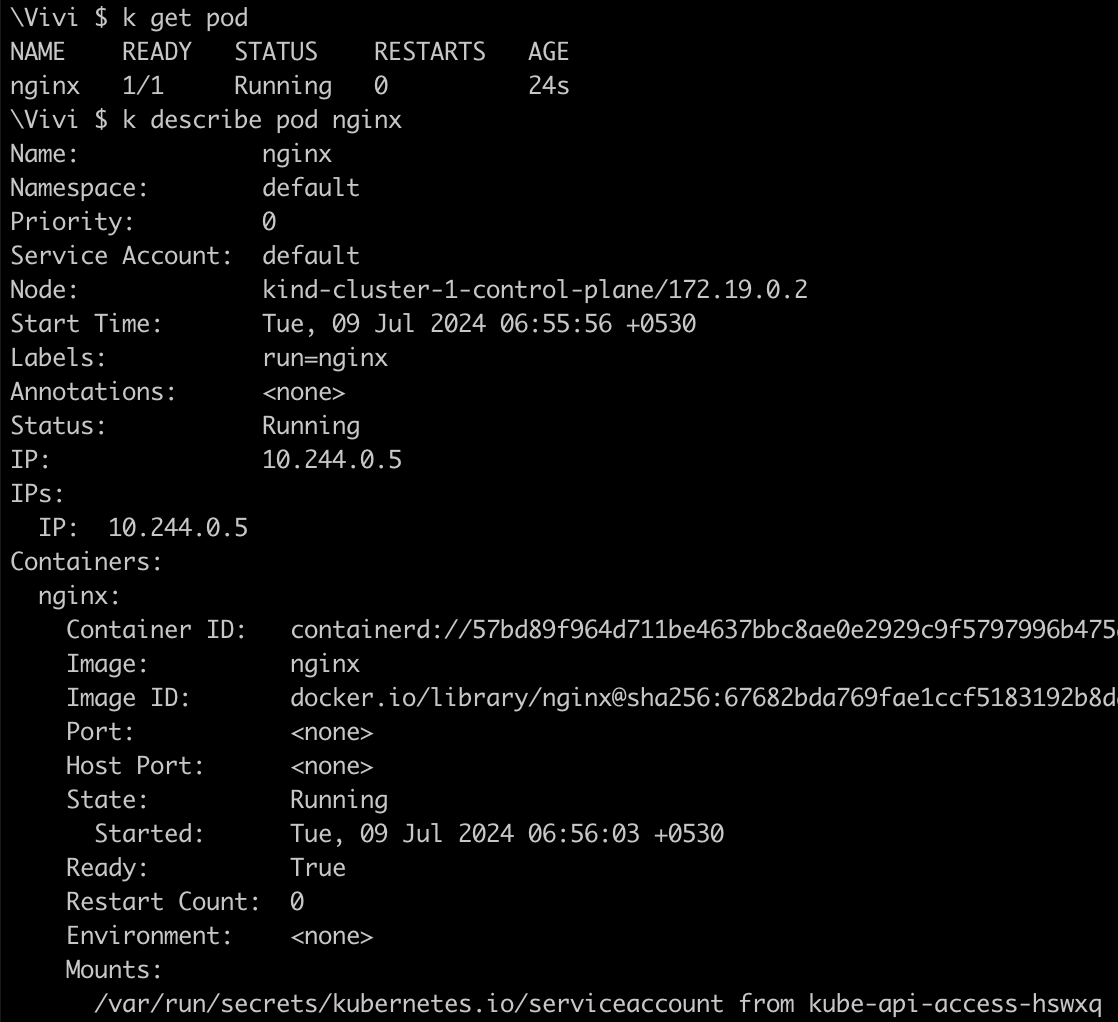

nodeNamein the pod's YAML, ensuring it runs on the specific node if resources are available. The scheduler will skip this pod and continue with others.🌟 TASK: Create a pod and try to schedule it manually without the scheduler.

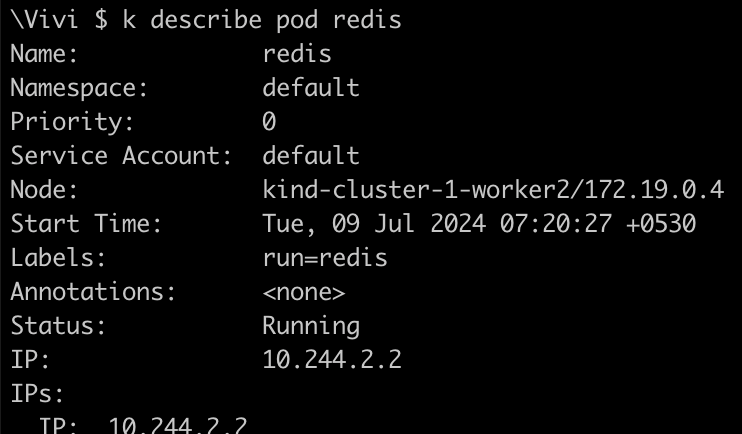

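Generate the manifest without creating the pod:

    kubectl run redis --image=redis --dry-run=client -o yaml > redis.yaml

Edit redis.yaml to add nodeName under spec:

    apiVersion: v1
    kind: Pod
    metadata:
      labels:
        run: redis
      name: redis
    spec:
      containers:
      - image: redis
        name: redis
      nodeName: kind-cluster-1-worker2

Then create the pod from the file (delete any earlier redis pod first, since nodeName cannot be changed on an existing pod):

    kubectl apply -f redis.yaml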

Now, the redis pod is scheduled on node kind-cluster-1-worker2.

Annotations: They store additional metadata details for objects, making it easier for controllers to track and roll back previous changes.
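As one illustration, the kubernetes.io/change-cause annotation is what kubectl rollout history shows in its CHANGE-CAUSE column. A sketch with hypothetical helper names, taking the deployment name as an argument:

```shell
# Record why a deployment was changed using the change-cause annotation.
annotate_change() {
  kubectl annotate deployment "$1" "kubernetes.io/change-cause=$2" --overwrite
}

# Show the revision history, including the CHANGE-CAUSE column.
show_history() {
  kubectl rollout history "deployment/$1"
}
```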

Labels and Selectors

Labels: Labels are key-value pairs in the metadata of an object, such as a pod or a deployment, and help in filtering Kubernetes objects.

Selectors: Selectors match the labels of pods so that those pods can be managed as part of a deployment.

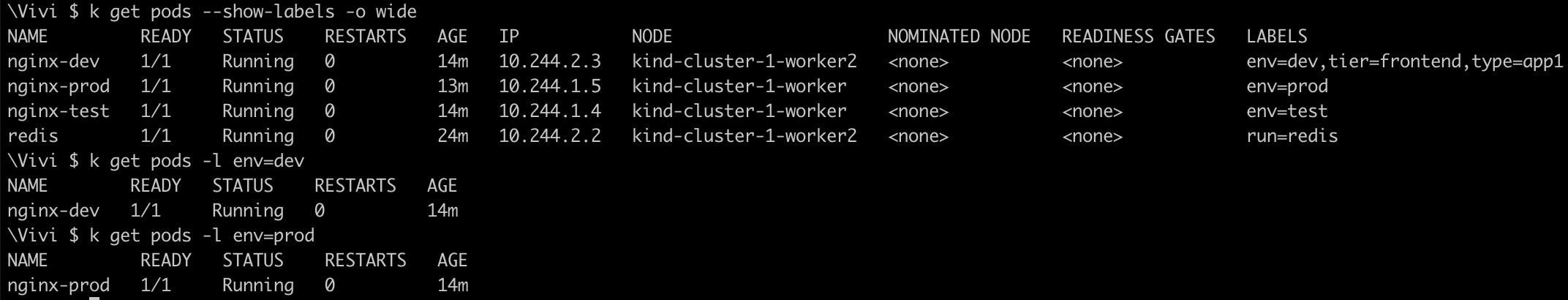

🌟 TASK: Create 3 pods named nginx-dev, nginx-prod and nginx-test based on the nginx image, and use the labels env:dev, env:prod and env:test for these pods respectively.

Using imperative commands, create 3 nginx pods with different labels:

    kubectl run nginx-test --image=nginx --labels=env=test --port=80
    kubectl run nginx-dev --image=nginx --labels=env=dev --port=80
    kubectl run nginx-prod --image=nginx --labels=env=prod --port=80

The same labels can be used in a Deployment, whose selector must match the labels of its pod template:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-dev
      labels:
        env: dev
    spec:
      replicas: 2
      selector:
        matchLabels:
          env: dev
          tier: frontend
      template:
        metadata:
          labels:
            env: dev
            tier: frontend
        spec:
          containers:
          - name: nginx-dev
            image: nginx
            ports:
            - containerPort: 80
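Once the pods exist, selectors can be tried from the command line. A sketch with hypothetical helper names, assuming kubectl is configured for the cluster:

```shell
# List all pods together with their labels.
show_pod_labels() {
  kubectl get pods --show-labels
}

# List only the pods matching a label selector, e.g. "env=dev"
# (equality-based) or "env in (dev,test)" (set-based).
pods_matching() {
  kubectl get pods --selector "$1"
}
```

For example, pods_matching env=dev should list nginx-dev but not nginx-test or nginx-prod.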

Labels vs Namespace

Namespace: Provides logical isolation for a group of resources or objects, enabling access control, resource quotas, separation of environments, and avoiding naming conflicts between teams.

Labels: Used to tag resources for filtering. Selectors use these labels to find and manage pods with the specified labels.
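The two mechanisms combine: a namespace scopes the query and a label filters within it. A sketch with hypothetical helper names; the namespace and label values are whatever the caller passes in.

```shell
# Create a namespace.
make_namespace() {
  kubectl create namespace "$1"
}

# List pods in one namespace that also carry a given label.
pods_in_namespace() {
  kubectl get pods --namespace "$1" --selector "$2"
}
```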

#Kubernetes #StaticPods #ManualScheduling #Labels #Selectors #40DaysofKubernetes #CKASeries

