Day 14/40 Days of K8s: Taints and Tolerations in Kubernetes !! ☸️

Gopi Vivek Manne

🌟 Key Concepts

Taint 🚫: Like a fence on a node: "access prohibited, strict permission needed".

Toleration 🎫: Permission slips for Pods to bypass taints.

Scenario:

Let's say we have 3 nodes, and on node1 specifically we want only pods of type gpu=true to be scheduled, because that is where the AI workloads will run. Any other application pod will not be scheduled on the tainted node; the scheduler will proceed with the next available nodes.

We are giving the node the ability to accept only pods that carry a toleration matching its taint.

Important Points ❗

Taints: Node level

Tolerations: Pod level
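To make the node-level vs pod-level split concrete, here is a minimal sketch of where each one lives in the object YAML, assuming the gpu=true:NoSchedule taint from the scenario. The Node part is only an excerpt of what `kubectl get node <name> -o yaml` returns (nodes are registered by the kubelet, not applied by hand), and the pod name `gpu-workload` and its image are hypothetical.

```yaml
# Node side (excerpt for reading, not for kubectl apply):
# a taint lives under spec.taints of the Node object
apiVersion: v1
kind: Node
metadata:
  name: kind-cluster-1-worker2
spec:
  taints:
  - key: gpu
    value: "true"
    effect: NoSchedule
---
# Pod side: a toleration lives under spec.tolerations of the Pod
apiVersion: v1
kind: Pod
metadata:
  name: gpu-workload        # hypothetical pod name
spec:
  containers:
  - name: app
    image: nginx            # placeholder image
  tolerations:
  - key: gpu                # must match the taint's key
    operator: Equal         # ...and its value
    value: "true"
    effect: NoSchedule      # ...and its effect
```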

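Every taint has an effect, which determines what happens to pods that don't tolerate it: NoSchedule, PreferNoSchedule or NoExecute. A toleration only matches a taint if its effect matches as well (an empty effect in the toleration matches all effects for that key). So how does each effect work?

Taint Effects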

NoSchedule 🚫:

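- Applies to new pods: the scheduler checks the pod's tolerations and only places it on the node if it tolerates the taint. Pods already running on the node are not evicted.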

PreferNoSchedule 🤔:

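- A "soft" NoSchedule: the scheduler tries to avoid placing non-tolerating pods on the node, but doesn't guarantee it (for example, if no other node fits, the pod can still land there).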

NoExecute:

- Applies to both new and existing pods: the taint is checked at scheduling time and while pods are running.

- New pods without a matching toleration are not scheduled, and running pods without one are evicted from the node.
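A sketch of how tolerations are written for each effect. The pod name, and the idea of tainting the same `gpu` key with PreferNoSchedule or NoExecute, are hypothetical; the fields (`key`, `operator`, `value`, `effect`, `tolerationSeconds`) are the standard toleration fields. `operator: Exists` matches any value for the key, and `tolerationSeconds` only applies to NoExecute: it lets a running pod stay that long after a matching taint is added, and then it is evicted.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: toleration-examples   # hypothetical pod name
spec:
  containers:
  - name: app
    image: nginx              # placeholder image
  tolerations:
  # Equal: key, value and effect must all match a taint like gpu=true:NoSchedule
  - key: gpu
    operator: Equal
    value: "true"
    effect: NoSchedule
  # Exists: matches a gpu taint with effect PreferNoSchedule, whatever its value
  - key: gpu
    operator: Exists
    effect: PreferNoSchedule
  # NoExecute: if gpu=true:NoExecute is added while the pod runs,
  # it may stay for 3600 seconds and is then evicted
  - key: gpu
    operator: Equal
    value: "true"
    effect: NoExecute
    tolerationSeconds: 3600
```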

Practical Example:

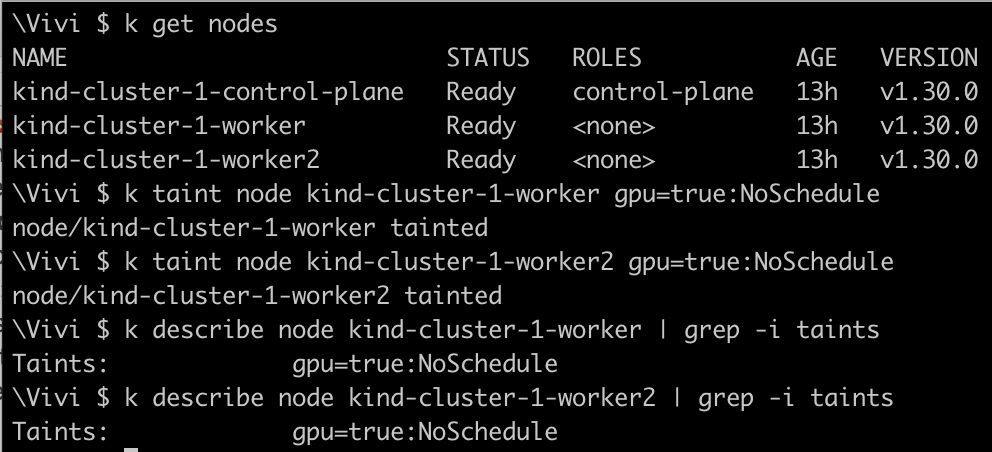

Lets taint both

kind-cluster-1-worker,kind-cluster-2-worker2nodes ,withgpu=true

Create

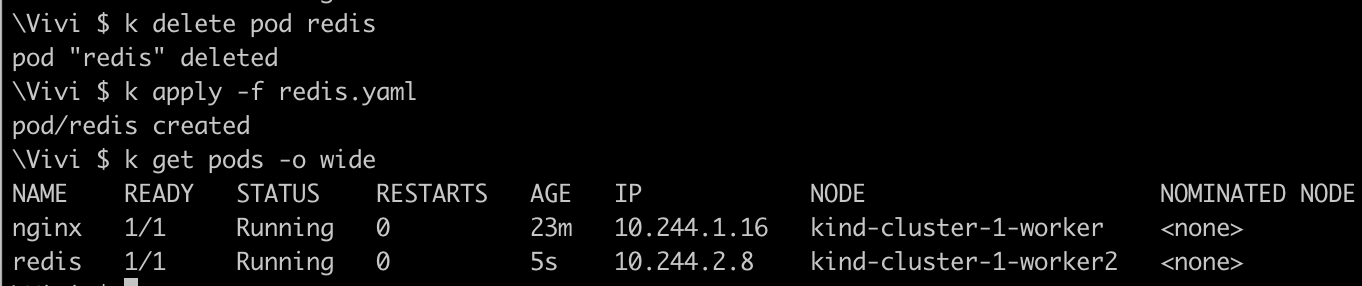

nginx,redispod and see why it's not getting scheduled on worker nodes and control plane nodes.kubectl run redis --image=redis --dry-run=client -o yaml > redis.yaml kubectl run nginx --image=nginx --dry-run=client -o yaml > nginx.yaml kubectl apply -f nginx.yaml pod/nginx created kubectl apply -f redis.yaml pod/redis created kubectl get pods -o wide

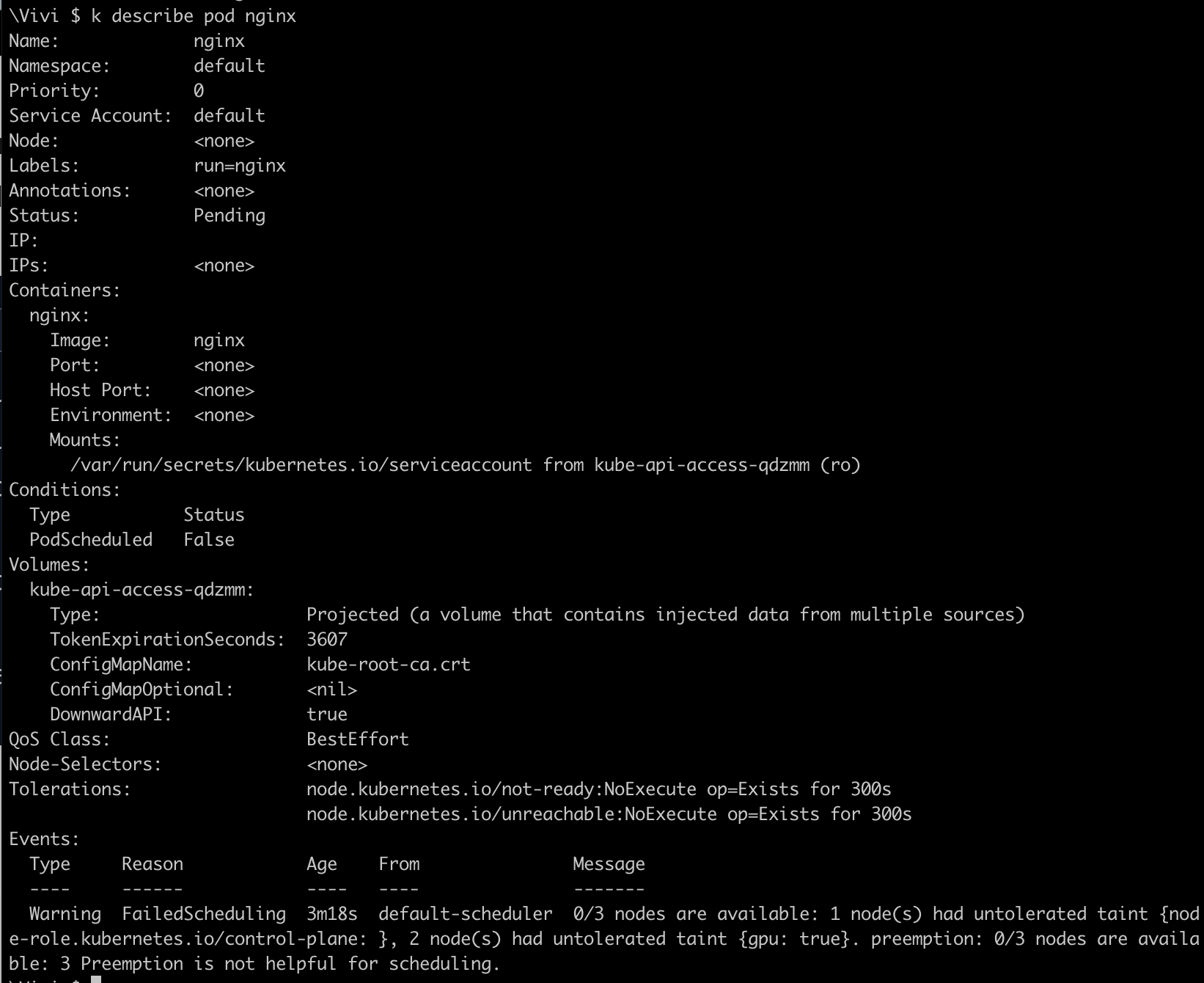

As you can see in the above picture, Pod FailedScheduling on nodes with 1 node was tainted for control-plane processes to run, other 2 worker nodes has got taint on them with

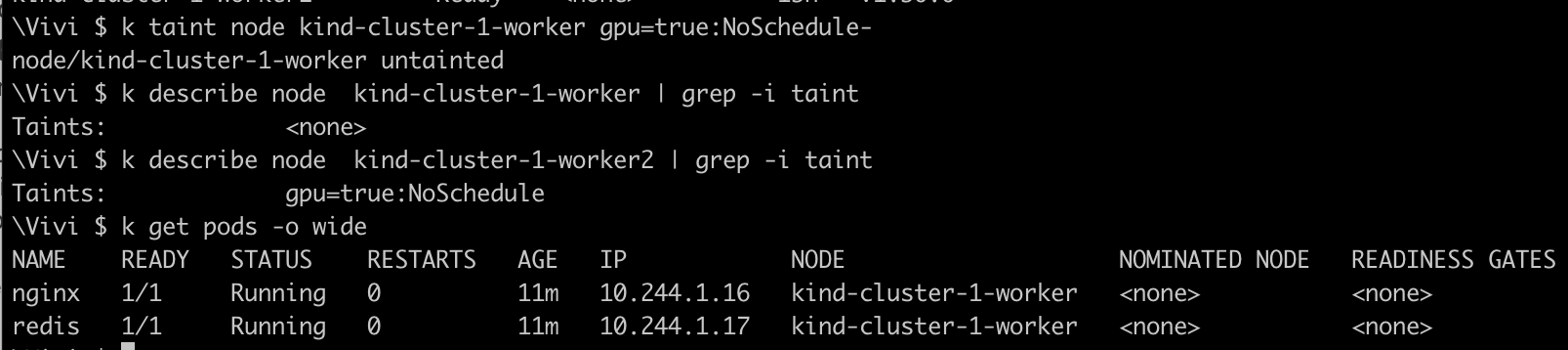

gpu:true.Let's Remove the taint from

kind-cluster-1-workerTo remove the taint , you add - at the end of the taint command , like below.

Nginx: Schedules on kind-cluster-1-worker (no taint, no toleration needed)

Redis: Schedules on kind-cluster-1-worker (no taint, no toleration needed)

NOTE: This means the pod which has got toleration added will only be scheduled on the node which is tainted. We are telling node to accept the particular type of pod

Redis: Schedules on

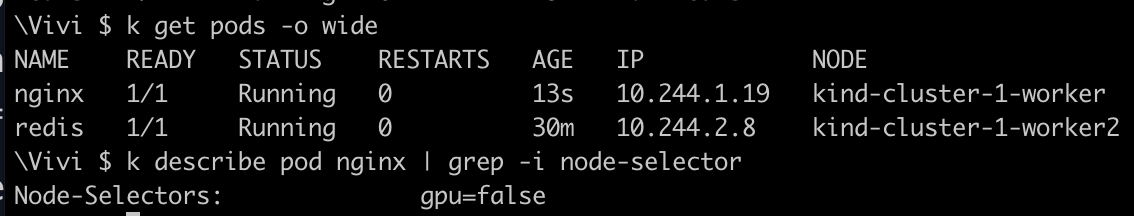

kind-cluster-1-worker2(tainted node, pod has toleration)Let's add toleration of

gpu=trueto redis pod, redeploy the podapiVersion: v1 kind: Pod metadata: labels: run: redis name: redis spec: containers: - image: redis name: redis tolerations: - key: "gpu" operator: "Equal" value: "true" effect: "NoSchedule"

💡 Key Takeaway

Pods with tolerations can be scheduled on tainted nodes, but a toleration doesn't guarantee that they will be.

A taint only restricts which pods can be deployed on the node; it doesn't pull the tolerating pods onto it, so they may still land on any other node that accepts them.

To extend this capability, instead of the node deciding which pods it allows, we give the pod the privilege to choose which node it is deployed on, using NodeSelector and node labels.

✅ Node Selection Strategies

NodeSelector

Gives pods the privilege to choose their node

Example:

Add the label gpu=false to the kind-cluster-1-worker node:

```shell
kubectl label nodes kind-cluster-1-worker gpu=false
```

Add nodeSelector: gpu: "false" to the redis pod and redeploy it:

```yaml
apiVersion: v1
kind: Pod
metadata:
  labels:
    run: redis
  name: redis
spec:
  containers:
  - image: redis
    name: redis
  tolerations:
  - key: "gpu"
    operator: "Equal"
    value: "true"
    effect: "NoSchedule"
  nodeSelector:
    gpu: "false"
```

✅ As you can see, the redis pod is deployed on the kind-cluster-1-worker node by using NodeSelector labels.

Comparison

Taints/Tolerations: Node-centric approach; the node decides which types of pods it accepts.

NodeSelector: Pod-centric approach; the pod decides which node it goes to.
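The two approaches are often combined to get truly dedicated nodes: the taint keeps other pods off the GPU nodes, and the nodeSelector keeps the GPU pods on them. A minimal sketch, assuming the GPU nodes are both tainted with gpu=true:NoSchedule and labeled gpu=true; the Deployment name, labels, and image are hypothetical.

```yaml
# Assumes each GPU node is tainted (gpu=true:NoSchedule)
# AND labeled (kubectl label nodes <gpu-node> gpu=true)
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ai-workload          # hypothetical name
spec:
  replicas: 2
  selector:
    matchLabels:
      app: ai-workload
  template:
    metadata:
      labels:
        app: ai-workload
    spec:
      containers:
      - name: app
        image: redis         # placeholder image
      # Toleration: allows these pods onto the tainted GPU nodes
      tolerations:
      - key: "gpu"
        operator: "Equal"
        value: "true"
        effect: "NoSchedule"
      # nodeSelector: restricts these pods to nodes labeled gpu=true
      nodeSelector:
        gpu: "true"
```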

Limitations and Next Steps

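However, NodeSelector has limitations: it only supports exact key=value matches, and every listed label must match (an implicit AND). We can't express conditions like OR, NOT, or "any of these values", for example letting a pod run on nodes that carry one of several labels. These can be handled by Node Affinity and Anti-Affinity, which we will look into in the next lesson!!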
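As a small preview of how node affinity lifts the exact-match limitation, here is a minimal sketch using `operator: In` with several values, which behaves like an OR. The `disktype` label and its values are hypothetical. Multiple `nodeSelectorTerms` are ORed, while the `matchExpressions` inside one term are ANDed.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: redis
spec:
  containers:
  - name: redis
    image: redis
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          # "In" with several values: disktype=ssd OR disktype=nvme (hypothetical label)
          - key: disktype
            operator: In
            values: ["ssd", "nvme"]
```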

💁 Observations

🌟 Question 1: Can I manually schedule a pod on a specific node?

Yes, we can manually set the exact node in the pod's spec.nodeName field. This bypasses the scheduler entirely, so it is generally used for testing or special cases where you need absolute control over pod placement. It is less flexible and not resilient to node failures, as manual intervention is needed to move the pod.
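A minimal sketch of manual placement with `nodeName`, reusing the kind-cluster-1-worker node from the example (the pod itself is hypothetical). Because the scheduler is bypassed, NoSchedule taints on that node are not checked; per the Kubernetes docs, a NoExecute taint would still cause the kubelet to evict the pod unless it tolerates it. If the named node doesn't exist, the pod won't run.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-pinned          # hypothetical pod name
spec:
  # Binds the pod directly to this node; the scheduler is not involved
  nodeName: kind-cluster-1-worker
  containers:
  - name: nginx
    image: nginx
```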

🌟 Question 2: Can Kubernetes decide which pod to place on which node?

Yes, Kubernetes can decide which pod to place on which node, and we can guide it using NodeSelector and labels. By assigning labels to nodes and specifying nodeSelector in pods or deployments, we let K8s handle the scheduling within those constraints.

Additionally, nodes can use taints to control which pods (those with matching tolerations) are allowed to run on them. Delegating placement to the scheduler this way is more flexible and resilient than pinning pods manually: pods can be spread across all eligible nodes, and if a node fails, pods managed by a controller such as a Deployment can be recreated on another eligible node.

#Kubernetes #Taints&Tolerations #NodeSelector #40DaysofKubernetes #CKASeries


Written by

Gopi Vivek Manne


I'm Gopi Vivek Manne, a passionate DevOps Cloud Engineer with a strong focus on AWS cloud migrations. I have expertise in a range of technologies, including AWS, Linux, Jenkins, Bitbucket, GitHub Actions, Terraform, Docker, Kubernetes, Ansible, SonarQube, JUnit, AppScan, Prometheus, Grafana, Zabbix, and container orchestration. I'm constantly learning and exploring new ways to optimize and automate workflows, and I enjoy sharing my experiences and knowledge with others in the tech community. Follow me for insights, tips, and best practices on all things DevOps and cloud engineering!