Understanding Floating Point Numbers

Cloud Tuned

Introduction

Floating point numbers are a fundamental aspect of computing, enabling us to perform calculations with real numbers that have fractional components. Despite their ubiquity, floating point numbers can be a source of confusion due to their inherent imprecision and the unique way they are represented in computer systems. In this article, we'll delve into how floating point numbers work, explore their representation, discuss common pitfalls, and understand their limitations.

What are Floating Point Numbers?

Floating point numbers represent real numbers in a format that supports a very wide range of values. They are particularly useful for representing very large or very small numbers, which would be impractical to handle with fixed-point or integer representations.

Components of Floating Point Numbers

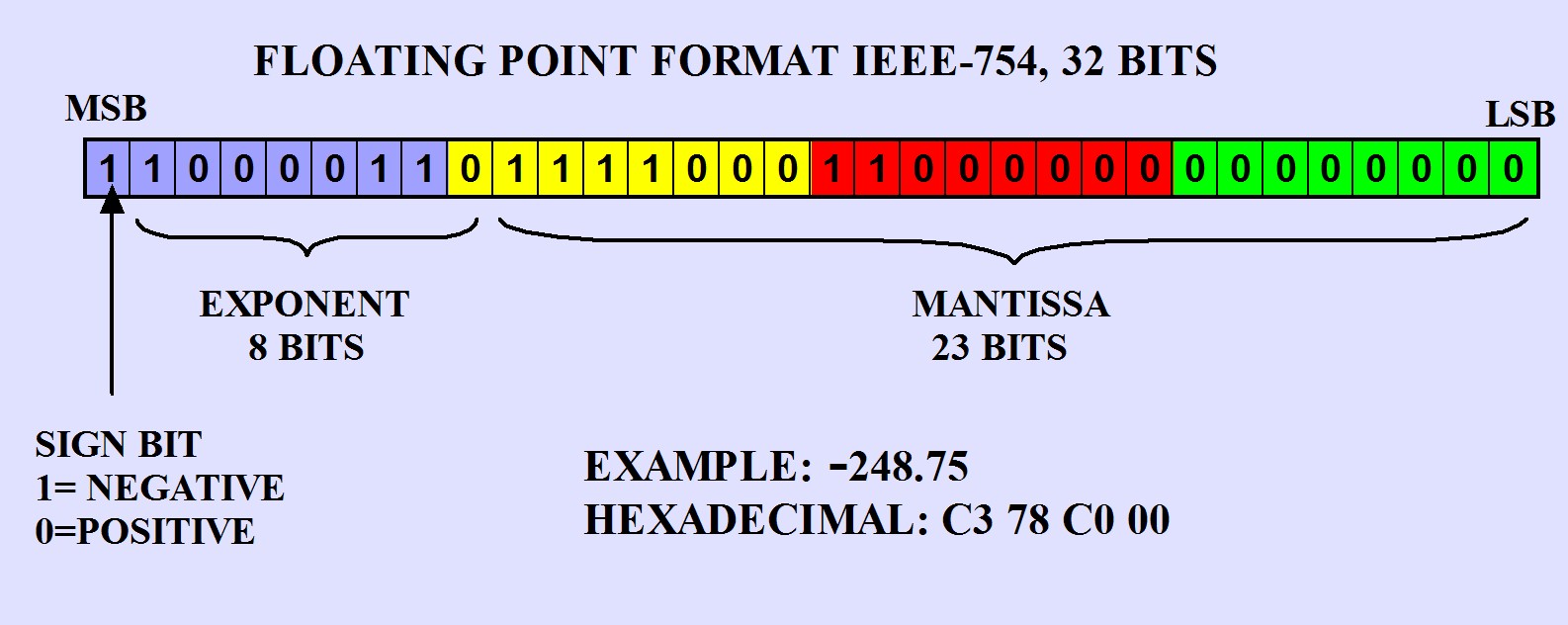

A floating point number is typically composed of three main parts:

Sign Bit: Indicates whether the number is positive or negative.

Exponent: Represents the power of the base (usually 2) to scale the number.

Mantissa (or Significand): Represents the significant digits of the number.

In binary, a floating point number is represented as:

\[ (-1)^{\text{sign}} \times \text{mantissa} \times 2^{\text{exponent}} \]
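Python's built-in `float.hex()` prints a double in exactly this sign, significand, power-of-two shape, so it is a convenient way to check the formula. The short sketch below assumes Python floats are IEEE 754 doubles (true on all common platforms). `value_from_parts` is a hypothetical helper written for illustration that simply evaluates the formula.

```python
import math


def value_from_parts(sign: int, mantissa: float, exponent: int) -> float:
    """Hypothetical helper: evaluate (-1)**sign * mantissa * 2**exponent."""
    return (-1) ** sign * math.ldexp(mantissa, exponent)


# float.hex() shows the significand in hexadecimal and the power of two after 'p'.
print((6.5).hex())     # 0x1.a000000000000p+2  -> 1.625 * 2**2
print((-0.75).hex())   # -0x1.8000000000000p-1 -> -(1.5 * 2**-1)

# Rebuilding the same values from their three components.
print(value_from_parts(0, 1.625, 2))   # 6.5
print(value_from_parts(1, 1.5, -1))    # -0.75
```

Here `0x1.a` is hexadecimal for 1.625 and `0x1.8` is 1.5, so the output reads directly as mantissa times a power of two.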

IEEE 754 Standard

The most widely used standard for floating point computation is IEEE 754. It defines several formats, including single precision (32-bit) and double precision (64-bit).

Single Precision (32-bit):

1 bit for the sign

8 bits for the exponent

23 bits for the mantissa (normalized numbers also have an implicit leading 1, giving 24 bits of precision)

Double Precision (64-bit):

1 bit for the sign

11 bits for the exponent

52 bits for the mantissa (53 bits of precision with the implicit leading 1)
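The field widths above can be checked by reinterpreting a number's bytes as an unsigned integer and masking out each field. This is a minimal sketch using Python's standard `struct` module; `float32_fields` and `float64_fields` are illustrative names, and the exponent they return is the stored (biased) value, not the true power of two.

```python
import struct


def float32_fields(x: float) -> tuple[int, int, int]:
    """Return (sign, biased exponent, fraction) of x rounded to single precision."""
    (bits,) = struct.unpack(">I", struct.pack(">f", x))
    sign = bits >> 31                      # 1 sign bit
    exponent = (bits >> 23) & 0xFF         # 8 exponent bits
    fraction = bits & ((1 << 23) - 1)      # 23 stored mantissa bits
    return sign, exponent, fraction


def float64_fields(x: float) -> tuple[int, int, int]:
    """Return (sign, biased exponent, fraction) of x as a double."""
    (bits,) = struct.unpack(">Q", struct.pack(">d", x))
    sign = bits >> 63                      # 1 sign bit
    exponent = (bits >> 52) & 0x7FF        # 11 exponent bits
    fraction = bits & ((1 << 52) - 1)      # 52 stored mantissa bits
    return sign, exponent, fraction


print(float32_fields(-2.0))   # (1, 128, 0): -1.0 * 2**1, bias 127
print(float64_fields(-2.0))   # (1, 1024, 0): -1.0 * 2**1, bias 1023
```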

How Floating Point Numbers Work

Representation in Memory

When a floating point number is stored in memory, it is divided into the sign, exponent, and mantissa. For example, consider the single precision representation of the number 6.5:

Convert to Binary: 6.5 in binary is 110.1.

Normalize: Move the binary point to get 1.101 and record the exponent. Here, 110.1 becomes 1.101 × 2^2, so the exponent is 2.

Store:

Sign Bit: 0 (positive)

Exponent: 10000001, which is the exponent 2 plus the single precision bias of 127 (2 + 127 = 129)

Mantissa: 10100000000000000000000 (the bits after the binary point; the leading 1 is implicit and not stored)
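The steps above can be followed in code. The sketch below uses a hypothetical helper, `encode_float32_by_hand`, and assumes the input is a normal value that is exactly representable in single precision, so it does not handle rounding, subnormals, infinities, or NaN. It builds the 32-bit pattern by hand and compares it with the bits that Python's `struct` module produces.

```python
import math
import struct


def encode_float32_by_hand(x: float) -> int:
    """Hypothetical helper: encode a normal, exactly representable value as float32 bits."""
    sign = 1 if x < 0 else 0
    m, e = math.frexp(abs(x))             # abs(x) = m * 2**e with 0.5 <= m < 1
    m, e = m * 2, e - 1                   # normalize to 1 <= m < 2, e.g. 6.5 = 1.625 * 2**2
    biased_exponent = e + 127             # add the single precision bias
    fraction = int((m - 1) * (1 << 23))   # the 23 bits after the binary point
    return (sign << 31) | (biased_exponent << 23) | fraction


def struct_float32_bits(x: float) -> int:
    """Reference encoding produced by the struct module."""
    (bits,) = struct.unpack(">I", struct.pack(">f", x))
    return bits


bits = encode_float32_by_hand(6.5)
s = f"{bits:032b}"
print(s[0], s[1:9], s[9:])                 # 0 10000001 10100000000000000000000
print(hex(bits))                           # 0x40d00000
print(bits == struct_float32_bits(6.5))    # True
```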

Precision and Range

Floating point numbers can represent a wide range of values, but with limited precision. The number of exponent bits determines the range, and the number of mantissa bits determines the precision. In single precision, the 23 stored mantissa bits plus the implicit leading 1 give 24 significant bits, which translates to roughly 7 decimal digits of precision. Double precision, with 53 significant bits, provides about 15 to 16 decimal digits.
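One way to see the precision limit is to round a double to single precision and back. The sketch below does this with `struct` (the helper name `to_float32` is illustrative) and reads the double precision parameters from `sys.float_info`. The integer 2**24 + 1 needs 25 significant bits, one more than single precision has, so it cannot survive the round trip.

```python
import struct
import sys


def to_float32(x: float) -> float:
    """Round a Python float (a double) to the nearest single precision value and back."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


print(to_float32(16_777_217.0))   # 16777216.0: 2**24 + 1 needs 25 significant bits
print(to_float32(0.1))            # about 0.10000000149, not 0.1
print(sys.float_info.mant_dig)    # 53 significant bits in a double
print(sys.float_info.dig)         # 15 decimal digits always survive a round trip
```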

Special Values

IEEE 754 also defines special values such as:

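Zero: Represented with all bits in the exponent and mantissa set to zero. Because the sign bit can still be 0 or 1, there is both a +0 and a -0, and the two compare equal.

Infinity: Represented with all bits in the exponent set to one and all bits in the mantissa set to zero. The sign bit distinguishes positive from negative infinity.

NaN (Not a Number): Represented with all bits in the exponent set to one and a nonzero mantissa. Used to represent undefined or unrepresentable results, such as 0/0 or infinity minus infinity.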
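The bit patterns of these special values can be printed directly. This sketch reuses the `struct` approach with an illustrative helper, `float32_pattern`. Note one Python-specific detail: Python raises `ZeroDivisionError` for `0.0 / 0.0` instead of returning NaN, so the NaN here is produced by `inf - inf`. The sign bit of a generated NaN depends on the platform, so only its exponent and mantissa fields are meaningful.

```python
import math
import struct


def float32_pattern(x: float) -> str:
    """Show the float32 bits of x as 'sign exponent mantissa'."""
    (bits,) = struct.unpack(">I", struct.pack(">f", x))
    s = f"{bits:032b}"
    return f"{s[0]} {s[1:9]} {s[9:]}"


# Python raises ZeroDivisionError for 0.0 / 0.0, so build a NaN from inf - inf instead.
nan = math.inf - math.inf

for label, x in [("+0", 0.0), ("-0", -0.0), ("+inf", math.inf),
                 ("-inf", -math.inf), ("NaN", nan)]:
    print(f"{label:>5}: {float32_pattern(x)}")

print(0.0 == -0.0)        # True: the two zeros compare equal
print(nan == nan)         # False: NaN is unequal to everything, itself included
print(math.isnan(nan))    # True: the reliable way to test for NaN
```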

Common Pitfalls and Limitations

Precision Errors

Due to limited precision, certain numbers cannot be represented exactly. For example, the decimal number 0.1 cannot be represented precisely in binary, leading to small errors in calculations. This imprecision can accumulate in iterative processes, leading to significant errors.
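The classic demonstration takes a few lines of Python. `Decimal(0.1)` reveals the exact value of the double closest to 0.1, and repeated addition shows the error accumulating. The last two lines use two standard library tools, `math.isclose` for tolerant comparison and `math.fsum` for accurate summation, as one possible mitigation rather than a general cure.

```python
import math
from decimal import Decimal

# Decimal(0.1) shows the exact value of the double that the literal 0.1 is rounded to.
print(Decimal(0.1))       # 0.1000000000000000055511151231257827021181583404541015625
print(0.1 + 0.2 == 0.3)   # False

# Adding 0.1 ten times lets the tiny representation error accumulate.
total = 0.0
for _ in range(10):
    total += 0.1
print(total)                       # 0.9999999999999999
print(math.isclose(total, 1.0))    # True: compare with a tolerance, not ==
print(math.fsum([0.1] * 10))       # 1.0: fsum tracks the lost low-order bits
```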

Rounding Errors

Rounding is necessary when the exact result of a computation has more significant bits than the format can store. IEEE 754 defines several rounding modes: round to nearest (ties to even, the default), round toward zero, round toward positive infinity, and round toward negative infinity. Each rounding step can introduce a small error that may affect the outcome of later calculations.
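Python does not expose the processor's binary rounding mode, but two things can still be shown. First, converting integers just above 2**53 to float demonstrates round to nearest, ties to even, on real doubles. Second, the standard `decimal` module offers the same four modes; this part is a base-10 analog, not the binary floating point rounding mode itself. `round_to_integer` and `MODES` are illustrative names.

```python
from decimal import Decimal, ROUND_CEILING, ROUND_DOWN, ROUND_FLOOR, ROUND_HALF_EVEN

# Binary doubles: int -> float conversion rounds to nearest, ties to even.
BIG = 2 ** 53                     # above 2**53, consecutive doubles are 2 apart
print(float(BIG + 1) == BIG)      # True: halfway, rounds down to the even neighbour
print(float(BIG + 3) == BIG + 4)  # True: halfway, rounds up to the even neighbour

# Decimal analog of the four IEEE 754 modes (base 10, not the CPU's binary mode).
MODES = {
    "to nearest, ties to even": ROUND_HALF_EVEN,
    "toward zero": ROUND_DOWN,
    "toward +infinity": ROUND_CEILING,
    "toward -infinity": ROUND_FLOOR,
}


def round_to_integer(value: str, mode: str) -> Decimal:
    """Round a decimal string to an integer using the named mode."""
    return Decimal(value).quantize(Decimal("1"), rounding=MODES[mode])


for name in MODES:
    up, down = round_to_integer("2.5", name), round_to_integer("-2.5", name)
    print(f"{name:>24}: 2.5 -> {up}, -2.5 -> {down}")
```

The loop shows how the same tie (2.5 or -2.5) lands on different integers depending on the mode.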

Overflow and Underflow

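Overflow occurs when a result exceeds the largest finite representable value; under the default rounding mode the result becomes infinity. Underflow happens when a result is smaller in magnitude than the smallest normal number: IEEE 754 then produces a subnormal number with reduced precision, or zero if the result is smaller still. Both situations can cause computational issues if not handled properly.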
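Both effects can be triggered with double precision limits from `sys.float_info`. This sketch assumes Python 3.9 or later for `math.ulp`, used here to obtain the smallest positive subnormal double. Ordinary float multiplication and division in Python follow IEEE 754 here and do not raise, whereas some other operations (such as `**` or `math.exp`) raise `OverflowError` instead.

```python
import math
import sys

largest = sys.float_info.max           # about 1.8e308
print(largest * 2)                     # inf: the result overflows to infinity

smallest_normal = sys.float_info.min   # about 2.2e-308
print(smallest_normal / 2 > 0.0)       # True: below the normal range, subnormals take over

smallest_subnormal = math.ulp(0.0)     # 5e-324 (requires Python 3.9+)
print(smallest_subnormal / 2)          # 0.0: the result underflows to zero
```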

Conclusion

Floating point numbers are a powerful tool for numerical computations, offering the ability to represent a wide range of values. However, they come with limitations such as precision errors and rounding issues that can affect the accuracy of calculations. Understanding these limitations and how floating point numbers work is crucial for developers and engineers working with numerical data.

If you found this article helpful and want to stay updated with more content like this, please leave a comment below and subscribe to our blog newsletter. Stay informed about the latest tools and practices in computing and software development!
