How to Build a Generative AI Chatbot with Python and Ollama

Soledad Noemí MALTRAIN YÁÑEZ

What is an AI Chatbot?

An AI chatbot is software that can interact with users via text or voice, understanding and responding to their queries intelligently. Unlike rule-based chatbots, which follow a predefined set of responses, AI chatbots can learn and adapt to new situations by using machine learning algorithms.

Benefits of AI Chatbots

24/7 Availability: They can interact with users at any time.

Scalability: They can handle multiple conversations simultaneously without compromising quality.

Personalization: They can offer personalized responses based on the user's context and history.

Efficiency: They can automate repetitive tasks, freeing up human resources for more complex activities.

Resources used:

Python: Python is a high-level, interpreted, general-purpose programming language, known for its simplicity and readability.

Ollama: Ollama is an open-source tool for downloading, running, and customizing large language models (such as Llama 3) on your own machine. It simplifies working with these models locally: a model can be started with a single command, customized through a modelfile, and called from other programs through a local REST API.

Gradio: Gradio is an open-source Python library that makes it easy to create and share interactive user interfaces for machine learning models and other programs.

Next, we will go through the steps to create our AI chatbot.

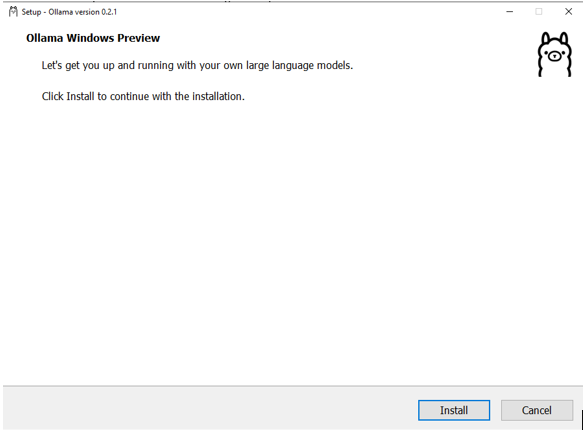

- First we install Ollama. We download the installer from the following link, selecting our operating system (Windows in this case), and wait for the download to finish. Then we go to the Downloads folder and double-click the installer to run it.

Once installed, we run Ollama with the following command, which downloads the llama3 model if it is not already present and then starts it.

ollama run llama3

- Now we will create a new file named modelfile in the code editor of our choice, with the following content.

    FROM llama3

    # Set the sampling parameters
    PARAMETER temperature 1
    PARAMETER top_p 0.5
    PARAMETER top_k 10
    PARAMETER mirostat_tau 4.0

    # Set the system prompt
    SYSTEM """
    You are a personal AI assistant named Agatha, created by Tony Stark. Answer and help with all the questions being asked.
    """

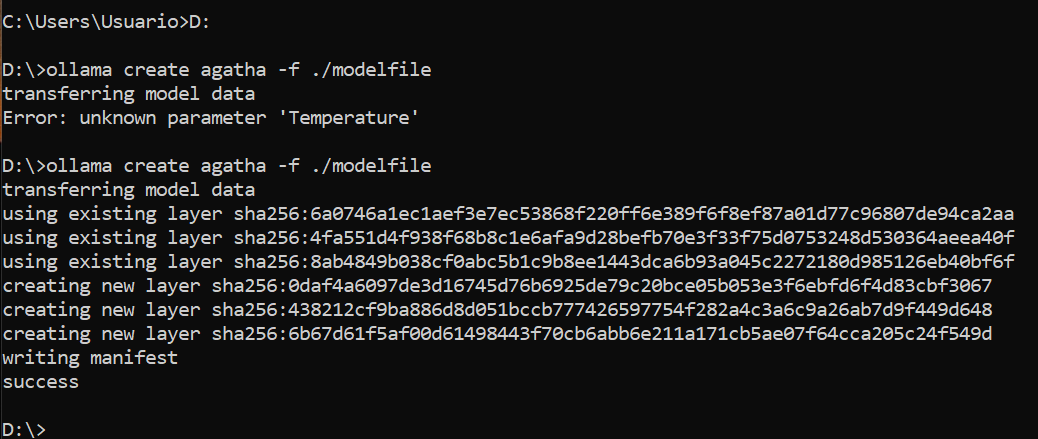
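To get a feel for what top_k and top_p do, here is a minimal sketch of the filtering step in plain Python. It illustrates the general technique only and is not Ollama's actual implementation; the function name filter_candidates and the toy probabilities are made up for this example.

```python
def filter_candidates(probs, top_k, top_p):
    """Keep the top_k most likely tokens, then the smallest leading group
    whose cumulative probability reaches top_p, and renormalize."""
    # Sort tokens from most to least likely and keep at most top_k of them.
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)[:top_k]

    kept = []
    cumulative = 0.0
    for token, p in ranked:
        kept.append((token, p))
        cumulative += p
        if cumulative >= top_p:  # enough probability mass collected
            break

    # Rescale the surviving probabilities so they sum to 1 again.
    total = sum(p for _, p in kept)
    return {token: p / total for token, p in kept}


# Hypothetical next-token probabilities, for illustration only.
toy = {"hello": 0.4, "hi": 0.3, "hey": 0.2, "yo": 0.1}
print(filter_candidates(toy, top_k=10, top_p=0.5))
```

With the values from the modelfile (top_k 10, top_p 0.5), only the two most likely toy tokens survive, which is why lower values tend to give more focused and less varied answers.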

- Here we can play with the name and personality of our chatbot as we like. Now let's create the custom model from the modelfile. Choose any model name you like, e.g. agatha. In the command prompt (cmd), we go to the folder where we saved the file created previously and run the following command.

C:\Users\Usuario> D:

D:\>ollama create agatha -f ./modelfile
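A quick way to confirm that the model was created is to ask the local Ollama server which models it has. The sketch below uses only the standard library and assumes Ollama is listening on its default port 11434 and that the /api/tags endpoint returns a JSON object with a "models" list; the helper names are made up for this example.

```python
import json
import urllib.request

TAGS_URL = "http://localhost:11434/api/tags"  # assumes Ollama's default local port


def model_names(tags_payload):
    """Extract the model names from the JSON returned by /api/tags."""
    return [m.get("name", "") for m in tags_payload.get("models", [])]


def has_model(tags_payload, wanted):
    """True if `wanted` matches a listed model, ignoring the ':tag' suffix."""
    return any(name.split(":")[0] == wanted for name in model_names(tags_payload))


def fetch_tags(url=TAGS_URL, timeout=5):
    """Return the parsed /api/tags response, or None if it cannot be read."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return json.load(resp)
    except (OSError, ValueError):  # server unreachable or invalid JSON
        return None


if __name__ == "__main__":
    tags = fetch_tags()
    if tags is None:
        print("Ollama does not seem to be running on localhost:11434")
    else:
        print("agatha available:", has_model(tags, "agatha"))
```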

- Once the model has been created, we can start it with ollama run agatha, and it will be ready to accept prompts. Ollama also provides a REST API for running and managing models, so while Ollama is running the model is also available at the endpoint below.

http://localhost:11434/api/generate
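Before building the interface, it can help to call this endpoint directly. The following is a minimal sketch using only the standard library; it assumes the model was created under the name agatha, and the function names build_request and ask are invented for this example.

```python
import json
import urllib.request

GENERATE_URL = "http://localhost:11434/api/generate"


def build_request(prompt, model="agatha"):
    """Build (but do not send) a POST request for the generate endpoint."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False})
    return urllib.request.Request(
        GENERATE_URL,
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, model="agatha", timeout=120):
    """Send the prompt and return the text found in the "response" field."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=timeout) as resp:
        return json.load(resp)["response"]


if __name__ == "__main__":
    try:
        print(ask("Introduce yourself in one sentence."))
    except OSError as err:  # covers connection errors when Ollama is not running
        print("Could not reach Ollama:", err)
```

Setting "stream" to False asks for the whole answer in one JSON object, which keeps the parsing to a single json.load call.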

- Now let's proceed to create the user interface for the chatbot, which we will run inside a Python virtual environment. First we make sure that virtualenv is installed. If it is not, we run the following commands.

pip3 install -U pip virtualenv

virtualenv --system-site-packages -p python ./venv

Once virtualenv is available, we create and activate the environment with the following commands.

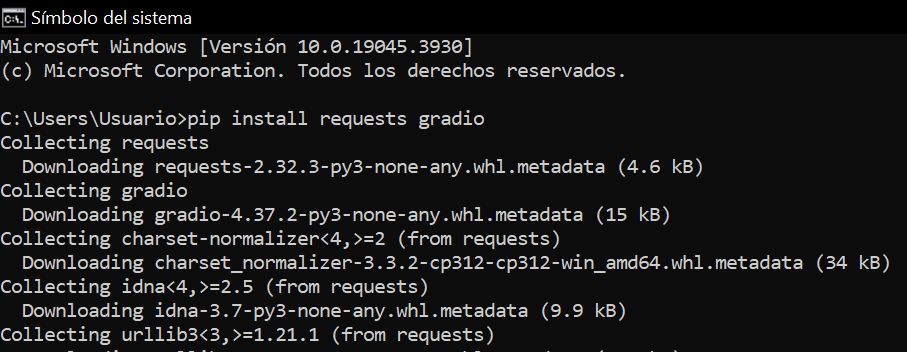

python -m venv venv

cd venv

dir

.\Scripts\activate

Once the environment is created and activated, we leave the venv folder (the environment stays active) and proceed to install the necessary packages with the following command.

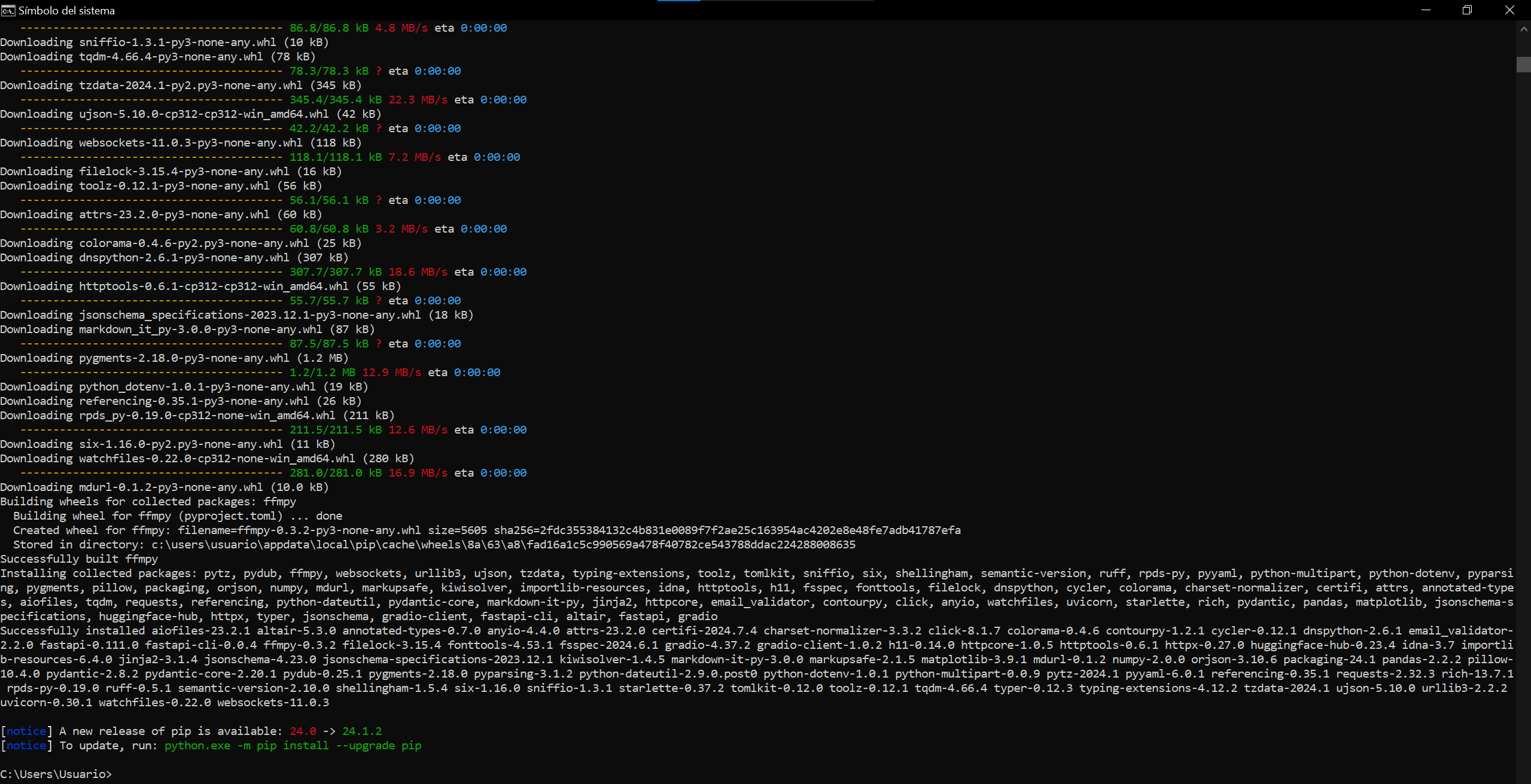

pip install requests gradio
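If you are unsure whether the environment is really active and the packages landed in it, a small check like the one below can help. It is only a sketch; the helper names in_virtualenv and missing_packages are made up for this example.

```python
import importlib.util
import sys


def in_virtualenv():
    """True when the interpreter is running inside a virtual environment."""
    base = getattr(sys, "base_prefix", sys.prefix)
    return sys.prefix != base


def missing_packages(names):
    """Return the packages from `names` that cannot be found for import."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    print("virtual environment active:", in_virtualenv())
    print("missing packages:", missing_packages(["requests", "gradio"]))
```

An empty list of missing packages means both requests and gradio can be imported by the interpreter that ran the check.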

- Now we will create a Python file named chatbot.py with the following code.

    import requests
    import gradio as gr

    # Local Ollama endpoint for text generation
    model_api = "http://localhost:11434/api/generate"

    # Prompts sent so far, so the model receives the earlier context
    history = []

    def generate_response(prompt):
        history.append(prompt)
        final_prompt = "\n".join(history)  # send the whole history as one prompt

        data = {
            "model": "agatha",  # the model created with "ollama create agatha"
            "prompt": final_prompt,
            "stream": False,  # return the full answer in a single JSON object
        }

        # json=data serializes the payload and sets the Content-Type header
        response = requests.post(model_api, json=data)

        if response.status_code == 200:  # successful
            return response.json()["response"]

        # Show the error in the interface instead of returning None
        print("error:", response.text)
        return f"Error {response.status_code}: {response.text}"

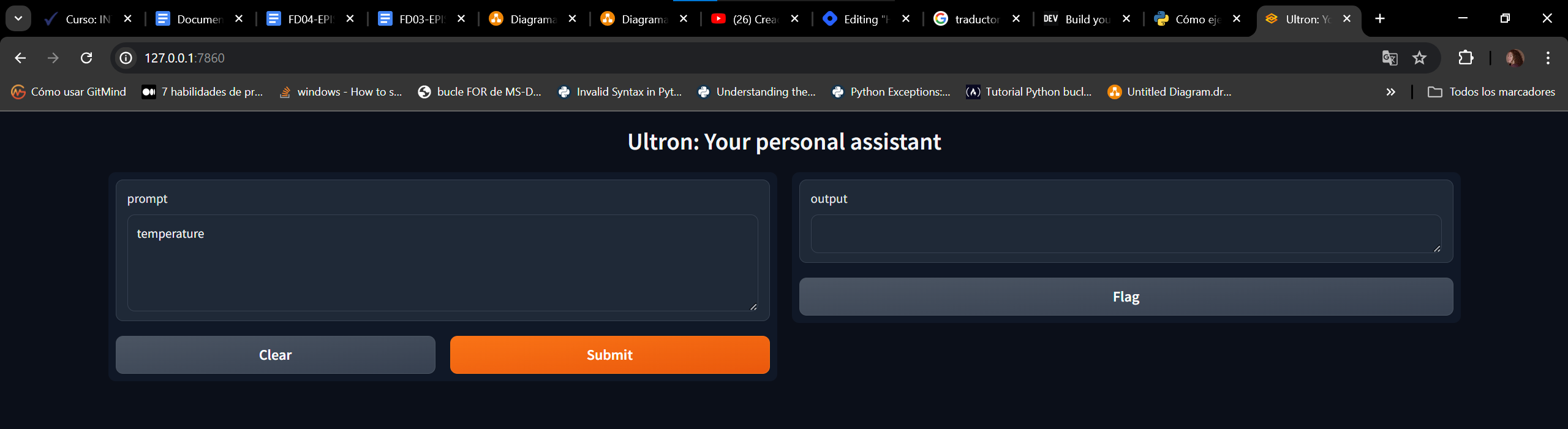

    interface = gr.Interface(
        title="Agatha: Your personal assistant",
        fn=generate_response,
        inputs=gr.Textbox(lines=4, placeholder="How can I help you today?"),
        outputs="text",
    )

    # share=True also creates a temporary public link in addition to the local URL
    interface.launch(share=True)
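The code above only remembers what the user typed. One possible refinement, sketched here under the assumption that the history is stored as (user, assistant) pairs, is to include the model's earlier answers as well and to cap how many exchanges are sent. The function name build_prompt and the "User:" / "Assistant:" labels are illustrative choices, not something Ollama requires.

```python
def build_prompt(history, user_message, max_turns=5):
    """Build one prompt string from the last `max_turns` exchanges plus the new message."""
    # history[-0:] would return everything, so handle max_turns <= 0 explicitly.
    recent = history[-max_turns:] if max_turns > 0 else []

    lines = []
    for user_text, bot_text in recent:
        lines.append(f"User: {user_text}")
        lines.append(f"Assistant: {bot_text}")
    lines.append(f"User: {user_message}")
    lines.append("Assistant:")  # cue the model to answer next
    return "\n".join(lines)


# Hypothetical earlier exchange, for illustration only.
history = [("Hi", "Hello! How can I help?")]
print(build_prompt(history, "What is your name?"))
```

Capping the number of turns keeps the prompt from growing without limit during a long conversation.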

- Now we will run the Python file we created.

python chatbot.py

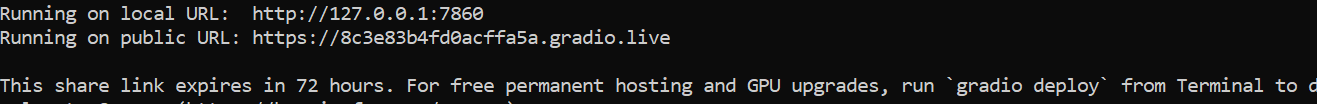

Once we run the file, the URL to open the chatbot will appear.

- Now that we have the URL, we copy it into the browser's address bar and press Enter to see how it works.

- That's all. We now have a small AI chatbot.
