Shell Scripting Challenge #Day-10: Log Analyzer and Report Generator

Nikunj Vaishnav

Scenario

You are a system administrator responsible for managing a network of servers. Every day, a log file is generated on each server containing important system events and error messages. As part of your daily tasks, you need to analyze these log files, identify specific events, and generate a summary report.

Task

Write a Bash script that automates the process of analyzing log files and generating a daily summary report. The script should perform the following steps:

Input: The script should take the path to the log file as a command-line argument.

Error Count: Analyze the log file and count the number of error messages. An error message can be identified by a specific keyword (e.g., "ERROR" or "Failed"). Print the total error count.

Critical Events: Search for lines containing the keyword "CRITICAL" and print those lines along with the line number.

Top Error Messages: Identify the top 5 most common error messages and display them along with their occurrence count.

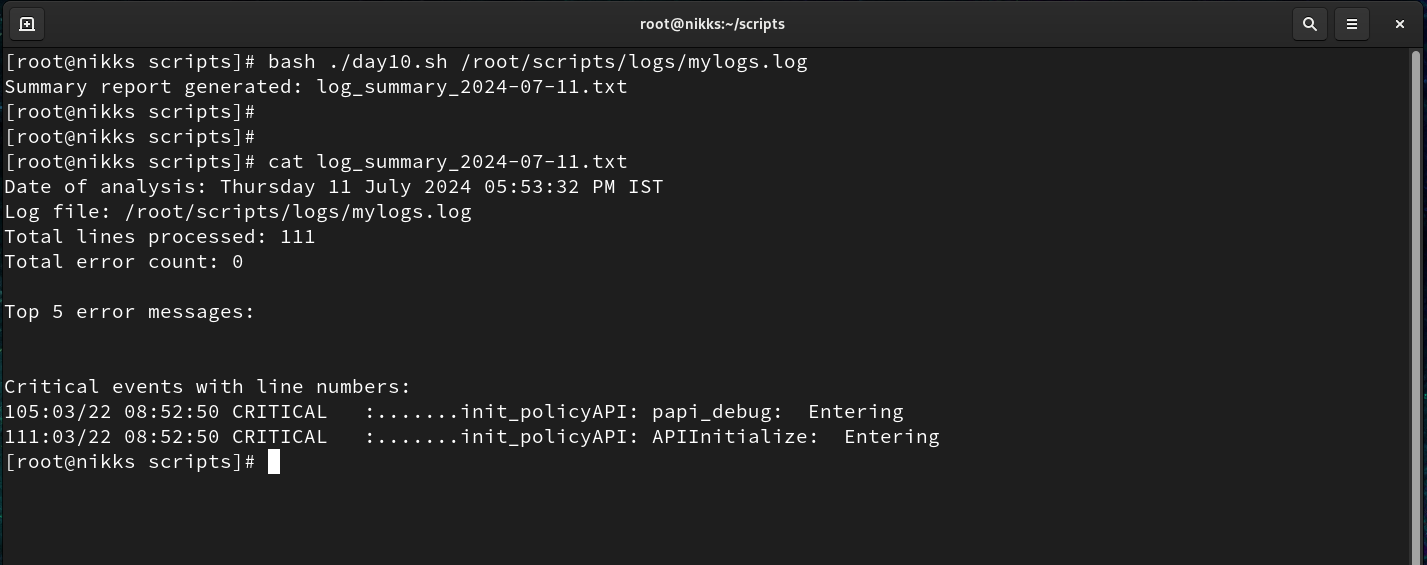

Summary Report: Generate a summary report in a separate text file. The report should include:

Date of analysis

Log file name

Total lines processed

Total error count

Top 5 error messages with their occurrence count

List of critical events with line numbers

Optional Enhancement: Add a feature to automatically archive or move processed log files to a designated directory after analysis.
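Each of the analysis steps above maps onto a short pipeline of standard tools. The sketch below is one possible way to express them, not the only one. The function names `count_errors`, `list_critical`, and `top_errors` are hypothetical, and the code assumes each log line starts with two space-separated fields (for example a date and a time) before the level and message.

```shell
#!/bin/bash
# Hypothetical helpers, one per analysis step.
# Assumed log layout: "<field1> <field2> <LEVEL> <message...>"

# Count lines that contain either error keyword (case-insensitive).
count_errors() {
    grep -c -i -E 'ERROR|Failed' "$1"
}

# Print lines containing CRITICAL, each prefixed with "<line number>:".
list_critical() {
    grep -n 'CRITICAL' "$1"
}

# Print the 5 most frequent error messages with their counts.
# cut drops the first two fields so identical messages logged at
# different times are grouped together by uniq -c.
top_errors() {
    grep -i -E 'ERROR|Failed' "$1" \
        | cut -d' ' -f3- \
        | sort | uniq -c | sort -rn | head -n 5
}
```

After sourcing the file, a call such as `top_errors /path/to/your.log` prints each message preceded by its count. The `sort | uniq -c | sort -rn` idiom does the counting without any shell arrays, which keeps it fast on large files.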

Script:

#!/bin/bash

# Check if the user provided the log file path as a command-line argument

if [ $# -ne 1 ]; then

echo "Usage: $0 <path_to_logfile>"

exit 1

fi

# Get the log file path from the command-line argument

log_file="$1"

# Check if the log file exists

if [ ! -f "$log_file" ]; then

echo "Error: Log file not found: $log_file"

exit 1

fi

# Step 1: Count the total number of lines in the log file

total_lines=$(wc -l < "$log_file")

# Step 2: Count the number of error messages (identified by the keyword "ERROR" in this example)

error_count=$(grep -c -i "ERROR" "$log_file")

# Step 3: Search for critical events (lines containing the keyword "CRITICAL") and store them in an array

mapfile -t critical_events < <(grep -n -i "CRITICAL" "$log_file")

# Step 4: Identify the top 5 most common error messages and their occurrence count using associative arrays

declare -A error_messages

while IFS= read -r line; do

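# Skip the first two space-separated fields and keep the rest of the line as the error message.

# Pass each field to printf as an argument, not as the format string, so a "%" in the log text is printed literally.

error_msg=$(awk '{for (i=3; i<=NF; i++) printf "%s ", $i; print ""}' <<< "$line")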

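# Increment with an explicit assignment so the log text in the key is never re-evaluated as an arithmetic expression

error_messages["$error_msg"]=$(( ${error_messages["$error_msg"]:-0} + 1 ))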

done < <(grep -i "ERROR" "$log_file")

# Sort the error messages by occurrence count (descending order)

sorted_error_messages=$(for key in "${!error_messages[@]}"; do

echo "${error_messages[$key]} $key"

done | sort -rn | head -n 5)

# Step 5: Generate the summary report in a separate file

summary_report="log_summary_$(date +%Y-%m-%d).txt"

{

echo "Date of analysis: $(date)"

echo "Log file: $log_file"

echo "Total lines processed: $total_lines"

echo "Total error count: $error_count"

echo -e "\nTop 5 error messages:"

echo "$sorted_error_messages"

echo -e "\nCritical events with line numbers:"

for event in "${critical_events[@]}"; do

echo "$event"

done

} > "$summary_report"

echo "Summary report generated: $summary_report"
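The optional archiving enhancement from the task is not part of the script above. A minimal sketch of one way to add it follows. The function name `archive_log`, the default directory name `archive`, and the timestamp suffix are all assumptions made for illustration.

```shell
#!/bin/bash
# Hypothetical helper: move a processed log file into an archive directory.
# Usage: archive_log <log_file> [archive_dir]
# Prints the path of the archived file on success.
archive_log() {
    local log_file="$1"
    local archive_dir="${2:-archive}"   # assumed default directory name
    local stamp dest

    # Timestamp suffix so repeated runs do not overwrite earlier archives.
    stamp=$(date +%Y%m%d_%H%M%S)

    # Create the archive directory if it does not exist yet.
    mkdir -p "$archive_dir" || return 1

    dest="$archive_dir/$(basename "$log_file").$stamp"
    mv -- "$log_file" "$dest" || return 1
    echo "$dest"
}
```

Calling `archive_log "$log_file"` as the last step of the script, after the report has been written, would move the analyzed file out of the way. Moving the file only after a successful report avoids losing a log that was never summarized.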

Result:

Conclusion

Automating log analysis and report generation with a Bash script streamlines a system administrator's daily routine. A script that counts error messages, lists critical events with their line numbers, and writes the findings to a dated report makes it easier to monitor system health and respond to issues quickly. Optional features such as archiving processed log files help keep log data organized. The result is a repeatable, systematic check in place of a manual one.

Thanks for reading. Happy learning!
