Agent-AMA: An SQL Querying AI Agent

Harsha S

Harsha S

Introduction:

Do you need to access information from a database but don’t know how to write SQL queries? Are you a non-technical person worried about how to get the data you need? Well fret not, Agent-AMA is for your rescue. Imagine having a smart AI assistant that can instantly provide answers to your database queries without the hassle of learning SQL, saving you hours of manual work.

SQL (Structured Query Language) is a standard programming language used for managing and manipulating databases. However, learning SQL can be challenging for those without a technical background.

Note:- This project is also submitted as a capstone project for my AI Internship at Tublian.

In this blog we'll dive in to the project that leverages the power of LangChain framework, Groq along with llama-3 LLM to create an intelligent SQL AI Agent using Python programming language.

The goal of the project is to simplify database interactions by allowing users to query the database using prompts or simple text inputs, making data retrieval faster and more intuitive.

Project Background:

Agent-AMA is an AI-powered, command-line based agent that is designed to interact with SQL databases, perform reasoning before taking actions, generates SQL queries based on user prompts or inputs, executes the generated SQL statements and gives the output as a human-like texts using the results of generated SQL queries.

Technologies Used:

This agent uses LangChain framework, Groq, Llama-3 LLM and ReAct prompting technique.

LangChain is an open-source framework that simplifies building applications powered by large language models (LLMs). It offers tools and components to manage interactions with language models, connect different parts, and use resources like APIs and databases. Key features include chains, prompt templates, vector stores, indexes, retrievers, output parsers, agents, and example selectors, allowing developers to create customized LLM-based applications easily. LangChain was chosen for its robust support for integrating various components and managing complex interactions with LLMs.

Groq is an AI company that designs hardware and software to speed up AI workloads, specializing in fast AI inference and offering one of the quickest LLM inference solutions available. It significantly reduces the latency in LLM responses. Groq supports various LLMs, including LLaMA3-8b, LLaMA3-70b, Mixtral 8x7b, Gemma 7b, and more. LangChain also supports integration with Groq chat models; to get started, you need to install the

langchain-groqpackage, create an API key, and initialize it using ChatGroq. Groq was selected for its ability to provide rapid and efficient AI inference, which is crucial for real-time query processing.Llama-3, developed by Meta, is a next-generation family of large language models, featuring pre-trained and instruction-tuned text models in 8B and 70B parameter sizes. Optimized for dialogue, these models outperform many open-source chat models on common benchmarks. In this project, we are using the

llama3-70b-8192model and leveraging Groq's support for Llama-3 to enhance our implementation. Llama-3 was chosen for its superior performance in dialogue-based tasks, making it ideal for generating accurate and contextually relevant SQL queries.ReAct is a framework that uses large language models (LLMs) to generate reasoning traces and task-specific actions together. This helps the model create and update action plans, handle exceptions, and gather information from external sources. By interacting with external tools, ReAct improves the reliability and accuracy of responses. It combines reasoning and acting, prompting LLMs to generate verbal reasoning traces and actions, which allows for dynamic reasoning and plan adjustment. This method addresses hallucination and error propagation issues. While LangChain supports ReAct prompting, we have created custom ReAct prompts for our project to meet specific goals. ReAct was chosen for its ability to enhance the reasoning capabilities of the AI agent, ensuring more accurate and reliable query results.

Project Setup:

To set up the environment, you'll need to install the required dependencies, set up the database, and obtain necessary API keys. Here's a step-by-step guide:

Clone the Repository:

git clone https://github.com/sharsha315/Agent-AMA.git cd Agent-AMACreate and Activate a Virtual Environment :

Create a virtual environment:

python -m venv venvActivate the virtual environment:

On Windows:

.\venv\Scripts\activateOn macOS/Linux:

source venv/bin/activate

Install Dependencies:

pip install -r requirements.txtSetup Environmental Variables:

Create a

.envfile in the project root and add your GROQ API key as follows:GROQ_API_KEY=your_groq_api_keyPlease, ensure the Database file exists. For our project, we are using the

northwind.dbdatabase file.

Note: Please, feel free to try Agent-AMA with your own SQL database.

The project environment is successfully set up and ready; run the main.py script to start the interactive command-line interface.

python main.py

Code Walkthrough:

The motivation behind this project was to bridge the gap between complex SQL queries and users with non-technical background or who may not be familiar with SQL syntax. By using natural language processing, we can make database querying accessible to a wide range of audience.

The main components of the project includes:

LLM initialization: This part of the code sets up the large language model (LLM) used for processing natural language queries, generating SQL queries, and producing responses.

Database Creation: This section handles the connection to the SQL database and provides tools for interaction.

Custom Agent Creation: Here, we define the custom agent that will interact with the SQL database, generate SQL queries based on user inputs, and execute these queries.

ReAct Prompt Design: This part involves designing the prompt templates that guide the AI agent on how to process user questions and generate SQL queries.

Memory Feature: This section sets up the memory feature that allows the agent to remember and refer to past conversations, improving its ability to handle follow-up questions.

The code is modularized into different files for better readability and maintainability, here's how the project structure looks like:

Agent-AMA/

│

├── main.py

├── llm.py

├── memory.py

├── database.py

├── agent.py

├── prompt.py

├── .env

├── README.md

└── requirements.txt

Now, without further ado, let's dive into the intricacies of the codebase.

llm.pyimport os from langchain_groq import ChatGroq def create_llm(): GROQ_API_KEY = os.getenv("GROQ_API_KEY") return ChatGroq( temperature=0, model="llama3-70b-8192", api_key=GROQ_API_KEY )This file initializes the LLM used for processing natural language queries, generating SQL queries and generating responses. From the above code, we import the

osmodule to interact with the operating system, mainly to get environmental variables. We also import theChatGroqclass from thelangchain_groqmodule.ChatGroqis a class used to interact with the GROQ-based language model supported by the LangChain framework. The functioncreate_llm()retrieves the value of the environment variableGROQ_API_KEYand stores it in theGROQ_API_KEYvariable, then returns an instance of theChatGroqclass, which is the required LLM.ChatGroqis initialized with parameters likemodelset tollama3-70b-8192,temperatureset to0, andapi_keyset to theGROQ_API_KEY.database.pyimport os from langchain_community.utilities import SQLDatabase def initialize_database(db_path): if not os.path.exists(db_path): raise FileNotFoundError(f"The Database file {db_path} does not exist.") return SQLDatabase.from_uri(f"sqlite:///{db_path}")This file connects to the database and provides tools for interaction. We start by importing the

osmodule to interact with the operating system to check if the SQL database file exists, and also import theSQLDatabaseclass from thelangchain_community.utilitiesmodule.SQLDatabaseis a class used to interact with SQL databases. The functioninitialize_databasechecks if a database file exists at the specified path. If the file does not exist, it raises an error. If the file exists, it initializes and returns anSQLDatabaseobject connected to the database at the given path.

prompt.py

system_prompt = """

You are an agent named "Agent-AMA", designed to interact with the SQL database.

You are an agent that does a reasoning step before the acting.

Given an input question, create a syntactically correct dialect query to run, then look at the results of the query and return the answer.

Unless the user specifies a specific number of examples they wish to obtain, always limit your query to at most 5 results.

You can order the results by a relevant column to return the most interesting examples in the database.

Never query for all the columns from a specific table, only ask for the relevant columns given the question.

You have access to tools for interacting with the database.

Only use the below tools. Only use the information returned by the below tools to construct your final answer.

You MUST double check your query before executing it. If you get an error while executing a query, rewrite the query and try again.

DO NOT make any DML statements (INSERT, UPDATE, DELETE, DROP etc.) to the database.

If the question does not seem related to the database, just return "I am Sorry, I only answer questions related to the database" as the answer.

If you come across the Final Answer immediately stop Thought, Action Process and return the answer framing a very good sentence.

Answer the following questions as best you can. You have access to the following tools:

{tools}

Use the following format:

Question: the input question you must answer

Thought: you should always think about what to do

Action: the action to take, should be one of [{tool_names}]

Action Input: the input to the action

Observation: the result of the action

... (this Thought/Action/Action Input/Observation can repeat N times)

Final Thought: I now know the final answer

Final Answer: the final answer to the original input question

Begin!

Question: {input}

Thought:{agent_scratchpad}

"""

This file defines the prompt templates used by the AI agent to interact with the database. The prompt guides the agent on how to process user questions and generate SQL queries. The system_prompt variable in prompt.py defines a detailed set of instructions for "Agent-AMA," an AI designed to interact with an SQL database. This prompt follows the ReAct prompting style and guides the agent to first think and reason before acting. It instructs the agent to create SQL queries based on input questions, execute these queries, and provide results. The agent is advised to limit results to a maximum of five unless specified otherwise and to ensure queries are correct before execution. It also stresses avoiding any data manipulation operations like INSERT, UPDATE, DELETE, or DROP. If a question is unrelated to the database, the agent should respond accordingly. The prompt follows a structured format for the agent's thought process, actions, and final answers.

Note: The above "system_prompt" can also be pulled from LangChain Prompt Hub, for more information refer FAQs.

agent.pyfrom langchain.agents import AgentExecutor, create_react_agent from langchain_community.agent_toolkits import SQLDatabaseToolkit from langchain_community.agent_toolkits.sql.prompt import SQL_FUNCTIONS_SUFFIX from langchain_core.prompts.chat import ChatPromptTemplate, SystemMessagePromptTemplate, HumanMessagePromptTemplate, AIMessagePromptTemplate from prompt import system_prompt def create_custom_agent(llm, db): # Create tools toolkit = SQLDatabaseToolkit(db=db, llm=llm) context = toolkit.get_context() tools = toolkit.get_tools() # Create ReAct prompt messages = [ SystemMessagePromptTemplate.from_template(system_prompt), HumanMessagePromptTemplate.from_template("{input}"), AIMessagePromptTemplate.from_template(SQL_FUNCTIONS_SUFFIX), ("human", "{agent_scratchpad}"), ] prompt = ChatPromptTemplate.from_messages(messages) prompt = prompt.partial(**context) # Create Agent agent = create_react_agent(llm=llm, tools=tools, prompt=prompt) # Create and return Agent Executor return AgentExecutor(agent=agent, tools=tools, verbose=True, handle_parsing_errors=True)The

agent.pyfile defines a functioncreate_custom_agentthat sets up and returns a custom agent executor for interacting with an SQL database. It starts by importing necessary modules fromlangchain, including tools for creating agents, handling SQL databases, and managing prompts, with thesystem_promptimported fromprompt.py. Within thecreate_custom_agentfunction, tools are created usingSQLDatabaseToolkitby passing the database (db) and language model (llm), and the context and tools required for the agent's operation are retrieved from the toolkit. The ReAct prompt is set up by combining system, human, and AI message templates into a message sequence, and theChatPromptTemplateis partially formatted with the retrieved context. The agent is created using thecreate_react_agentfunction, which takes in the language model, tools, and the prompt. Finally, anAgentExecutoris created and returned, managing the agent's actions with verbose logging and error handling for parsing errors.memory.pyfrom langchain.memory import ConversationEntityMemory def create_memory(llm): return ConversationEntityMemory(llm=llm)The

memory.pyfile contains a simple function calledcreate_memory. This function sets up and provides a memory feature for the agent. It begins by importing theConversationEntityMemoryclass from thelangchain.memorymodule, which helps the agent remember and refer to past conversations. Entity memory remembers given facts about specific entities in a conversation. Thecreate_memoryfunction takes a language model (llm) as an input and returns aConversationEntityMemoryinstance initialized with this language model. This memory feature allows the agent to keep track of context across different queries, making it better at handling follow-up questions and maintaining smooth conversations.main.pyimport os from dotenv import load_dotenv from langchain.agents import AgentExecutor from llm import create_llm from memory import create_memory from database import initialize_database from agent import create_custom_agent # Load environmental variables from .env file load_dotenv() # Initialize LLM and Memory llm = create_llm() memory = create_memory(llm) # Initialize Database db = initialize_database("/workspaces/Agent-AMA/northwind.db") # Create Custom SQL Agent agent_executor = create_custom_agent(llm, db) # Main function def main(): print("\nWelcome!!!") print("\nI am Agent-AMA,") while True: user_input = input("\nEnter your query (or type 'exit' to quit): ") if user_input.lower() == 'exit': print("\nGoodbye!!!\n") break try: memory.load_memory_variables({"input": "Can you list the tables available in the database?"}) result = agent_executor.invoke({"input": user_input}) memory.save_context({"input": result["input"]}, {"output": result["output"]}) print() print("****"*10) print(f"\nYou: {result['input']}\nAgent-AMA: {result['output']}") print() print("****"*10) except ValueError as e: print(f"Error: {e}") if __name__ == "__main__": main()The

main.pyscript is the main execution file that integrates various modules to run the Agent-AMA system. It begins by importing necessary modules likeosfor environment variables,load_dotenvfor loading these variables from a.envfile, and essential functions (create_llm,create_memory,initialize_database,create_custom_agent) for initializing components. The script loads environmental variables, initializes the language model (LLM) and memory, and connects to the specified database. It then creates a custom SQL agent using these components. The main function of the script welcomes the user, continuously prompts for input, and processes the queries using the agent, displaying the results. If the user types "exit", the program terminates. It handles errors gracefully by catching and printing anyValueErrorexceptions. The script ensures the main function runs only when the script is executed directly, not when imported as a module. Overall,main.pycoordinates the initialization and interaction processes, maintaining context through memory to handle user queries effectively.

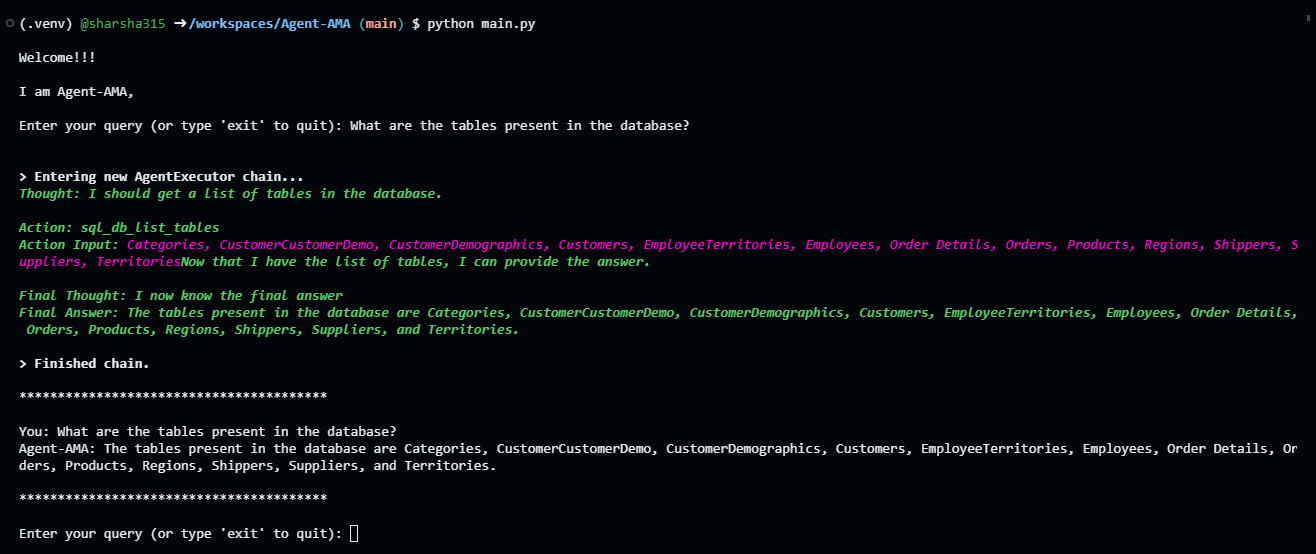

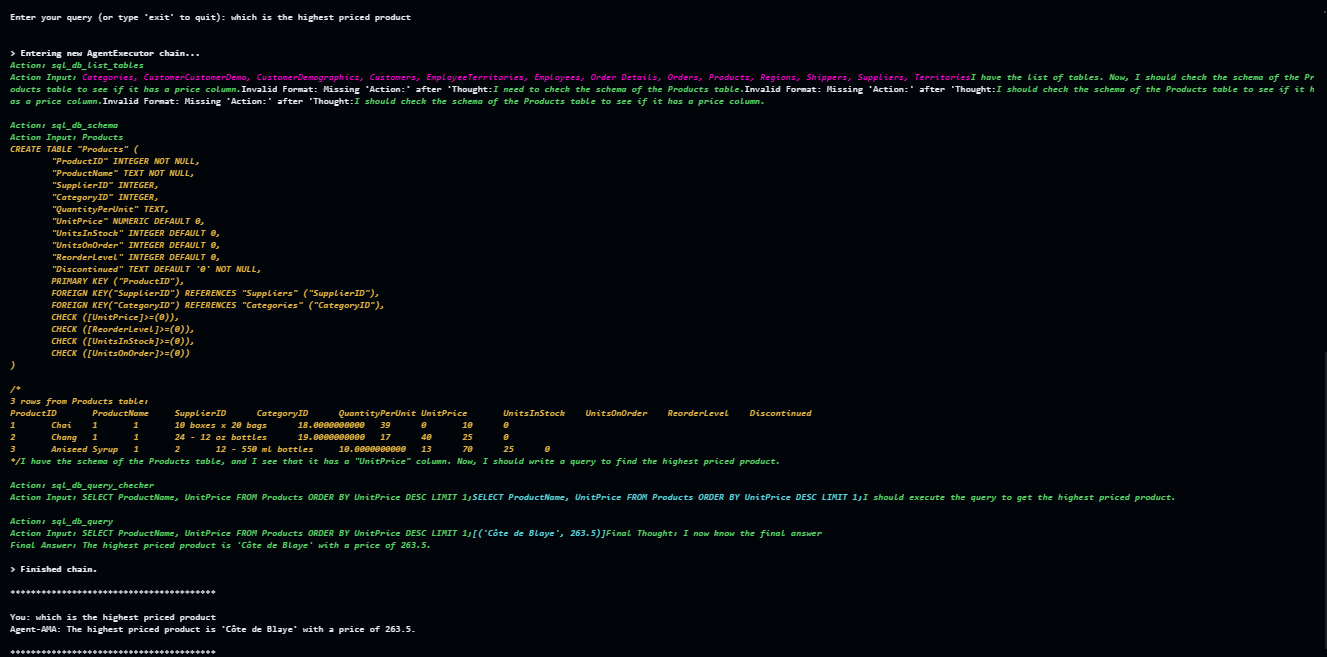

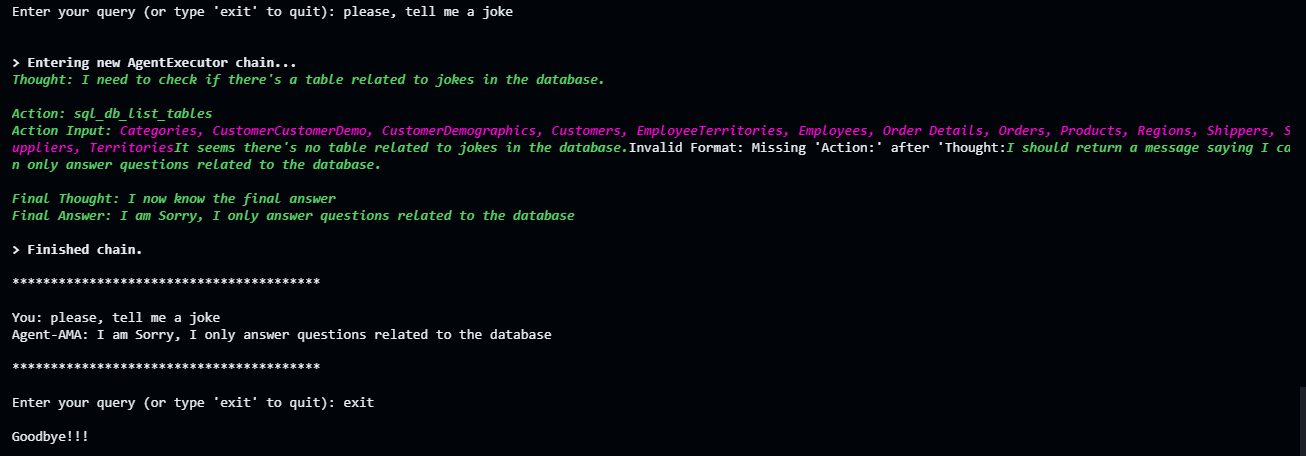

Example Use Cases:

Query Examples:

Query: "What are the tables present in the database?"

Query: "Which is the highest priced product?

Query: "please, tell me a joke"

Conclusion:

In conclusion, Agent-AMA represents a significant advancement in making database querying accessible to non-technical users. By leveraging the power of the LangChain framework, Groq, and the Llama-3 LLM, this project simplifies the process of retrieving data from SQL databases through natural language prompts. The integration of ReAct prompting and memory features ensures that the AI agent can reason, act, and maintain context effectively, providing accurate and human-like responses. This project not only bridges the gap between complex SQL queries and users with limited technical knowledge but also demonstrates the potential of AI in enhancing productivity and efficiency in data retrieval tasks. For those interested in exploring or contributing to this innovative solution, the provided references and resources offer a comprehensive starting point.

Thank you for taking the time to read about Agent-AMA. We welcome your comments and encourage you to contribute to the project; you can find the link in the reference section.

References and Resources:

FAQs:

How to get GROQ API key?

First, sign up or log in to the Groq console here. Then, navigate to the "API_KEYS" section on the left-side navigation panel. Click on "Create API key," fill in the necessary details, and submit. Once the API key is generated, copy it and store it safely, as it will only be displayed once.

What is the Northwind Trade database used in the project?

The Northwind Trade database is a sample database provided by Microsoft, often used for demonstrating database concepts and testing SQL queries. It contains data related to a fictional trading company.

What is Tublian and Tublian 8020?

Tublian is an AI-powered platform designed to boost your skills, build credibility using streetcred, contribute to open-source projects and increase your visibility. It transforms every developer into a solo enterprise, enabling you to create at scale, uncover endless opportunities, and learn anything at any time. Tublian 8020 is an AI-driven learning tool for developers that boosts learning efficiency by focusing on the 20% of content that yields 80% of the results. It customizes learning paths based on individual skills and goals, combining high-quality content with practical exercises. This personalized approach helps developers quickly master new skills through hands-on projects and real-time feedback, setting a new standard in professional development.

What is LangChain Prompt Hub?

LangChain Prompt Hub lets you find, manage, and test different prompts for LangChain and LLMs right in your browser. Use this code to get the system prompt for this project:

# Set the LANGCHAIN_API_KEY environment variable (create key in settings) from langchain import hub prompt = hub.pull("sharsha315/custom_react_sql_agent")

Subscribe to my newsletter

Read articles from Harsha S directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Harsha S

Harsha S

Constant Learner