Learn Linux for Beginners: From Basics to Advanced Techniques [Full Book]

Zaira Hira

Zaira Hira

Learning Linux is one of the most valuable skills in the tech industry. It can help you get things done faster and more efficiently. Many of the world's powerful servers and supercomputers run on Linux.

While empowering you in your current role, learning Linux can also help you transition into other tech careers like DevOps, Cybersecurity, and Cloud Computing.

In this handbook, you'll learn the basics of the Linux command line, and then transition to more advanced topics like shell scripting and system administration. Whether you are new to Linux or have been using it for years, this book has something for you.

Important Note: All examples in this book are demonstrated in Ubuntu 22.04.2 LTS (Jammy Jellyfish). Most command line tools are more or less the same in other distributions. However, some GUI applications and commands may differ if you are working on another Linux distribution.

Table of Contents

Part 1: Introduction to Linux

1.1. Getting Started with Linux

What is Linux?

Linux is an open-source operating system that is based on the Unix operating system. It was created by Linus Torvalds in 1991.

Open source means that the source code of the operating system is available to the public. This allows anyone to modify the original code, customise it, and distribute the new operating system to potential users.

Why should you learn about Linux?

In today's data center landscape, Linux and Microsoft Windows stand out as the primary contenders, with Linux having a major share.

Here are several compelling reasons to learn Linux:

Given the prevalence of Linux hosting, there is a high chance that your application will be hosted on Linux. So learning Linux as a developer becomes increasingly valuable.

With cloud computing becoming the norm, chances are high that your cloud instances will rely on Linux.

Linux serves as the foundation for many operating systems for the Internet of Things (IoT) and mobile applications.

In IT, there are many opportunities for those skilled in Linux.

What does it mean that Linux is an open-source operating system?

First, what is open source? Open source software is software whose source code is freely accessible, allowing anyone to utilize, modify, and distribute it.

Whenever source code is created, it is automatically considered copyrighted, and its distribution is governed by the copyright holder through software licenses.

In contrast to open source, proprietary or closed-source software restricts access to its source code. Only the creators can view, modify, or distribute it.

Linux is primarily open source, which means that its source code is freely available. Anyone can view, modify, and distribute it. Developers from anywhere in the world can contribute to its improvement. This lays the foundation of collaboration which is an important aspect of open source software.

This collaborative approach has led to the widespread adoption of Linux across servers, desktops, embedded systems, and mobile devices.

The most interesting aspect of Linux being open source is that anyone can tailor the operating system to their specific needs without being restricted by proprietary limitations.

Chrome OS used by Chromebooks is based on Linux. Android, that powers many smartphones globally, is also based on Linux.

What is a Linux Kernel?

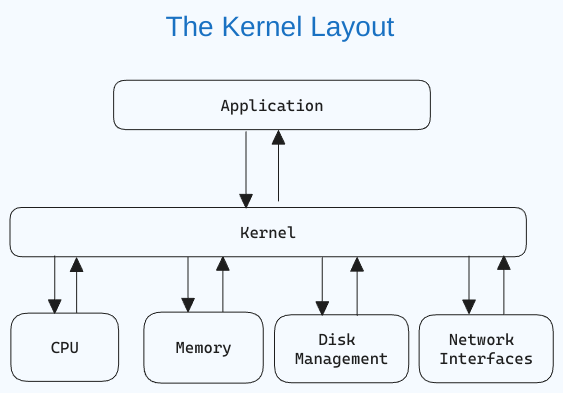

The kernel is the central component of an operating system that manages the computer and its hardware operations. It handles memory operations and CPU time.

The kernel acts as a bridge between applications and the hardware-level data processing using inter-process communication and system calls.

The kernel loads into memory first when an operating system starts and remains there until the system shuts down. It is responsible for tasks like disk management, task management, and memory management.

If you are curious about what the Linux kernel looks like, here is the GitHub link.

What is a Linux distribution?

By this point, you know that you can re-use the Linux kernel code, modify it, and create a new kernel. You can further combine different utilities and software to create a completely new operating system.

A Linux distribution or distro is a version of the Linux operating system that includes the Linux kernel, system utilities, and other software. Being open source, a Linux distribution is a collaborative effort involving multiple independent open-source development communities.

What does it mean that a distribution is derived? When you say that a distribution is "derived" from another, the newer distro is built upon the base or foundation of the original distro. This derivation can include using the same package management system (more on this later), kernel version, and sometimes the same configuration tools.

Today, there are thousands of Linux distributions to choose from, offering differing goals and criteria for selecting and supporting the software provided by their distribution.

Distributions vary from one to the other, but they generally have several common characteristics:

A distribution consists of a Linux kernel.

It supports user space programs.

A distribution may be small and single-purpose or include thousands of open-source programs.

Some means of installing and updating the distribution and its components should be provided.

If you view the Linux Distributions Timeline, you'll see two major distros: Slackware and Debian. Several distributions are derived from them. For example, Ubuntu and Kali are derived from Debian.

What are the advantages of derivation? There are various advantages of derivation. Derived distributions can leverage the stability, security, and large software repositories of the parent distribution.

When building on an existing foundation, developers can drive their focus and effort entirely on the specialized features of the new distribution. Users of derived distributions can benefit from the documentation, community support, and resources already available for the parent distribution.

Some popular Linux distributions are:

Ubuntu: One of the most widely used and popular Linux distributions. It is user-friendly and recommended for beginners. Learn more about Ubuntu here.

Linux Mint: Based on Ubuntu, Linux Mint provides a user-friendly experience with a focus on multimedia support. Learn more about Linux Mint here.

Arch Linux: Popular among experienced users, Arch is a lightweight and flexible distribution aimed at users who prefer a DIY approach. Learn more about Arch Linux here.

Manjaro: Based on Arch Linux, Manjaro provides a user-friendly experience with pre-installed software and easy system management tools. Learn more about Manjaro here.

Kali Linux: Kali Linux provides a comprehensive suite of security tools and is mostly focused on cybersecurity and hacking. Learn more about Kali Linux here.

How to install and access Linux

The best way to learn is to apply the concepts as you go. In this section, we'll learn how to install Linux on your machine so you can follow along. You'll also learn how to access Linux on a Windows machine.

I recommend that you follow any one of the methods mentioned in this section to get access to Linux so you may follow along.

Install Linux as the primary OS

Installing Linux as the primary OS is the most efficient way to use Linux, as you can use the full power of your machine.

In this section, you will learn how to install Ubuntu, which is one of the most popular Linux distributions. I have left out other distributions for now, as I want to keep things simple. You can always explore other distributions once you are comfortable with Ubuntu.

Step 1 – Download the Ubuntu iso: Go to the official website and download the iso file. Make sure to select a stable release that is labeled "LTS". LTS stands for Long Term Support which means you can get free security and maintenance updates for a long time (usually 5 years).

Step 2 – Create a bootable pendrive: There are a number of softwares that can create a bootable pendrive. I recommend using Rufus, as it is quite easy to use. You can download it from here.

Step 3 – Boot from the pendrive: Once your bootable pendrive is ready, insert it and boot from the pendrive. The boot menu depends on your laptop. You can google the boot menu for your laptop model.

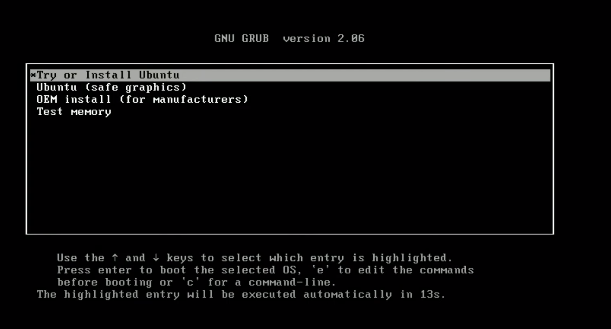

Step 4 – Follow the prompts. Once, the boot process starts, select

try or install ubuntu.

The process will take some time. Once the GUI appears, you can select the language, and keyboard layout and continue. Enter your login and name. Remember the credentials as you will need them to log in to your system and access full privileges. Wait for the installation to complete.

Step 5 – Restart: Click on restart now and remove the pen drive.

Step 6 – Login: Login with the credentials you entered earlier.

And there you go! Now you can install apps and customize your desktop.

For advanced installation, you can explore the following topics:

Disk partitioning.

Setting swap memory for enabling hibernation.

Accessing the terminal

An important part of this handbook is learning about the terminal where you'll run all the commands and see the magic happen. You can search for the terminal by pressing the "windows" key and typing "terminal". You can pin the Terminal in the dock where other apps are located for easy access.

💡 The shortcut for opening the terminal is

ctrl+alt+t

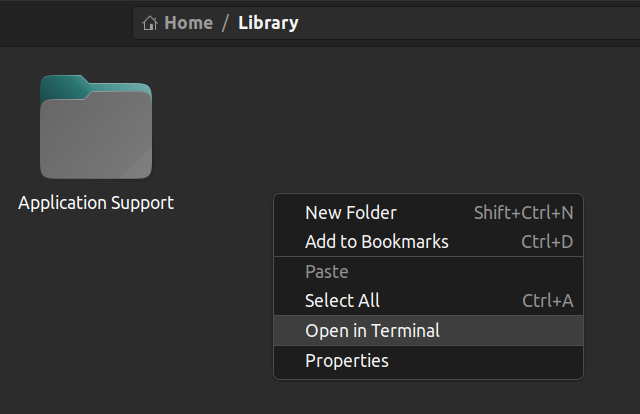

You can also open the terminal from inside a folder. Right click where you are and click on "Open in Terminal". This will open the terminal in the same path.

How to use Linux on a Windows machine

Sometimes you might need to run both Linux and Windows side by side. Luckily, there are some ways you can get the best of both worlds without getting different computers for each operating system.

In this section, you'll explore a few ways to use Linux on a Windows machine. Some of them are browser-based or cloud-based and do not need any OS installation before using them.

Option 1: "Dual-boot" Linux + Windows With dual boot, you can install Linux alongside Windows on your computer, allowing you to choose which operating system to use at startup.

This requires partitioning your hard drive and installing Linux on a separate partition. With this approach, you can only use one operating system at a time.

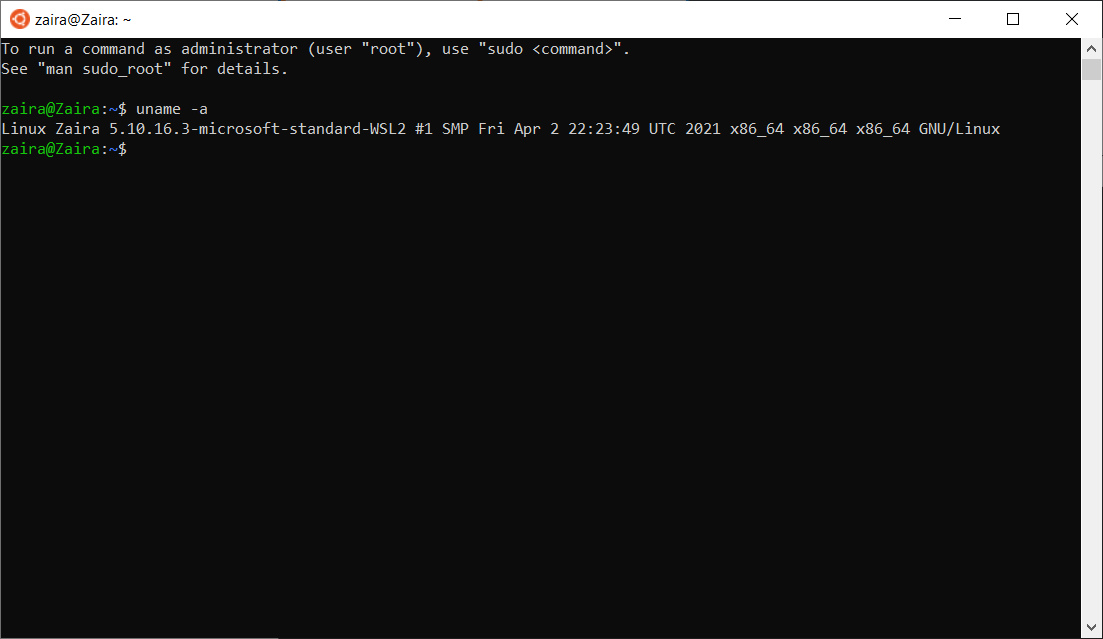

Option 2: Use Windows Subsystem for Linux (WSL) Windows Subsystem for Linux provides a compatibility layer that lets you run Linux binary executables natively on Windows.

Using WSL has some advantages. The setup for WSL is simple and not time-consuming. It is lightweight compared to VMs where you have to allocate resources from the host machine. You don't need to install any ISO or virtual disc image for Linux machines which tend to be heavy files. You can use Windows and Linux side by side.

How to install WSL2

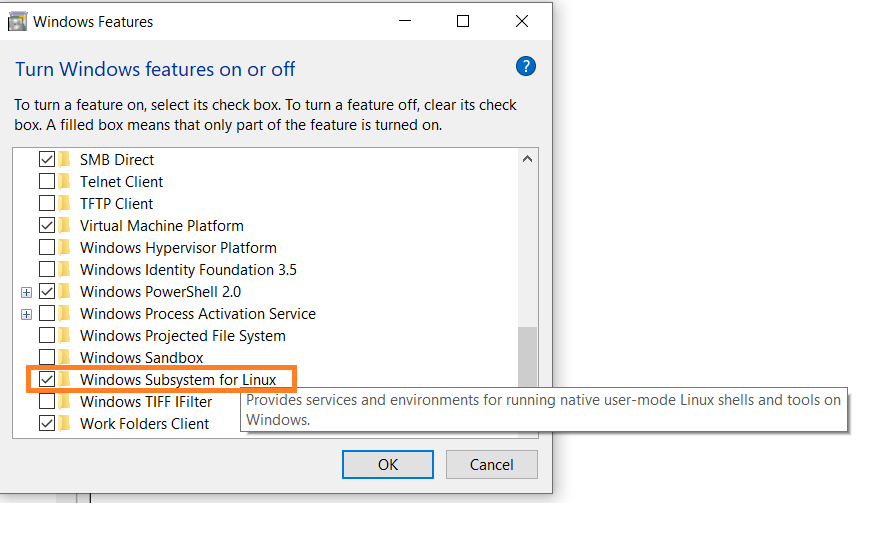

First, enable the Windows Subsystem for Linux option in settings.

Go to Start. Search for "Turn Windows features on or off."

Check the option "Windows Subsystem for Linux" if it isn't already.

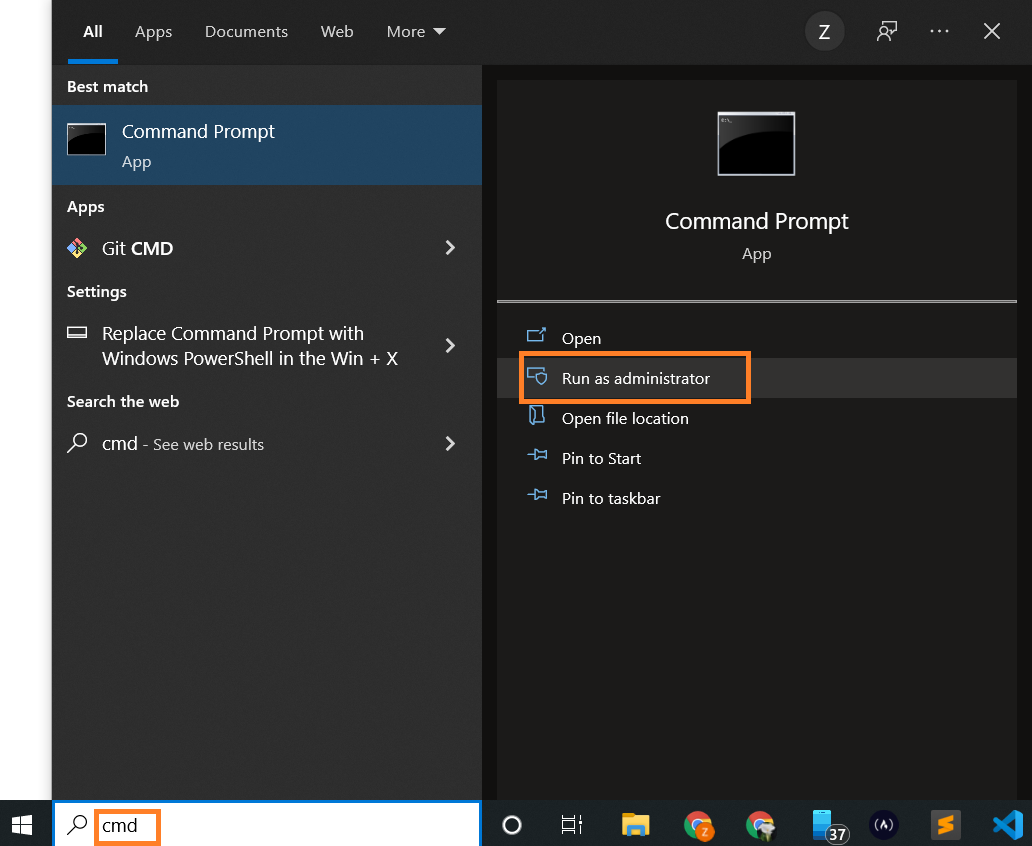

Next, open your command prompt and provide the installation commands.

Open Command Prompt as an administrator:

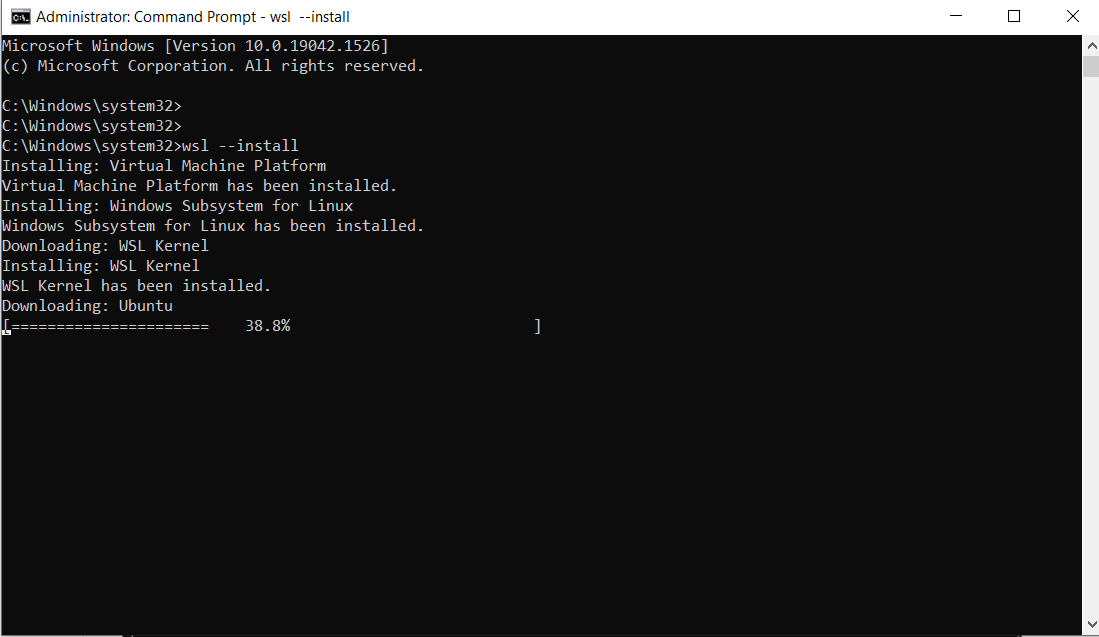

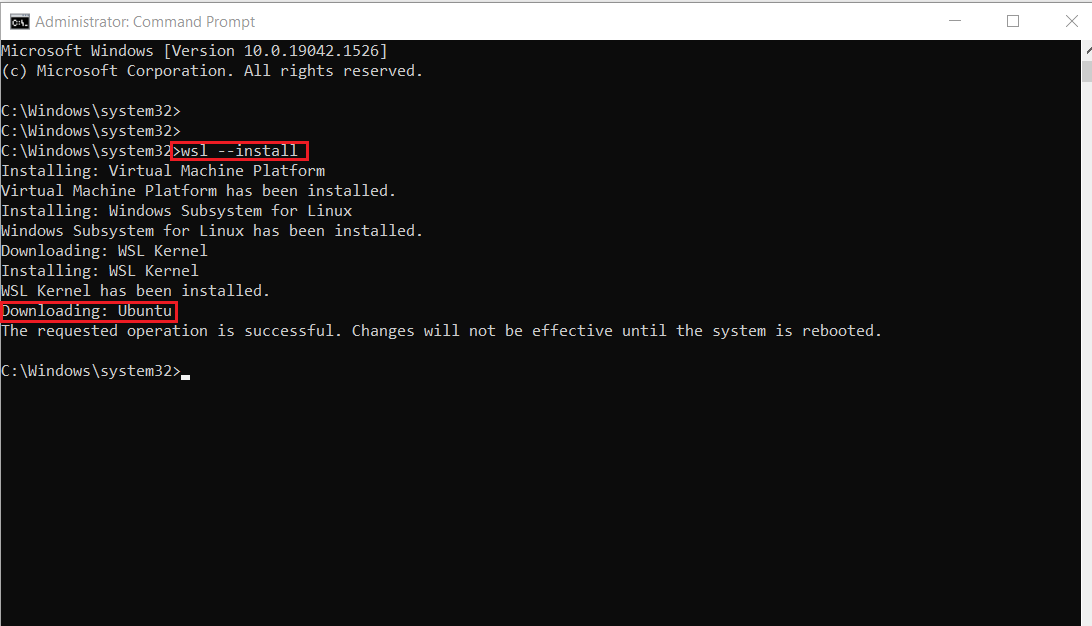

Run the command below:

wsl --install

This is the output:

Note: By default, Ubuntu will be installed.

- Once installation is complete, you'll need to reboot your Windows machine. So, restart your Windows machine.

After restarting, you might see a window like this:

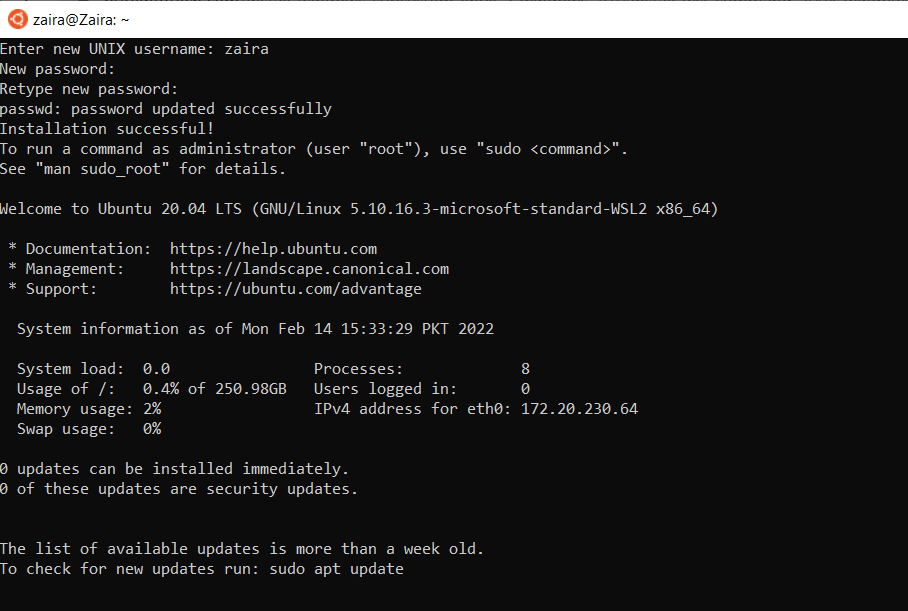

Once installation of Ubuntu is complete, you'll be prompted to enter your username and password.

And, that's it! You are ready to use Ubuntu.

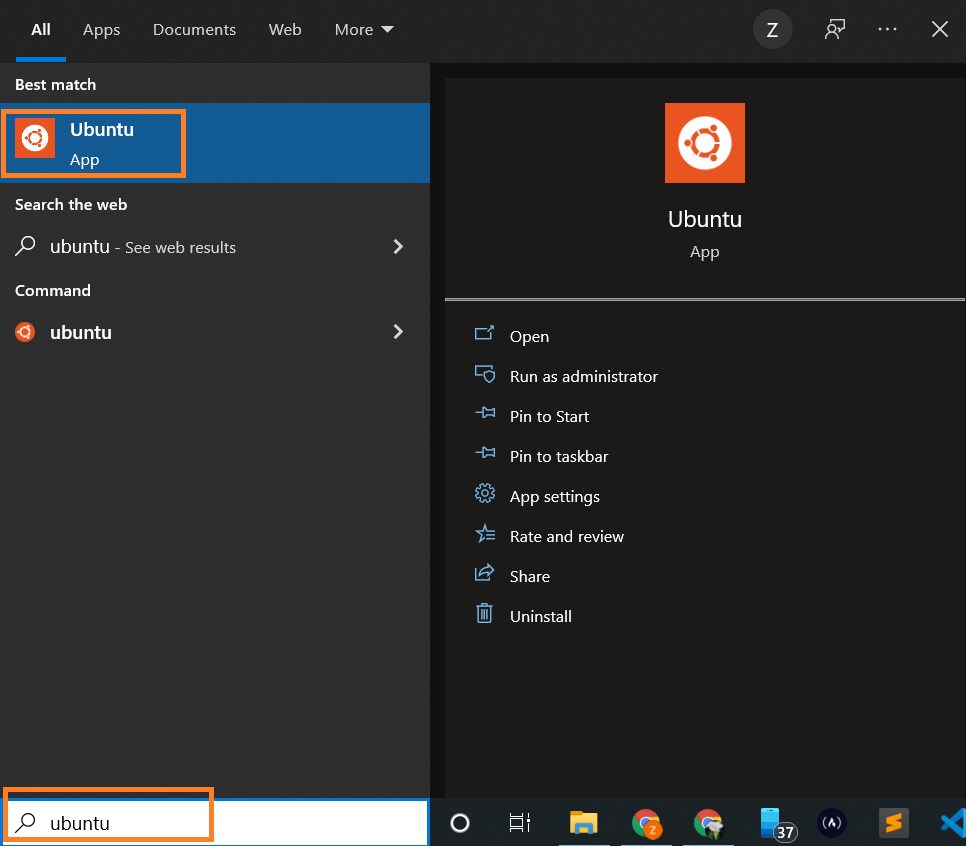

Launch Ubuntu by searching from the start menu.

And here we have your Ubuntu instance launched.

Option 3: Use a Virtual Machine (VM)

A virtual machine (VM) is a software emulation of a physical computer system. It allows you to run multiple operating systems and applications on a single physical machine simultaneously.

You can use virtualization software such as Oracle VirtualBox or VMware to create a virtual machine running Linux within your Windows environment. This allows you to run Linux as a guest operating system alongside Windows.

VM software provides options to allocate and manage hardware resources for each VM, including CPU cores, memory, disk space, and network bandwidth. You can adjust these allocations based on the requirements of the guest operating systems and applications.

Here are some of the common options available for virtualization:

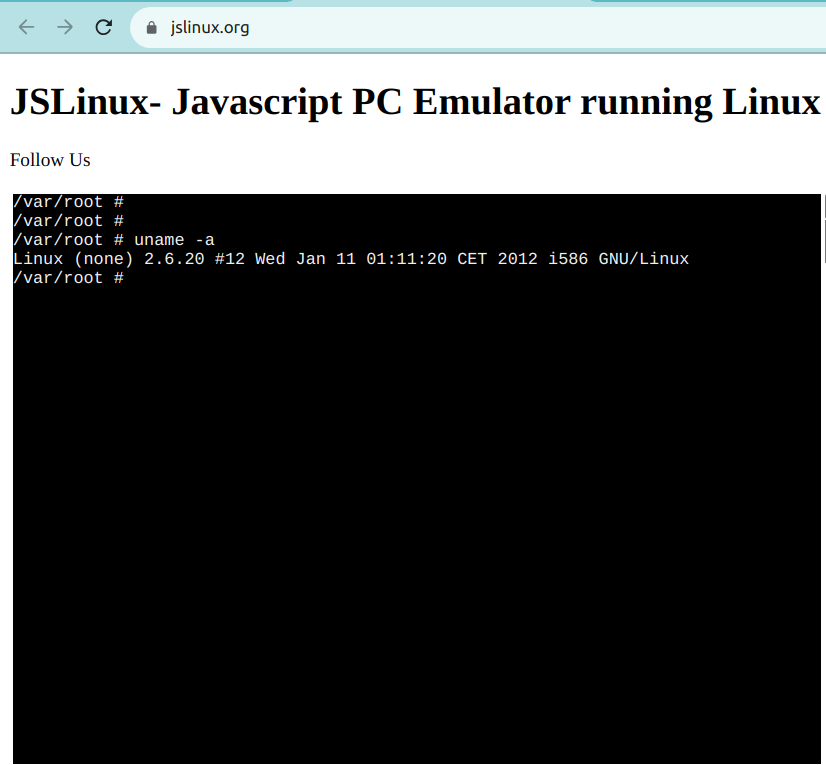

Option 4: Use a Browser-based Solution

Browser-based solutions are particularly useful for quick testing, learning, or accessing Linux environments from devices that don't have Linux installed.

You can either use online code editors or web-based terminals to access Linux. Note that you usually don't have full administration privileges in these cases.

Online code editors

Online code editors offer editors with built-in Linux terminals. While their primary purpose is coding, you can also utilize the Linux terminal to execute commands and perform tasks.

Replit is an example of an online code editor, where you can write your code and access the Linux shell at the same time.

Web-based Linux terminals:

Online Linux terminals allow you to access a Linux command-line interface directly from your browser. These terminals provide a web-based interface to a Linux shell, enabling you to execute commands and work with Linux utilities.

One such example is JSLinux. The screenshot below shows a ready-to-use Linux environment:

Option 5: Use a Cloud-based Solution

Instead of running Linux directly on your Windows machine, you can consider using cloud-based Linux environments or virtual private servers (VPS) to access and work with Linux remotely.

Services like Amazon EC2, Microsoft Azure, or DigitalOcean provide Linux instances that you can connect to from your Windows computer. Note that some of these services offer free tiers, but they are not usually free in the long run.

Part 2: Introduction to Bash Shell and System Commands

2.1. Getting Started with the Bash shell

Introduction to the bash shell

The Linux command line is provided by a program called the shell. Over the years, the shell program has evolved to cater to various options.

Different users can be configured to use different shells. But, most users prefer to stick with the current default shell. The default shell for many Linux distros is the GNU Bourne-Again Shell (bash). Bash is succeeded by the Bourne shell (sh).

To find out your current shell, open your terminal and enter the following command:

echo $SHELL

Command breakdown:

The

echocommand is used to print on the terminal.The

$SHELLis a special variable that holds the name of the current shell.

In my setup, the output is /bin/bash. This means that I am using the bash shell.

# output

echo $SHELL

/bin/bash

Bash is very powerful as it can simplify certain operations that are hard to accomplish efficiently with a GUI (or Graphical User Interface). Remember that most servers do not have a GUI, and it is best to learn to use the powers of a command line interface (CLI).

Terminal vs Shell

The terms "terminal" and "shell" are often used interchangeably, but they refer to different parts of the command-line interface.

The terminal is the interface you use to interact with the shell. The shell is the command interpreter that processes and executes your commands. You'll learn more about shells in Part 6 of the handbook.

What is a prompt?

When a shell is used interactively, it displays a $ when it is waiting for a command from the user. This is called the shell prompt.

[username@host ~]$

If the shell is running as root (you'll learn more about the root user later on), the prompt is changed to #.

[root@host ~]#

2.2. Command Structure

A command is a program that performs a specific operation. Once you have access to the shell, you can enter any command after the $ sign and see the output on the terminal.

Generally, Linux commands follow this syntax:

command [options] [arguments]

Here is the breakdown of the above syntax:

command: This is the name of the command you want to execute.ls(list),cp(copy), andrm(remove) are common Linux commands.[options]: Options, or flags, often preceded by a hyphen (-) or double hyphen (--), modify the behavior of the command. They can change how the command operates. For example,ls -auses the-aoption to display hidden files in the current directory.[arguments]: Arguments are the inputs for the commands that require one. These could be filenames, user names, or other data that the command will act upon. For example, in the commandcat access.log,catis the command andaccess.logis the input. As a result, thecatcommand displays the contents of theaccess.logfile.

Options and arguments are not required for all commands. Some commands can be run without any options or arguments, while others might require one or both to function correctly. You can always refer to the command's manual to check the options and arguments it supports.

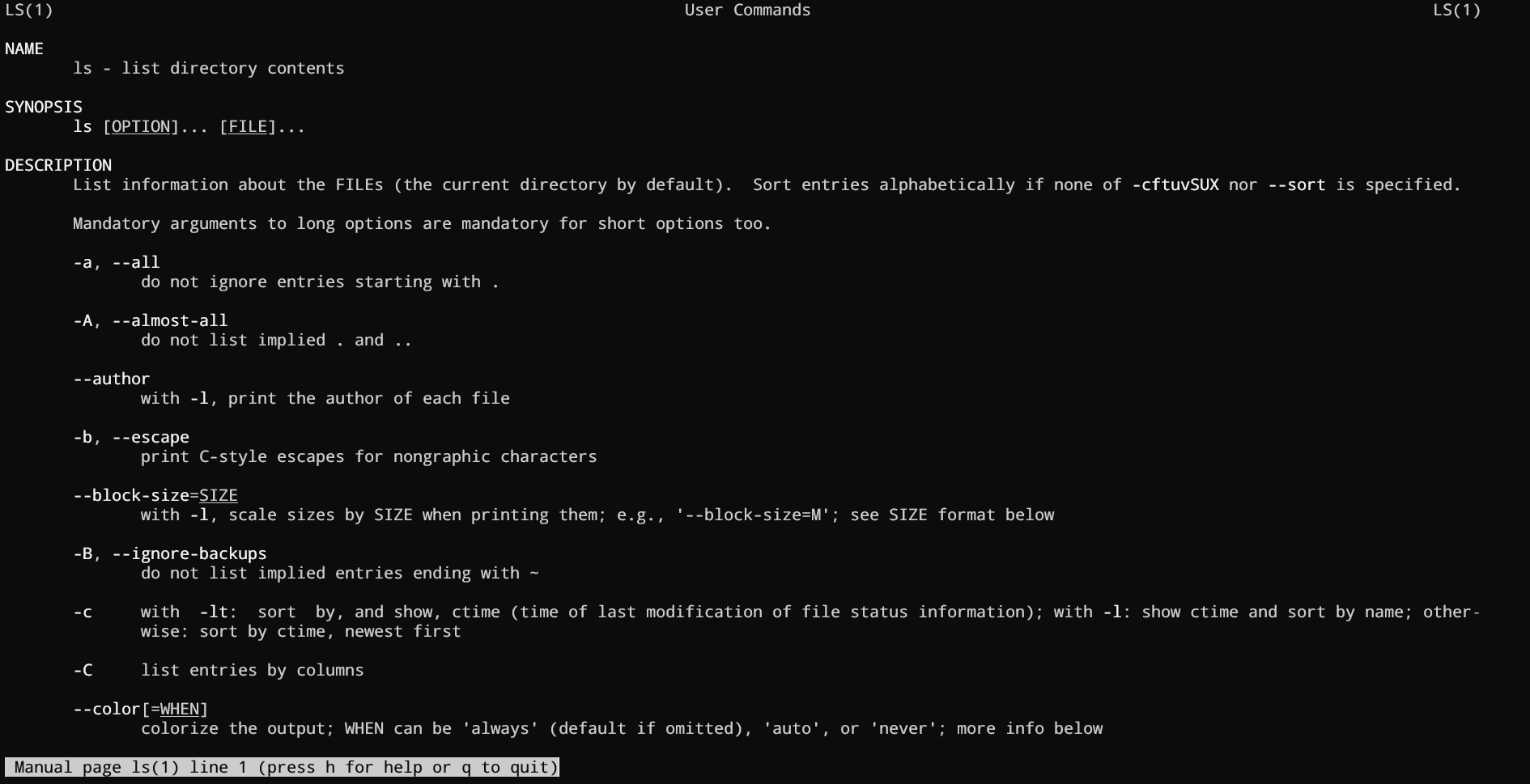

💡Tip: You can view a command's manual using the man command.

You can access the manual page for ls with man ls, and it'll look like this:

Manual pages are a great and quick way to access the documentation. I highly recommend going through man pages for the commands that you use the most.

2.3. Bash Commands and Keyboard Shortcuts

When you are in the terminal, you can speed up your tasks by using shortcuts.

Here are some of the most common terminal shortcuts:

| Operation | Shortcut |

| Look for the previous command | Up Arrow |

| Jump to the beginning of the previous word | Ctrl+LeftArrow |

| Clear characters from the cursor to the end of the command line | Ctrl+K |

| Complete commands, file names, and options | Pressing Tab |

| Jumps to the beginning of the command line | Ctrl+A |

| Displays the list of previous commands | history |

2.4. Identifying Yourself: The whoami Command

You can get the username you are logged in with by using the whoami command. This command is useful when you are switching between different users and want to confirm the current user.

Just after the $ sign, type whoami and press enter.

whoami

This is the output I got.

zaira@zaira-ThinkPad:~$ whoami

zaira

Part 3: Understanding Your Linux System

3.1. Discovering Your OS and Specs

Print system information using the uname Command

You can get detailed system information from the uname command.

When you provide the -a option, it prints all the system information.

uname -a

# output

Linux zaira 6.5.0-21-generic #21~22.04.1-Ubuntu SMP PREEMPT_DYNAMIC Fri Feb 9 13:32:52 UTC 2 x86_64 x86_64 x86_64 GNU/Linux

In the output above,

Linux: Indicates the operating system.zaira: Represents the hostname of the machine.6.5.0-21-generic #21~22.04.1-Ubuntu SMP PREEMPT_DYNAMIC Fri Feb 9 13:32:52 UTC 2: Provides information about the kernel version, build date, and some additional details.x86_64 x86_64 x86_64: Indicates the architecture of the system.GNU/Linux: Represents the operating system type.

Find details of the CPU architecture using the lscpu Command

The lscpu command in Linux is used to display information about the CPU architecture. When you run lscpu in the terminal, it provides details such as:

The architecture of the CPU (for example, x86_64)

CPU op-mode(s) (for example, 32-bit, 64-bit)

Byte Order (for example, Little Endian)

CPU(s) (number of CPUs), and so on

Let's try it out:

lscpu

# output

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Address sizes: 48 bits physical, 48 bits virtual

Byte Order: Little Endian

CPU(s): 12

On-line CPU(s) list: 0-11

Vendor ID: AuthenticAMD

Model name: AMD Ryzen 5 5500U with Radeon Graphics

Thread(s) per core: 2

Core(s) per socket: 6

Socket(s): 1

Stepping: 1

CPU max MHz: 4056.0000

CPU min MHz: 400.0000

That was a whole lot of information, but useful too! Remember you can always skim the relevant information using specific flags. See the command manual with man lscpu.

Part 4: Managing Files From the Command line

4.1. The Linux File-system Hierarchy

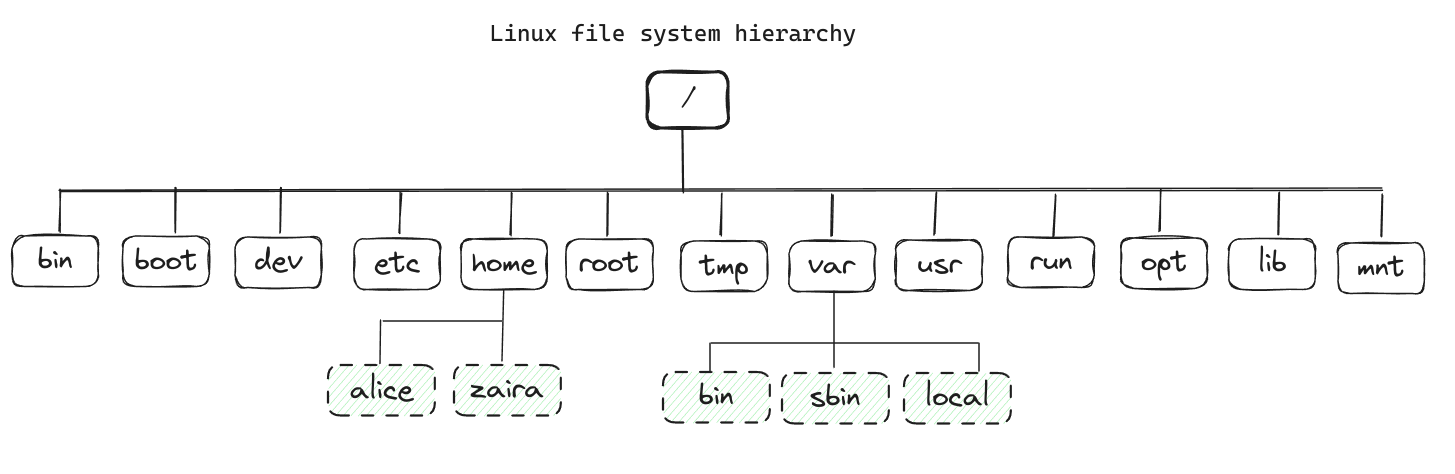

All files in Linux are stored in a file-system. It follows an inverted-tree-like structure because the root is at the topmost part.

The / is the root directory and the starting point of the file system. The root directory contains all other directories and files on the system. The / character also serves as a directory separator between path names. For example, /home/alice forms a complete path.

The image below shows the complete file system hierarchy. Each directory servers a specific purpose.

Note that this is not an exhaustive list and different distributions may have different configurations.

Here is a table that shows the purpose of each directory:

| Location | Purpose |

| /bin | Essential command binaries |

| /boot | Static files of the boot loader, needed in order to start the boot process. |

| /etc | Host-specific system configuration |

| /home | User home directories |

| /root | Home directory for the administrative root user |

| /lib | Essential shared libraries and kernel modules |

| /mnt | Mount point for mounting a filesystem temporarily |

| /opt | Add-on application software packages |

| /usr | Installed software and shared libraries |

| /var | Variable data that is also persistent between boots |

| /tmp | Temporary files that are accessible to all users |

💡 Tip: You can learn more about the file system using the man hier command.

You can check your file system using the tree -d -L 1 command. You can modify the -L flag to change the depth of the tree.

tree -d -L 1

# output

.

├── bin -> usr/bin

├── boot

├── cdrom

├── data

├── dev

├── etc

├── home

├── lib -> usr/lib

├── lib32 -> usr/lib32

├── lib64 -> usr/lib64

├── libx32 -> usr/libx32

├── lost+found

├── media

├── mnt

├── opt

├── proc

├── root

├── run

├── sbin -> usr/sbin

├── snap

├── srv

├── sys

├── tmp

├── usr

└── var

25 directories

This list is not exhaustive and different distributions and systems may be configured differently.

4.2. Navigating the Linux File-system

Absolute path vs relative path

The absolute path is the full path from the root directory to the file or directory. It always starts with a /. For example, /home/john/documents.

The relative path, on the other hand, is the path from the current directory to the destination file or directory. It does not start with a /. For example, documents/work/project.

Locating your current directory using the pwd command

It is easy to lose your way in the Linux file system, especially if you are new to the command line. You can locate your current directory using the pwd command.

Here is an example:

pwd

# output

/home/zaira/scripts/python/free-mem.py

Changing directories using the cd command

The command to change directories is cd and it stands for "change directory". You can use the cd command to navigate to a different directory.

You can use a relative path or an absolute path.

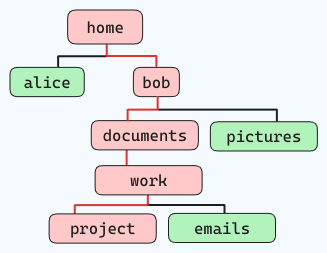

For example, if you want to navigate the below file structure (following the red lines):

and you are standing at "home", the command would be like this:

cd home/bob/documents/work/project

Some other commonly used cd shortcuts are:

| Command | Description |

cd .. | Go back one directory |

cd ../.. | Go back two directories |

cd or cd ~ | Go to the home directory |

cd - | Go to the previous path |

4.3. Managing Files and Directories

When working with files and directories, you might want to copy, move, remove, and create new files and directories. Here are some commands that can help you with that.

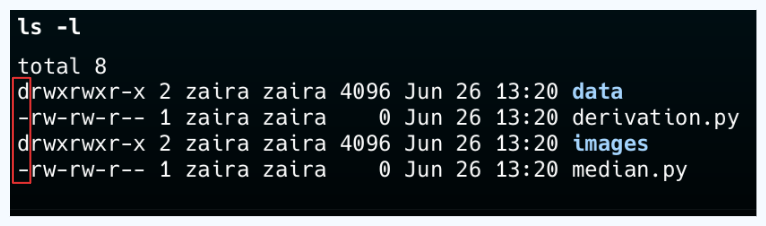

💡Tip: You can differentiate between a file and folder by looking at the first letter in the output of ls -l. A'-' represents a file and a 'd' represents a folder.

Creating new directories using the mkdir command

You can create an empty directory using the mkdir command.

# creates an empty directory named "foo" in the current folder

mkdir foo

You can also create directories recursively using the -p option.

mkdir -p tools/index/helper-scripts

# output of tree

.

└── tools

└── index

└── helper-scripts

3 directories, 0 files

Creating new files using the touch command

The touch command creates an empty file. You can use it like this:

# creates empty file "file.txt" in the current folder

touch file.txt

The file names can be chained together if you want to create multiple files in a single command.

# creates empty files "file1.txt", "file2.txt", and "file3.txt" in the current folder

touch file1.txt file2.txt file3.txt

Removing files and directories using the rm and rmdir command

You can use the rm command to remove both files and non-empty directories.

| Command | Description |

rm file.txt | Removes the file file.txt |

rm -r directory | Removes the directory directory and its contents |

rm -f file.txt | Removes the file file.txt without prompting for confirmation |

rmdir directory | Removes an empty directory |

🛑 Note that you should use the -f flag with caution as you won't be asked before deleting a file. Also, be careful when running rm commands in the root folder as it might result in deleting important system files.

Copying files using the cp command

To copy files in Linux, use the cp command.

- Syntax to copy files:

cp source_file destination_of_file

This command copies a file named file1.txt to a new file location /home/adam/logs.

cp file1.txt /home/adam/logs

The cp command also creates a copy of one file with the provided name.

This command copies a file named file1.txt to another file named file2.txt in the same folder.

cp file1.txt file2.txt

Moving and renaming files and folders using the mv command

The mv command is used to move files and folders from one directory to the other.

Syntax to move files:mv source_file destination_directory

Example: Move a file named file1.txt to a directory named backup:

mv file1.txt backup/

To move a directory and its contents:

mv dir1/ backup/

Renaming files and folders in Linux is also done with the mv command.

Syntax to rename files:mv old_name new_name

Example: Rename a file from file1.txt to file2.txt:

mv file1.txt file2.txt

Rename a directory from dir1 to dir2:

mv dir1 dir2

4.4. Locating Files and Folders Using the find Command

The find command lets you efficiently search for files, folders, and character and block devices.

Below is the basic syntax of the find command:

find /path/ -type f -name file-to-search

Where,

/pathis the path where the file is expected to be found. This is the starting point for searching files. The path can also be/or.which represents the root and current directory, respectively.-typerepresents the file descriptors. They can be any of the below:

f– Regular file such as text files, images, and hidden files.

d– Directory. These are the folders under consideration.

l– Symbolic link. Symbolic links point to files and are similar to shortcuts.

c– Character devices. Files that are used to access character devices are called character device files. Drivers communicate with character devices by sending and receiving single characters (bytes, octets). Examples include keyboards, sound cards, and the mouse.

b– Block devices. Files that are used to access block devices are called block device files. Drivers communicate with block devices by sending and receiving entire blocks of data. Examples include USB and CD-ROM-nameis the name of the file type that you want to search.

How to search files by name or extension

Suppose we need to find files that contain "style" in their name. We'll use this command:

find . -type f -name "style*"

#output

./style.css

./styles.css

Now let's say we want to find files with a particular extension like .html. We'll modify the command like this:

find . -type f -name "*.html"

# output

./services.html

./blob.html

./index.html

How to search hidden files

A dot at the beginning of the filename represents hidden files. They are normally hidden but can be viewed with ls -a in the current directory.

We can modify the find command as shown below to search for hidden files:

find . -type f -name ".*"

List and find hidden files

ls -la

# folder contents

total 5

drwxrwxr-x 2 zaira zaira 4096 Mar 26 14:17 .

drwxr-x--- 61 zaira zaira 4096 Mar 26 14:12 ..

-rw-rw-r-- 1 zaira zaira 0 Mar 26 14:17 .bash_history

-rw-rw-r-- 1 zaira zaira 0 Mar 26 14:17 .bash_logout

-rw-rw-r-- 1 zaira zaira 0 Mar 26 14:17 .bashrc

find . -type f -name ".*"

# find output

./.bash_logout

./.bashrc

./.bash_history

Above you can see a list of hidden files in my home directory.

How to search log files and configuration files

Log files usually have the extension .log, and we can find them like this:

find . -type f -name "*.log"

Similarly, we can search for configuration files like this:

find . -type f -name "*.conf"

How to search other files by type

We can search for character block files by providing c to -type:

find / -type c

Similarly, we can find device block files by using b:

find / -type b

How to search directories

In the example below, we are finding the folders using the -type d flag.

ls -l

# list folder contents

drwxrwxr-x 2 zaira zaira 4096 Mar 26 14:22 hosts

-rw-rw-r-- 1 zaira zaira 0 Mar 26 14:23 hosts.txt

drwxrwxr-x 2 zaira zaira 4096 Mar 26 14:22 images

drwxrwxr-x 2 zaira zaira 4096 Mar 26 14:23 style

drwxrwxr-x 2 zaira zaira 4096 Mar 26 14:22 webp

find . -type d

# find directory output

.

./webp

./images

./style

./hosts

How to search files by size

An incredibly helpful use of the find command is to list files based on a particular size.

find / -size +250M

Here, we are listing files whose size exceeds 250MB.

Other units include:

G: GigaBytes.M: MegaBytes.K: KiloBytesc: bytes.

Just replace with the relevant unit.

find <directory> -type f -size +N<Unit Type>

How to search files by modification time

By using the -mtime flag, you can filter files and folders based on the modification time.

find /path -name "*.txt" -mtime -10

For example,

-mtime +10 means you are looking for a file modified 10 days ago.

-mtime -10 means less than 10 days.

-mtime 10 If you skip + or – it means exactly 10 days.

4.5. Basic Commands for Viewing Files

Concatenate and display files using the cat command

The cat command in Linux is used to display the contents of a file. It can also be used to concatenate files and create new files.

Here is the basic syntax of the cat command:

cat [options] [file]

The simplest way to use cat is without any options or arguments. This will display the contents of the file on the terminal.

For example, if you want to view the contents of a file named file.txt, you can use the following command:

cat file.txt

This will display all the contents of the file on the terminal at once.

Viewing text files interactively using less and more

While cat displays the entire file at once, less and more allow you to view the contents of a file interactively. This is useful when you want to scroll through a large file or search for specific content.

The syntax of the less command is:

less [options] [file]

The more command is similar to less but has fewer features. It is used to display the contents of a file one screen at a time.

The syntax of the more command is:

more [options] [file]

For both commands, you can use the spacebar to scroll one page down, the Enter key to scroll one line down, and the q key to exit the viewer.

To move backward you can use the b key, and to move forward you can use the f key.

Displaying the last part of files using tail

Sometimes you might need to view just the last few lines of a file instead of the entire file. The tail command in Linux is used to display the last part of a file.

For example, tail file.txt will display the last 10 lines of the file file.txt by default.

If you want to display a different number of lines, you can use the -n option followed by the number of lines you want to display.

# Display the last 50 lines of the file file.txt

tail -n 50 file.txt

💡Tip: Another usage of the tail is its follow-along (-f) option. This option enables you to view the contents of a file as they are being written. This is a useful utility for viewing and monitoring log files in real-time.

Displaying the beginning of files using head

Just like tail displays the last part of a file, you can use the head command in Linux to display the beginning of a file.

For example, head file.txt will display the first 10 lines of the file file.txt by default.

To change the number of lines displayed, you can use the -n option followed by the number of lines you want to display.

Counting words, lines, and characters using wc

You can count words, lines and characters in a file using the wc command.

For example, running wc syslog.log gave me the following output:

1669 9623 64367 syslog.log

In the output above,

1669represents the number of lines in the filesyslog.log.9623represents the number of words in the filesyslog.log.64367represents the number of characters in the filesyslog.log.

So, the command wc syslog.log counted 1669 lines, 9623 words, and 64367 characters in the file syslog.log.

Comparing files line by line using diff

Comparing and finding differences between two files is a common task in Linux. You can compare two files right within the command line using the diff command.

The basic syntax of the diff command is:

diff [options] file1 file2

Here are two files, hello.py and also-hello.py, that we will compare using the diff command:

# contents of hello.py

def greet(name):

return f"Hello, {name}!"

user = input("Enter your name: ")

print(greet(user))

# contents of also-hello.py

more also-hello.py

def greet(name):

return fHello, {name}!

user = input(Enter your name: )

print(greet(user))

print("Nice to meet you")

- Check whether the files are the same or not

diff -q hello.py also-hello.py

# Output

Files hello.py and also-hello.py differ

- See how the files differ. For that, you can use the

-uflag to see a unified output:

diff -u hello.py also-hello.py

--- hello.py 2024-05-24 18:31:29.891690478 +0500

+++ also-hello.py 2024-05-24 18:32:17.207921795 +0500

@@ -3,4 +3,5 @@

user = input(Enter your name: )

print(greet(user))

+print("Nice to meet you")

In the above output:

--- hello.py 2024-05-24 18:31:29.891690478 +0500indicates the file being compared and its timestamp.+++ also-hello.py 2024-05-24 18:32:17.207921795 +0500indicates the other file being compared and its timestamp.@@ -3,4 +3,5 @@shows the line numbers where the changes occur. In this case, it indicates that lines 3 to 4 in the original file have changed to lines 3 to 5 in the modified file.user = input(Enter your name: )is a line from the original file.print(greet(user))is another line from the original file.+print("Nice to meet you")is the additional line in the modified file.

- To see the diff in a side-by-side format, you can use the

-yflag:

diff -y hello.py also-hello.py

# Output

def greet(name): def greet(name):

return fHello, {name}! return fHello, {name}!

user = input(Enter your name: ) user = input(Enter your name: )

print(greet(user)) print(greet(user))

> print("Nice to meet you")

In the output:

The lines that are the same in both files are displayed side by side.

Lines that are different are shown with a

>symbol indicating the line is only present in one of the files.

Part 5: The Essentials of Text Editing in Linux

Text editing skills using the command line are one of the most crucial skills in Linux. In this section, you will learn how to use two popular text editors in Linux: Vim and Nano.

I suggest that you master any one text editor of your choice and stick to it. It will save you time and make you more productive. Vim and nano are safe choices as they are present on most Linux distributions.

5.1. Mastering Vim: The Complete Guide

Introduction to Vim

Vim is a popular text editing tool for the command line. Vim comes with its advantages: it is powerful, customizable, and fast. Here are some reasons why you should consider learning Vim:

Most servers are accessed via a CLI, so in system administration, you don't necessarily have the luxury of a GUI. But Vim has got your back – it'll always be there.

Vim uses a keyboard-centric approach, as it is designed to be used without a mouse, which can significantly speed up editing tasks once you have learned the keyboard shortcuts. This also makes it faster than GUI tools.

Some Linux utilities, for example editing cron jobs, work in the same editing format as Vim.

Vim is suitable for all – beginners and advanced users. Vim supports complex string searches, highlighting searches, and much more. Through plugins, Vim provides extended capabilities to developers and system admins that includes code completion, syntax highlighting, file management, version control, and more.

Vim has two variations: Vim (vim) and Vim tiny (vi). Vim tiny is a smaller version of Vim that lacks some features of Vim.

How to start using vim

Start using Vim with this command:

vim your-file.txt

your-file.txt can either be a new file or an existing file that you want to edit.

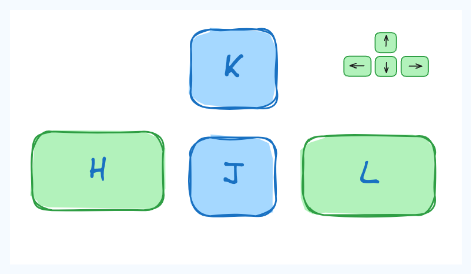

Navigating Vim: Mastering movement and command modes

In the early days of the CLI, the keyboards didn't have arrow keys. Hence, navigation was done using the set of available keys, hjkl being one of them.

Being keyboard-centric, using hjkl keys can greatly speed up text editing tasks.

Note: Although arrow keys would work totally fine, you can still experiment with hjkl keys to navigate. Some people find this this way of navigation efficient.

💡Tip: To remember the hjkl sequence, use this: hang back, jump down, kick up, leap forward.

The three Vim modes

You need to know the 3 operating modes of Vim and how to switch between them. Keystrokes behave differently in each command mode. The three modes are as follows:

Command mode.

Edit mode.

Visual mode.

Command Mode. When you start Vim, you land in the command mode by default. This mode allows you to access other modes.

⚠ To switch to other modes, you need to be present in the command mode first

Edit Mode

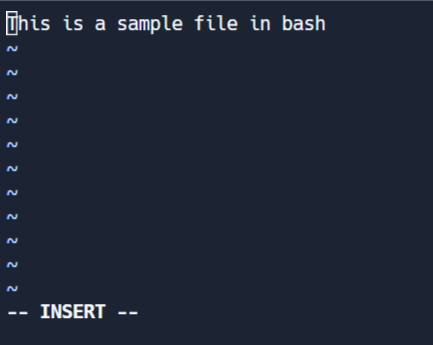

This mode allows you to make changes to the file. To enter edit mode, press I while in command mode. Note the '-- INSERT' switch at the end of the screen.

Visual mode

This mode allows you to work on a single character, a block of text, or lines of text. Let's break it down into simple steps. Remember, use the below combinations when in command mode.

Shift + V→ Select multiple lines.Ctrl + V→ Block modeV→ Character mode

The visual mode comes in handy when you need to copy and paste or edit lines in bulk.

Extended command mode.

The extended command mode allows you to perform advanced operations like searching, setting line numbers, and highlighting text. We'll cover extended mode in the next section.

How to stay on track? If you forget your current mode, just press ESC twice and you will be back in Command Mode.

Editing Efficiently in Vim: Copy/pasting and searching

1. How to copy and paste in Vim

Copy-paste is known as 'yank' and 'put' in Linux terms. To copy-paste, follow these steps:

Select text in visual mode.

Press

'y'to copy/ yank.Move your cursor to the required position and press

'p'.

2. How to search for text in Vim

Any series of strings can be searched with Vim using the / in command mode. To search, use /string-to-match.

In the command mode, type :set hls and press enter. Search using /string-to-match. This will highlight the searches.

Let's search a few strings:

3. How to exit Vim

First, move to command mode (by pressing escape twice) and then use these flags:

Exit without saving →

:q!Exit and save →

:wq!

Shortcuts in Vim: Making Editing Faster

Note: All these shortcuts work in the command mode only.

Basic Navigation

h: Move leftj: Move downk: Move upl: Move right0: Move to the beginning of the line$: Move to the end of the linegg: Move to the beginning of the fileG: Move to the end of the fileCtrl+d: Move half-page downCtrl+u: Move half-page up

Editing

i: Enter insert mode before the cursorI: Enter insert mode at the beginning of the linea: Enter insert mode after the cursorA: Enter insert mode at the end of the lineo: Open a new line below the current line and enter insert modeO: Open a new line above the current line and enter insert modex: Delete the character under the cursordd: Delete the current lineyy: Yank (copy) the current line (use this in visual mode)p: Paste below the cursorP: Paste above the cursor

Searching and Replacing

/: Search for a pattern which will take you to its next occurrence?: Search for a pattern that will take you to its previous occurrencen: Repeat the last search in the same directionN: Repeat the last search in the opposite direction:%s/old/new/g: Replace all occurrences ofoldwithnewin the file

Exiting

:w: Save the file but don't exit:q: Quit Vim (fails if there are unsaved changes):wqor:x: Save and quit:q!: Quit without saving

Multiple Windows

:splitor:sp: Split the window horizontally:vsplitor:vsp: Split the window verticallyCtrl+w followed by h/j/k/l: Navigate between split windows

5.2. Mastering Nano

Getting started with Nano: The user-friendly text editor

Nano is a user-friendly text editor that is easy to use and is perfect for beginners. It is pre-installed on most Linux distributions.

To create a new file using Nano, use the following command:

nano

To start editing an existing file with Nano, use the following command:

nano filename

List of key bindings in Nano

Let's study the most important key bindings in Nano. You'll use the key bindings to perform various operations like saving, exiting, copying, pasting, and more.

Write to a file and save

Once you open Nano using the nano command, you can start writing text. To save the file, press Ctrl+O. You'll be prompted to enter the file name. Press Enter to save the file.

Exit nano

You can exit Nano by pressing Ctrl+X. If you have unsaved changes, Nano will prompt you to save the changes before exiting.

Copying and pasting

To select a region, use ALT+A. A marker will show. Use arrows to select the text. Once selected, exit the marker with with ALT+^.

To copy the selected text, press Ctrl+K. To paste the copied text, press Ctrl+U.

Cutting and pasting

Select the region with ALT+A. Once selected, cut the text with Ctrl+K. To paste the cut text, press Ctrl+U.

Navigation

Use Alt \ to move to the beginning of the file.

Use Alt / to move to the end of the file.

Viewing line numbers

When you open a file with nano -l filename, you can view line numbers on the left side of the file.

Searching

You can search for a specific line number with ALt + G. Enter the line number to the prompt and press Enter.

You can also initiate search for a string with CTRL + W and press Enter. If you want to search backwards, you can press Alt+W after initiating the search with Ctrl+W.

Summary of keybindings in Nano

General

Ctrl+X: Exit Nano (prompting to save if changes are made)Ctrl+O: Save the fileCtrl+R: Read a file into the current fileCtrl+G: Display the help text

Editing

Ctrl+K: Cut the current line and store it in the cutbufferCtrl+U: Paste the contents of the cutbuffer into the current lineAlt+6: Copy the current line and store it in the cutbufferCtrl+J: Justify the current paragraph

Navigation

Ctrl+A: Move to the beginning of the lineCtrl+E: Move to the end of the lineCtrl+C: Display the current line number and file informationCtrl+_(Ctrl+Shift+-): Go to a specific line (and optionally, column) numberCtrl+Y: Scroll up one pageCtrl+V: Scroll down one page

Search and Replace

Ctrl+W: Search for a string (thenEnterto search again)Alt+W: Repeat the last search but in the opposite directionCtrl+\: Search and replace

Miscellaneous

Ctrl+T: Invoke the spell checker, if availableCtrl+D: Delete the character under the cursor (does not cut it)Ctrl+L: Refresh (redraw) the current screenAlt+U: Undo the last operationAlt+E: Redo the last undone operation

Part 6: Bash Scripting

6.1. Definition of Bash scripting

A bash script is a file containing a sequence of commands that are executed by the bash program line by line. It allows you to perform a series of actions, such as navigating to a specific directory, creating a folder, and launching a process using the command line.

By saving commands in a script, you can repeat the same sequence of steps multiple times and execute them by running the script.

6.2. Advantages of Bash Scripting

Bash scripting is a powerful and versatile tool for automating system administration tasks, managing system resources, and performing other routine tasks in Unix/Linux systems.

Some advantages of shell scripting are:

Automation: Shell scripts allow you to automate repetitive tasks and processes, saving time and reducing the risk of errors that can occur with manual execution.

Portability: Shell scripts can be run on various platforms and operating systems, including Unix, Linux, macOS, and even Windows through the use of emulators or virtual machines.

Flexibility: Shell scripts are highly customizable and can be easily modified to suit specific requirements. They can also be combined with other programming languages or utilities to create more powerful scripts.

Accessibility: Shell scripts are easy to write and don't require any special tools or software. They can be edited using any text editor, and most operating systems have a built-in shell interpreter.

Integration: Shell scripts can be integrated with other tools and applications, such as databases, web servers, and cloud services, allowing for more complex automation and system management tasks.

Debugging: Shell scripts are easy to debug, and most shells have built-in debugging and error-reporting tools that can help identify and fix issues quickly.

6.3. Overview of Bash Shell and Command Line Interface

The terms "shell" and "bash" are often used interchangeably. But there is a subtle difference between the two.

The term "shell" refers to a program that provides a command-line interface for interacting with an operating system. Bash (Bourne-Again SHell) is one of the most commonly used Unix/Linux shells and is the default shell in many Linux distributions.

Till now, the commands that you have been entering were basically being entered in a "shell".

Although Bash is a type of shell, there are other shells available as well, such as Korn shell (ksh), C shell (csh), and Z shell (zsh). Each shell has its own syntax and set of features, but they all share the common purpose of providing a command-line interface for interacting with the operating system.

You can determine your shell type using the ps command:

ps

# output:

PID TTY TIME CMD

20506 pts/0 00:00:00 bash <--- the shell type

20931 pts/0 00:00:00 ps

In summary, while "shell" is a broad term that refers to any program that provides a command-line interface, "Bash" is a specific type of shell that is widely used in Unix/Linux systems.

Note: In this section, we will be using the "bash" shell.

6.4. How to Create and Execute Bash scripts

Script naming conventions

By naming convention, bash scripts end with .sh. However, bash scripts can run perfectly fine without the sh extension.

Adding the Shebang

Bash scripts start with a shebang. Shebang is a combination of bash # and bang ! followed by the bash shell path. This is the first line of the script. Shebang tells the shell to execute it via bash shell. Shebang is simply an absolute path to the bash interpreter.

Below is an example of the shebang statement.

#!/bin/bash

You can find your bash shell path (which may vary from the above) using the command:

which bash

Creating your first bash script

Our first script prompts the user to enter a path. In return, its contents will be listed.

Create a file named run_all.sh using any editor of your choice.

vim run_all.sh

Add the following commands in your file and save it:

#!/bin/bash

echo "Today is " `date`

echo -e "\nenter the path to directory"

read the_path

echo -e "\n you path has the following files and folders: "

ls $the_path

Let's take a deeper look at the script line by line. I am displaying the same script again, but this time with line numbers.

1 #!/bin/bash

2 echo "Today is " `date`

3

4 echo -e "\nenter the path to directory"

5 read the_path

6

7 echo -e "\n you path has the following files and folders: "

8 ls $the_path

Line #1: The shebang (

#!/bin/bash) points toward the bash shell path.Line #2: The

echocommand displays the current date and time on the terminal. Note that thedateis in backticks.Line #4: We want the user to enter a valid path.

Line #5: The

readcommand reads the input and stores it in the variablethe_path.line #8: The

lscommand takes the variable with the stored path and displays the current files and folders.

Executing the bash script

To make the script executable, assign execution rights to your user using this command:

chmod u+x run_all.sh

Here,

chmodmodifies the ownership of a file for the current user :u.+xadds the execution rights to the current user. This means that the user who is the owner can now run the script.run_all.shis the file we wish to run.

You can run the script using any of the mentioned methods:

sh run_all.shbash run_all.sh./run_all.sh

Let's see it running in action 🚀

6.5. Bash Scripting Basics

Comments in bash scripting

Comments start with a # in bash scripting. This means that any line that begins with a # is a comment and will be ignored by the interpreter.

Comments are very helpful in documenting the code, and it is a good practice to add them to help others understand the code.

These are examples of comments:

# This is an example comment

# Both of these lines will be ignored by the interpreter

Variables and data types in Bash

Variables let you store data. You can use variables to read, access, and manipulate data throughout your script.

There are no data types in Bash. In Bash, a variable is capable of storing numeric values, individual characters, or strings of characters.

In Bash, you can use and set the variable values in the following ways:

- Assign the value directly:

country=Netherlands

2. Assign the value based on the output obtained from a program or command, using command substitution. Note that $ is required to access an existing variable's value.

same_country=$country

This assigns the value of country to the new variable same_country.

To access the variable value, append $ to the variable name.

country=Netherlands

echo $country

# output

Netherlands

new_country=$country

echo $new_country

# output

Netherlands

Above, you can see an example of assigning and printing variable values.

Variable naming conventions

In Bash scripting, the following are the variable naming conventions:

Variable names should start with a letter or an underscore (

_).Variable names can contain letters, numbers, and underscores (

_).Variable names are case-sensitive.

Variable names should not contain spaces or special characters.

Use descriptive names that reflect the purpose of the variable.

Avoid using reserved keywords, such as

if,then,else,fi, and so on as variable names.

Here are some examples of valid variable names in Bash:

name

count

_var

myVar

MY_VAR

And here are some examples of invalid variable names:

# invalid variable names

2ndvar (variable name starts with a number)

my var (variable name contains a space)

my-var (variable name contains a hyphen)

Following these naming conventions helps make Bash scripts more readable and easier to maintain.

Input and output in Bash scripts

Gathering input

In this section, we'll discuss some methods to provide input to our scripts.

- Reading the user input and storing it in a variable

We can read the user input using the read command.

#!/bin/bash

echo "What's your name?"

read entered_name

echo -e "\nWelcome to bash tutorial" $entered_name

2. Reading from a file

This code reads each line from a file named input.txt and prints it to the terminal. We'll study while loops later in this section.

while read line

do

echo $line

done < input.txt

3. Command line arguments

In a bash script or function, $1 denotes the initial argument passed, $2 denotes the second argument passed, and so forth.

This script takes a name as a command-line argument and prints a personalized greeting.

#!/bin/bash

echo "Hello, $1!"

We have supplied Zaira as our argument to the script.

Output:

Displaying output

Here we'll discuss some methods to receive output from the scripts.

- Printing to the terminal:

echo "Hello, World!"

This prints the text "Hello, World!" to the terminal.

2. Writing to a file:

echo "This is some text." > output.txt

This writes the text "This is some text." to a file named output.txt. Note that the > operator overwrites a file if it already has some content.

3. Appending to a file:

echo "More text." >> output.txt

This appends the text "More text." to the end of the file output.txt.

4. Redirecting output:

ls > files.txt

This lists the files in the current directory and writes the output to a file named files.txt. You can redirect output of any command to a file this way.

You'll learn about output redirection in detail in section 8.5.

Conditional statements (if/else)

Expressions that produce a boolean result, either true or false, are called conditions. There are several ways to evaluate conditions, including if, if-else, if-elif-else, and nested conditionals.

Syntax:

if [[ condition ]];

then

statement

elif [[ condition ]]; then

statement

else

do this by default

fi

Syntax of bash conditional statements

We can use logical operators such as AND -a and OR -o to make comparisons that have more significance.

if [ $a -gt 60 -a $b -lt 100 ]

This statement checks if both conditions are true: a is greater than 60 AND b is less than 100.

Let's see an example of a Bash script that uses if, if-else, and if-elif-else statements to determine if a user-inputted number is positive, negative, or zero:

#!/bin/bash

# Script to determine if a number is positive, negative, or zero

echo "Please enter a number: "

read num

if [ $num -gt 0 ]; then

echo "$num is positive"

elif [ $num -lt 0 ]; then

echo "$num is negative"

else

echo "$num is zero"

fi

The script first prompts the user to enter a number. Then, it uses an if statement to check if the number is greater than 0. If it is, the script outputs that the number is positive. If the number is not greater than 0, the script moves on to the next statement, which is an if-elif statement.

Here, the script checks if the number is less than 0. If it is, the script outputs that the number is negative.

Finally, if the number is neither greater than 0 nor less than 0, the script uses an else statement to output that the number is zero.

Seeing it in action 🚀

Looping and branching in Bash

While loop

While loops check for a condition and loop until the condition remains true. We need to provide a counter statement that increments the counter to control loop execution.

In the example below, (( i += 1 )) is the counter statement that increments the value of i. The loop will run exactly 10 times.

#!/bin/bash

i=1

while [[ $i -le 10 ]] ; do

echo "$i"

(( i += 1 ))

done

For loop

The for loop, just like the while loop, allows you to execute statements a specific number of times. Each loop differs in its syntax and usage.

In the example below, the loop will iterate 5 times.

#!/bin/bash

for i in {1..5}

do

echo $i

done

Case statements

In Bash, case statements are used to compare a given value against a list of patterns and execute a block of code based on the first pattern that matches. The syntax for a case statement in Bash is as follows:

case expression in

pattern1)

# code to execute if expression matches pattern1

;;

pattern2)

# code to execute if expression matches pattern2

;;

pattern3)

# code to execute if expression matches pattern3

;;

*)

# code to execute if none of the above patterns match expression

;;

esac

Here, "expression" is the value that we want to compare, and "pattern1", "pattern2", "pattern3", and so on are the patterns that we want to compare it against.

The double semicolon ";;" separates each block of code to execute for each pattern. The asterisk "*" represents the default case, which executes if none of the specified patterns match the expression.

Let's see an example:

fruit="apple"

case $fruit in

"apple")

echo "This is a red fruit."

;;

"banana")

echo "This is a yellow fruit."

;;

"orange")

echo "This is an orange fruit."

;;

*)

echo "Unknown fruit."

;;

esac

In this example, since the value of fruit is apple, the first pattern matches, and the block of code that echoes This is a red fruit. is executed. If the value of fruit were instead banana, the second pattern would match and the block of code that echoes This is a yellow fruit. would execute, and so on.

If the value of fruit does not match any of the specified patterns, the default case is executed, which echoes Unknown fruit.

Part 7: Managing Software Packages in Linux

Linux comes with several built-in programs. But you might need to install new programs based on your needs. You might also need to upgrade the existing applications.

7.1. Packages and Package Management

What is a package?

A package is a collection of files that are bundled together. These files are essential for a particular program to run. These files contain the program's executable files, libraries, and other resources.

In addition to the files required for the program to run, packages also contain installation scripts, which copy the files to where they are needed. A program may contain many files and dependencies. With packages, it is easier to manage all the files and dependencies at once.

What is the difference between source and binary?

Programmers write source code in a programming language. This source code is then compiled into machine code that the computer can understand. The compiled code is called binary code.

When you download a package, you can either get the source code or the binary code. The source code is the human-readable code that can be compiled into binary code. The binary code is the compiled code that the computer can understand.

Source packages can be used with any type of machine if the source code is compiled properly. Binary, on the other hand, is compiled code that is specific to a particular type of machine or architecture.

You can find the architecture of your machine using the uname -m command.

uname -m

# output

x86_64

Package dependencies

Programs often share files. Instead of including these files in each package, a separate package can provide them for all programs.

To install a program that needs these files, you must also install the package containing them. This is called a package dependency. Specifying dependencies makes packages smaller and simpler by reducing duplicates.

When you install a program, its dependencies must also be installed. Most required dependencies are usually already installed, but a few extra ones might be needed. So, don't be surprised if several other packages are installed along with your chosen package. These are the necessary dependencies.

Package managers

Linux offers a comprehensive package management system for installing, upgrading, configuring, and removing software.

With package management, you can get access to an organized base of thousands of software packages along with having the ability to resolve dependencies and check for software updates.

Packages can be managed using either command-line utilities that can be easily automated by system administrators, or through a graphical interface.

Software channels/repositories

⚠️ Package management is different for different distros. Here, we are using Ubuntu.

Installing software is a bit different in Linux as compared to Windows and Mac.

Linux uses repositories to store software packages. A repository is a collection of software packages that are available for installation via a package manager.

A package manager also stores an index of all of the packages available from a repo. Sometimes the index is rebuilt to ensure that it is up to date and to know which packages have been upgraded or added to the channel since it last checked.

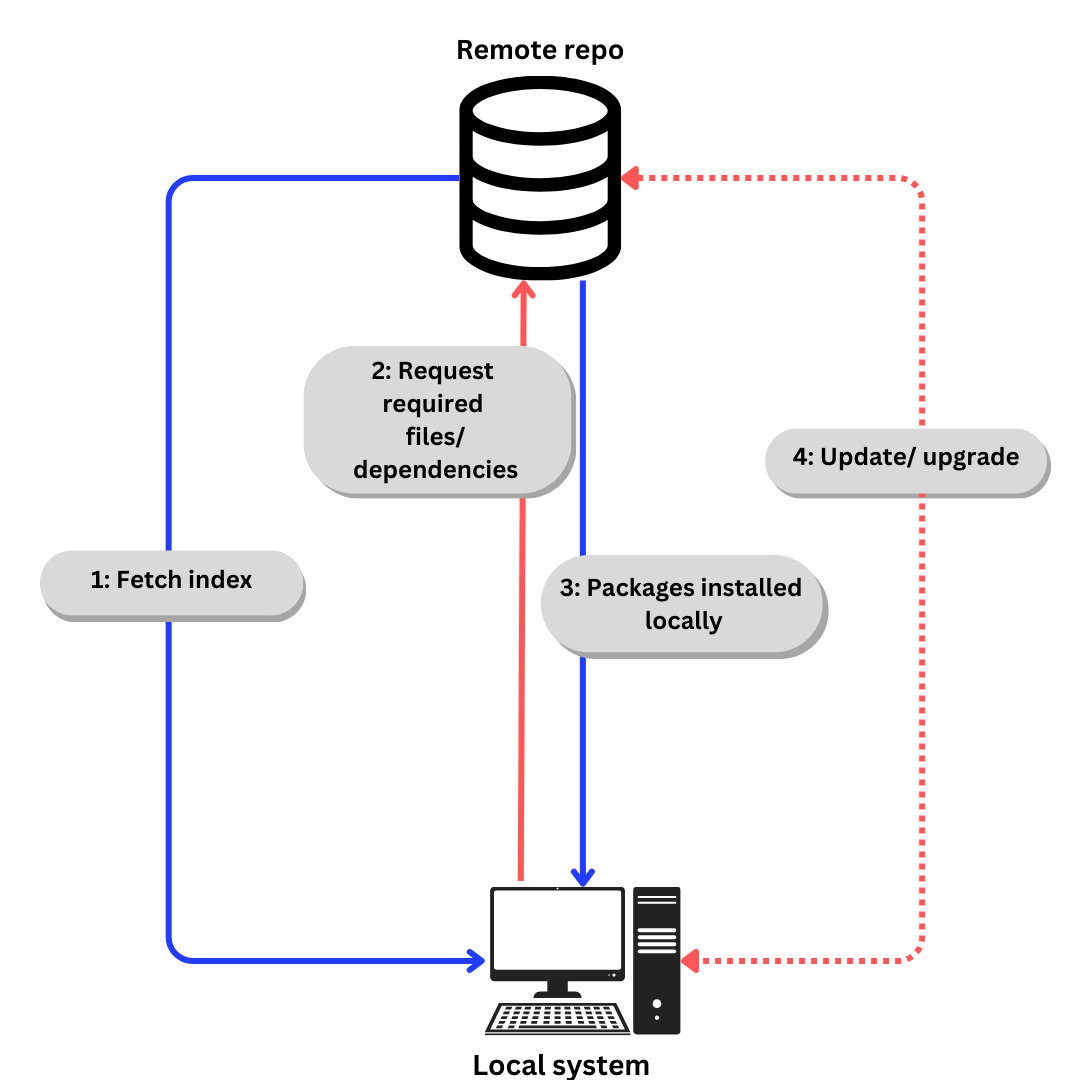

The generic process of downloading software from a repo looks something like this:

If we talk specifically about Ubuntu,

Index is fetched using

apt update.(aptis explained in next section).Required files/ dependencies requested according to index using

apt installPackages and dependencies installed locally.

Update dependencies and packages when required using

apt updateandapt upgrade

On Debian-based distros, you can file the list of repos (repositories) in /etc/apt/sources.list.

7.2. Installing a Package via Command Line

The apt command is a powerful command-line tool, which works with Ubuntu’s "Advanced Packaging Tool (APT)".

apt, along with the commands bundled with it, provides the means to install new software packages, upgrade existing software packages, update the package list index, and even upgrade the entire Ubuntu system.

To view the logs of the installation using apt, you can view the /var/log/dpkg.log file.

Following are the uses of the apt command:

Installing packages

For example, to install the htop package, you can use the following command:

sudo apt install htop

Updating the package list index

The package list index is a list of all the packages available in the repositories. To update the local package list index, you can use the following command:

sudo apt update

Upgrading the packages

Installed packages on your system can get updates containing bug fixes, security patches, and new features.

To upgrade the packages, you can use the following command:

sudo apt upgrade

Removing packages

To remove a package, like htop, you can use the following command:

sudo apt remove htop

7.3. Installing a Package via an Advanced Graphical Method – Synaptic

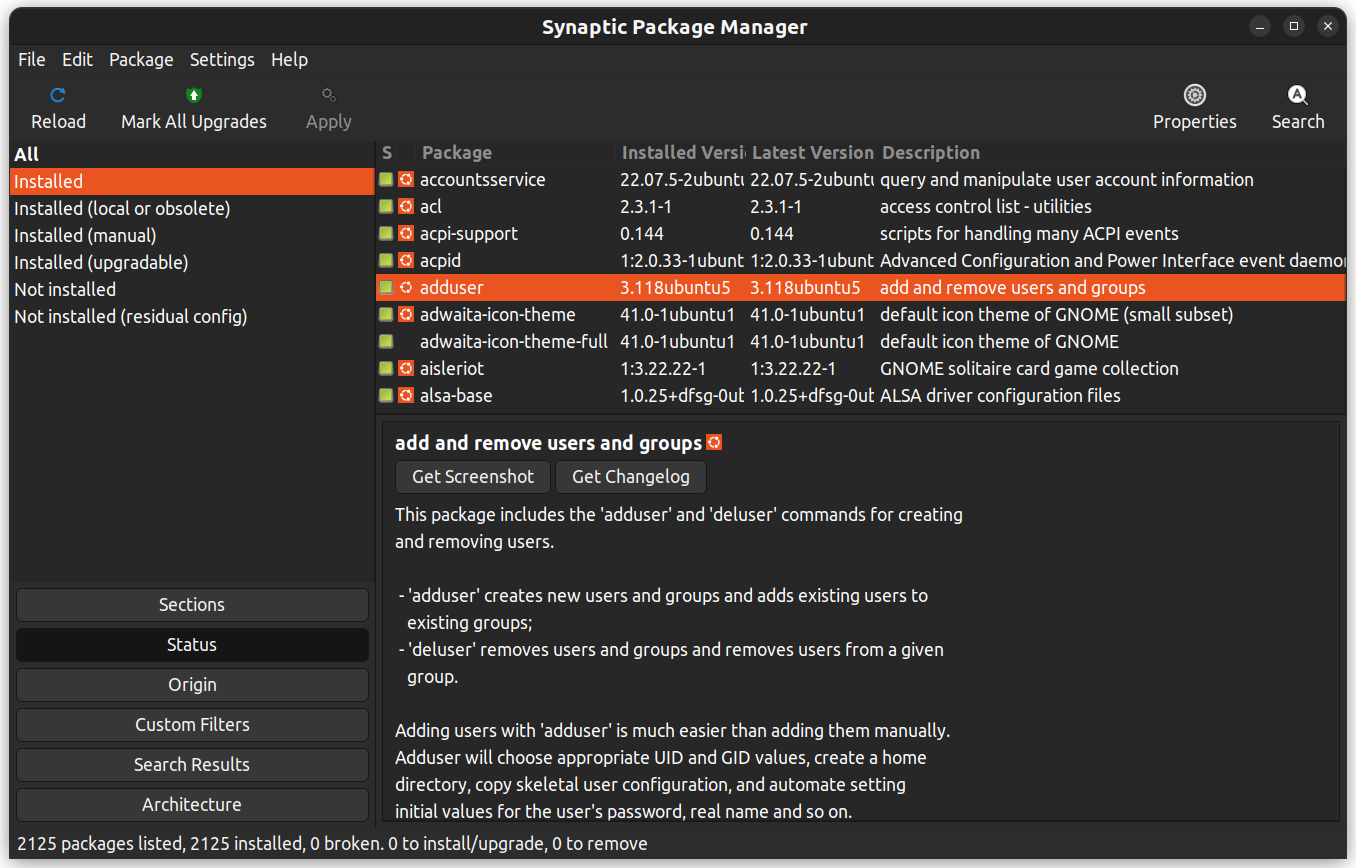

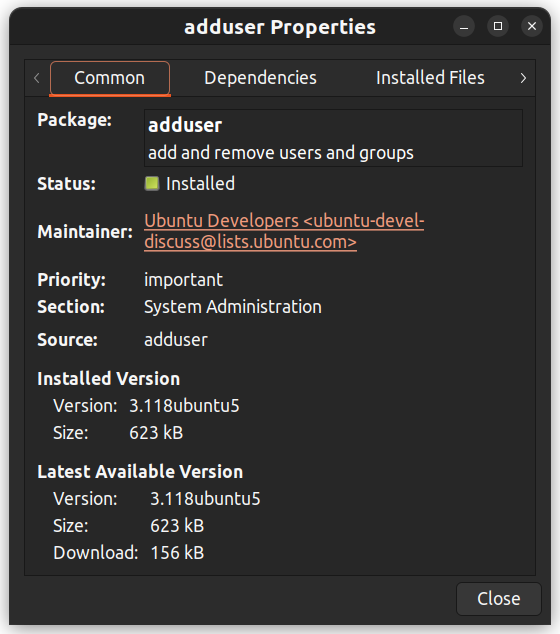

If you are not comfortable with the command line, you can use a GUI application to install packages. You can achieve the same results as the command line, but with a graphical interface.

Synaptic is a GUI package management application that helps in listing the installed packages, their status, pending updates, and so on. It offers custom filters to help you narrow down the search results.

You can also right-click on a package and view further details like the dependencies, maintainer, size, and the installed files.

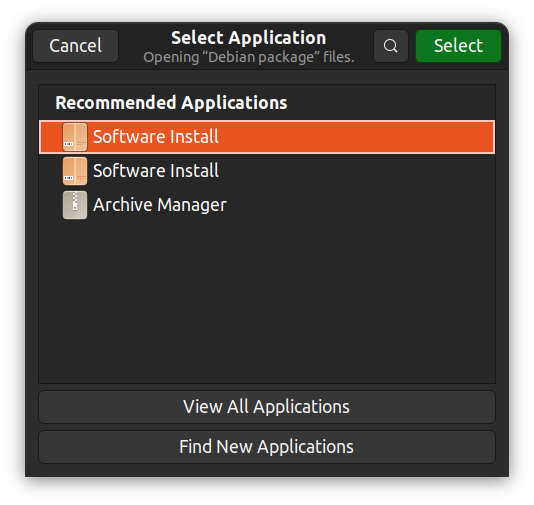

7.4. Installing downloaded packages from a website

You may want to install a package you have downloaded from a website, rather than from a software repository. These packages are called .deb files.

Usingdpkgto install packages:dpkg is a command-line tool used to install packages. To install a package with dpkg, open the Terminal and type the following:

cd directory

sudo dpkg -i package_name.deb

Note: Replace "directory" with the directory where the package is stored and "package_name" with the filename of the package.

Alternatively, you can right-click, select "Open With Other Application," and choose a GUI app of your choice.

💡 Tip: In Ubuntu, you can see a list of installed packages with dpkg --list.

Part 8: Advanced Linux Topics

8.1. User Management

There can be multiple users with varying levels of access in a system. In Linux, the root user has the highest level of access and can perform any operation on the system. Regular users have limited access and can only perform operations they have been granted permission to do.

What is a user?

A user account provides separation between different people and programs that can run commands.

Humans identify users by a name, as names are easy to work with. But the system identifies users by a unique number called the user ID (UID).

When human users log in using the provided username, they have to use a password to authorize themselves.

User accounts form the foundations of system security. File ownership is also associated with user accounts and it enforces access control to the files. Every process has an associated user account that provides a layer of control for the admins.

There are three main types of user accounts:

Superuser: The superuser has complete access to the system. The name of the superuser is

root. It has aUIDof 0.System user: The system user has user accounts that are used to run system services. These accounts are used to run system services and are not meant for human interaction.

Regular user: Regular users are human users who have access to the system.

The id command displays the user ID and group ID of the current user.

id

uid=1000(john) gid=1000(john) groups=1000(john),4(adm),24(cdrom),27(sudo),30(dip)... output truncated

To view the basic information of another user, pass the username as an argument to the id command.

id username

To view user-related information for processes, use the ps command with the -u flag.

ps -u

# Output

USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND

root 1 0.0 0.1 16968 3920 ? Ss 18:45 0:00 /sbin/init splash

root 2 0.0 0.0 0 0 ? S 18:45 0:00 [kthreadd]

By default, systems use the /etc/passwd file to store user information.

Here is a line from the /etc/passwd file:

root:x:0:0:root:/root:/bin/bash

The /etc/passwd file contains the following information about each user:

Username:

root– The username of the user account.Password:

x– The password in encrypted format for the user account that is stored in the/etc/shadowfile for security reasons.User ID (UID):

0– The unique numerical identifier for the user account.Group ID (GID):

0– The primary group identifier for the user account.User Info:

root– The real name for the user account.Home directory:

/root– The home directory for the user account.Shell:

/bin/bash– The default shell for the user account. A system user might use/sbin/nologinif interactive logins are not allowed for that user.

What is a group?

A group is a collection of user accounts that share access and resources. Groups have group names to identify them. The system identifies groups by a unique number called the group ID (GID).

By default, the information about groups is stored in the /etc/group file.

Here is an entry from the /etc/group file:

adm:x:4:syslog,john

Here is the breakdown of the fields in the given entry:

Group name:

adm– The name of the group.Password:

x– The password for the group is stored in the/etc/gshadowfile for security reasons. The password is optional and appears empty if not set.Group ID (GID):

4– The unique numerical identifier for the group.Group members:

syslog,john– The list of usernames that are members of the group. In this case, the groupadmhas two members:syslogandjohn.

In this specific entry, the group name is adm, the group ID is 4, and the group has two members: syslog and john. The password field is typically set to x to indicate that the group password is stored in the /etc/gshadow file.

The groups are further divided into 'primary' and 'supplementary' groups.

Primary Group: Each user is assigned one primary group by default. This group usually has the same name as the user and is created when the user account is made. Files and directories created by the user are typically owned by this primary group.

Supplementary Groups: These are extra groups a user can belong to in addition to their primary group. Users can be members of multiple supplementary groups. These groups let a user have permissions for resources shared among those groups. They help provide access to shared resources without affecting the system’s file permissions and keeping the security intact. While a user must belong to one primary group, belonging to supplementary groups is optional.

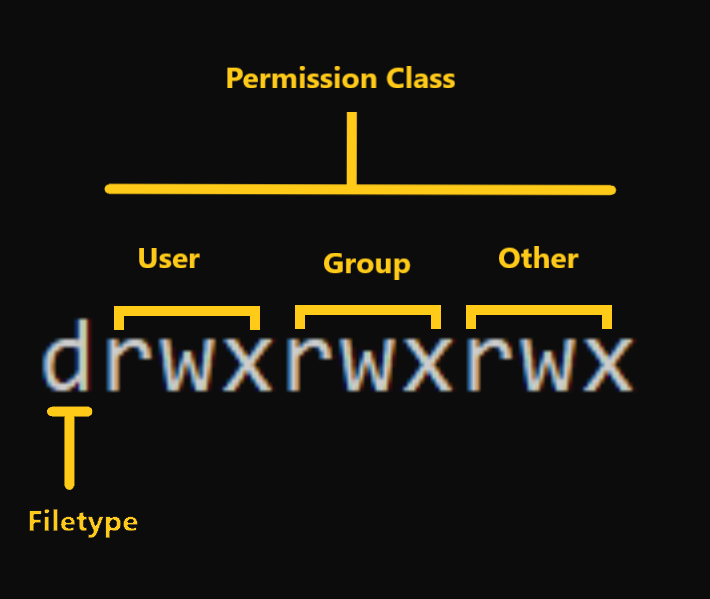

Access control: finding and understanding file permission

File ownership can be viewed using the ls -l command. The first column in the output of the ls -l command shows the permissions of the file. Other columns show the owner of the file and the group that the file belongs to.

Let's have a closer look into the mode column:

Mode defines two things:

File type: File type defines the type of the file. For regular files that contain simple data it is blank

-. For other special file types the symbol is different. For a directory which is a special file, it isd. Special files are treated differently by the OS.Permission classes: The next set of characters define the permissions for user, group, and others respectively.

– User: This is the owner of a file and owner of the file belongs to this class.

– Group: The members of the file’s group belong to this class

– Other: Any users that are not part of the user or group classes belong to this class.

💡Tip: Directory ownership can be viewed using the ls -ld command.

How to Read Symbolic Permissions or the rwx permissions

The rwx representation is known as the Symbolic representation of permissions. In the set of permissions,

rstands for read. It is indicated in the first character of the triad.wstands for write. It is indicated in the second character of the triad.xstands for execution. It is indicated in the third character of the triad.

Read:

For regular files, read permissions allow the file to be opened and read only. Users can't modify the file.

Similarly for directories, read permissions allow the listing of directory content without any modification in the directory.

Write:

When files have write permissions, the user can modify (edit, delete) the file and save it.

For folders, write permissions enable a user to modify its contents (create, delete, and rename the files inside it), and modify the contents of files that the user has write permissions to.

Examples of permissions in Linux

Now that we know how to read permissions, let's see some examples.

-rwx------: A file that is only accessible and executable by its owner.-rw-rw-r--: A file that is open to modification by its owner and group but not by others.drwxrwx---: A directory that can be modified by its owner and group.

Execute:

For files, execute permissions allows the user to run an executable script. For directories, the user can access them, and access details about files in the directory.

How to Change File Permissions and Ownership in Linux using chmod and chown

Now that we know the basics of ownerships and permissions, let's see how we can modify permissions using the chmod command.

Syntax ofchmod:

chmod permissions filename

Where,

permissionscan be read, write, execute or a combination of them.filenameis the name of the file for which the permissions need to change. This parameter can also be a list if files to change permissions in bulk.

We can change permissions using two modes:

Symbolic mode: this method uses symbols like

u,g,oto represent users, groups, and others. Permissions are represented asr, w, xfor read, write, and execute, respectively. You can modify permissions using +, - and =.Absolute mode: this method represents permissions as 3-digit octal numbers ranging from 0-7.

Now, let's see them in detail.

How to Change Permissions using Symbolic Mode

The table below summarize the user representation:

| USER REPRESENTATION | DESCRIPTION |

| u | user/owner |

| g | group |

| o | other |

We can use mathematical operators to add, remove, and assign permissions. The table below shows the summary:

| OPERATOR | DESCRIPTION |

| + | Adds a permission to a file or directory |

| – | Removes the permission |

| \= | Sets the permission if not present before. Also overrides the permissions if set earlier. |

Example:

Suppose I have a script and I want to make it executable for the owner of the file zaira.

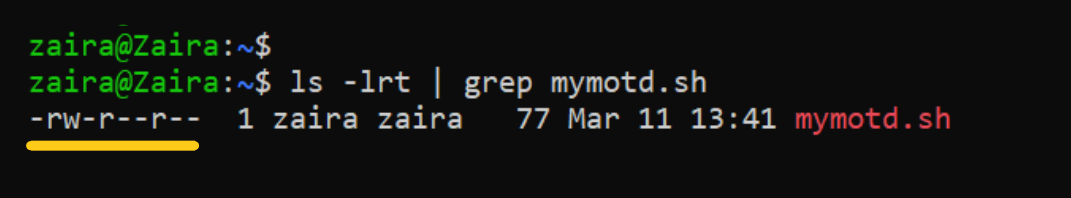

Current file permissions are as follows:

Let's split the permissions like this:

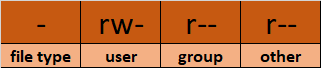

To add execution rights (x) to owner (u) using symbolic mode, we can use the command below:

chmod u+x mymotd.sh

Output:

Now, we can see that the execution permissions have been added for owner zaira.

Additional examples for changing permissions via symbolic method:

Removing

readandwritepermission forgroupandothers:chmod go-rw.Removing

readpermissions forothers:chmod o-r.Assigning

writepermission togroupand overriding existing permission:chmod g=w.

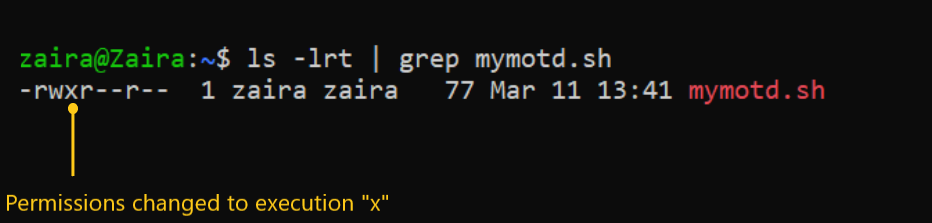

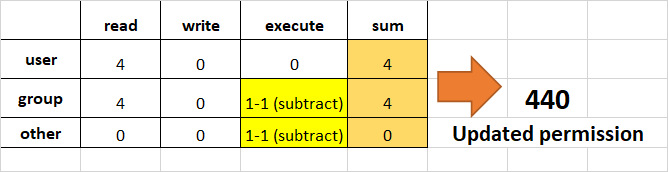

How to Change Permissions using Absolute Mode

Absolute mode uses numbers to represent permissions and mathematical operators to modify them.

The below table shows how we can assign relevant permissions:

| PERMISSION | PROVIDE PERMISSION |

| read | add 4 |

| write | add 2 |

| execute | add 1 |

Permissions can be revoked using subtraction. The below table shows how you can remove relevant permissions.

| PERMISSION | REVOKE PERMISSION |

| read | subtract 4 |

| write | subtract 2 |

| execute | subtract 1 |

Example:

- Set

read(add 4) foruser,read(add 4) andexecute(add 1) for group, and onlyexecute(add 1) for others.

chmod 451 file-name

This is how we performed the calculation:

Note that this is the same as r--r-x--x.

- Remove

executionrights fromotherandgroup.

To remove execution from other and group, subtract 1 from the execute part of last 2 octets.

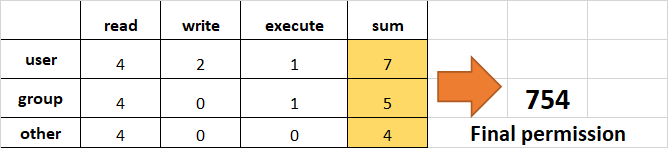

- Assign

read,writeandexecutetouser,readandexecutetogroupand onlyreadto others.

This would be the same as rwxr-xr--.

How to Change Ownership using the chown Command

Next, we will learn how to change the ownership of a file. You can change the ownership of a file or folder using the chown command. In some cases, changing ownership requires sudo permissions.

Syntax of chown:

chown user filename

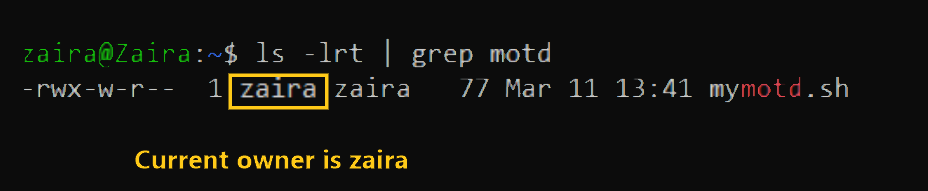

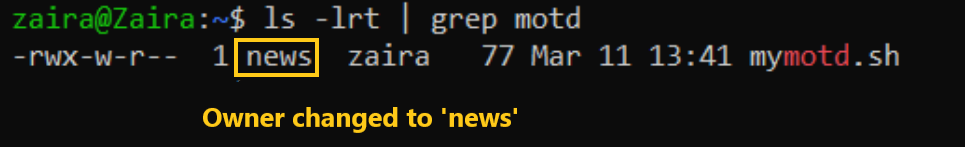

How to change user ownership with chown

Let's transfer the ownership from user zaira to user news.

chown news mymotd.sh

Command to change ownership: sudo chown news mymotd.sh.

Output:

How to change user and group ownership simultaneously

We can also use chown to change user and group simultaneously.

chown user:group filename

How to change directory ownership

You can change ownership recursively for contents in a directory. The example below changes the ownership of the /opt/script folder to allow user admin.

chown -R admin /opt/script

How to change group ownership

In case we only need to change the group owner, we can use chown by preceding the group name by a colon :

chown :admins /opt/script

How to switch between users

You can switch between users using the su command.

[user01@host ~]$ su user02

Password:

[user02@host ~]$

How to gain superuser access