Day 16/40 Days of K8s: Resource Requests and Limits in Kubernetes !! ☸️

Gopi Vivek Manne

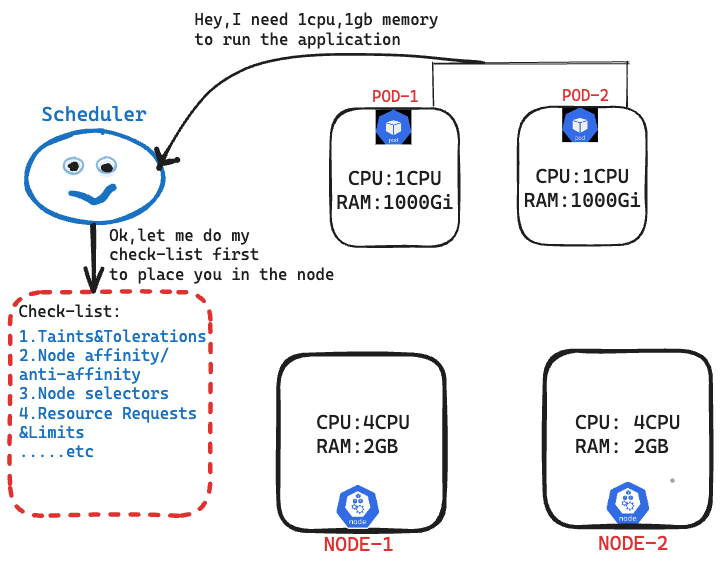

Resource requests are one of the parameters the scheduler considers when deciding where to place a pod.

💁 Scenario

Let's say we have 2 nodes, each with 4 CPUs and 4 GB of memory. We have container workloads to run as pods, and each pod requires 1 CPU and 1 GB of memory. When scheduling the pods, the scheduler checks which nodes have enough resources to accommodate them. It also considers factors such as taints, tolerations, node affinity, and node selectors. Based on this information, the scheduler makes the scheduling decision and places the pods on the appropriate nodes.

NOTE: A Kubernetes node cannot allocate all of its resources to pods, because the node itself needs some resources to run system processes and k8s components. This is why a node's allocatable resources are lower than its total capacity.
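The scenario and the note above can be sketched as a small capacity calculation. This is only an illustration, not how the real scheduler is implemented: the reserved amounts (500m CPU, 512 MiB), the first-fit placement, and the names `Node`, `fits`, and `schedule` are all assumptions made up for this example, and taints, affinity, and scoring are ignored.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    cpu_capacity_m: int          # CPU capacity in millicores (4 CPUs = 4000m)
    mem_capacity_mi: int         # memory capacity in MiB (4 GiB = 4096 MiB)
    cpu_reserved_m: int = 500    # assumed reservation for system processes
    mem_reserved_mi: int = 512   # assumed reservation, purely illustrative
    cpu_requested_m: int = 0     # sum of CPU requests already placed here
    mem_requested_mi: int = 0    # sum of memory requests already placed here
    pods: list = field(default_factory=list)

    def fits(self, cpu_m: int, mem_mi: int) -> bool:
        """True if the pod's requests fit into what is still allocatable."""
        cpu_allocatable = self.cpu_capacity_m - self.cpu_reserved_m
        mem_allocatable = self.mem_capacity_mi - self.mem_reserved_mi
        return (self.cpu_requested_m + cpu_m <= cpu_allocatable
                and self.mem_requested_mi + mem_mi <= mem_allocatable)


def schedule(nodes, pod_name, cpu_m, mem_mi):
    """Place the pod on the first node that fits; return None if it stays Pending."""
    for node in nodes:
        if node.fits(cpu_m, mem_mi):
            node.pods.append(pod_name)
            node.cpu_requested_m += cpu_m
            node.mem_requested_mi += mem_mi
            return node.name
    return None


# Two nodes with 4 CPUs and 4 GiB each; every pod requests 1 CPU and 1 GiB.
nodes = [Node("node-1", 4000, 4096), Node("node-2", 4000, 4096)]
placements = {f"pod-{i}": schedule(nodes, f"pod-{i}", 1000, 1024) for i in range(1, 9)}

for pod, node in placements.items():
    print(pod, "->", node or "Pending")
```

With these assumed reservations, each node has room for only three such pods rather than four, so `pod-7` and `pod-8` stay Pending.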

❓ Situation When There Are No Resource Requests and Limits

🌟 What Happens When No Resource Requests and Limits Are Set?

Behaviour: If no resource requests or limits are specified, the pod is scheduled without any reservation, and its containers can use as much CPU and memory as the node has available.

Error: No specific error, but pods might consume more resources than expected and eventually exhaust the node.

🌟 What Happens When We Allocate Resources Manually?

For example, manually setting a limit of 2 CPUs and 1 GB of memory:

Exceeding Resource Limits: If a pod tries to consume more resources than its limits allow, the outcome depends on the resource.

CPU: If the pod exceeds its CPU limit, CPU throttling occurs.

Memory: If a container in the pod exceeds its memory limit, it is terminated, and you will see an "OOMKilled" (Out Of Memory Killed) status.
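The difference between the two resources can be shown with a tiny model. This is a sketch under simplifying assumptions, not kubelet or kernel code: `enforce_limits` is a hypothetical helper that treats CPU as something that gets capped and memory as something that gets the container killed.

```python
def enforce_limits(cpu_wanted_m, cpu_limit_m, mem_wanted_mi, mem_limit_mi):
    """Return (cpu_granted_m, state) for one container in this simplified model."""
    # CPU is compressible: usage is capped at the limit and the process runs slower.
    cpu_granted_m = min(cpu_wanted_m, cpu_limit_m)
    # Memory is not compressible: going over the limit gets the container killed.
    state = "OOMKilled" if mem_wanted_mi > mem_limit_mi else "Running"
    return cpu_granted_m, state


# Limits from the example above: 2 CPUs (2000m) and 1 GB (taken as 1024 MiB here).
print(enforce_limits(3000, 2000, 512, 1024))   # wants 3 CPUs: throttled to 2000m, still running
print(enforce_limits(1000, 2000, 1500, 1024))  # wants 1500 MiB: killed
```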

🛑 Drawbacks

Without Resource Requests and Limits: The scheduler has no information about how much a pod needs from the node, so a pod can eventually consume all available resources on the node and cause node failure.

💡 Solution

To avoid resource exhaustion and ensure fair resource sharing among pods on a node, we use resource requests and limits for each pod. It is like setting a quota on how much the pod can request and use.

✳ Resource Requests and Limits

✅ Resource request: how much of a resource a pod asks for from the node. The scheduler counts this amount against the node when placing the pod.

Use Case: Helps the scheduler place the pod on a node with sufficient resources.

✅ Resource limit: the maximum amount of a resource a pod can use on the node. If it is not set, the pod can use up to all the resources available on the node.

Use Case: Prevents a pod from consuming all resources on a node.
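One consequence of this split is that scheduling looks only at requests, so the limits on a node can add up to more than the node actually has. Here is a minimal sketch of that arithmetic; the pod names, their numbers, and the allocatable figure are hypothetical values chosen for illustration.

```python
ALLOCATABLE_MEM_MI = 3584  # assumed allocatable memory of one node, in MiB

# (name, memory request in MiB, memory limit in MiB) for three hypothetical pods
pods = [("api", 1024, 2048), ("worker", 1024, 2048), ("cache", 1024, 1024)]

total_requests = sum(request for _, request, _ in pods)
total_limits = sum(limit for _, _, limit in pods)

# Placement is decided on requests only.
schedulable = total_requests <= ALLOCATABLE_MEM_MI
# Limits are enforced per container at runtime and may be overcommitted.
limits_overcommitted = total_limits > ALLOCATABLE_MEM_MI

print(f"requests: {total_requests} MiB, limits: {total_limits} MiB")
print(f"schedulable={schedulable}, limits_overcommitted={limits_overcommitted}")
```

All three pods fit because their requests total 3072 MiB, even though their limits total 5120 MiB. If every pod grew toward its limit at the same time, the node would run short of memory, which is why requests should reflect what a pod really needs.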

✳ TASK

- Create a namespace named `mem-example`:

```shell
kubectl create ns mem-example
```

✳ Installing Metrics Server

Metrics Server gathers resource usage metrics for both nodes and pods. Let's install it in our KIND cluster.

Download and apply the Metrics Server manifest:

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-view: "true"
  name: system:aggregated-metrics-reader
rules:
- apiGroups:
  - metrics.k8s.io
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - nodes/metrics
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  ports:
  - name: https
    port: 443
    protocol: TCP
    targetPort: https
  selector:
    k8s-app: metrics-server
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  strategy:
    rollingUpdate:
      maxUnavailable: 0
  template:
    metadata:
      labels:
        k8s-app: metrics-server
    spec:
      containers:
      - command:
        - /metrics-server
        - --cert-dir=/tmp
        - --secure-port=10250
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --kubelet-use-node-status-port
        - --metric-resolution=15s
        - --kubelet-insecure-tls
        image: registry.k8s.io/metrics-server/metrics-server:v0.7.1
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /livez
            port: https
            scheme: HTTPS
          periodSeconds: 10
        name: metrics-server
        ports:
        - containerPort: 10250
          name: https
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /readyz
            port: https
            scheme: HTTPS
          initialDelaySeconds: 20
          periodSeconds: 10
        resources:
          requests:
            cpu: 100m
            memory: 200Mi
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            drop:
            - ALL
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
          seccompProfile:
            type: RuntimeDefault
        volumeMounts:
        - mountPath: /tmp
          name: tmp-dir
      nodeSelector:
        kubernetes.io/os: linux
      priorityClassName: system-cluster-critical
      serviceAccountName: metrics-server
      volumes:
      - emptyDir: {}
        name: tmp-dir
---
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  labels:
    k8s-app: metrics-server
  name: v1beta1.metrics.k8s.io
spec:
  group: metrics.k8s.io
  groupPriorityMinimum: 100
  insecureSkipTLSVerify: true
  service:
    name: metrics-server
    namespace: kube-system
  version: v1beta1
  versionPriority: 100
```

You should see the `metrics-server` deployment in the `kube-system` namespace. The status should indicate that it is running.

✳ Use Cases and Errors:

Pod Requests Within Allowed Limits:

Behaviour: Pod is scheduled and runs normally.

Error: None (consumption is well within the limits).

Example

```yaml
# Create a Pod that has one container. The container has a memory request of 100 MiB and a memory limit of 200 MiB.
# For stress testing, pass args such as 150M.
apiVersion: v1
kind: Pod
metadata:
  name: memory-demo
  namespace: mem-example
spec:
  containers:
  - name: memory-demo-ctr
    image: polinux/stress
    resources:
      requests:
        memory: "100Mi"
      limits:
        memory: "200Mi"
    command: ["stress"]
    args: ["--vm", "1", "--vm-bytes", "150M", "--vm-hang", "1"]
```
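The manifests in this post use Kubernetes quantity strings such as `100Mi` and `1000Gi` for memory, and `100m` for CPU. As a rough aid for reading them, here is a minimal parser sketch. `parse_quantity` is a hypothetical helper that covers only a subset of the suffixes Kubernetes accepts, and it applies to the `resources` fields only, not to the arguments passed to `stress`.

```python
from decimal import Decimal

# Binary suffixes are powers of 1024, decimal suffixes are powers of 1000.
BINARY = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}
DECIMAL = {"k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12}


def parse_quantity(text: str) -> Decimal:
    """Convert a quantity string to base units (bytes for memory, cores for CPU)."""
    for suffix, factor in BINARY.items():
        if text.endswith(suffix):
            return Decimal(text[:-len(suffix)]) * factor
    for suffix, factor in DECIMAL.items():
        if text.endswith(suffix):
            return Decimal(text[:-len(suffix)]) * factor
    if text.endswith("m"):  # milli-units, for example 100m CPU = 0.1 core
        return Decimal(text[:-1]) / 1000
    return Decimal(text)    # plain number, for example "2" CPUs


for quantity in ["100Mi", "200Mi", "1000Gi", "100M", "100m", "2"]:
    print(quantity, "=", parse_quantity(quantity))
```

Note that `100M` (100,000,000 bytes) is slightly smaller than `100Mi` (104,857,600 bytes), so the two suffixes are not interchangeable in a manifest.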

Pod Uses More Than Its Allowed Limit:

Behaviour:

- CPU: CPU throttling happens, and processes are slowed down to stay within the limit.

- Memory: The container is killed with an "OOMKilled" status and is restarted according to the pod's restart policy.

Error: OOMKilled (for memory).

Example

```yaml
# Pod that has one container with a memory request of 50 MiB and a memory limit of 100 MiB.
# The Pod attempts to consume more memory than its limit via stress testing.
apiVersion: v1
kind: Pod
metadata:
  name: memory-demo-2
  namespace: mem-example
spec:
  containers:
  - name: memory-demo-2-ctr
    image: polinux/stress
    resources:
      requests:
        memory: "50Mi"
      limits:
        memory: "100Mi"
    command: ["stress"]
    args: ["--vm", "1", "--vm-bytes", "250M", "--vm-hang", "1"]
```

As you can see, the pod is in the `CrashLoopBackOff` state: it keeps trying to restart but fails each time. A pod can enter this state for several reasons, such as application crashes, misconfiguration, failing liveness probes, or exceeded resource limits. In our case, the exceeded memory limit (OOMKilled) is causing the error.

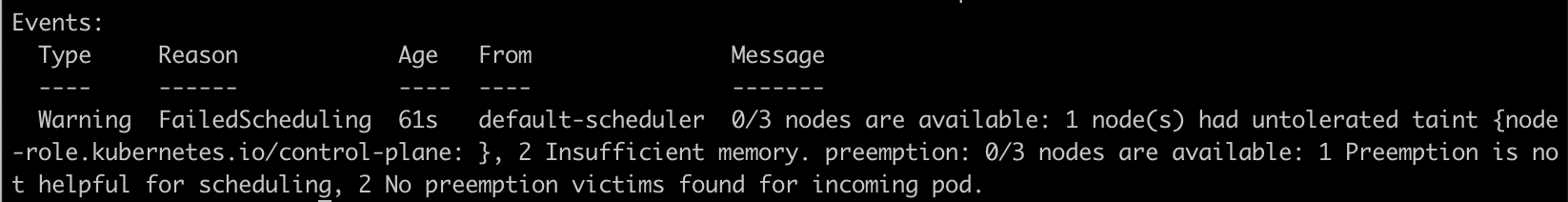
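The "BackOff" part of `CrashLoopBackOff` refers to the growing wait between restart attempts. A minimal sketch of that schedule, assuming the commonly documented defaults of a 10 second starting delay that doubles on each restart and is capped at 5 minutes (`backoff_delays` is a hypothetical helper, not a Kubernetes API):

```python
def backoff_delays(restarts, base_s=10, cap_s=300):
    """Delay in seconds before each restart: doubles from base_s, capped at cap_s."""
    return [min(base_s * 2**i, cap_s) for i in range(restarts)]


print(backoff_delays(7))  # [10, 20, 40, 80, 160, 300, 300]
```

This is why a pod that keeps getting OOMKilled appears to restart quickly at first and then sits idle for minutes at a time.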

Pod Requests More Resources Than Available on the Node:

Behaviour: Pod is not scheduled and remains in a "Pending" state.

Error: "Pending" status due to insufficient resources.

Example

```yaml
# Pod that has one container with a request for 1000 GiB of memory,
# which likely exceeds the capacity of any node in your cluster.
apiVersion: v1
kind: Pod
metadata:
  name: memory-demo-3
  namespace: mem-example
spec:
  containers:
  - name: memory-demo-3-ctr
    image: polinux/stress
    resources:
      requests:
        memory: "1000Gi"
      limits:
        memory: "1000Gi"
    command: ["stress"]
    args: ["--vm", "1", "--vm-bytes", "150M", "--vm-hang", "1"]
```

Now the pod `memory-demo-3` is in the `Pending` state because of insufficient memory.

Resource requests and limits are important for efficient resource management and scheduling in Kubernetes. Not setting them can lead to resource exhaustion on a node. Setting resource requests properly ensures a pod gets the resources it needs, and setting resource limits prevents a pod from consuming all of a node's resources.

#Kubernetes #ResourceRequests #ResourceLimits #40DaysofKubernetes #CKASeries
