From Messy to Manageable: Mastering Data Cleaning with Swiggy Dataset

Navneet Patnecha

Navneet PatnechaHi, I'm Navneet. Welcome back to my blog!

As a data enthusiast, I thrive on extracting golden insights from messy data. We data analysts wrestle with chaotic information and uncover meaningful insights. From SQL queries to advanced analytics, from visualization to machine learning, we'll explore it all. Fascinated by transforming raw data into valuable knowledge? You're in the right place. Subscribe to my blog for amazing content that will teach you something new with each post.

Today, we dive into data cleaning, a crucial step in the data analysis process. We'll use a Swiggy dataset to illustrate the importance and techniques of cleaning data, ensuring our insights are accurate and valuable. Let's get started on turning chaos into clarity!

We are going to use stored procedures and some intermediate concepts to clean the data. Of course, you don't want to write a script after every update, which is why automation is important. So, let's get started!

Download above dataset and let's explore the data first

Let's Get Started !

About data :

Our dataset contains 1,749 rows and 7 columns, capturing detailed information about various restaurants listed on Swiggy . The columns include:

hotel_name: The name of the restaurant.rating: The average rating given by customers.time_minutes: The average time taken to deliver an order from the restaurant.food_type: A list of cuisines offered by the restaurant.location: The area where the restaurant is located.offer_above: The minimum order value required to avail of an offer.offer_percentage: The percentage of discount offered.

This Swiggy restaurant dataset will serve as the basis for our data cleaning tutorial. Let's dive into the details of how to clean this data efficiently!

let's check the dataset:

select * from swiggy;

Now that we're familiar with our dataset's structure, let's dive into the cleaning .

What approach can we take to systematically identify and quantify missing values in each column of our Swiggy restaurant dataset? Additionally, how can we leverage a stored procedure to automate this process for efficiency?

So, we will first focus on understanding the concept for a single column, such as how exactly we can count missing values from a table:

select sum(case when column_name = "" then 1 else 0 end ) as missing_column;

The above code serves as the foundational idea for our solution. It flags each occurrence where a column is either null or empty, assigns a value of 1 to it, aggregates these flags, and ultimately gives us the count of missing values for that specific column. Now, let's extend this approach to all columns and create a stored procedure for it.

Let's break down the process further. First, we need to dynamically fetch all the column names present in our table, as real datasets can have more than 50 columns. To achieve this, we can use the following SQL query:

select column_name from information_schema.column where table_name = 'swiggy';

This query retrieves the names of all columns in the 'swiggy' table.

Next, we'll create a stored procedure that takes the name of a column as input and returns the number of missing values from that column. Here's how we can set up the procedure:

-- this takes single column as input and give number of missing column in that table

delimiter ##

create procedure missing_column(in entity varchar(100))

begin

set @query = concat('select sum(case when `',entity,'` = '''' then 1 else 0 end ) as missing_column');

prepare stmt from @query;

execute @query;

deallocate prepare stmt;

end ##

delimiter;

Now, you might be wondering about the PREPARE, EXECUTE, and DEALLOCATE statements, and why we are using the CONCAT function here. To create a dynamic query, we use the CONCAT function to combine the column provided by the procedure into a query string. Then, PREPARE prepares this query as a SQL statement to be executed using the input column name (entity). The EXECUTE statement performs the prepared query, executing it to count the number of missing values in that specific column. Finally, DEALLOCATE PREPARE releases the resources used by preparing the statement. This approach allows us to efficiently handle each column individually. To extend this functionality to handle all columns dynamically, we utilize the GROUP_CONCAT function, which concatenates results group-wise. This ensures effective identification and quantification of missing data across multiple columns in our Swiggy restaurant dataset using MySQL stored procedures.

-- THIS STATEMENT WILL GROUP ALL THE STATEMNT FOR EACH COLUMN PRESENT IN TABLE

SELECT GROUP_CONCAT(

CONCAT('SUM(CASE WHEN `', column_name, '` = '''' THEN 1 ELSE 0 END) AS `', column_name ,'`')

) INTO @sql

FROM information_schema.columns

WHERE table_name = 'swiggy';

-- BELOW STATEMNT WILL BE ACTUAL QUERY IN STRING FORMAT

SET @SQL = CONCAT('SELECT',@SQL ,'FROM SWIGGY');

-- LET'S PREPARE AND EXCEUTE STATEMENT

PREPARE STMT FROM @SQL;

EXECUTE STMT;

DEALLOCATE PREPARE STMT;

Now that we understood flow and concept of this particular problem , let's create a procedure and see results

DELIMITER $$

CREATE PROCEDURE FIND_MISSING_COLUMNS()

BEGIN

SELECT GROUP_CONCAT(

CONCAT('SUM(CASE WHEN `', column_name, '` = '''' THEN 1 ELSE 0 END) AS `', column_name ,'`')

) INTO @sql

FROM information_schema.columns

WHERE table_name = 'swiggy';

SET @SQL = CONCAT('SELECT',@SQL ,'FROM SWIGGY');

PREPARE STMT FROM @SQL;

EXECUTE STMT;

DEALLOCATE PREPARE STMT;

END $$

DELIMITER;

-- LET'S CALL THE FUNCTION AND SEE THE RESULT

CALL FIND_MISSING_COLUMNS();

Output :

| food_type | hotel_name | location | offer_above | offer_percentage | rating | time_minutes |

| 0 | 0 | 0 | 0 | 0 | 0 | 343 |

we got 343 missing values in time_minutes cloumn .

To understand group_concat function you can refer to

Second problem: In our dataset, we've noticed that some values from thetime_minutescolumn have mistakenly been moved to theratingcolumn. Our task is to correct this by transferring these values back to their correct column,rating, using SQL.

| Rating | time_minutes |

| 36 mins | |

| 4.3 | 28 |

| 4.4 | 30 |

| 38 mins | |

| 4.3 | 55 |

We can see that in the rating column, there are some values that belong to the time_minutes column. So before jumping directly to the solution, let's understand this problem and break it into sub-problems to get the final cleaned result.

Intuition: What if we create a new table that only contains the values with mins as a suffix and then remove mins from that column so that we can now transfer those values to time_minutes?

Let's create a new table first:

create table clean as

select * from swiggy where rating like '%mins%;

-- table clean will contain all the rows where ratings suffix is mins

| hotel_name | rating | time_minutes |

| Hotel A | 36 mins | |

| Hotel B | 38 mins |

Now, we need to clean or remove the mins suffix if present.

-- function that will remove the mins from suffix

delimiter &&

create function fname(in col varchar(100))

returns varchar(100)

deterministic

begin

set @l = locate(' ' , col );

set @first = if(@l > 0 , left(col, @l -1 ) , col );

return @first;

end &&

delimiter;

The above function will remove the mins part.

Now lets' clean the mins by calling this function

create table cleaned as

select *, fname(ratings) as cleaned_time from clean ;

Output :

| hotel_name | cleaned_time | time_minutes |

| Hotel A | 36 | |

| Hotel B | 38 |

Now we have cleaned time_minutes values that were present in the rating column. Next, we will join this table with our main swiggy table:

update swiggy as s

inner join cleaned as c

on s.hotel_name= c.hotel_name

set s.time_minutes= c.cleaned_time

Now our data is cleaned and being updated into time_minutes column .

Problem: We can see that some of the values in thetime_minutescolumn have a '-' between them. We need to replace these with the average of the two numbers. For example:

24-28 = (24 + 28) / 2 = 26

| hotel_name | rating | time_minutes | food_type | location | offer_above | offer_percentage |

| McDonald's | 4.5 | 24-28 | Burgers, Beverages, Cafe, Desserts | Kandivali East | 75 | 30 |

| KFC | 4.2 | 26-34 | Burgers, Biryani, American, Snacks, Fast Food | Kandivali East | 80 | 40 |

| Domino's Pizza | 4.3 | 32-36 | Pizzas, Italian, Pastas, Desserts | Thakur Village | 299 | NULL |

Let's breakdown this problem into smaller problems :

What if we create a function that takes the value of the time_minutes column and returns the average of the numbers on either side of the '-'? Even if the number doesn't contain a '-', the average of the same number is still the same number.

For example:

20-24 becomes (20 + 24) / 2, which equals 22

If the number is 20, then (20 + 20) / 2 equals 20

So, let's write the function first.

delimiter &&

create function average_of(col varchar(100))

returns int

deterministic

begin

DECLARE l INT;

DECLARE first INT;

DECLARE second INT;

DECLARE final INT;

SET l = LOCATE('-', col); -- it will find position of '-' in the given string

SET first = IF(l > 0, LEFT(col, l - 1), col); -- it will fetch left of '-'

SET second = IF(l > 0, SUBSTRING(col, l + 1), col); -- it will fetch right of '-'

SET final = (first + second) / 2; -- calculating averag

RETURN final;

Now we will create a dummy table called cleaning, then join it with swiggy, and update swiggy.time_minutes with the average value present in the cleaning table.

-- this will create a cleaning table with extra column as averag(time_minutes)

create table cleaning as

select * , average(time_minutes) as avg from swiggy;

update swiggy s

inner join

cleaning c

on s.hotel_name = c.hotel_name

set s.time_minutes = c.avg; -- this will replace time_minutes values with aggregated values

select * from swiggy ; -- to check updates

Now ourtime_minutescolumn is cleaned

Problem:Now, we have a few missing values in the ratings column, and we need to handle those missing values.

Intuition: What if we replace the missing ratings with the average rating of restaurants in that particular location? The idea behind this is that restaurants in a specific locality are competitors, so they tend to maintain ratings that are very similar to those of their competitors.

Let's get average rating of restaurants according to locality

select location, round(avg(rating),2) as avg_rating_of_location

from swiggy

group by location;

above code will give output as :

| Location | avg_rating_of_location |

| Kandivali East | 4.1 |

| Thakur Village | 3.67 |

| Malad Kan East | 2.74 |

| Borivali West | 4.16 |

Now we will join this table with swiggy table and set the avg_rating_of_location to the rating where rating is null or blank.

UPDATE swiggy s

INNER JOIN (

SELECT location, ROUND(AVG(rating), 2) AS avg_rating

FROM swiggy

WHERE rating IS NOT NULL AND rating <> ''

GROUP BY location

) t ON s.location = t.location

SET s.rating = t.avg_rating

WHERE s.rating IS NULL OR s.rating = '';

-- Also if there are blanks then we gonna replace those with average of rating

update swiggy

set rating = avg(rating)

where rating is null or rating = '';

Our rating column is cleaned .

Problem : Upon exploring the location column, we found that in our location data, Kandivali has several names, such as Kandivali East, Kandivali West, etc. We need to handle this inconsistency.

Intution: We are going to replace all names that include 'Kandivali' with just 'Kandivali', regardless of any suffix.

update swiggy

set location = 'kandivali'

where location like 'kandivali%';

Problem: Upon exploring the offer_percentage column, we noticed that there are too many values marked as 'not_available.' We need to address this issue.

Intuition: We should find all occurrences of 'not_available' and replace them with 0.

update swiggy

set offer_percentage = 0

where offer_percentage = 'not_available';

offer_percentage column in cleaned .

Problem: Thefood_typecolumn contains various types of foods offered by restaurants, but they are separated by commas. This makes it difficult to perform analysis, such as determining how many different types of food are offered by restaurants. To solve this, we need to separate each food type into different rows.

for example:

| hotel_name | rating | time_minutes | food_type | location | offer_above | offer_percentage |

| McDonald's | 4.1 | 27 | Burgers, Beverages, Cafe, Desserts | kandivali | 75 | 30 |

| KFC | 4.1 | 30 | Burgers, Biryani, American, Snacks, Fast Food | kandivali | 80 | 40 |

Now, we need to separate the food_type values into different rows. Before that, let's break down this problem into smaller steps and then combine them to solve the query.

We can start by extracting each category in food_type one by one using a function called SUBSTRING_INDEX. This function takes a string, a delimiter, and an index of the delimiter. In this case, the delimiter is a comma. The function returns the substring before the occurrence of the specified delimiter.

for example:

-- this will retun string before the second occurence of the comma

select substring('pizza ,burger, pasta , juice',',',2)

-- Output : pizza ,burger

Now that we understand how the SUBSTRING_INDEX function works, we see that it returns a substring containing multiple items, rather than individual items. To solve this, we will use the SUBSTRING_INDEX function again to extract the last word of the substring.

for example :

select substring_index(substring_index('pizza ,burger, pasta , juice',',4),',',-1);

-- this will return the last word that is juice from the string pizza ,burger , pasta , juice

If we use 3 in the inner SUBSTRING_INDEX query, it will return 'pasta'; if we use 2, it will return 'burger', and so on. By changing the values, we get individual words from the string. Now, what we need is a list of those numbers first so that we can join with the table with a condition and get our desired result. I know it sounds vague, but bear with me, and you will understand. So now, to create values from 1 to 100:

select 1+a.N + b.N*10 as n from

(

(

SELECT 0 AS N UNION ALL SELECT 1 UNION ALL SELECT 2 UNION ALL SELECT 3 UNION ALL SELECT 4 UNION ALL SELECT 5 UNION ALL SELECT 6 UNION ALL SELECT 7

UNION ALL SELECT 8 UNION ALL SELECT 9) a

cross join

(

SELECT 0 AS N UNION ALL SELECT 1 UNION ALL SELECT 2 UNION ALL SELECT 3 UNION ALL SELECT 4 UNION ALL SELECT 5 UNION ALL SELECT 6 UNION ALL SELECT 7

UNION ALL SELECT 8 UNION ALL SELECT 9)b

The above code will give us values from 1 to 100. Now, if we join this with our actual table like:

select *

from swiggy_cleaned

join

(

select 1+a.N + b.N*10 as n from

(

(

SELECT 0 AS N UNION ALL SELECT 1 UNION ALL SELECT 2 UNION ALL SELECT 3 UNION ALL SELECT 4 UNION ALL SELECT 5 UNION ALL SELECT 6 UNION ALL SELECT 7

UNION ALL SELECT 8 UNION ALL SELECT 9) a

cross join

(

SELECT 0 AS N UNION ALL SELECT 1 UNION ALL SELECT 2 UNION ALL SELECT 3 UNION ALL SELECT 4 UNION ALL SELECT 5 UNION ALL SELECT 6 UNION ALL SELECT 7

UNION ALL SELECT 8 UNION ALL SELECT 9)b

)

) as numbers

on char_length(food_type) - char_length(replace(food_type ,',','')) >= numbers.n-1

)a

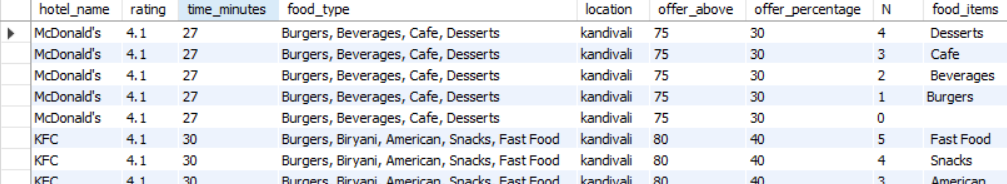

The above query will join the table like :

| hotel_name | rating | time_minutes | food_type | location | offer _above | offer_percentage | N | |

| McDonald's | 4.1 | 27 | Burgers, Beverages, Cafe, Desserts | kandivali | 75 | 40 | 4 | |

| McDonald's | 4.1 | 27 | Burgers, Beverages, Cafe, Desserts | kandivali | 75 | 30 | 3 | |

| McDonald's | 4.1 | 27 | Burgers, Beverages, Cafe, Desserts | kandivali | 75 | 30 | 2 | |

| McDonald's | 4.1 | 27 | Burgers, Beverages, Cafe, Desserts | kandivali | 75 | 30 | 1 |

Scroll to the last column, and you can see that if the number of food_type values is 4, then we got 4 rows for this particular hotel_name.

Let's understand the condition query:

char_length(food_type) - char_length(replace(food_type ,',','')) >= numbers.n-1

CHAR_LENGTH(food_type) gives us the length of the string. CHAR_LENGTH(REPLACE(food_type, ',', '')) gives us the length without including commas. Subtracting these values gives us the number of commas present. The condition ensures that we limit the number of rows to the number of food types.

Finally, we use SUBSTRING_INDEX() to extract the different food types:

select *, substring_index( substring_index(food_type ,',',numbers.n),',', -1) as 'food'

from swiggy_cleaned

join

(

select 1+a.N + b.N*10 as n from

(

(

SELECT 0 AS N UNION ALL SELECT 1 UNION ALL SELECT 2 UNION ALL SELECT 3 UNION ALL SELECT 4 UNION ALL SELECT 5 UNION ALL SELECT 6 UNION ALL SELECT 7

UNION ALL SELECT 8 UNION ALL SELECT 9) a

cross join

(

SELECT 0 AS N UNION ALL SELECT 1 UNION ALL SELECT 2 UNION ALL SELECT 3 UNION ALL SELECT 4 UNION ALL SELECT 5 UNION ALL SELECT 6 UNION ALL SELECT 7

UNION ALL SELECT 8 UNION ALL SELECT 9)b

)

) as numbers

on char_length(food_type) - char_length(replace(food_type ,',','')) >= numbers.n-1

)a )

this code gives us output as :

this problem was a bit conceptual and required breaking down the task into smaller steps. Practice each step several times, and you'll grasp the essence of how to separate and analyze food_type values effectively.

Now that our data is clean and ready for analysis, you can create visualizations and perform further analysis using SQL to gain insights.

Wrapping Up: Exploring Data Analytics

This concludes this blog. I'll be coming back with more awesome content soon. If you have any questions, feel free to email me at navneetchippa@gmail.com and subscribe to my weekly newsletter to stay updated on the latest in data science and generative AI.

Subscribe to my newsletter

Read articles from Navneet Patnecha directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by