Mastering Kubernetes: Unveiling the Power and Architecture of K8s

Abhishek Jha

Introduction

In today's rapidly evolving technological landscape, Kubernetes has become a pivotal tool for managing containerized applications. This article covers why Kubernetes matters and how its architecture fits together. Whether you're a seasoned developer or just starting, mastering Kubernetes can significantly enhance your ability to deploy, scale, and manage applications efficiently.

Why Kubernetes?

Kubernetes, often abbreviated as K8s, is an open-source platform designed to automate the deployment, scaling, and operation of containerized applications. Here are some key reasons why Kubernetes has become essential:

Scalability: Kubernetes can automatically scale applications up or down based on demand, ensuring optimal resource utilization and performance.

Portability: Kubernetes works across various environments, including on-premises, public clouds, and hybrid setups, providing a consistent application deployment platform.

High Availability: Kubernetes helps keep applications available by distributing containers across multiple nodes and rescheduling them when a node fails.

Self-Healing: Kubernetes can automatically restart failed containers, replace and reschedule them, and kill containers that don't respond to user-defined health checks.

Declarative Configuration: Kubernetes uses declarative configuration to manage applications, allowing users to define the desired state of the system and let Kubernetes maintain it.

Extensibility: Kubernetes is highly extensible, with a rich ecosystem of plugins and tools that can be integrated to enhance its functionality.

Efficient Resource Management: Kubernetes places containers on nodes according to their declared CPU and memory requests and limits, which helps applications run smoothly without wasting resources.

Community and Ecosystem: Kubernetes has a large and active community, providing extensive support, documentation, and a wide range of tools and extensions.

These features make Kubernetes a powerful tool for modern application development and operations, enabling organizations to deliver applications faster and more reliably.
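To make the scalability point more concrete, here is a minimal Python sketch of the replica calculation described in the Horizontal Pod Autoscaler documentation: the desired count is the current count scaled by the ratio of the observed metric to the target metric, rounded up. The function name, the default bounds, and the sample numbers are assumptions made for illustration; the real autoscaler also applies tolerances and stabilization behaviour that this sketch leaves out.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Hypothetical helper: core HPA ratio formula, clamped to min/max bounds."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    # desired = ceil(current * observed / target)
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # 3 replicas averaging 90% CPU against a 60% target: scale out
    print(desired_replicas(3, 90.0, 60.0))
    # 4 replicas averaging 20% CPU against a 60% target: scale in
    print(desired_replicas(4, 20.0, 60.0))
```

The clamp at the end mirrors the minimum and maximum replica counts that an autoscaler is configured with, so a traffic spike cannot request an unbounded number of Pods.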

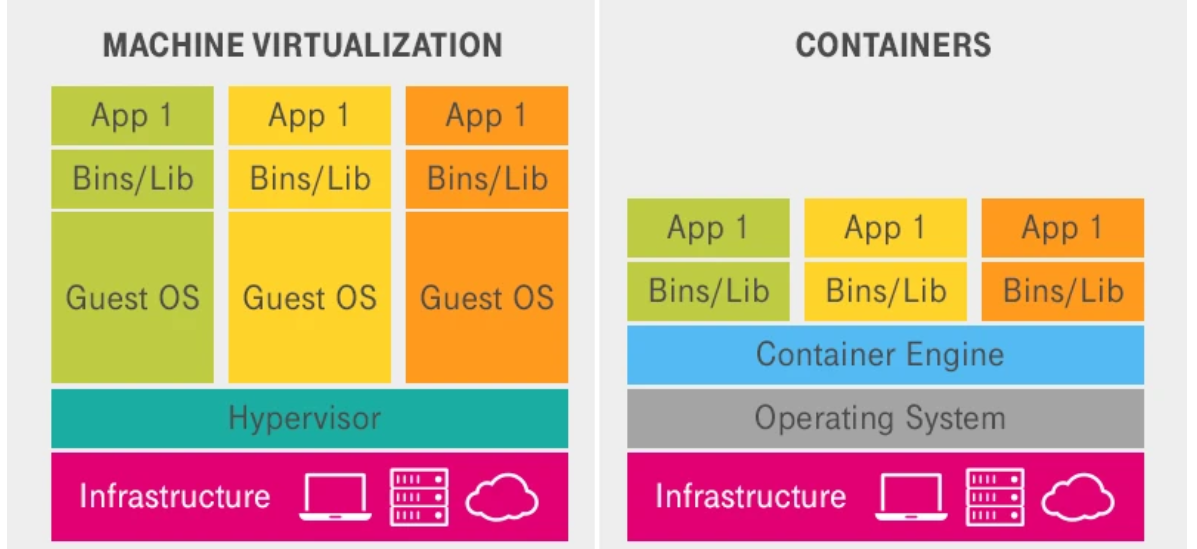

VM vs Containers

Virtual Machines (VMs) and Containers are both technologies used to deploy applications, but they have distinct differences in terms of architecture, performance, and use cases.

Virtual Machines (VMs)

Architecture: VMs run on a hypervisor, which can be either Type 1 (bare-metal) or Type 2 (hosted). Each VM includes a full operating system (OS) along with the application and its dependencies.

Isolation: VMs provide strong isolation as each VM runs a separate OS, making them suitable for running multiple applications with different OS requirements on the same physical hardware.

Resource Usage: VMs are resource-intensive because each VM requires its own OS, which consumes significant CPU, memory, and storage.

Boot Time: VMs have longer boot times because they need to start a full OS.

Portability: VMs are less portable due to their larger size and dependency on the hypervisor.

Containers

Architecture: Containers share the host OS kernel and run as isolated processes in user space. They package the application and its dependencies but do not include a full OS.

Isolation: Containers provide process-level isolation using kernel features such as namespaces and cgroups. This is lighter than VM isolation, but because containers share the host OS kernel, they are less strongly isolated than VMs.

Resource Usage: Containers are lightweight and use fewer resources because they share the host OS and do not require a separate OS for each container.

Boot Time: Containers have faster boot times as they do not need to start a full OS, just the application process.

Portability: Containers are highly portable across different environments (development, testing, production) because they encapsulate the application and its dependencies in a consistent environment.

Use Cases

VMs: Suitable for running multiple applications with different OS requirements, legacy applications, and scenarios requiring strong isolation.

Containers: Ideal for microservices architecture, continuous integration/continuous deployment (CI/CD) pipelines, and scenarios requiring rapid scaling and deployment.

In summary, VMs are best for scenarios requiring strong isolation and different OS environments, while containers are preferred for lightweight, portable, and scalable application deployment.

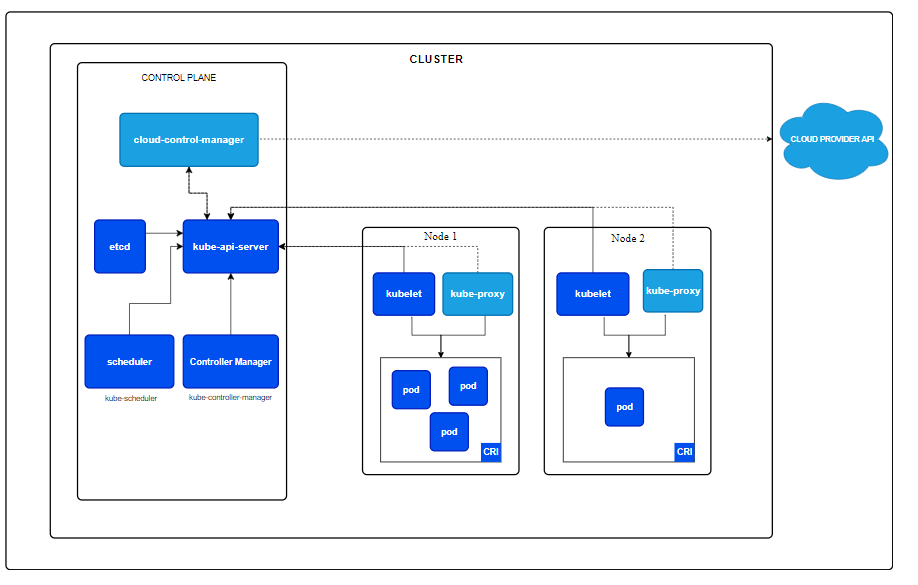

Components of K8s

Kubernetes architecture is composed of several key components that work together to manage containerized applications. Here are the primary components:

Master Node (Control Plane): The master node, which current Kubernetes documentation calls the control plane, is responsible for managing the Kubernetes cluster. It consists of several components:

API Server: The API server is the front end of the Kubernetes control plane. It exposes the Kubernetes API and is the central point for all administrative tasks.

etcd: etcd is a distributed key-value store used to store all cluster data, including configuration and state information.

Controller Manager: The controller manager runs controller processes that handle routine tasks in the cluster, such as node management, replication, and endpoint management.

Scheduler: The scheduler is responsible for assigning workloads to nodes based on resource availability and other constraints.

Worker Nodes: Worker nodes run the containerized applications. Each worker node contains the following components:

Kubelet: The kubelet is an agent that runs on each worker node. It ensures that containers are running in a Pod and communicates with the master node.

Kube-proxy: Kube-proxy is a network proxy that runs on each worker node. It maintains the network rules that route traffic addressed to a Service to the Pods behind it.

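Container Runtime: The container runtime is the software responsible for running containers. Kubernetes supports runtimes that implement the Container Runtime Interface (CRI), such as containerd and CRI-O. Built-in Docker support (dockershim) was removed in Kubernetes 1.24, so Docker Engine now requires the cri-dockerd adapter.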

Pods: Pods are the smallest and simplest Kubernetes objects. A Pod represents a single instance of a running process in a cluster and can contain one or more containers.

Services: Services are an abstraction that defines a logical set of Pods and a policy for accessing them. Services enable communication between different parts of an application and can expose Pods to the outside world.

ConfigMaps and Secrets: ConfigMaps and Secrets are used to manage configuration data and sensitive information, respectively. They allow you to decouple configuration artifacts from image content to keep containerized applications portable.

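Volumes: Volumes give the containers in a Pod access to storage. Some types, such as emptyDir, last only as long as the Pod, while persistent volumes outlive it. Kubernetes supports various types of volumes, including hostPath, NFS, and cloud-provider-specific volumes.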

Namespaces: Namespaces provide a way to divide cluster resources between multiple users. They are useful for organizing and managing resources in a multi-tenant environment.

Ingress: Ingress is an API object that manages external access to services, typically HTTP. Ingress can provide load balancing, SSL termination, and name-based virtual hosting.

Understanding these components is crucial for effectively managing and deploying applications in a Kubernetes environment.
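The relationship between Services and Pods described above can be sketched in a few lines of Python. A Service with an equality-based selector targets every Pod whose labels contain all of the selector's key/value pairs, and by default only ready Pods receive traffic. The `Pod` class and `select_pods` function are hypothetical names used for illustration; they are not part of any Kubernetes client library.

```python
from dataclasses import dataclass, field


@dataclass
class Pod:
    """Illustrative stand-in for a Pod: a name, its labels, and readiness."""
    name: str
    labels: dict = field(default_factory=dict)
    ready: bool = True


def select_pods(selector: dict, pods: list) -> list:
    """Return names of ready Pods whose labels include every selector pair."""
    if not selector:
        # A Service without a selector gets no automatically managed endpoints.
        return []
    return [
        p.name
        for p in pods
        if p.ready and all(p.labels.get(k) == v for k, v in selector.items())
    ]


if __name__ == "__main__":
    pods = [
        Pod("web-1", {"app": "web", "tier": "frontend"}),
        Pod("web-2", {"app": "web"}, ready=False),
        Pod("db-1", {"app": "db"}),
    ]
    # Only web-1 is both labelled app=web and ready.
    print(select_pods({"app": "web"}, pods))
```

Because matching is based on labels rather than Pod names or IP addresses, Pods can be replaced or rescheduled without any change to the Service definition.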

Note: This article lists the components of K8s only as a basic overview. Upcoming articles will go deeper into each component.

Cluster Architecture

Flow Example

Imagine you have a web application you want to deploy.
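As a rough sketch of what such a deployment definition contains, the Python snippet below builds a Deployment manifest as a dictionary and prints it as JSON, a format that `kubectl apply -f` accepts alongside YAML. The name `web`, the image `nginx:1.27`, the replica count of three, and the resource requests are placeholders chosen for illustration, not values from a real setup.

```python
import json


def build_deployment(name: str, image: str, replicas: int) -> dict:
    """Return a minimal apps/v1 Deployment manifest (illustrative values only)."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels in the Pod template below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "resources": {
                                "requests": {"cpu": "250m", "memory": "128Mi"}
                            },
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_deployment("web", "nginx:1.27", 3), indent=2))
```

The manifest only states the desired outcome (three replicas of one image with given resource requests); it says nothing about which nodes to use or how to start the containers, which is what the rest of the flow takes care of.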

Step-by-Step Flow

Define Deployment: You create a YAML file that defines the deployment of your web application. This file includes details like the number of replicas, the container image to use, and the resources required.

Submit to API Server: You submit this YAML file to the Kubernetes API server, for example with kubectl apply.

Store in etcd: The API server validates and stores this configuration in etcd.

Scheduler Assigns Nodes: The controllers behind the deployment create the pods (instances of your application). The Scheduler then checks which nodes have the resources to run each pod and assigns it to a specific worker node.

Kubelet on Worker Nodes: The Kubelet on each assigned worker node watches the API server, sees the pods assigned to its node, and starts them.

Container Runtime Launches Pods: The container runtime (e.g., containerd) on each node pulls the specified container image and starts the containers.

Kube Proxy Handles Networking: The Kube Proxy on each node sets up the network rules that route Service traffic to the pods, so that other pods and external clients can reach them. Direct pod-to-pod networking is provided by the cluster's network plugin.

Monitor and Maintain: The Controller Manager continuously monitors the cluster’s state. If a pod crashes or a node goes down, it creates a replacement pod, which the Scheduler then assigns to a healthy node, ensuring the desired state (for example, three running replicas) is maintained.
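The controller and scheduler steps of this flow can be imitated with a small in-memory Python sketch. It is a toy model under stated assumptions, not how Kubernetes is implemented: `Node`, `Pod`, `reconcile`, and `schedule` are hypothetical names, CPU is the only resource considered, the scheduler simply picks the fitting node with the most free CPU, and the controller only handles the case of too few pods.

```python
import itertools
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    cpu_free_m: int  # free CPU in millicores


@dataclass
class Pod:
    name: str
    cpu_request_m: int
    node: Optional[str] = None  # None means "not yet scheduled"


def reconcile(desired: int, pods: list, cpu_request_m: int, ids) -> None:
    """Controller step: create Pod records until the desired count is reached."""
    while len(pods) < desired:
        pods.append(Pod(f"web-{next(ids)}", cpu_request_m))


def schedule(pods: list, nodes: list) -> None:
    """Scheduler step: bind each unscheduled Pod to a node that has room."""
    for pod in pods:
        if pod.node is not None:
            continue
        fits = [n for n in nodes if n.cpu_free_m >= pod.cpu_request_m]
        if not fits:
            continue  # no node fits, so the Pod stays unscheduled (Pending)
        best = max(fits, key=lambda n: n.cpu_free_m)
        best.cpu_free_m -= pod.cpu_request_m
        pod.node = best.name


if __name__ == "__main__":
    nodes = [Node("node-a", 1000), Node("node-b", 500)]
    pods, ids = [], itertools.count(1)

    reconcile(3, pods, 250, ids)   # desired state: three replicas
    schedule(pods, nodes)
    print([(p.name, p.node) for p in pods])

    crashed = pods.pop(0)          # simulate a pod crash
    for n in nodes:                # release the CPU it was using
        if n.name == crashed.node:
            n.cpu_free_m += crashed.cpu_request_m

    reconcile(3, pods, 250, ids)   # controller notices 2 < 3, adds one
    schedule(pods, nodes)          # scheduler places the replacement
    print([(p.name, p.node) for p in pods])
```

The split between the two functions reflects the division of labour in the flow: the controller only decides how many pods should exist, and the scheduler only decides where each unscheduled pod should run.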

Conclusion

In summary, Kubernetes is a powerful tool for managing applications in containers. It helps you deploy, scale, and manage applications efficiently. Key features include:

Scalability: Automatically adjusts the number of application instances based on demand.

Portability: Works across different environments like on-premises, public clouds, and hybrid setups.

High Availability: Keeps applications available by distributing them across multiple nodes.

Self-Healing: Automatically restarts or replaces failed containers.

Declarative Configuration: Lets you define the desired state of your system, which Kubernetes then maintains.

Extensibility: Can be enhanced with various plugins and tools.

Efficient Resource Management: Optimizes resource use to ensure smooth application performance.

Community and Ecosystem: Supported by a large community with extensive documentation and tools.

Understanding Kubernetes' architecture and components, like master and worker nodes, Pods, and Services, helps you manage applications more effectively. Future articles will dive deeper into each component, making it easier to master Kubernetes and use it to its full potential.
