Enhancing Speech-to-Text Capabilities Locally with OpenAI's Whisper

Kevin Loggenberg

Have you ever worked on a project, thinking that adding speech-to-text functionality would make it truly extraordinary, only to be disappointed by outdated solutions that require you to constantly repeat yourself?

With OpenAI's Whisper, you can leverage advanced speech-to-text technology locally. Whisper offers accurate and reliable transcription, allowing you to enhance your projects effortlessly. Say goodbye to the frustrations of old-school speech recognition systems and hello to seamless, effective, and modern speech-to-text capabilities.

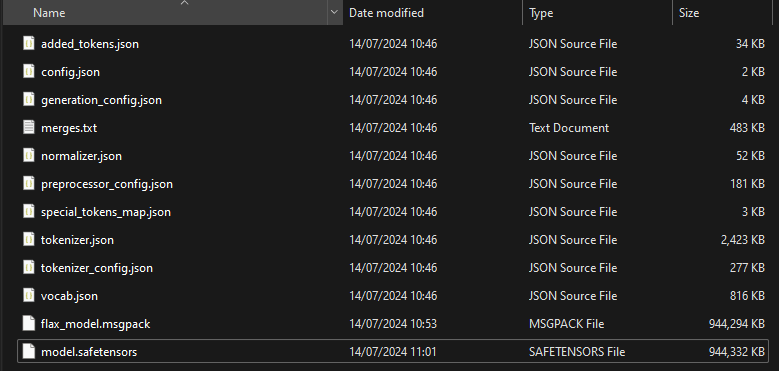

Step 1: Download the files

To enhance your speech-to-text capabilities, you'll need to download specific model files from Hugging Face. These models will bring a touch of AI magic, improving the accuracy and performance beyond what standard speech-to-text libraries offer.

While the download process can be automated, manually downloading and organizing the files ensures you have control over their location. This approach is beneficial from a file administration standpoint, especially if you plan to use the model for other purposes in the future.
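If you would rather script the download while still choosing the folder yourself, here is a minimal sketch. It assumes the `huggingface_hub` package is available and that the model repository is `openai/whisper-small` (inferred from the folder name used later in this article). The `models` root folder and both helper names are hypothetical.

```python
from pathlib import Path


def local_model_dir(repo_id, models_root):
    """Build a folder path such as models/openai-whisper-small for a repo id."""
    return Path(models_root) / repo_id.replace("/", "-")


def download_whisper(repo_id="openai/whisper-small", models_root="models"):
    """Download every file of the model repository into a folder you control."""
    # Imported lazily so the path helper above works without the package.
    from huggingface_hub import snapshot_download

    target = local_model_dir(repo_id, models_root)
    snapshot_download(repo_id=repo_id, local_dir=str(target))
    return target
```

Calling `download_whisper()` once should leave you with a folder that you can pass to `from_pretrained` in Step 3.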

Step 2: Install the packages

There are a few packages you will need to install. Since we are going to be using our CPU for this, the installation process is quite straightforward and shouldn't be a pain.

Transformers and Torch:

These packages are essential for loading and using the AI models.

pip install transformers torch

Sounddevice and Scipy:

These packages are required for recording and processing audio.

pip install sounddevice scipy

Pocketsphinx:

This package is used for real-time speech recognition.

pip install pocketsphinx

Additional Packages:

Depending on your environment, you might need other packages like numpy. The code also uses winsound, which ships with Python on Windows and does not need to be installed separately.

pip install numpy
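Before moving on, it can help to confirm that everything installed correctly. The following is a small sketch using only the standard library. The `REQUIRED` list mirrors the packages named in this step, and `missing_packages` is a hypothetical helper name.

```python
import importlib.util

# Import names of the packages installed in this step.
REQUIRED = ["transformers", "torch", "sounddevice", "scipy", "pocketsphinx", "numpy"]


def missing_packages(names):
    """Return the names that cannot be found in the current environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]
```

Running `print(missing_packages(REQUIRED))` should print an empty list once all the installs have succeeded.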

Step 3: Implementing the Speech-to-Text Code

In this step, we'll walk through the code that uses OpenAI's Whisper model to perform speech-to-text tasks. We'll cover initializing the model, recording audio, detecting a wake word (I made it 'Jasper'), and transcribing the recorded audio.

Initializing the Model and Processor

import os

import torch

from transformers import WhisperProcessor, WhisperForConditionalGeneration

# Initialize model and processor

processor = WhisperProcessor.from_pretrained("D:\\Models\\openai-whisper-small")

model = WhisperForConditionalGeneration.from_pretrained("D:\\Models\\openai-whisper-small")

model.config.forced_decoder_ids = None

Here, we're loading the pre-trained Whisper model and processor from the specified directory. This setup ensures that the model is ready to process and transcribe audio data.

Setting Up Recording Parameters

Next, we define the parameters for audio recording.

import sounddevice as sd

import numpy as np

from scipy.io.wavfile import write

import queue

import time

# Parameters for recording

sample_rate = 16000

record_duration = 5 # duration of recording after wake word detection

# Create a queue to store the audio data

audio_queue = queue.Queue()

In this section, we set the sample rate and the duration for which the audio will be recorded after the wake word is detected. We also create a queue to store the audio data.

An important thing to note here: the model expects audio at a 16000 Hz (16 kHz) sample rate, which is why sample_rate is set to 16000. Audio at any other rate will not work and must be resampled first.
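If your audio comes from a source that uses a different rate (a 44100 Hz file, for example), you can resample it before transcription. This is a minimal sketch using `scipy.signal.resample_poly`. The helper name `resample_to_16k` is hypothetical, and the code assumes a one-dimensional mono signal.

```python
from math import gcd

import numpy as np
from scipy.signal import resample_poly

TARGET_RATE = 16000  # the rate used throughout this article


def resample_to_16k(audio, original_rate):
    """Resample a 1-D mono signal from original_rate to 16 kHz."""
    audio = np.asarray(audio, dtype=np.float32)
    if original_rate == TARGET_RATE:
        return audio
    # Reduce the ratio target/original to the smallest integer up/down factors.
    divisor = gcd(TARGET_RATE, original_rate)
    up = TARGET_RATE // divisor
    down = original_rate // divisor
    return resample_poly(audio, up, down).astype(np.float32)
```

One second of 44100 Hz audio (44100 samples) comes back as 16000 samples, ready to pass to the processor.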

Transcribing the Audio

We then define a function to process and transcribe the recorded audio.

# Function to process and transcribe audio

def transcribe_audio(audio_data):

    input_features = processor(audio_data, sampling_rate=sample_rate, return_tensors="pt").input_features

    predicted_ids = model.generate(input_features)

    transcription = processor.batch_decode(predicted_ids, skip_special_tokens=True)

    return transcription[0]

This function takes the audio data, processes it using the Whisper processor, and then generates a transcription using the model.

Recording Audio

Next, we define a function that records audio from the microphone using the sounddevice library.

# Function to record audio after wake word is detected

def record_audio(duration, sample_rate=16000):

    print("Recording...")

    audio = sd.rec(int(duration * sample_rate), samplerate=sample_rate, channels=1, dtype='float32')

    sd.wait()

    print("Recording finished.")

    winsound.Beep(1000, 200)

    return audio

This function uses the sounddevice library to record audio for a specified duration. A beep sound indicates the end of the recording.

Detecting the Wake Word

We then set up the wake word detection using the Pocketsphinx library.

from pocketsphinx import LiveSpeech

import winsound

# Initialize wake word detection

speech = LiveSpeech(lm=False, dic='custom.dict',

                    keyphrase='jasper', kws_threshold=1e-20)

print("Listening for wake word...")

Here, we initialize LiveSpeech with the parameters needed to detect the wake word "jasper": a custom dictionary and a keyphrase threshold. You can customize this wake word in 'whisper.py' and the 'custom.dict' file, or you can remove it entirely. I just thought it was a cool way to initiate the recording process.
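The 'custom.dict' file is a plain-text pronunciation dictionary with one word per line followed by its phonemes. The sketch below writes such a file. Note that the phoneme sequence `JH AE S P ER` for "jasper" is my assumption based on the CMU pronunciation style, so check it against the file in the repo. The helper name `write_keyphrase_dict` is hypothetical.

```python
from pathlib import Path


def write_keyphrase_dict(entries, path="custom.dict"):
    """Write a pronunciation dictionary: one 'word PH1 PH2 ...' line per entry."""
    lines = [f"{word} {' '.join(phones)}" for word, phones in entries.items()]
    dict_path = Path(path)
    dict_path.write_text("\n".join(lines) + "\n", encoding="utf-8")
    return dict_path


# Assumed phonemes for the wake word; adjust if detection is unreliable.
WAKE_WORDS = {"jasper": ["JH", "AE", "S", "P", "ER"]}
```

Calling `write_keyphrase_dict(WAKE_WORDS)` creates 'custom.dict' in the current folder. To use a different wake word, add its entry here and change the keyphrase argument to match.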

Main Loop for Wake Word Detection and Transcription

Finally, we create a loop that listens for the wake word, records audio, and transcribes it.

for phrase in speech:

    winsound.Beep(1000, 200)

    os.system('echo \a')

    print("Wake word detected")

    # Record audio

    audio_data = record_audio(record_duration)

    # Preprocess audio data

    audio_data = audio_data.mean(axis=1) if audio_data.ndim > 1 else audio_data

    # Transcribe audio data

    transcription = transcribe_audio(audio_data)

    print("Transcription:", transcription)

    print("Listening for wake word...")

In this loop, once the wake word is detected, a beep sound is played, and the system records audio for the specified duration. The recorded audio is then preprocessed (if necessary) and transcribed using the Whisper model. The transcription is printed to the console.
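The preprocessing line in the loop collapses the (samples, channels) array returned by sounddevice into a one-dimensional signal. If your audio might also arrive as integer samples (for example, read from a 16-bit WAV file), a slightly more general sketch could look like this. The helper name `to_mono_float32` is hypothetical and not part of the original script.

```python
import numpy as np


def to_mono_float32(audio):
    """Return a 1-D float32 signal from mono/stereo, integer or float audio."""
    audio = np.asarray(audio)
    if np.issubdtype(audio.dtype, np.integer):
        # Scale integer samples (e.g. int16) into roughly the -1.0 to 1.0 range.
        scale = float(np.iinfo(audio.dtype).max)
        audio = audio.astype(np.float32) / scale
    else:
        audio = audio.astype(np.float32)
    if audio.ndim > 1:
        # Average the channels: shape (samples, channels) becomes (samples,).
        audio = audio.mean(axis=1)
    return audio
```

With this helper, the preprocessing step could become `audio_data = to_mono_float32(audio_data)`.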

By following these sections, you should be able to implement a robust speech-to-text system using OpenAI's Whisper model. This step-by-step explanation helps in understanding each part of the code and how they work together to achieve the desired functionality.

OR

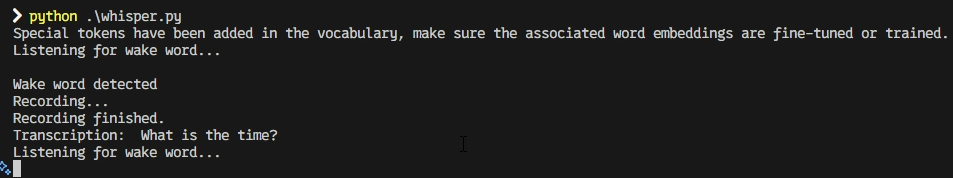

Step 4: Running the code

Below is a screenshot of me running the Python script, which you will find in the repo. I tried to add a little flair by adding a wake word to give it a bit of an assistant feel. By default, the project will analyze and transcribe a recording file. My process is:

Wait for the wake word (accomplished using pocketsphinx's LiveSpeech).

Record for 5 seconds (you can change the duration).

Transcribe the recording.

Delete the recording.
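The code walked through in Step 3 keeps the recording in memory, so there is nothing to delete there. If you do want the file-based variant of the last three steps, here is one way it might look, using the `write` function from scipy.io.wavfile that was imported earlier. The helper name `with_temporary_recording` and the `handler` callback are hypothetical. The script in the repo may do this differently.

```python
import os
import tempfile

import numpy as np
from scipy.io.wavfile import read, write


def with_temporary_recording(audio, sample_rate, handler):
    """Save audio to a temporary WAV file, pass its path to handler, then delete it."""
    with tempfile.TemporaryDirectory() as tmp_dir:
        wav_path = os.path.join(tmp_dir, "recording.wav")
        write(wav_path, sample_rate, audio)
        result = handler(wav_path)
    # Leaving the with-block removes the folder and the recording inside it.
    return result, wav_path
```

Here `handler` would be whatever function loads the file and transcribes it. The recording is gone as soon as the handler returns.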

GitHub Repository: Can be found here

Thank you

For all contributions and open sourced items, you have made it possible for software developers to remain relevant.

