How I built Jargonize using Next.js, the Groq API and Vercel

Vineeta Jain

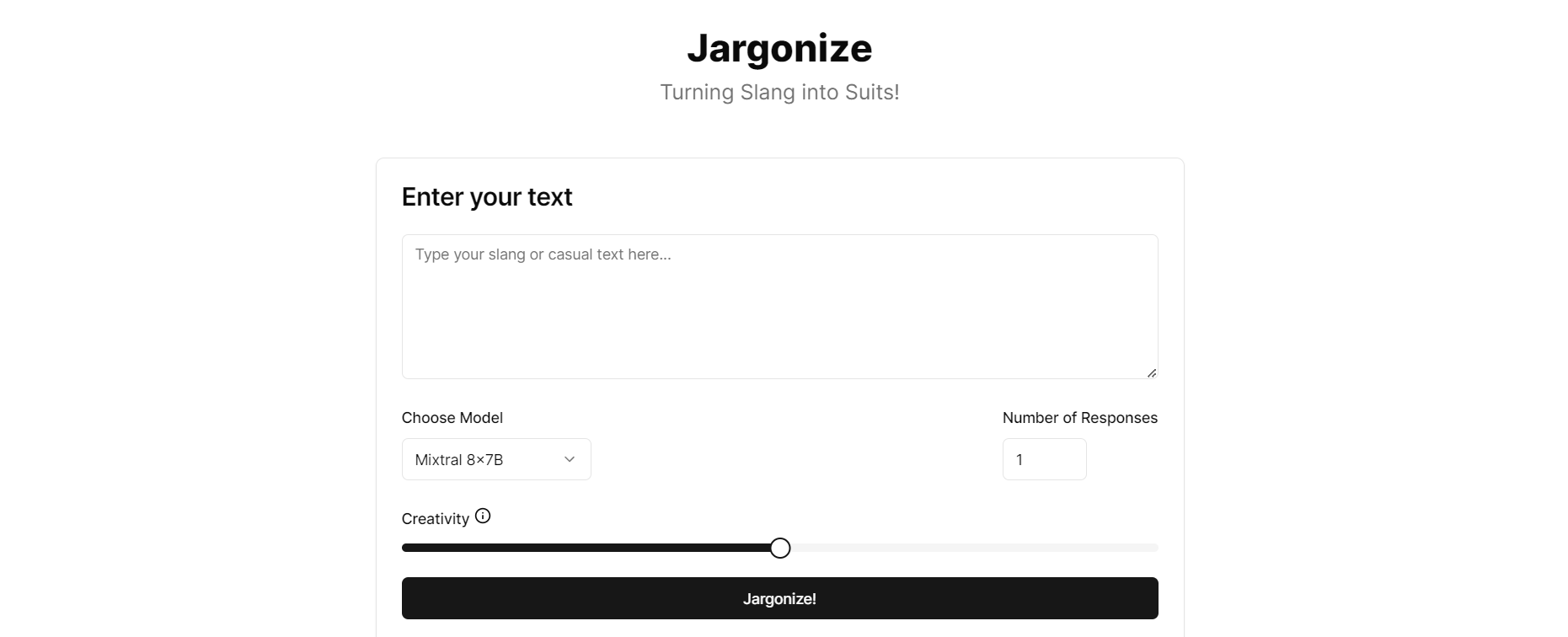

In this blog post, I will walk you through how I built Jargonize, a web application that uses the Groq API to transform casual text into professional corporate language. The project is built with Next.js, uses UI components from Shadcn, and is deployed on Vercel.

Project Overview

Jargonize is a simple yet powerful application that converts slang or casual text into professional corporate speak: users enter any text and get a more formal version back. The frontend is built with Next.js and Shadcn components for a polished UI, and the backend uses the Groq API to run a large language model (LLM). Groq acts as the bridge: the backend sends the user's text to the LLM through the API and receives the "jargonized" version, which the LLM produces by rephrasing the text using corporate language patterns.
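
To make the "bridge" more concrete, here is a minimal sketch of a single round trip to Groq. It assumes Groq's OpenAI-compatible chat completions endpoint and an API key passed in by the caller. The system prompt and the function names (`buildRequestBody`, `extractText`, `jargonizeOnce`) are hypothetical: the post does not show Jargonize's actual prompt, or whether it calls this endpoint directly or through Groq's official SDK.

```typescript
// Hypothetical system prompt; the real Jargonize prompt is not shown in the post.
const SYSTEM_PROMPT =
  "Rewrite the user's text in formal corporate language. Reply with the rewritten text only.";

// Groq's OpenAI-compatible chat completions endpoint.
const GROQ_URL = "https://api.groq.com/openai/v1/chat/completions";

type ChatMessage = { role: "system" | "user" | "assistant"; content: string };

// Only the fields of the response that this sketch reads.
interface ChatCompletionResponse {
  choices: { message: { content: string | null } }[];
}

// Build the JSON body for one chat completion: a system instruction plus the user's text.
export function buildRequestBody(text: string, model: string) {
  const messages: ChatMessage[] = [
    { role: "system", content: SYSTEM_PROMPT },
    { role: "user", content: text },
  ];
  return { model, messages };
}

// Pull the generated text out of the first choice, failing loudly if it is missing.
export function extractText(data: ChatCompletionResponse): string {
  const content = data.choices[0]?.message?.content;
  if (!content) throw new Error("Groq response contained no text");
  return content.trim();
}

// Send one request to Groq and return the "jargonized" text.
export async function jargonizeOnce(text: string, model: string, apiKey: string): Promise<string> {
  const res = await fetch(GROQ_URL, {
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      Authorization: `Bearer ${apiKey}`,
    },
    body: JSON.stringify(buildRequestBody(text, model)),
  });
  if (!res.ok) throw new Error(`Groq API error: ${res.status}`);
  return extractText((await res.json()) as ChatCompletionResponse);
}
```

Keeping the request building and response parsing in small pure functions means they can be checked without calling the API; the model ID is whatever the user picked in the UI.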

Tech Stack

Next.js: For the overall framework, server-side rendering, and the API routes.

Groq API: For running the LLMs that transform the text.

Shadcn: For UI components.

Vercel: For deployment.

Putting It All Together

Here's a simplified breakdown of how Jargonize works:

User enters casual text in the input field.

User selects the desired LLM model and number of responses to generate.

Clicking the "Jargonize!" button triggers an API call to the backend using Next.js API routes.

The backend uses the Groq API to send the text to the chosen LLM.

The LLM generates the requested number of "jargonized" versions of the text.

The API route receives the responses and sends them back to the frontend.

Jargonize displays the corporate versions in a user-friendly format.
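
The backend part of this flow can be sketched as the core of a Next.js API route. This is a minimal sketch, not the code from the Jargonize repository: the request shape (`text`, `model`, `count`), the `MAX_COUNT` limit, the status codes and the injected `complete` function are all assumptions. It produces the requested number of responses by issuing one completion per response in parallel, assuming each call yields a single rewrite.

```typescript
// Hypothetical request body sent by the frontend when "Jargonize!" is clicked.
interface JargonizeRequest {
  text: string;
  model: string;
  count: number;
}

// One LLM call (e.g. a wrapper around the Groq API), injected so the logic is testable.
type Complete = (text: string, model: string) => Promise<string>;

interface Reply {
  status: number;
  json: unknown;
}

const MAX_COUNT = 5; // assumed upper bound on responses per request

// Validate the untrusted JSON body; throws with a readable message on bad input.
function parseRequest(body: unknown): JargonizeRequest {
  const { text, model, count = 1 } = (body ?? {}) as Partial<JargonizeRequest>;
  if (typeof text !== "string" || text.trim() === "") throw new Error("text is required");
  if (typeof model !== "string" || model === "") throw new Error("model is required");
  if (!Number.isInteger(count) || count < 1 || count > MAX_COUNT) {
    throw new Error(`count must be an integer from 1 to ${MAX_COUNT}`);
  }
  return { text, model, count };
}

// Validate the input, fan out `count` independent completions, and collect the results.
async function handleJargonize(body: unknown, complete: Complete): Promise<Reply> {
  let req: JargonizeRequest;
  try {
    req = parseRequest(body);
  } catch (err) {
    return { status: 400, json: { error: (err as Error).message } };
  }
  const { text, model, count } = req;
  try {
    const results = await Promise.all(
      Array.from({ length: count }, () => complete(text, model)),
    );
    return { status: 200, json: { results } };
  } catch {
    return { status: 502, json: { error: "LLM request failed" } };
  }
}

// In an App Router route file (e.g. app/api/jargonize/route.ts) this could be wired up as:
// export async function POST(request: Request) {
//   const body = await request.json().catch(() => null);
//   const { status, json } = await handleJargonize(body, groqComplete); // groqComplete is hypothetical
//   return Response.json(json, { status });
// }
```

Injecting `complete` keeps validation and the parallel fan-out testable without network access; in the deployed route it would wrap a single Groq chat completion call, and the frontend would render the returned `results` array.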

GitHub Link

https://github.com/Ninjavin/jargonize

Deployed Link