Gendered Echoes: Unveiling Gender Bias in AI Voice Assistants

Nityaa Kalra

Think about the voice that guides you through your day. It reminds you of appointments, plays your favourite music, and even controls your smart lights. But have you ever stopped to consider the gender of that voice? Interestingly, most AI assistants sound female. This isn’t a random choice, and with the use of AI voice assistants rising since the start of the COVID-19 pandemic (a jump from 41% to 63%), it’s a pattern worth exploring.

This curious trend of female-voiced AI assistants raises some interesting questions. Does it simply reflect user preference? Or could there be deeper forces at play?

At times, these AI voice assistants sound suspiciously like a friendly neighbourhood customer service rep. There’s a reason for that, and it’s not just about sounding pleasant. Research suggests people might perceive female voices as more trustworthy and helpful. But is this preference for female voices entirely harmless? A study by UNESCO challenges this assumption, suggesting it might reinforce traditional gender roles, implying that women are better suited to supportive, service-oriented occupations.

As a result, the issue has been widely researched and debated. While there have been suggestions for developing more gender-neutral voice assistants, it remains uncertain whether this alone would address deeply ingrained societal stereotypes.

This isn’t about pointing fingers at designers, but about understanding the ‘why’ behind these choices. Understanding AI bias alone may not dismantle it entirely, but hey, it’s a small step towards an inclusive future for technology :)

This first post dives into the historical roots of gender bias in AI voice assistants.

From Telephone Operators to AI Assistants: A Historical Look at Female Voices in Technology

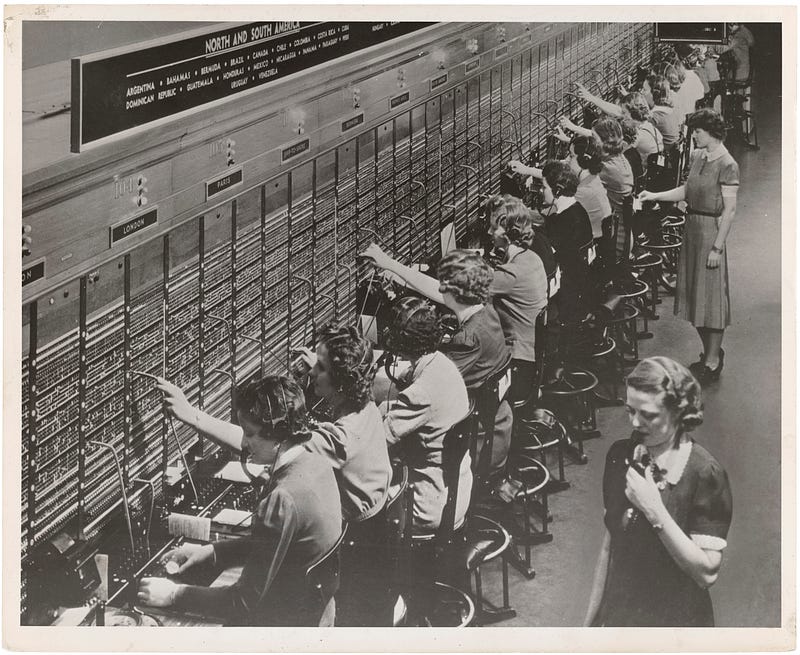

Let’s rewind to a time before smartphones and smart speakers, when the telephone was still a brand-new invention and connecting with someone required the guiding hand (or rather, voice) of a human intermediary: the telephone operator.

Back in 1878, Alexander Graham Bell himself, the mind behind the telephone, championed a switch: replacing the men initially hired as operators with women. That year, Emma Nutt became history’s first female telephone operator.

The trend persisted, and by the end of the 1880s, women’s voices were becoming the go-to choice for communication roles at the switchboard. In later decades, as new media like radio and television emerged, women’s voices featured there as well. This shift, however, wasn’t driven by gender equality but by a perception that female voices were more pleasant and better suited to service roles.

Fast forward to the digital age, and the legacy of the ‘female voice as ideal’ lives on. The pool of readily available recorded voices for AI assistants was heavily skewed towards women, simply because that’s what dominated communication technology for so long. This historical quirk, while unintentional, has limited the voice options in the training data available to developers of AI voice assistants today.

So, what does this mean for the future of AI assistants? Are we stuck with this stereotype, or is there room for more diversity? We’ll explore these questions and how this historical context continues to influence the design of these voice assistants in the next post.

References

C. Chin-Rothmann and M. Robison, “How AI bots and voice assistants reinforce gender bias,” Brookings, 23-Nov-2020. [Online]. Available: https://www.brookings.edu/articles/how-ai-bots-and-voice-assistants-reinforce-gender-bias/.

E. H. Schwartz, “Coronavirus lockdown is upping voice assistant interaction in the UK: Report,” Voicebot.ai, 07-May-2020. [Online]. Available: https://voicebot.ai/2020/05/07/coronavirus-lockdown-is-upping-voice-assistant-interaction-in-the-uk-even-when-it-ends-report/.

A. Elder, “Siri, stereotypes, and the mechanics of sexism,” Fem. Philos. Q., vol. 8, no. 3/4, 2022.

“I’d Blush If I Could: Closing Gender Divides in Digital Skills Through Education,” UNESCO, 2019.

“The woman who made history by answering the phone,” Time.

E. Fisher, “Gender bias in AI: Why voice assistants are female,” Adapt. [Online]. Available: https://www.adaptworldwide.com/insights/2021/gender-bias-in-ai-why-voice-assistants-are-female.

C. Nass, Y. Moon, and N. Green, “Are machines gender neutral? Gender‐stereotypic responses to computers with voices,” J. Appl. Soc. Psychol., vol. 27, no. 10, pp. 864–876, 1997.
