Exploring Cilium Network Integration with AWS EKS

Henry Uzowulu

Today I explore how Cilium networking works by integrating it into AWS EKS, which has been quite intriguing. Running a cluster with Cilium gives you eBPF-based networking with network policy enforcement, load balancing, and encryption, so it acts as something of a network superhero. We can also leverage the add-ons provided by EKS.

In this blog post, we will explore and test how to integrate Cilium networking directly with EKS. In the next post, we will look at the newer, more flexible EKS cluster creation options, which promise to simplify this integration.

Every EKS cluster comes with default networking add-ons, including AWS VPC CNI, CoreDNS, and kube-proxy, to enable pod and service operations in the cluster. In our deployment, we follow the Cilium documentation and taint the nodes so that application pods are only scheduled on a node once Cilium is ready there. As of this writing, this is limited to IPv4.

Pre-Requisites

You should have an AWS account.

Install kubectl

Install Helm

The Cilium operator requires EC2 privileges in order to perform ENI creation and IP allocation; the Cilium AWS ENI documentation lists them.

Install eksctl

Install awscli

Cilium CLI: Cilium provides a CLI tool that can collect the logs and debug information needed to troubleshoot your Cilium installation. You can install the Cilium CLI on Linux, macOS, or other platforms, on your local machine or on a server.

Create a configuration file named cluster-config.yaml. The managed nodes are tainted with node.cilium.io/agent-not-ready=true:NoExecute to ensure that application pods are not scheduled until Cilium is ready to manage them:

apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig

metadata:
  name: henry-eks
  region: us-east-1

managedNodeGroups:
  - name: henry-eks-app
    instanceType: t2.medium
    desiredCapacity: 1
    privateNetworking: true
    # taint nodes so that application pods are
    # not scheduled/executed until Cilium is deployed.
    # Alternatively, see the note below.
    taints:
      - key: "node.cilium.io/agent-not-ready"
        value: "true"
        effect: "NoExecute"

Create the cluster from this configuration file:

eksctl create cluster -f cluster-config.yaml
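Before installing Cilium, it can be worth confirming that the taint from cluster-config.yaml actually landed on the nodes. The following is a minimal sketch, assuming kubectl already points at the new cluster; the function name nodes_missing_cilium_taint is a hypothetical helper, not part of any tool. It prints the names of nodes that do not carry the node.cilium.io/agent-not-ready taint.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print every node that lacks the Cilium readiness taint.
nodes_missing_cilium_taint() {
  # One line per node: "<name><TAB><space-separated taint keys>"
  kubectl get nodes \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.taints[*].key}{"\n"}{end}' \
    | awk -F'\t' '$2 !~ /node\.cilium\.io\/agent-not-ready/ { print $1 }'
}
```

Running nodes_missing_cilium_taint right after cluster creation should print nothing if every node is tainted. Cilium removes the taint once the agent is ready on a node, so the check is only meaningful before the Helm install.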

Once the cluster is created, the AWS VPC CNI plugin is responsible for setting up the virtual network devices and for IP address management via ENIs. When Cilium runs in chaining mode on top of it, the Cilium CNI plugin attaches eBPF programs to the network devices set up by the AWS VPC CNI plugin in order to enforce network policies and perform load balancing and encryption.

To confirm which AWS VPC CNI version you are running (1.16.0 here) and check that it is compatible with Cilium, run:

kubectl -n kube-system get ds/aws-node -o json | jq -r '.spec.template.spec.containers[0].image'

602401143452.dkr.ecr.us-east-1.amazonaws.com/amazon-k8s-cni:v1.16.0-eksbuild.1

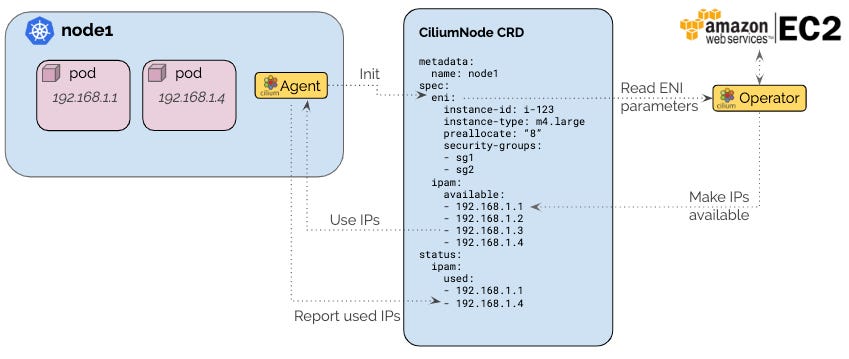
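The version can also be pulled out of the image reference and compared in a script. This is a small sketch under stated assumptions: cni_version and version_at_least are hypothetical helper names, the comparison relies on sort -V (available in GNU coreutils), and the minimum version you compare against is something to take from the Cilium documentation for your release.

```shell
#!/usr/bin/env bash
# Hypothetical helper: extract "1.16.0" from an image such as
# ...amazonaws.com/amazon-k8s-cni:v1.16.0-eksbuild.1
cni_version() {
  local image="$1"
  local tag="${image##*:}"   # keep only the tag after the last colon
  tag="${tag#v}"             # drop the leading "v"
  echo "${tag%%-*}"          # drop the "-eksbuild.N" suffix
}

# Hypothetical helper: succeed if version $1 is greater than or equal to $2.
version_at_least() {
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n1)" = "$2" ]
}
```

For example, passing the image string printed above to cni_version yields the bare version number, which can then be fed to version_at_least together with whatever minimum you need.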

Before we go deeper into installing Cilium in the EKS cluster, I'll discuss another feature, AWS ENI (Elastic Network Interface). An ENI is a virtual network interface that can be attached to the nodes in our cluster. Cilium's AWS ENI allocator hands out pod IP addresses from the IPs of these ENIs by communicating with the AWS EC2 API. Once Cilium is set up, the agent on each node creates a ciliumnodes.cilium.io custom resource matching the node name, and populates its ENI parameters by communicating with the EC2 metadata API to retrieve the instance ID and VPC information.

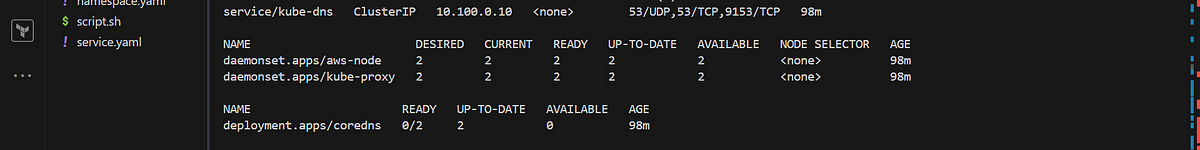

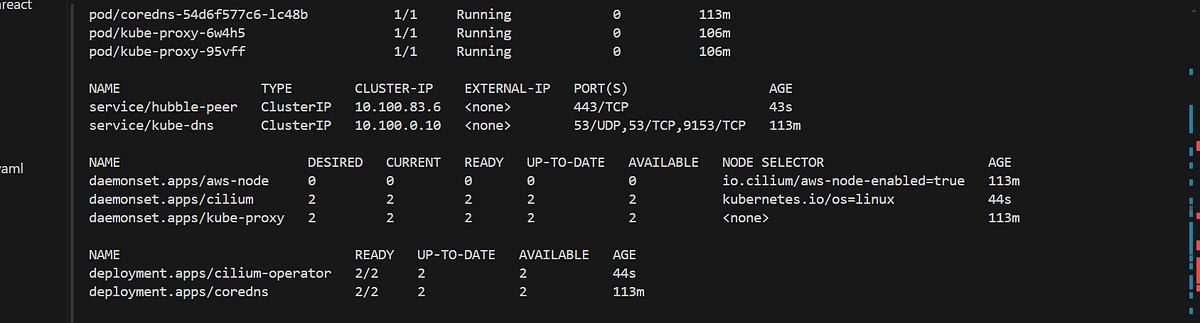

Now Cilium will manage the AWS ENIs instead of the VPC CNI, so the aws-node DaemonSet must be patched to prevent conflicting behavior:

kubectl -n kube-system patch daemonset aws-node --type='strategic' -p='{"spec":{"template":{"spec":{"nodeSelector":{"io.cilium/aws-node-enabled":"true"}}}}}'
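The patch adds a node selector that no node matches, so the DaemonSet should stop scheduling aws-node pods. A minimal sketch for checking this, assuming the DaemonSet status has had a moment to update; aws_node_disabled is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed when the aws-node DaemonSet no longer
# wants to run on any node (desiredNumberScheduled is 0).
aws_node_disabled() {
  local desired
  desired="$(kubectl -n kube-system get daemonset aws-node \
    -o jsonpath='{.status.desiredNumberScheduled}')"
  [ "${desired}" = "0" ]
}
```

Something like `aws_node_disabled && echo "aws-node is out of the way"` can then gate the Helm install in a script.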

Once the DaemonSet has been patched, we install Cilium on the EKS cluster using Helm. First, add the Cilium Helm repository:

helm repo add cilium https://helm.cilium.io/

After adding the repository on the local machine, install Cilium with helm install:

helm install cilium cilium/cilium --version 1.15.6 \
  --namespace kube-system \
  --set eni.enabled=true \
  --set ipam.mode=eni \
  --set egressMasqueradeInterfaces=eth0 \
  --set routingMode=native

If you created your cluster and did not taint the nodes with node.cilium.io/agent-not-ready, the unmanaged pods need to be restarted manually to ensure Cilium starts managing them. To do this:

kubectl get pods --all-namespaces -o custom-columns=NAMESPACE:.metadata.namespace,NAME:.metadata.name,HOSTNETWORK:.spec.hostNetwork --no-headers=true | grep '<none>' | awk '{print "-n "$1" "$2}' | xargs -L 1 -r kubectl delete pod

Validate the installation:

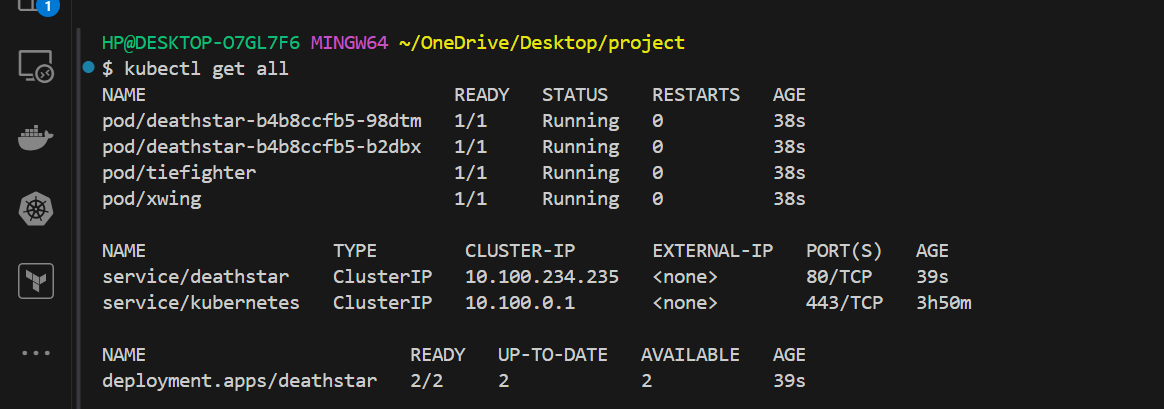
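The Cilium CLI from the prerequisites can run this check from the terminal. A minimal sketch, assuming the cilium binary is on your PATH and your kubeconfig points at the new cluster; validate_cilium is a hypothetical helper name, and this is not necessarily the exact command behind the screenshot:

```shell
#!/usr/bin/env bash
# Hypothetical helper: wait for Cilium to report ready, then show the agent pods.
validate_cilium() {
  # Blocks until the Cilium components are ready or the CLI gives up.
  cilium status --wait || return 1
  # One agent pod per node is expected.
  kubectl -n kube-system get pods -l k8s-app=cilium
}
```

If the status check fails, the function returns early so the failure is not hidden behind the pod listing.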

Here I tried something different from the installation guide: I deployed the Star Wars demo application from the Cilium repository, which consists of three microservices: deathstar, tiefighter, and xwing. The deathstar runs an HTTP service on port 80, exposed as a ClusterIP service, and provides landing services to spaceships. The tiefighter and xwing pods are similar client services that request landing. To deploy it, run:

kubectl create -f https://raw.githubusercontent.com/cilium/cilium/1.15.6/examples/minikube/http-sw-app.yaml

Cilium represents every pod as an endpoint in the Cilium agent. We can list the endpoints by running cilium-dbg endpoint list inside the agent pod on each node:

# the first node

kubectl -n kube-system exec cilium-cxvdh -- cilium-dbg endpoint list

# the second node

kubectl -n kube-system exec cilium-lsxht -- cilium-dbg endpoint list

These commands list the endpoints on each node. The agent pod names are specific to each cluster; to find yours, run:

kubectl -n kube-system get pods -l k8s-app=cilium

NAME           READY   STATUS    RESTARTS   AGE
cilium-cxvdh   1/1     Running   0          119m
cilium-lsxht   1/1     Running   0          119m

$ kubectl -n kube-system exec cilium-lsxht -- cilium-dbg endpoint list

Defaulted container "cilium-agent" out of: cilium-agent, config (init), mount-cgroup (init), apply-sysctl-overwrites (init), mount-bpf-fs (init), clean-cilium-state (init), install-cni-binaries (init)

ENDPOINT   POLICY (ingress)   POLICY (egress)   IDENTITY   LABELS (source:key[=value])                                              IPv6   IPv4             STATUS
           ENFORCEMENT        ENFORCEMENT
143        Disabled           Disabled          54154      k8s:app.kubernetes.io/name=tiefighter                                           192.168.92.83    ready
                                                           k8s:class=tiefighter
                                                           k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default
                                                           k8s:io.cilium.k8s.policy.cluster=default
                                                           k8s:io.cilium.k8s.policy.serviceaccount=default
                                                           k8s:io.kubernetes.pod.namespace=default
                                                           k8s:org=empire
147        Disabled           Disabled          4849       k8s:app.kubernetes.io/name=xwing                                                192.168.85.248   ready
                                                           k8s:class=xwing
                                                           k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default
                                                           k8s:io.cilium.k8s.policy.cluster=default
                                                           k8s:io.cilium.k8s.policy.serviceaccount=default
                                                           k8s:io.kubernetes.pod.namespace=default
                                                           k8s:org=alliance
735        Disabled           Disabled          4          reserved:health                                                                 192.168.75.160   ready
1832       Disabled           Disabled          1          k8s:alpha.eksctl.io/cluster-name=henry-eks-app                                                   ready
                                                           k8s:alpha.eksctl.io/nodegroup-name=henry-eks-1
                                                           k8s:eks.amazonaws.com/capacityType=ON_DEMAND
                                                           k8s:eks.amazonaws.com/nodegroup-image=ami-057ddb600f3bba07e
                                                           k8s:eks.amazonaws.com/nodegroup=henry-eks-1
                                                           k8s:eks.amazonaws.com/sourceLaunchTemplateId=lt-07908f6a5332b214e
                                                           k8s:eks.amazonaws.com/sourceLaunchTemplateVersion=1
                                                           k8s:node.kubernetes.io/instance-type=t2.medium
                                                           k8s:topology.kubernetes.io/region=us-east-1
                                                           k8s:topology.kubernetes.io/zone=us-east-1b
                                                           reserved:host
2579       Disabled           Disabled          6983       k8s:app.kubernetes.io/name=deathstar                                            192.168.79.49    ready
                                                           k8s:class=deathstar
                                                           k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default
                                                           k8s:io.cilium.k8s.policy.cluster=default
                                                           k8s:io.cilium.k8s.policy.serviceaccount=default
                                                           k8s:io.kubernetes.pod.namespace=default
                                                           k8s:org=empire
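The agent pod names used here (cilium-cxvdh, cilium-lsxht) will differ on every cluster. As a sketch of how to avoid hardcoding them, the loop below runs the same endpoint listing against whatever agent pods the k8s-app=cilium label selects; list_all_endpoints is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run "cilium-dbg endpoint list" in every Cilium agent pod.
list_all_endpoints() {
  local pod
  # "-o name" prints entries such as "pod/cilium-cxvdh", which kubectl exec accepts.
  for pod in $(kubectl -n kube-system get pods -l k8s-app=cilium -o name); do
    echo "== ${pod} =="
    # Name the container explicitly to avoid the "Defaulted container" notice.
    kubectl -n kube-system exec "${pod}" -c cilium-agent -- cilium-dbg endpoint list
  done
}
```

Each node's table is printed under a header with the pod name, so the output for multiple nodes stays easy to tell apart.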

Since no network policy is attached to any of the pods yet, both xwing (org=alliance) and tiefighter (org=empire) are allowed to connect to the deathstar service and request landing. To confirm that the pods can be reached:

$ kubectl exec xwing -- curl -s -XPOST deathstar.default.svc.cluster.local/v1/request-landing

Ship landed

$ kubectl exec tiefighter -- curl -s -XPOST deathstar.default.svc.cluster.local/v1/request-landing

Ship landed
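The same check can be scripted so that it fails loudly if a client stops getting through. A minimal sketch, assuming the demo pods run in the default namespace as deployed above; check_landing is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: send a landing request from each named client pod
# and return non-zero if any reply is not "Ship landed".
check_landing() {
  local client reply status=0
  for client in "$@"; do
    reply="$(kubectl exec "${client}" -- curl -s -XPOST \
      deathstar.default.svc.cluster.local/v1/request-landing)"
    echo "${client}: ${reply}"
    [ "${reply}" = "Ship landed" ] || status=1
  done
  return "${status}"
}
```

Calling `check_landing xwing tiefighter` prints one line per client and can be used as a quick regression check.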

Thank you, till next time.

LinkedIn: https://www.linkedin.com/in/emeka-henry-uzowulu-38900088/
