How to Automate Log File Analysis Using a Bash Script

Sahil Mhatre

The script below takes the path to a log file as its only argument and writes a summary report containing:

Date of analysis

Log file name

Total lines processed

Total error count

Top 5 error messages with their occurrence count

List of critical events with line numbers

Code:

```bash
#!/bin/bash

# Check if exactly one argument is passed
if [ $# -ne 1 ]; then
    echo "Usage: $0 <path to log file>" >&2
    exit 1
fi

# Assign the first argument to log_file
log_file="$1"

# Check if the log file exists and is a regular file
if [ ! -f "$log_file" ]; then
    echo "Error: Log file '$log_file' not found or is not a file." >&2
    exit 1
fi

# Check if the log file is readable
if [ ! -r "$log_file" ]; then
    echo "Error: Log file '$log_file' is not readable." >&2
    exit 1
fi

# Get the current date (DD-MM-YYYY)
dt=$(date +%d-%m-%Y)

# Get the number of lines in the log file
num_lines=$(wc -l < "$log_file")

# Count the lines that contain "error" (case-insensitive)
num_errors=$(grep -ci "error" "$log_file")

# Generate the summary report file
report_file="summary_report-$dt.txt"
echo "Date of analysis: $dt" > "$report_file"
echo "Log file name: $log_file" >> "$report_file"
echo "Total lines processed: $num_lines" >> "$report_file"
echo "Total error count: $num_errors" >> "$report_file"

# Get the top 5 error messages with their occurrence count
# (identical lines are grouped, so any timestamp is part of the comparison)
echo "Top 5 error messages with their occurrence count:" >> "$report_file"
grep -i "error" "$log_file" | sort | uniq -c | sort -nr | head -n 5 >> "$report_file"

# List critical events with line numbers (assuming 'critical' keyword for critical events)
echo "List of critical events with line numbers:" >> "$report_file"
grep -ni "critical" "$log_file" >> "$report_file"

# Print the report and say where it was saved
cat "$report_file"
echo "Summary report saved: $report_file"
```
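The script above appends to the report one line at a time and then prints it with `cat`. As a possible variation, the report lines can be grouped in braces and piped through `tee`, which prints and saves in a single step. This is only a minimal sketch: the function name `write_report` and its two parameters are hypothetical and not part of the script above, and it covers just three of the report lines.

```bash
#!/bin/bash
# Sketch only: write_report is a hypothetical helper, not part of the original script.
# It groups the report lines in { ... } and sends them through tee, so the report
# is shown on the terminal and saved to the file at the same time.
write_report() {
    local log_file=$1
    local report_file=$2
    {
        echo "Log file name: $log_file"
        echo "Total lines processed: $(wc -l < "$log_file")"
        echo "Total error count: $(grep -ci "error" "$log_file")"
    } | tee "$report_file"
}
```

Calling `write_report app.log summary.txt` (both file names are placeholders) would print the three lines and leave the same text in `summary.txt`. The trade-off is that `tee` overwrites the report file on every call, which matches the `>` used for the first line of the report in the script.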

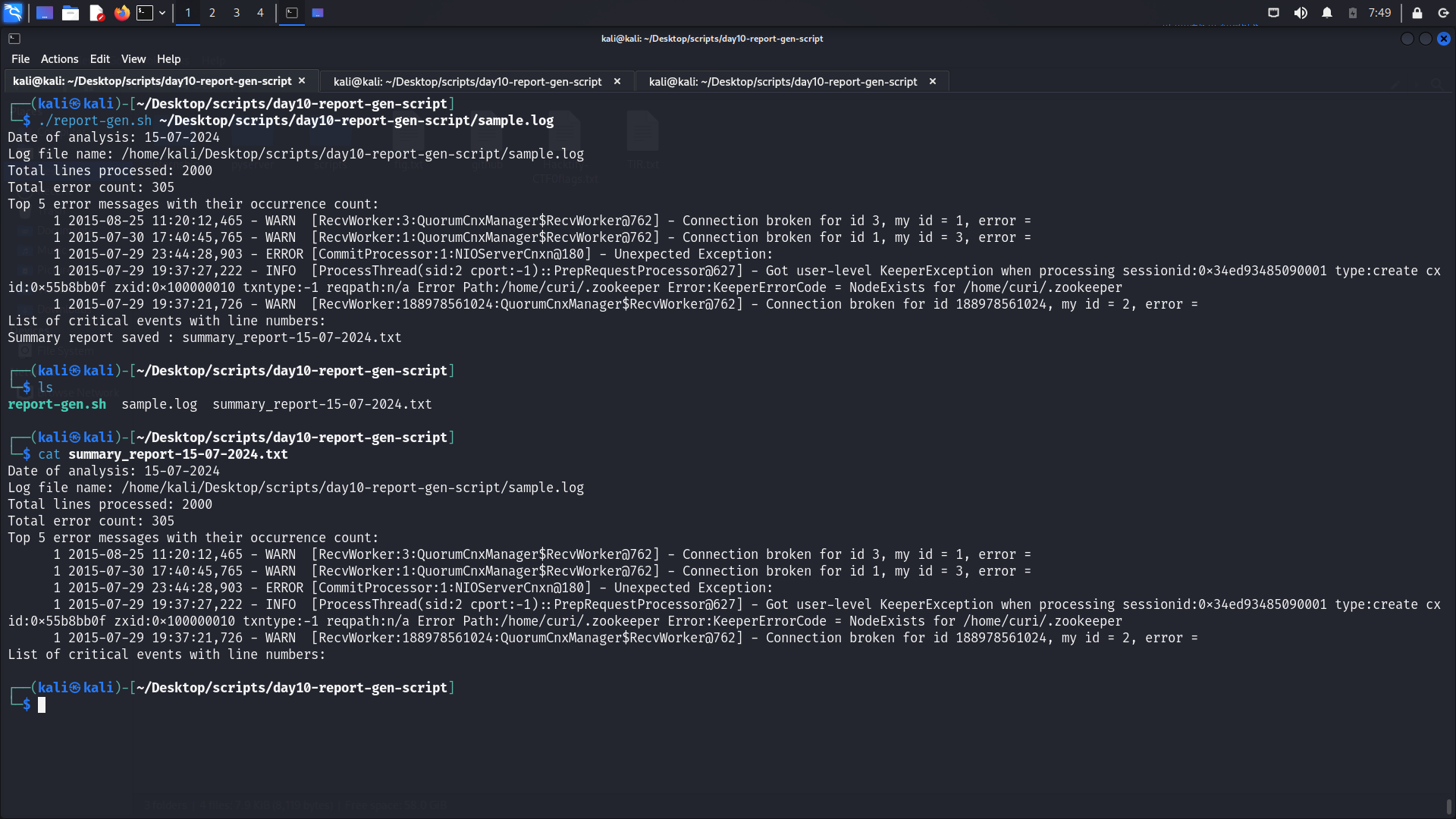

OUTPUT:

Log file used for analysis: https://github.com/logpai/loghub/blob/master/Zookeeper/Zookeeper_2k.log
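One thing to watch with a log like this: `sort | uniq -c` groups identical whole lines, so if every line starts with its own timestamp, nearly every line is unique and the "top 5" list mostly shows counts of 1. A possible workaround is to strip the prefix before counting. The sketch below assumes each line has the layout `<timestamp> - <LEVEL> [<source>] - <message>`, which you should check against your own file. The function name `top_error_messages` and its optional `limit` parameter are hypothetical, not part of the script above.

```bash
#!/bin/bash
# Sketch only: top_error_messages is a hypothetical helper.
# Assumption: lines look like "<timestamp> - <LEVEL> [<source>] - <message>".
# Lines that do not have at least three " - " separated parts are counted as they are.
top_error_messages() {
    local log_file=$1
    local limit=${2:-5}   # number of messages to show, default 5
    grep -i "error" "$log_file" \
        | awk -F' - ' '
            NF < 3 { print; next }
            {
                # Rebuild the message from the third field onward,
                # in case the message itself contains " - ".
                msg = $3
                for (i = 4; i <= NF; i++) msg = msg " - " $i
                print msg
            }' \
        | sort | uniq -c | sort -nr | head -n "$limit"
}
```

Running `top_error_messages Zookeeper_2k.log` would then count repeated message texts rather than repeated lines. Messages that differ only in an embedded ID or number are still counted separately; collapsing those would need additional, format-specific rules.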
