NVIDIA A6000 vs A100: GPU Benchmarks and Performance Analysis for Deep Learning (2024)

NovitaAI

Introduction

The NVIDIA A6000 and A100 are two powerful GPUs that have made a big impact in many fields. With top-notch performance and efficiency, they serve a wide range of professionals, from data scientists and financial analysts to genomic researchers.

In this blog, we’re going to compare the NVIDIA A6000 and A100 GPUs across several aspects. We’ll look at what makes them tick: their key features, how well they perform on different deep learning jobs, the technical details you might be curious about, and where these GPUs really shine in real-world use cases. Hopefully, by the end of this post, you’ll know which one suits you.

Overview of NVIDIA’s A6000 and A100 GPUs for Deep Learning

The NVIDIA A6000 and the A100 are really great at doing tough calculations and can handle big chunks of data without breaking a sweat. Both these GPUs pack enough punch in terms of computational power and memory bandwidth, making them ideal choices for both training deep learning models and running inference tasks efficiently.

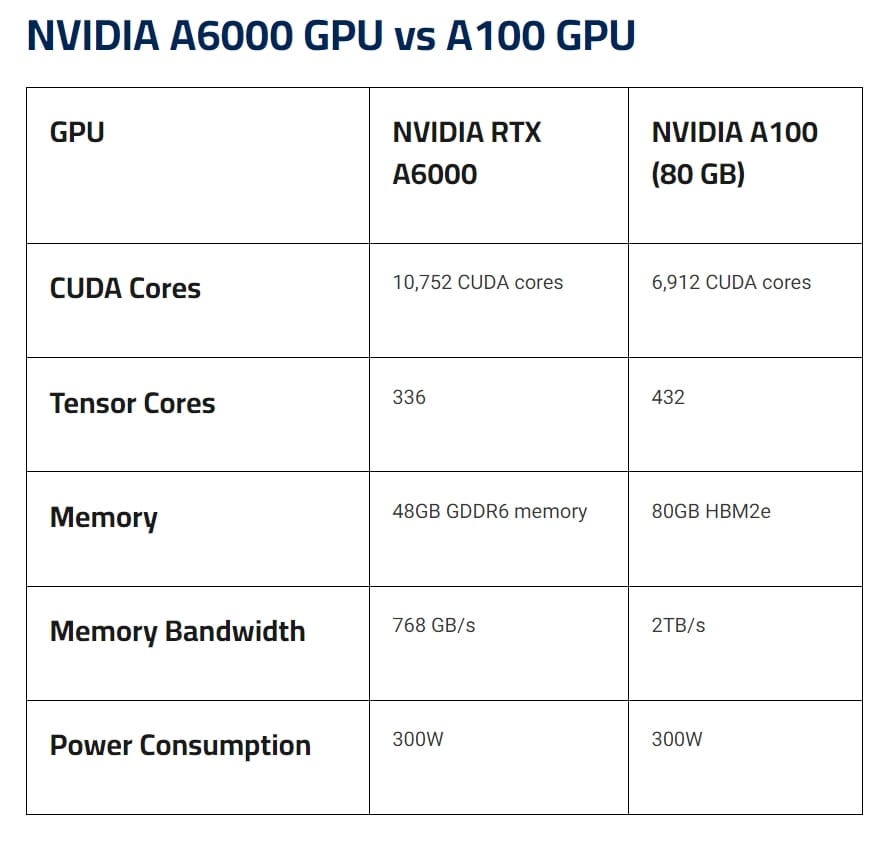

Key Features of NVIDIA A6000

a beast for deep learning tasks

10,752 CUDA cores

336 tensor cores

a whopping 48GB of GDDR6 memory

an impressive memory bandwidth of 768 GB/s

perfect for all sorts of AI stuff like figuring out what’s in pictures, understanding human language, or even recognizing speech patterns

good at speeding up both the training phase and inference part of deep learning projects

Key Features of NVIDIA A100

a top-notch GPU made for tackling deep learning and AI tasks

6,912 CUDA cores

432 tensor cores

a whopping 80GB of HBM2e memory

moves data super fast, with memory bandwidth of up to 2TB/s

smoothly manages huge amounts of data

works well on cloud platforms

an awesome pick for AI applications where being able to scale up or down easily matters a lot
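To put the two feature lists side by side, here is a minimal Python sketch that stores the numbers quoted above and prints the A100-to-A6000 ratio for each one. The `GpuSpec` class and `spec_ratios` helper are names made up for this illustration, and "up to 2TB/s" is rounded to 2000 GB/s as an assumption.

```python
from dataclasses import dataclass, fields
from typing import Dict


@dataclass(frozen=True)
class GpuSpec:
    """Headline specs as quoted in the feature lists (hypothetical container)."""
    name: str
    cuda_cores: int
    tensor_cores: int
    memory_gb: int
    bandwidth_gb_s: float


A6000 = GpuSpec("A6000", 10_752, 336, 48, 768.0)
# Assumption: "up to 2TB/s" is treated as a round 2000 GB/s.
A100 = GpuSpec("A100", 6_912, 432, 80, 2000.0)


def spec_ratios(a: GpuSpec, b: GpuSpec) -> Dict[str, float]:
    """Return a/b for every numeric field of the two specs."""
    return {
        f.name: getattr(a, f.name) / getattr(b, f.name)
        for f in fields(a)
        if f.name != "name"
    }


for key, value in spec_ratios(A100, A6000).items():
    print(f"{key}: A100 is {value:.2f}x the A6000")
```

The ratios show the trade-off at a glance: the A100 has fewer CUDA cores than the A6000 but more tensor cores, more memory, and much higher memory bandwidth.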

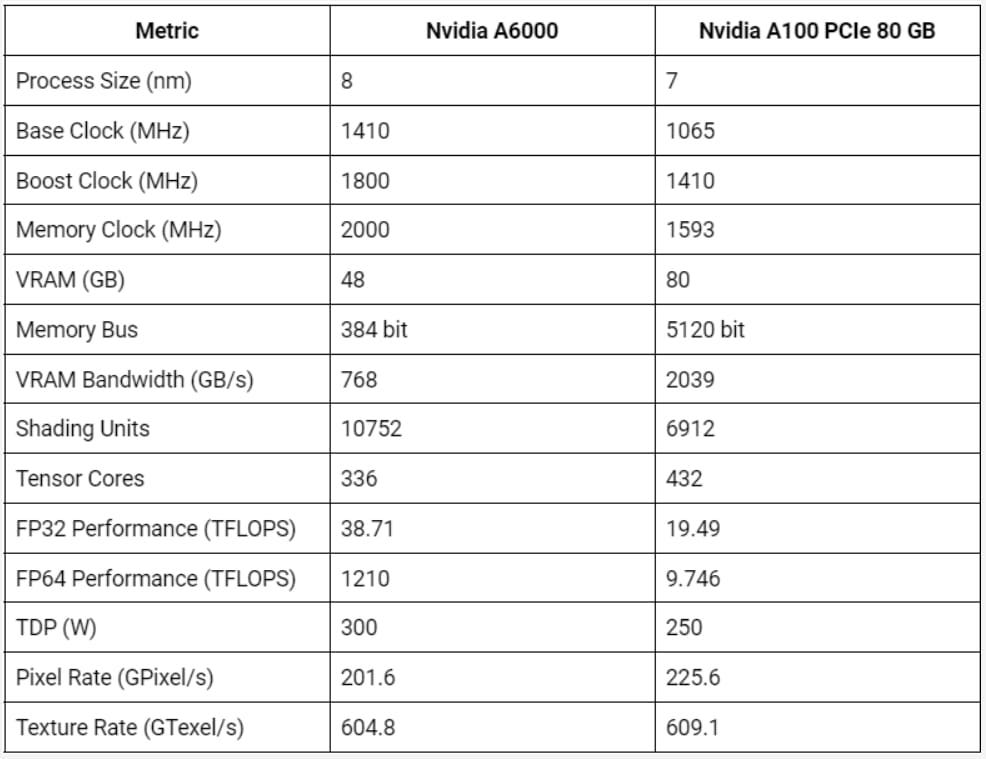

Performance Benchmarks: A6000 vs A100 in Deep Learning Tasks

To assess NVIDIA A6000 and A100 GPUs in deep learning tasks, we conducted tests involving training, stable diffusion work, and data processing.

Let’s analyze the performance of the A6000 and A100 GPUs.

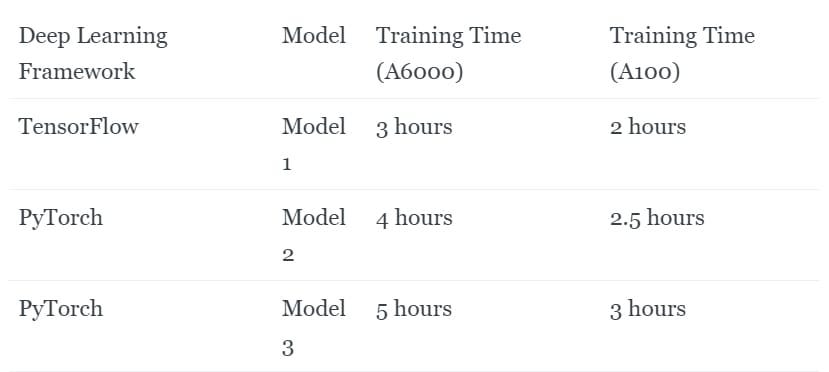

Training Performance Comparison

In our training performance comparison, we evaluated the NVIDIA A6000 and A100 GPUs using popular deep learning frameworks like TensorFlow and PyTorch. We trained various deep learning models, including those used in speech recognition tasks, to assess the GPUs’ performance.

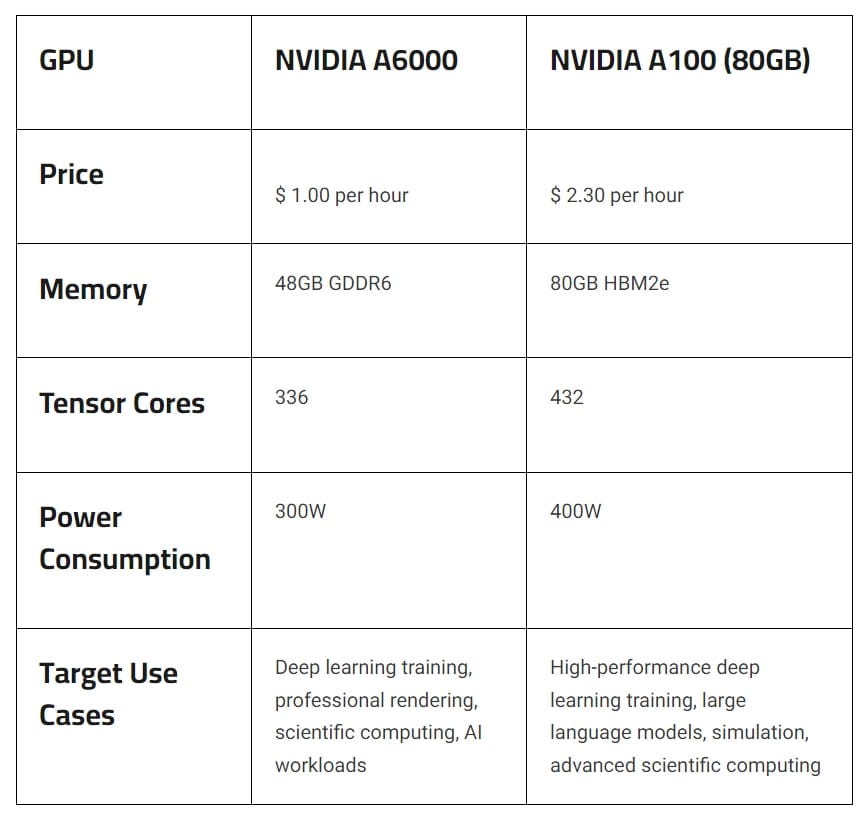

The summary below gives the results of our training performance comparison:

A100: The A100 shines in training large language models, image classification models, and other complex architectures. It achieves faster convergence and higher training throughput compared to the A6000.

A6000: While not as fast as the A100, the A6000 can still handle smaller models and basic training tasks efficiently.
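The benchmark script itself isn’t included in this post, so the following is only a sketch of how training throughput could be measured on either card. `measure_throughput` and `dummy_step` are hypothetical names; in a real run, `step_fn` would execute one forward/backward pass of your model.

```python
import time
from typing import Callable


def measure_throughput(
    step_fn: Callable[[], None],
    batch_size: int,
    warmup: int = 3,
    iters: int = 10,
) -> float:
    """Return samples per second for step_fn, which runs one training step."""
    # Warm-up iterations are excluded from timing (caches, lazy init, autotuning).
    for _ in range(warmup):
        step_fn()
    start = time.perf_counter()
    for _ in range(iters):
        step_fn()
    # Note: GPU kernels launch asynchronously. With PyTorch, step_fn should end
    # with torch.cuda.synchronize() so the timer sees the finished work.
    elapsed = time.perf_counter() - start
    return batch_size * iters / elapsed


def dummy_step() -> None:
    """CPU-only stand-in for a real training step (assumption for the demo)."""
    sum(i * i for i in range(10_000))


if __name__ == "__main__":
    rate = measure_throughput(dummy_step, batch_size=32)
    print(f"{rate:.0f} samples/s")
```

Running the same harness with the same model and batch size on both GPUs gives a like-for-like samples-per-second figure.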

Inference Speed and Efficiency

When it comes to inference speed and efficiency, the NVIDIA A100 GPU was a step ahead of the A6000.

Benchmarks have demonstrated that the A100 GPU delivers impressive inference performance across various tasks. For example, in object detection tasks using popular datasets like COCO, the A100 has shown faster inference times than previous-generation GPUs. This is particularly beneficial in real-time applications that require quick and accurate object detection, like self-driving cars or video surveillance.

With more tensor cores and bigger memory bandwidth, the A100 could do calculations and move data around faster. This made a big difference in jobs like recognizing images or understanding human language, where the A100 beat the A6000 every time. The ability of the A100 to work with large deep learning models and sort through complicated algorithms more smoothly also meant it got better efficiency scores.
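The bandwidth point can be made concrete with a back-of-the-envelope estimate. If a workload is memory-bound and has to read every model weight once per pass, the pass cannot finish faster than weight bytes divided by memory bandwidth. The sketch below applies that bound; the 7-billion-parameter FP16 model is a hypothetical example, and real latency also depends on compute, caching, and batch size.

```python
def min_pass_time_ms(num_params: float, bytes_per_param: int, bandwidth_gb_s: float) -> float:
    """Lower bound on one pass over the weights, in milliseconds.

    Assumes the pass is limited purely by memory bandwidth and that each
    weight is read exactly once.
    """
    total_bytes = num_params * bytes_per_param
    return total_bytes / (bandwidth_gb_s * 1e9) * 1e3


# Hypothetical 7B-parameter model stored in FP16 (2 bytes per parameter).
PARAMS = 7e9
for name, bandwidth in (("A6000", 768.0), ("A100", 2000.0)):
    print(f"{name}: at least {min_pass_time_ms(PARAMS, 2, bandwidth):.1f} ms per pass")
```

Under these assumptions the bound is roughly 18 ms on the A6000 and 7 ms on the A100, which tracks the ratio of their bandwidths.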

Technical Specifications and Architecture Analysis

To make a smart choice between the NVIDIA A6000 and A100 GPUs, it’s important to get the hang of their tech specs and design.

GPU Architecture: Two Flavors of Ampere

The NVIDIA A6000 and the A100 are like two siblings with different careers. Both are built on NVIDIA’s Ampere architecture, but on different chips: the A6000 uses the GA102 die aimed at workstations and graphics, while the A100 uses the GA100 die aimed at data centers.

- A6000:

The A6000 has more CUDA cores than the A100 (10,752 vs 6,912), which gives it strong general-purpose FP32 throughput alongside its graphics and rendering features. It has fewer tensor cores (336 vs 432) and uses GDDR6 memory, so it’s a good fit for mixed workloads: rendering, visualization, and small to mid-sized deep learning and machine learning jobs.

- A100:

On the flip side, thanks to its bigger memory bandwidth, which is kind of like having wider roads for data traffic, and its larger number of tensor cores, the A100 shines when tackling complex problems specific to deep learning. It’s all about picking the right tool for the job: if your work mixes graphics with moderate deep learning or machine learning projects, the NVIDIA A6000 might be your go-to GPU for efficiency and value. But if your work leans more towards intensive deep learning tasks requiring lots of fast data movement, the NVIDIA A100 could offer the superior performance you need.
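One practical way to check which architecture a card belongs to is its CUDA compute capability. The lookup below is a partial table written for this illustration (`ARCH_BY_CAPABILITY` and `architecture_name` are hypothetical names); with PyTorch installed, `torch.cuda.get_device_capability(0)` returns the `(major, minor)` pair for the first GPU.

```python
from typing import Dict, Tuple

# Partial mapping of CUDA compute capability to architecture family.
ARCH_BY_CAPABILITY: Dict[Tuple[int, int], str] = {
    (7, 0): "Volta",
    (7, 5): "Turing",
    (8, 0): "Ampere (GA100, e.g. A100)",
    (8, 6): "Ampere (GA10x, e.g. RTX A6000)",
    (8, 9): "Ada Lovelace",
    (9, 0): "Hopper",
}


def architecture_name(major: int, minor: int) -> str:
    """Return the architecture family for a compute capability, or 'unknown'."""
    return ARCH_BY_CAPABILITY.get((major, minor), "unknown")


# On a machine with a GPU and PyTorch, the pair could come from:
#   major, minor = torch.cuda.get_device_capability(0)
print(architecture_name(8, 0))
print(architecture_name(8, 6))
```

The A100 reports compute capability 8.0 and the RTX A6000 reports 8.6, so both land in the Ampere family.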

Memory and Bandwidth Considerations

When you’re looking into the NVIDIA A6000 and A100 GPUs for deep learning stuff, how much memory they have and how fast they can move data around are big deals.

- A6000:

With its 48GB of GDDR6 memory, the A6000 has plenty of room for many models. It isn’t as speedy at moving data, though, since it tops out at 768 GB/s, which might slow things down if you’re working with lots of information.

- A100:

The A100 has a whopping 80GB of HBM2e memory. Because it has more memory, the A100 is really good at dealing with huge amounts of data and complicated deep learning models without breaking a sweat. On top of that, it can shuffle data around super quickly at 2TB/s which helps everything run smoothly.

Even so, if your deep learning projects aren’t gigantic or too complex, the amount of memory on an A6000 should do just fine — plus it’s easier on your wallet! Deciding between these two boils down to what kind or size project you’ve got going on: bigger tasks might need something like the A100, but smaller ones could be perfectly happy with an A6000.
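A quick way to judge "bigger" versus "smaller" is to estimate how much memory a model needs and compare it with each card. The sketch below is a rough rule of thumb, not a precise calculator: it counts only parameter-related memory (no activations), uses 2 bytes per parameter for FP16 inference, and about 16 bytes per parameter for mixed-precision training with Adam (FP16 weights and gradients plus FP32 master weights and two optimizer states). The model sizes and the 10% headroom are assumptions for illustration.

```python
def fits(num_params: float, bytes_per_param: int, memory_gb: float, headroom: float = 0.9) -> bool:
    """Return True if the parameter-related memory fits in `headroom` of the card."""
    needed_gb = num_params * bytes_per_param / 1e9  # decimal GB
    return needed_gb <= memory_gb * headroom


FP16_INFERENCE = 2   # bytes per parameter
ADAM_MIXED_PRECISION = 16  # bytes per parameter, rule of thumb, excludes activations

CARDS = {"A6000": 48, "A100": 80}
# Hypothetical workloads: (label, parameter count, bytes per parameter)
WORKLOADS = [
    ("13B inference", 13e9, FP16_INFERENCE),
    ("30B inference", 30e9, FP16_INFERENCE),
    ("3B training", 3e9, ADAM_MIXED_PRECISION),
]

for label, params, bpp in WORKLOADS:
    verdicts = ", ".join(
        f"{card}: {'fits' if fits(params, bpp, gb) else 'too big'}"
        for card, gb in CARDS.items()
    )
    print(f"{label} -> {verdicts}")
```

With these assumptions, the 13B inference case fits on both cards, while the 30B inference and 3B training cases only fit on the 80GB A100.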

Real-World Applications and Use Cases

The NVIDIA A6000 and A100 GPUs are versatile tools used in various fields like scientific research, autonomous vehicles, finance, video creation, graphic design, healthcare, and architecture. They cater to real-world applications that demand substantial computational power and high-quality graphics. Here’s how different industries benefit from choosing the right GPU for their specific needs:

Design and Rendering: The A6000 is used in industries like architecture, engineering, and entertainment for 3D rendering, modelling, and visualisation tasks. It accelerates design workflows and enables faster rendering of complex scenes in software like Autodesk Maya, 3ds Max, and SolidWorks.

Data Science and AI: The A6000 is utilized in AI and machine learning applications for training and inference tasks. It’s employed in data centers and research institutions to process large datasets, train deep learning models, and accelerate AI algorithms.

Scientific Research: The A6000 aids in scientific simulations, weather forecasting, computational biology, and other research fields that require high-performance computing (HPC) capabilities. The GPU’s parallel processing power helps accelerate complex simulations and computations.

Medical Imaging: In healthcare, the A6000 assists in medical imaging tasks such as MRI, CT scans, and 3D reconstruction. It accelerates image processing and analysis, aiding in diagnostics and medical research.

Content Creation: Content creators, including video editors, animators, and graphic designers, benefit from the A6000’s performance for video editing, animation rendering, and graphic design tasks using software like Adobe Creative Suite and DaVinci Resolve.

Oil and Gas Exploration: In the oil and gas industry, the A6000 aids in seismic interpretation, reservoir modelling, and fluid dynamics simulations, helping in exploration and extraction processes.

Financial Modeling: The A6000 is used in financial institutions for risk analysis, algorithmic trading, and complex financial modelling, where rapid computation and analysis of large datasets are crucial.

Pricing and Availability for Deep Learning Environments

The choice between the NVIDIA A6000 and A100 depends on your specific needs, budget, and the types of workloads you’ll be running.

Cost Analysis and Budget Considerations

The A6000’s lower price tag and solid FP32 and FP16 capabilities make it a viable option for a range of AI development, high-performance computing, and professional rendering applications. However, if your workload demands absolute top-tier tensor-core and FP64 performance, or requires large memory capacities, the A100 remains the champion of the two.
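Price only tells half the story; what matters is cost per unit of work. The sketch below divides an hourly price by measured throughput to get a cost per million samples. Every number in it is a placeholder rather than a real price or benchmark result, and `cost_per_million_samples` is a hypothetical helper name.

```python
def cost_per_million_samples(price_per_hour: float, samples_per_second: float) -> float:
    """Cost of processing one million samples at a given hourly price."""
    samples_per_hour = samples_per_second * 3600
    return price_per_hour / samples_per_hour * 1e6


# Placeholder inputs: substitute your own rental prices and measured throughput.
a6000_cost = cost_per_million_samples(price_per_hour=1.0, samples_per_second=1000.0)
a100_cost = cost_per_million_samples(price_per_hour=2.0, samples_per_second=2500.0)

print(f"A6000: {a6000_cost:.3f} per million samples")
print(f"A100:  {a100_cost:.3f} per million samples")
print("Cheaper per sample:", "A100" if a100_cost < a6000_cost else "A6000")
```

The general rule is that the faster card is cheaper per sample only when its speedup on your workload exceeds its price ratio.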

Availability and Scalability for Research Institutions

For research institutions that need strong computing power for their deep learning and AI work, being able to get and use GPUs easily is really important. The NVIDIA A6000 and A100 GPUs are great choices for these institutions because they cater to different needs.

With the A6000, schools or labs on a tight budget have a way in. It’s priced well so many can be bought without spending too much money. This means lots of tasks can be done at the same time, making it easier to tackle big projects related to deep learning.

On another note, the A100 fits perfectly for those with bigger computational fish to fry. Its top-notch performance and greater memory bandwidth make it perfect for doing complicated stuff like running detailed simulations, teaching big AI models new tricks, or working through huge amounts of data.

The Future of GPUs in Deep Learning and AI

Looking ahead, the role of GPUs in deep learning and AI is really exciting. With technology getting better and more people wanting powerful computers for their projects, GPUs are going to be super important for making deep learning tasks faster and helping AI applications do their thing.

With new kinds of GPU designs coming out that are made just for AI and deep learning, we’re going to see them work even better and use less power. These special GPUs will be key in dealing with huge amounts of data and complicated calculations.

As we look forward to newer architectures like Hopper and Ada Lovelace, it seems like GPUs are only going to get stronger and more efficient. This means folks working on research or creating new stuff with AI can take on bigger challenges than before. It’s a pretty cool time for anyone involved in the world of AI!

Emerging Trends in GPU Technology

The world of GPU tech is changing fast, and it’s really exciting to see where it’s heading, especially for deep learning and AI. Here are some cool things happening:

With GPUs getting their own special designs just for AI, like NVIDIA’s Ampere architecture, they’re getting way better at handling AI tasks. This means they can learn and make decisions quicker.

On top of that, these GPUs are going to have more memory bandwidth. That’s a fancy way of saying they can move data around faster which is great for working with big datasets and complicated AI models without slowing down.

As these GPUs get stronger, keeping them cool so they don’t overheat becomes super important. Luckily, there are smarter cooling methods being developed to keep everything running smoothly.

Another awesome thing on the horizon is mixing up AI with quantum computing ideas. This could lead us to new kinds of GPU designs that tackle super tough problems much faster than what we’re used to today.

All in all, these changes in GPU technology mean a lot for making progress in deep learning and for researchers creating amazing AI applications.

Rent NVIDIA Series in GPU Cloud

As you can see, both the NVIDIA A6000 and A100 are good GPUs to choose from. But what if you’re still not sure which one performs better for your workload? Why not decide after trying each of them yourself? Novita AI GPU Instance makes that possible!

Rent an NVIDIA A6000 on Novita AI GPU Instance

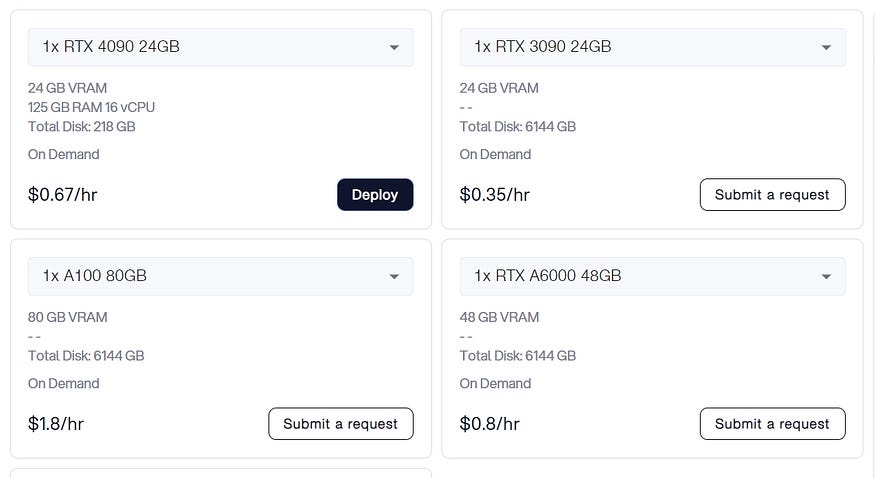

Novita AI GPU Instance provides access to high-performance GPUs such as the NVIDIA A100 SXM, RTX 4090, and RTX 3090, each with substantial VRAM and RAM, ensuring that even the most demanding AI models can be trained efficiently.

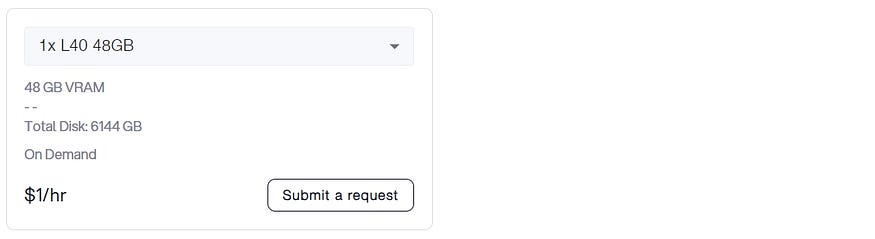

The 5 models provided by Novita AI GPU Instance are shown in the picture.

So you can easily rent the NVIDIA A6000 and A100 mentioned above based on your own needs, without buying expensive hardware outright.

What can you get from renting them in Novita AI GPU Instance?

cost-efficient: reduce cloud costs by up to 50%

flexible GPU resources that can be accessed on-demand

instant deployment

customizable templates

large-capacity storage

support for even the most demanding AI models

get 100GB free

How to start your journey in Novita AI GPU Instance:

STEP1: If you are a new subscriber, please register an account first. Then click on the GPU Instance button on our webpage.

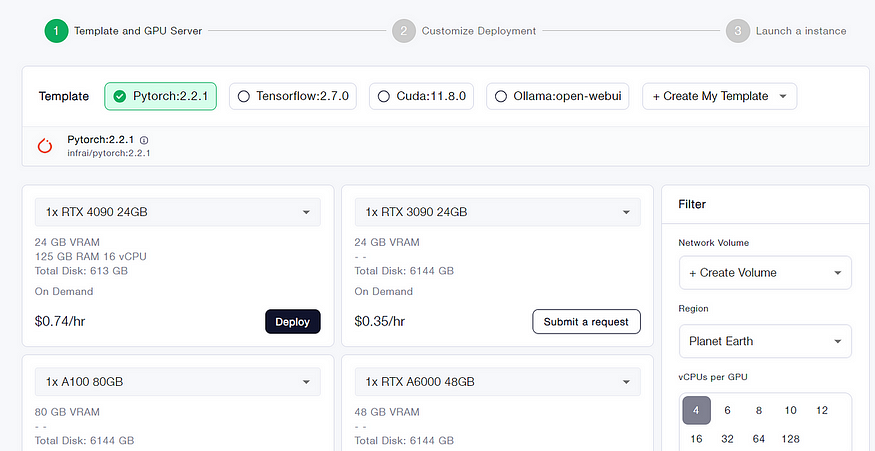

STEP2: Template and GPU Server

You can choose your own template, including PyTorch, TensorFlow, CUDA, or Ollama, according to your specific needs. You can also create your own template by clicking the button at the bottom.

Next, pick a GPU server. The service provides access to high-performance GPUs such as the NVIDIA RTX 4090 and RTX 3090, each with substantial VRAM and RAM, ensuring that even the most demanding AI models can be trained efficiently. Pick one based on your needs.

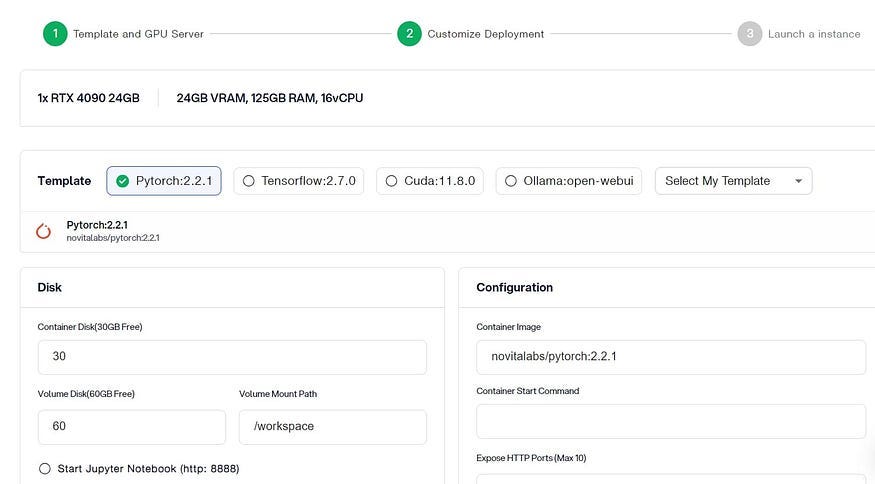

STEP3: Customize Deployment

In this section, you can customize the deployment settings according to your own needs. The Container Disk includes 30GB free and the Volume Disk includes 60GB free; if you exceed the free limit, additional charges apply.
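To see how much of a planned disk size would fall outside the free allowance, a small sketch like the following can help. It only uses the 30GB and 60GB free limits stated above; the per-GB rate is not given in this post, so the function returns billable gigabytes rather than a price. `billable_gb` and the requested sizes are hypothetical.

```python
FREE_CONTAINER_GB = 30  # free Container Disk allowance stated above
FREE_VOLUME_GB = 60     # free Volume Disk allowance stated above


def billable_gb(requested_gb: int, free_gb: int) -> int:
    """Return the gigabytes that exceed the free allowance (never negative)."""
    return max(0, requested_gb - free_gb)


# Hypothetical deployment: 50GB container disk, 40GB volume disk.
print("Container billable GB:", billable_gb(50, FREE_CONTAINER_GB))
print("Volume billable GB:", billable_gb(40, FREE_VOLUME_GB))
```

In this example only the container disk goes over its allowance, by 20GB, while the volume disk stays within the free tier.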

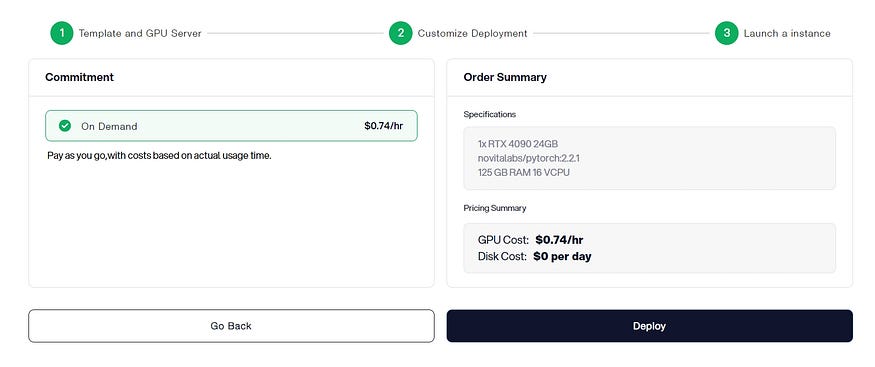

STEP4: Launch an instance

Conclusion

To wrap things up, when we look at NVIDIA’s A6000 and A100 GPUs side by side, it’s clear they each have their own set of strengths and capabilities especially for deep learning tasks. It’s really important to get a good grip on what these GPUs can do, how much they cost, and what other people think about them if you’re trying to pick the right one for your needs. With GPU technology getting better all the time, we’re likely to see some pretty cool developments in AI and machine learning soon. As folks who study this stuff keep pushing GPUs further, there’s a lot of excitement around what new innovations will come out next in deep learning.

Frequently Asked Questions

Which GPU is better for beginners in deep learning, A6000 or A100?

If you’re just starting out with deep learning, going for the NVIDIA A6000 is a smart move. It’s more budget-friendly but still gives you performance that can stand up to the A100.

How do A6000 and A100 compare in terms of support for large-scale AI models?

The A6000 and A100 GPUs can both handle big AI models. But, with its higher memory bandwidth and more computational power, the A100 is better at dealing with the tricky math and data work needed for these large-scale AI projects.

Is NVIDIA A100 better than NVIDIA RTX A6000 for stable diffusion?

Indeed, when it comes to Stable Diffusion, the NVIDIA A100 outshines the RTX A6000. With more tensor cores and greater memory bandwidth, the A100 can create high-quality images faster than what you’d get with the A6000.

Originally published at Novita AI.

Novita AI, the one-stop platform for limitless creativity that gives you access to 100+ APIs. From image generation and language processing to audio enhancement and video manipulation, cheap pay-as-you-go, it frees you from GPU maintenance hassles while building your own products. Try it for free.
