Mastering Laravel job batching Pt.2: Job batching and queue

Dominique VASSARD

Batches are just a bunch of jobs

This article is the second in the four-part series: Mastering Laravel batches

In this chapter, we'll take a closer look at how batches are handled by queues.

To get a better view of this topic, we'll use Redis queues and Telescope. In fact, we already use Redis, but combining the two tools will make one important fact about batches clear.

Install Telescope

Telescope is a monitoring tool that exposes valuable information about a running Laravel application. More info at: https://laravel.com/docs/11.x/telescope

Installation is straightforward, just follow these steps:

# Install dependency

sail composer require laravel/telescope

# Publish assets and migration

sail artisan telescope:install

# Create required tables

sail artisan migrate

You should now be able to navigate to: http://localhost/telescope

Use Redis queues (if not already)

If it's not already the case, check your .env file and ensure that the queue connection environment variable is set to redis:

QUEUE_CONNECTION=redis

Restart the queue:

sail artisan queue:restart

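If you're not familiar with queues, note that queue workers are long-running processes that keep the code they booted with in memory. For code updates to be taken into account, you have to restart the workers with the above command. It tells running workers to exit once they have finished their current job, so a worker must be started again afterwards (by hand or by a process manager).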

Run a batch and inspect what happens

Let's review our ExampleJob and Batcher command to make sure we start from a clean state, free of leftover tweaks or errors.

First, the job:

# app/Jobs/ExampleJob.php

// ...

class ExampleJob implements ShouldQueue
{
    use Batchable, Dispatchable, InteractsWithQueue, Queueable, SerializesModels;

    /**
     * Create a new job instance.
     */
    public function __construct(public int $number)
    {
    }

    /**
     * Execute the job.
     */
    public function handle(): void
    {
        // If batch is cancelled, stop execution
        if ($this->batch()->cancelled()) {
            Log::info(sprintf('%s [%d] CANCELLED: Do nothing', class_basename($this), $this->number));

            return;
        }

        // throw_if($this->number == 3, new Exception('I fail because 3.'));

        Log::info(sprintf('%s [%d] RAN', class_basename($this), $this->number));
    }
}

And our command:

# app/Console/Commands/Batcher.php

//...

class Batcher extends Command
{
    /**
     * The name and signature of the console command.
     *
     * @var string
     */
    protected $signature = 'batcher
        {--cancel= : Cancel batch with the given id}';

    /**
     * The console command description.
     *
     * @var string
     */
    protected $description = 'Batcher';

    /**
     * Execute the console command.
     */
    public function handle()
    {
        if ($this->option('cancel')) {
            $this->cancelBatch($this->option('cancel'));
        } else {
            $this->runExampleBatch();
        }
    }

    /**
     * Cancel the batch with the given id
     *
     * @param string $batch_id
     * @return void
     */
    private function cancelBatch(string $batch_id): void
    {
        // Find the batch for the given id (null when the id is unknown)
        $batch = Bus::findBatch($batch_id);

        // Cancel the batch, if it exists
        $batch?->cancel();
    }

    /**
     * Run a batch of ExampleJobs
     *
     * @return void
     */
    private function runExampleBatch(): void
    {
        Bus::batch(
            Arr::map(
                range(1, 5),
                fn ($number) => new ExampleJob($number)
            )
        )
            ->before(fn (Batch $batch) => Log::info(sprintf('Batch [%s] created.', $batch->id)))
            ->catch(function (Batch $batch, Throwable $e) {
                Log::error(sprintf('Batch [%s] failed with error [%s].', $batch->id, $e->getMessage()));
            })
            ->then(fn (Batch $batch) => Log::info(sprintf('Batch [%s] ended.', $batch->id)))
            ->progress(fn (Batch $batch) => Log::info(sprintf(
                'Batch [%s] progress : %d/%d [%d%%]',
                $batch->id,
                $batch->processedJobs(),
                $batch->totalJobs,
                $batch->progress()
            )))
            ->finally(fn (Batch $batch) => Log::info(sprintf('Batch [%s] finally ended.', $batch->id)))
            ->allowFailures()
            ->dispatch();
    }
}

Now that all the configuration is done and the files are in order, we can restart the queue worker:

sail artisan queue:restart

sail artisan queue:work

And run our command:

sail artisan batcher

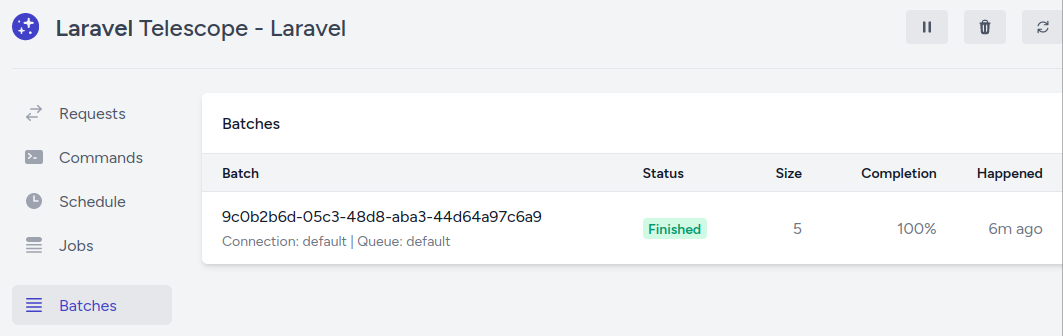

Now, if we navigate to the Batches tab of the Telescope page, we should see our batch:

If your batch has the Pending status, the queue worker is not running. You can start it with one of the following commands:

sail artisan queue:work

# OR

sail artisan queue:listen

For our usage, they will behave in a similar way.

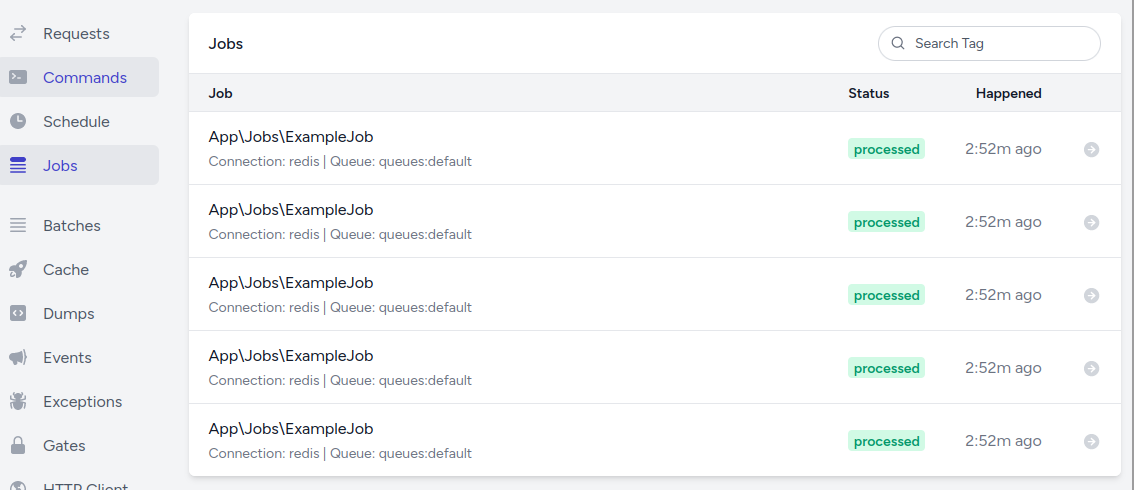

You can also open the Jobs tab to check that all 5 jobs ran.

But our attention will be on the Redis tab:

Here we can see what happened in the Redis queue:

- The eval -- Push the job onto the queue lines tell us when a job is pushed to the queue.
- The zrem queues:default:reserved... lines show a job being removed from the reserved set, which happens once a worker has popped it from the queue and handled it.

We can notice one very important thing about batches: when a batch is dispatched, all its jobs are pushed to the queue first, and only then are they executed.

Why this matters will become clear with the next test: what happens if we dispatch 2 batches consecutively? Let's find out! First, we create a new job, which is basically a copy of ExampleJob under a different name:

# app/Jobs/OtherJob.php

// ...

class OtherJob implements ShouldQueue
{
    use Batchable, Dispatchable, InteractsWithQueue, Queueable, SerializesModels;

    /**
     * Create a new job instance.
     */
    public function __construct(public int $number)
    {
    }

    /**
     * Execute the job.
     */
    public function handle(): void
    {
        // If batch is cancelled, stop execution
        if ($this->batch()->cancelled()) {
            Log::info(sprintf('%s [%d] CANCELLED: Do nothing', class_basename($this), $this->number));

            return;
        }

        // throw_if($this->number == 3, new Exception('I fail because 3.'));

        Log::info(sprintf('%s [%d] RAN', class_basename($this), $this->number));
    }
}

And we update our Batcher command to run a batch for each job type:

# app/Console/Commands/Batcher.php

//...

class Batcher extends Command
{
    //...

    /**
     * Execute the console command.
     */
    public function handle()
    {
        if ($this->option('cancel')) {
            $this->cancelBatch($this->option('cancel'));
        } else {
            $this->dispatchBatch(ExampleJob::class);
            $this->dispatchBatch(OtherJob::class);
        }
    }

    // ...

    /**
     * Run a batch of jobs
     *
     * @return void
     */
    private function dispatchBatch(string $job_class_name): void
    {
        Bus::batch(
            Arr::map(
                range(1, 5),
                fn ($number) => new $job_class_name($number)
            )
        )
        //...
    }
}

Keep in mind that a batch is dispatched, meaning that it runs asynchronously.

We might therefore expect both batches to run at the same time, or at least their jobs to alternate.

Restart the queue worker so that the updates are taken into account:

sail artisan queue:restart

sail artisan queue:work

And run our command:

sail artisan batcher

And now check Telescope:

We can see that all jobs are pushed to the queue before being executed.

And if we check our logs, we see the following:

[...] local.INFO: Batch [9c253dac-2604-4cf0-acb0-d6d3a00775ad] created.

[...] local.INFO: Batch [9c253dac-43d7-42b6-9d88-bf497e0ed2d9] created.

[...] local.INFO: ExampleJob [1] RAN

[...] local.INFO: Batch [9c253dac-2604-4cf0-acb0-d6d3a00775ad] progress : 1/5 [20%]

[...] local.INFO: ExampleJob [2] RAN

[...] local.INFO: Batch [9c253dac-2604-4cf0-acb0-d6d3a00775ad] progress : 2/5 [40%]

[...] local.INFO: ExampleJob [3] RAN

[...] local.INFO: Batch [9c253dac-2604-4cf0-acb0-d6d3a00775ad] progress : 3/5 [60%]

[...] local.INFO: ExampleJob [4] RAN

[...] local.INFO: Batch [9c253dac-2604-4cf0-acb0-d6d3a00775ad] progress : 4/5 [80%]

[...] local.INFO: ExampleJob [5] RAN

[...] local.INFO: Batch [9c253dac-2604-4cf0-acb0-d6d3a00775ad] progress : 5/5 [100%]

[...] local.INFO: Batch [9c253dac-2604-4cf0-acb0-d6d3a00775ad] ended.

[...] local.INFO: Batch [9c253dac-2604-4cf0-acb0-d6d3a00775ad] finally ended.

[...] local.INFO: OtherJob [1] RAN

[...] local.INFO: Batch [9c253dac-43d7-42b6-9d88-bf497e0ed2d9] progress : 1/5 [20%]

[...] local.INFO: OtherJob [2] RAN

[...] local.INFO: Batch [9c253dac-43d7-42b6-9d88-bf497e0ed2d9] progress : 2/5 [40%]

[...] local.INFO: OtherJob [3] RAN

[...] local.INFO: Batch [9c253dac-43d7-42b6-9d88-bf497e0ed2d9] progress : 3/5 [60%]

[...] local.INFO: OtherJob [4] RAN

[...] local.INFO: Batch [9c253dac-43d7-42b6-9d88-bf497e0ed2d9] progress : 4/5 [80%]

[...] local.INFO: OtherJob [5] RAN

[...] local.INFO: Batch [9c253dac-43d7-42b6-9d88-bf497e0ed2d9] progress : 5/5 [100%]

[...] local.INFO: Batch [9c253dac-43d7-42b6-9d88-bf497e0ed2d9] ended.

[...] local.INFO: Batch [9c253dac-43d7-42b6-9d88-bf497e0ed2d9] finally ended.

The first batch runs to completion before the second one can run its first job!

To sum up: when a batch is dispatched, all its jobs are pushed to the queue at once. With a single worker on a single queue, this means that the batch must run all its jobs before the worker can handle any other job.

Depending on your worker count, the number of queues and the number of jobs per batch, this can lead to serious delays in job execution.
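The behaviour observed above can be reproduced with a toy model. The following is a minimal sketch in plain PHP, not Laravel code: it assumes a single first-in, first-out queue served by one worker, and the function names dispatchBatchUpFront and runWorker are hypothetical.

```php
<?php

declare(strict_types=1);

// Toy model of one FIFO queue and one worker. Nothing here is Laravel code;
// every name is hypothetical and only illustrates the ordering.
function dispatchBatchUpFront(SplQueue $queue, string $label, int $count): void
{
    // Assumption: dispatching a batch pushes all of its jobs immediately.
    for ($i = 1; $i <= $count; $i++) {
        $queue->enqueue(sprintf('%s [%d]', $label, $i));
    }
}

function runWorker(SplQueue $queue): array
{
    $executed = [];

    // A single worker always takes the oldest job first.
    while (!$queue->isEmpty()) {
        $executed[] = $queue->dequeue();
    }

    return $executed;
}

$queue = new SplQueue();
dispatchBatchUpFront($queue, 'ExampleJob', 5);
dispatchBatchUpFront($queue, 'OtherJob', 5);

$order = runWorker($queue);
echo implode(PHP_EOL, $order), PHP_EOL;
```

In this model, every ExampleJob sits ahead of every OtherJob in the queue, so the second batch cannot start before the first one is drained. More workers or separate queues would change the picture, which is why the delay depends on your setup.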

What can we do to alleviate this?

Laravel chained jobs

What we're after is a way to tell the batch to process its jobs one after another, without pushing the whole list to the queue.

One way to achieve this is to use chained jobs inside our batch. Instead of feeding the batch like this:

# app/Console/Commands/Batcher.php

class Batcher extends Command
{
    //...

    private function dispatchBatch(string $job_class_name): void
    {
        Bus::batch(
            Arr::map(
                range(1, 5),
                fn ($number) => new $job_class_name($number)
            )
        )
        //...
    }
}

we can wrap the jobs in an extra array, which the batch treats as a chain:

# app/Console/Commands/Batcher.php

class Batcher extends Command
{
    //...

    private function dispatchBatch(string $job_class_name): void
    {
        Bus::batch(
            [
                // The inner array is treated as a chain of jobs
                Arr::map(
                    range(1, 5),
                    fn ($number) => new $job_class_name($number)
                )
            ]
        )
        //...
    }
}

Here, each batch runs its jobs as a chain. Because chained jobs are pushed to the queue (and run) one after another, we should expect the jobs of our two batches to be interleaved.
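To see why chaining should produce this interleaving, here is a minimal sketch in plain PHP. It is a toy model rather than Laravel code: it assumes one FIFO queue, one worker, and that only the head of each chain sits on the queue at any time. The function name runChains is hypothetical.

```php
<?php

declare(strict_types=1);

// Toy model, not Laravel code. Each chain is a list of job labels and only
// the current head of a chain is on the queue. All names are hypothetical.
function runChains(array $chains): array
{
    $queue = new SplQueue();

    // Dispatching pushes only the first job of each chain.
    foreach ($chains as $chainId => $jobs) {
        if ($jobs !== []) {
            $queue->enqueue([$chainId, 0]);
        }
    }

    $executed = [];
    while (!$queue->isEmpty()) {
        [$chainId, $index] = $queue->dequeue();
        $executed[] = $chains[$chainId][$index];

        // Once a job is done, its successor joins the back of the queue.
        if (isset($chains[$chainId][$index + 1])) {
            $queue->enqueue([$chainId, $index + 1]);
        }
    }

    return $executed;
}

$order = runChains([
    ['ExampleJob [1]', 'ExampleJob [2]', 'ExampleJob [3]'],
    ['OtherJob [1]', 'OtherJob [2]', 'OtherJob [3]'],
]);

echo implode(PHP_EOL, $order), PHP_EOL;
```

Because each successor is queued behind the other chain's pending job, the two chains take turns in this model.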

Run our command:

sail artisan batcher

Now let's check the logs, they are as expected:

[...] local.INFO: Batch [9c260075-92a1-44d3-aba0-a3a62c3738bd] created.

[...] local.INFO: Batch [9c260075-a927-49b2-81e4-6226eb13644b] created.

[...] local.INFO: ExampleJob [1] RAN

[...] local.INFO: Batch [9c260075-92a1-44d3-aba0-a3a62c3738bd] progress : 1/5 [20%]

[...] local.INFO: OtherJob [1] RAN

[...] local.INFO: Batch [9c260075-a927-49b2-81e4-6226eb13644b] progress : 1/5 [20%]

[...] local.INFO: ExampleJob [2] RAN

[...] local.INFO: Batch [9c260075-92a1-44d3-aba0-a3a62c3738bd] progress : 2/5 [40%]

[...] local.INFO: OtherJob [2] RAN

[...] local.INFO: Batch [9c260075-a927-49b2-81e4-6226eb13644b] progress : 2/5 [40%]

[...] local.INFO: ExampleJob [3] RAN

[...] local.INFO: Batch [9c260075-92a1-44d3-aba0-a3a62c3738bd] progress : 3/5 [60%]

[...] local.INFO: OtherJob [3] RAN

[...] local.INFO: Batch [9c260075-a927-49b2-81e4-6226eb13644b] progress : 3/5 [60%]

[...] local.INFO: ExampleJob [4] RAN

[...] local.INFO: Batch [9c260075-92a1-44d3-aba0-a3a62c3738bd] progress : 4/5 [80%]

[...] local.INFO: OtherJob [4] RAN

[...] local.INFO: Batch [9c260075-a927-49b2-81e4-6226eb13644b] progress : 4/5 [80%]

[...] local.INFO: ExampleJob [5] RAN

[...] local.INFO: Batch [9c260075-92a1-44d3-aba0-a3a62c3738bd] progress : 5/5 [100%]

[...] local.INFO: Batch [9c260075-92a1-44d3-aba0-a3a62c3738bd] ended.

[...] local.INFO: Batch [9c260075-92a1-44d3-aba0-a3a62c3738bd] finally ended.

[...] local.INFO: OtherJob [5] RAN

[...] local.INFO: Batch [9c260075-a927-49b2-81e4-6226eb13644b] progress : 5/5 [100%]

[...] local.INFO: Batch [9c260075-a927-49b2-81e4-6226eb13644b] ended.

[...] local.INFO: Batch [9c260075-a927-49b2-81e4-6226eb13644b] finally ended.

The drawback of this solution lies in the chained jobs themselves.

If any job fails, the chain stops and the remaining jobs are not executed.

This is by design: chains are meant for running dependent jobs, so it makes sense that they stop when a job fails.

Let's update our ExampleJob so that it throws an Exception to illustrate this:

# app/Jobs/ExampleJob.php

// ...

class ExampleJob implements ShouldQueue
{
    //...

    /**
     * Execute the job.
     */
    public function handle(): void
    {
        // ...

        throw_if($this->number == 3, new Exception('I fail because 3.'));

        Log::info(sprintf('%s [%d] RAN', class_basename($this), $this->number));
    }
}

Restart and run the queue:

sail artisan queue:restart && sail artisan queue:work

And run the command:

sail artisan batcher

And check the logs:

[...] local.INFO: Batch [9c2601fd-d351-4195-b1a1-4c1c0d6fc901] created.

[...] local.INFO: Batch [9c2601fd-ea5d-4772-9eaf-792f34516eb8] created.

[...] local.INFO: ExampleJob [1] RAN

[...] local.INFO: Batch [9c2601fd-d351-4195-b1a1-4c1c0d6fc901] progress : 1/5 [20%]

[...] local.INFO: OtherJob [1] RAN

[...] local.INFO: Batch [9c2601fd-ea5d-4772-9eaf-792f34516eb8] progress : 1/5 [20%]

[...] local.INFO: ExampleJob [2] RAN

[...] local.INFO: Batch [9c2601fd-d351-4195-b1a1-4c1c0d6fc901] progress : 2/5 [40%]

[...] local.INFO: OtherJob [2] RAN

[...] local.INFO: Batch [9c2601fd-ea5d-4772-9eaf-792f34516eb8] progress : 2/5 [40%]

[...] local.INFO: Batch [9c2601fd-d351-4195-b1a1-4c1c0d6fc901] progress : 2/5 [40%]

[...] local.ERROR: Batch [9c2601fd-d351-4195-b1a1-4c1c0d6fc901] failed with error [I fail because 3.].

[...] local.ERROR: I fail because 3. {"exception":"[object] (Exception(code: 0): I fail because 3. at /var/www/html/app/Jobs/ExampleJob.php:36)

...

[...] local.INFO: OtherJob [3] RAN

[...] local.INFO: Batch [9c2601fd-ea5d-4772-9eaf-792f34516eb8] progress : 3/5 [60%]

[...] local.INFO: OtherJob [4] RAN

[...] local.INFO: Batch [9c2601fd-ea5d-4772-9eaf-792f34516eb8] progress : 4/5 [80%]

[...] local.INFO: OtherJob [5] RAN

[...] local.INFO: Batch [9c2601fd-ea5d-4772-9eaf-792f34516eb8] progress : 5/5 [100%]

[...] local.INFO: Batch [9c2601fd-ea5d-4772-9eaf-792f34516eb8] ended.

[...] local.INFO: Batch [9c2601fd-ea5d-4772-9eaf-792f34516eb8] finally ended.

Because a chain stops as soon as one of its jobs fails, the last two jobs never ran, which means that the batch never ended. Even the finally callback is of no help here, since not every job has run at least once.

Another point to keep in mind is that batch progress is based on the number of jobs in the batch, whether they were added individually or several at a time through a chain.
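The stopping behaviour seen in these logs can be sketched with a toy model. This is plain PHP, not Laravel code, and it only assumes that a job in a chain is queued when its predecessor succeeds. The function name runChain is hypothetical.

```php
<?php

declare(strict_types=1);

// Toy model of a single chain, not Laravel code; names are hypothetical.
// Jobs are callables keyed by their number.
function runChain(array $jobs): array
{
    $result = ['ran' => [], 'failed' => null, 'skipped' => []];

    foreach ($jobs as $number => $job) {
        if ($result['failed'] !== null) {
            // The chain is broken: this job is never pushed to the queue.
            $result['skipped'][] = $number;
            continue;
        }

        try {
            $job();
            $result['ran'][] = $number;
        } catch (Throwable $e) {
            $result['failed'] = $number;
        }
    }

    return $result;
}

$jobs = [];
foreach (range(1, 5) as $number) {
    $jobs[$number] = function () use ($number): void {
        if ($number === 3) {
            throw new RuntimeException('I fail because 3.');
        }
    };
}

$result = runChain($jobs);
printf(
    "ran: %s, failed: %d, skipped: %s\n",
    implode(',', $result['ran']),
    $result['failed'],
    implode(',', $result['skipped'])
);
```

In this model, jobs 4 and 5 are never attempted, so anything that waits for all five jobs to be processed keeps waiting.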

Regarding our issue, we still need to find a solution!

Laravel "progressive" batches

In fact, the neatest solution lies in a feature offered by batches themselves.

It is possible to use what we could call a "progressive batch": start a batch with only one job and add another job to the batch when the previous one has finished. The Laravel documentation says:

Sometimes it may be useful to add additional jobs to a batch from within a batched job. This pattern can be useful when you need to batch thousands of jobs which may take too long to dispatch during a web request.

We can adapt this statement to our own needs:

Sometimes it may be useful to add additional jobs to a batch from within a batched job. This pattern can be useful when you need to batch thousands of jobs without blocking a queue with this one batch and still allow other batches to run.

Let's see if this works! First, we need to update our ExampleJob to have it add a new job to the batch. This can be done using $this->batch()->add(...) like this:

# app/Jobs/ExampleJob.php

class ExampleJob implements ShouldQueue
{
    // ...

    public function handle(): void
    {
        //...

        // throw_if($this->number == 3, new Exception('I fail because 3.'));

        Log::info(sprintf('%s [%d] RAN', class_basename($this), $this->number));

        // If job is not the fifth one, add a job to the batch
        if ($this->number < 5) {
            $this->batch()->add([new static(++$this->number)]);
        }
    }
}

Do the same for our OtherJob.

Now, in our Batcher, we can dispatch the batch with only one job and the initial data:

# app/Console/Commands/Batcher.php

class Batcher extends Command
{
    // ...

    private function dispatchBatch(string $job_class_name): void
    {
        Bus::batch([new $job_class_name(1)])
        // ...
    }
}

What will happen is the following:

1. Batcher creates the batch with only one job.
2. The batch pushes its only job to the queue.
3. The job is popped from the queue and executed.
4. At the end of handle, the job checks whether it is the last one of the batch (number < 5):
   - if it is not the last one: number is incremented and a new job is pushed to the queue
   - if it is the last one: no new job is pushed and the batch ends

Let's try to run the command. Restart the queue worker so that the updates are taken into account:
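The steps above can be sketched with a toy model of one progressive batch. This is plain PHP, not Laravel code. It assumes that a job is counted as processed only after its handle logic has returned, that is, after it has already added its successor, and that progress is the processed count over the total count, rounded to a whole percentage. The function name runProgressiveBatch is hypothetical.

```php
<?php

declare(strict_types=1);

// Toy model of one progressive batch, not Laravel code; names are hypothetical.
// Assumption: a job counts as processed only after it has added its successor.
function runProgressiveBatch(int $lastNumber): array
{
    $queue = new SplQueue();
    $queue->enqueue(1);      // the batch starts with a single job
    $totalJobs = 1;
    $processedJobs = 0;
    $progressLog = [];

    while (!$queue->isEmpty()) {
        $number = $queue->dequeue();

        // "handle": add the next job unless this one is the last.
        if ($number < $lastNumber) {
            $queue->enqueue($number + 1);
            $totalJobs++;
        }

        $processedJobs++;
        $progressLog[] = sprintf(
            '%d/%d [%d%%]',
            $processedJobs,
            $totalJobs,
            (int) round($processedJobs / $totalJobs * 100)
        );
    }

    return $progressLog;
}

print_r(runProgressiveBatch(5));
```

Under these assumptions the total only ever runs one job ahead of the processed count, so the reported percentage says little about how much work is really left.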

sail artisan queue:restart && sail artisan queue:work

And run our command:

sail artisan batcher

Now let's check the logs:

[...] local.INFO: Batch [9c260af9-4b69-456e-bb25-1fa1405dd835] created.

[...] local.INFO: Batch [9c260af9-65bb-4e7f-9e79-732308aec38d] created.

[...] local.INFO: ExampleJob [1] RAN

[...] local.INFO: Batch [9c260af9-4b69-456e-bb25-1fa1405dd835] progress : 1/2 [50%]

[...] local.INFO: OtherJob [1] RAN

[...] local.INFO: Batch [9c260af9-65bb-4e7f-9e79-732308aec38d] progress : 1/2 [50%]

[...] local.INFO: ExampleJob [2] RAN

[...] local.INFO: Batch [9c260af9-4b69-456e-bb25-1fa1405dd835] progress : 2/3 [67%]

[...] local.INFO: OtherJob [2] RAN

[...] local.INFO: Batch [9c260af9-65bb-4e7f-9e79-732308aec38d] progress : 2/3 [67%]

[...] local.INFO: ExampleJob [3] RAN

[...] local.INFO: Batch [9c260af9-4b69-456e-bb25-1fa1405dd835] progress : 3/4 [75%]

[...] local.INFO: OtherJob [3] RAN

[...] local.INFO: Batch [9c260af9-65bb-4e7f-9e79-732308aec38d] progress : 3/4 [75%]

[...] local.INFO: ExampleJob [4] RAN

[...] local.INFO: Batch [9c260af9-4b69-456e-bb25-1fa1405dd835] progress : 4/5 [80%]

[...] local.INFO: OtherJob [4] RAN

[...] local.INFO: Batch [9c260af9-65bb-4e7f-9e79-732308aec38d] progress : 4/5 [80%]

[...] local.INFO: ExampleJob [5] RAN

[...] local.INFO: Batch [9c260af9-4b69-456e-bb25-1fa1405dd835] progress : 5/5 [100%]

[...] local.INFO: Batch [9c260af9-4b69-456e-bb25-1fa1405dd835] ended.

[...] local.INFO: Batch [9c260af9-4b69-456e-bb25-1fa1405dd835] finally ended.

[...] local.INFO: OtherJob [5] RAN

[...] local.INFO: Batch [9c260af9-65bb-4e7f-9e79-732308aec38d] progress : 5/5 [100%]

[...] local.INFO: Batch [9c260af9-65bb-4e7f-9e79-732308aec38d] ended.

[...] local.INFO: Batch [9c260af9-65bb-4e7f-9e79-732308aec38d] finally ended.

The jobs are interleaved, which is exactly what we want.

The solution works, but several points need particular attention:

- Batch progress is no longer reliable: because we add jobs one by one, totalJobs and pendingJobs do not reflect the real amount of work to do, and progress says more about whether the batch has ended than about what has actually been done.
- Progressive batches can lead to an infinite loop: because they act as a kind of while loop on the queue, we need to be very careful with both the condition that decides whether a new job is added and the code that creates the new job. To experience this, just try $this->number++ instead of ++$this->number: the increment happens after the job is created, so every new job receives the same number and you end up in an infinite loop.
- When complex conditions are needed, or when processing a complex list, an external queue may be required to push further jobs to the queue with the right arguments. For example, passing a list of 5000 emails as a job argument is probably not a good idea; storing them in the database or as a queue in Redis is preferable.
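The difference between the two increment forms is easy to check in isolation. The snippet below is plain PHP with no Laravel involved; the variable names are only illustrative and stand for the value that the next job's constructor would receive.

```php
<?php

declare(strict_types=1);

// Plain PHP, no Laravel: which value would the next job's constructor get?
$number = 1;
$withPreIncrement = ++$number;   // increments first, then yields the new value

$number = 1;
$withPostIncrement = $number++;  // yields the old value, then increments

// A variant that leaves the current job's own number untouched.
$number = 1;
$withoutMutation = $number + 1;

var_dump($withPreIncrement, $withPostIncrement, $withoutMutation);
```

With the post-increment form the next job starts from the same number as the current one, so the stop condition is never reached. Passing $this->number + 1 is one way to avoid relying on the order of evaluation at all.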

Why use Laravel batches anyway?

We covered all the features of Laravel batches that we can find in the documentation. But as always with Laravel, there is more to find if you look at the framework code, which I encourage you to do. Reading Laravel's code will help you write more "Laravelic" code by grasping its core philosophy, and it will give you access to many of the features that make Laravel extensible.

So, what have we learned about batches?

Mostly that they require particular attention, and that using them comes with drawbacks in almost every situation.

Then why bother using them at all? The answer is simple: they are a beautiful abstraction that provides a clean interface for a common problem. Yes, we need to write what can be seen as workarounds, but it is nice to be able to just write this:

Bus::batch([new $job_class_name(1)])
    ->before(function (Batch $batch) {
        // The batch has been created but no jobs have been added...
    })
    ->progress(function (Batch $batch) {
        // A single job has completed successfully...
    })
    ->then(function (Batch $batch) {
        // All jobs completed successfully...
    })
    ->catch(function (Batch $batch, Throwable $e) {
        // First batch job failure detected...
    })
    ->finally(function (Batch $batch) {
        // The batch has finished executing...
    })
    // Allow batch to fail without being cancelled
    ->allowFailures()
    ->dispatch();

And don't forget the ability to cancel a batch at any moment, or to retry its failed jobs.

The high-level code is simple and clear, and the complexity can be hidden in lower-level parts. Additionally, responsibilities are clearly expressed:

- the job does only what it is supposed to do, plus launching other jobs (and this can be done by a dedicated method)
- one function does the work before the batch starts
- one function does the work when everything is done
- etc.

Sometimes, it's not about the amount of code we write, but how we can re-use it, read it and maintain it. And Laravel batches are a good basis for that.

What's next

In the next chapter, we'll build our own system on top of Laravel batches to fit a specific use case.

And we'll see how powerful batches really are.
