Day 18/40 Days of K8s: Liveness vs Readiness vs Startup Probes in K8s !! ☸️

Gopi Vivek Manne

🔍 What is a Probe?

A probe is a mechanism for inspecting and monitoring something and taking action when required.

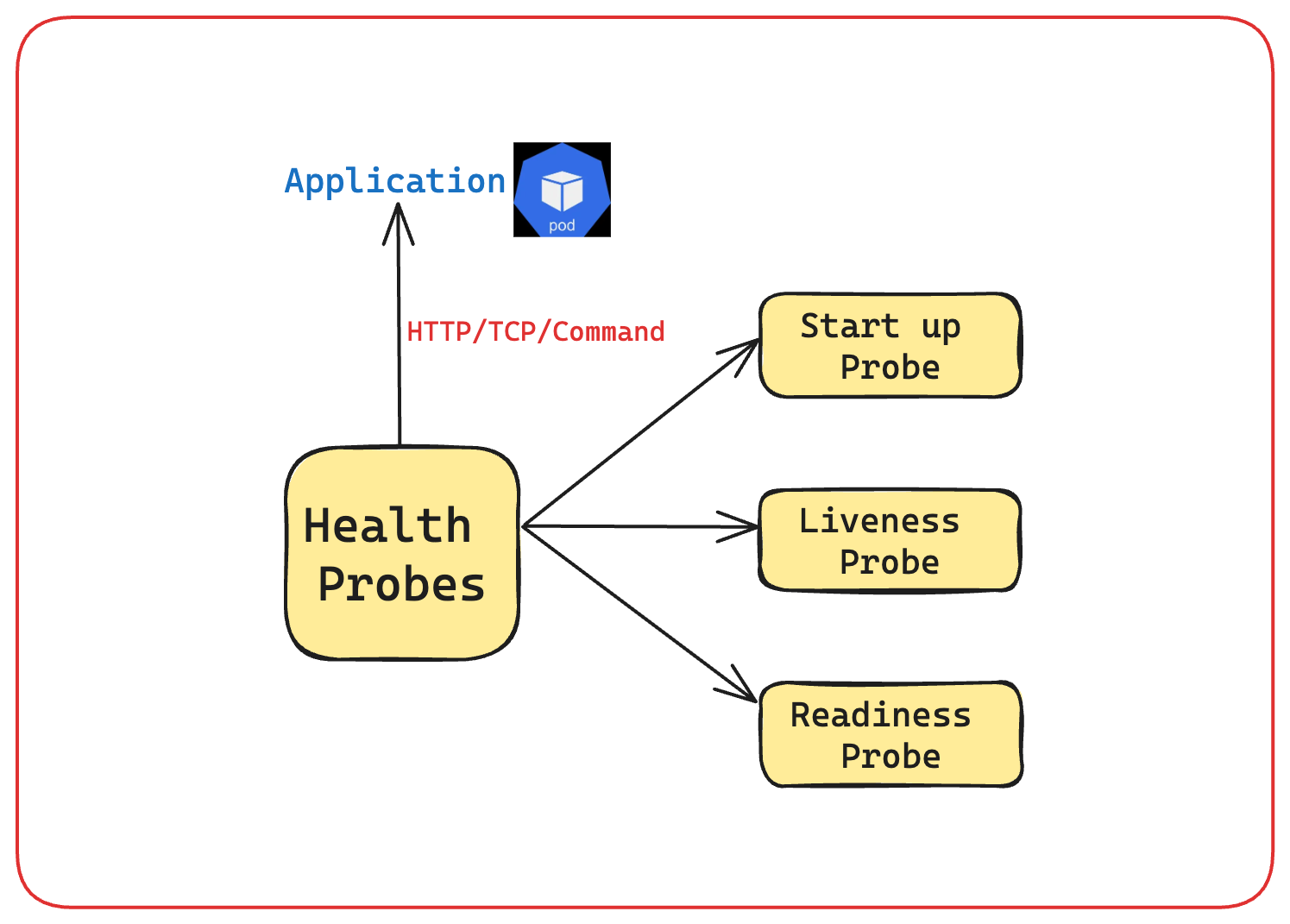

💁 Health Probes in Kubernetes

Health probes are used to monitor the health of the containers in a pod to ensure that:

- The application remains healthy.
- Containers are restarted when necessary (self-healing).
- Applications keep running with minimal user impact.

🌟 Types of Health Probes

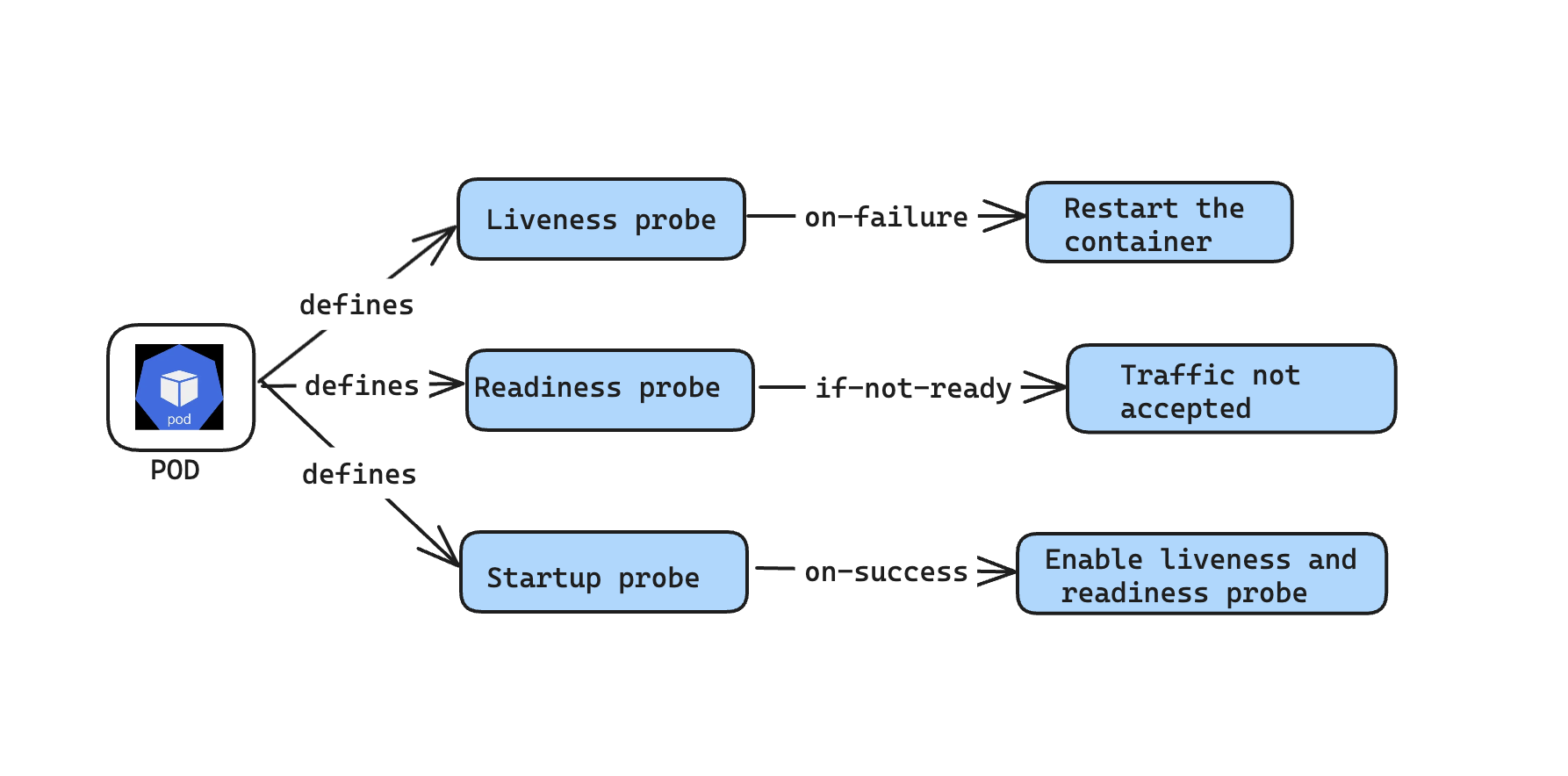

✅ Liveness Probe

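Checks whether the application is healthy. The kubelet runs the liveness probe periodically and restarts the container if the probe fails.

If the container keeps failing after multiple restarts, the pod goes into the `CrashLoopBackOff` state, and Kubernetes waits progressively longer between restart attempts.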
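A minimal sketch of a liveness probe, assuming a container that serves a health endpoint at the hypothetical path `/healthz` on port 8080. The comments point out the fields that decide how quickly an unhealthy container is restarted; the values shown for `periodSeconds`, `timeoutSeconds` and `failureThreshold` are the Kubernetes defaults.

```yaml
# Fragment of a container spec (hypothetical path and port)
livenessProbe:
  httpGet:
    path: /healthz        # hypothetical health endpoint
    port: 8080
  initialDelaySeconds: 5  # wait 5 seconds after the container starts before the first check
  periodSeconds: 10       # run the check every 10 seconds (default: 10)
  timeoutSeconds: 1       # a check taking longer than 1 second counts as a failure (default: 1)
  failureThreshold: 3     # restart the container after 3 consecutive failures (default: 3)
```

With these values, the container is restarted only after three consecutive failed checks, so a single slow response does not trigger a restart.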

✅ Readiness Probe

An application being healthy doesn't mean it is ready to accept traffic immediately; for example, it may still be loading its configuration or data.

The readiness probe checks whether the application is ready to serve requests. If it fails, the pod is marked as not ready and stops receiving traffic from Services, but unlike the liveness probe, it does not restart the container.
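A readiness probe is configured the same way as a liveness probe; only the field name changes. The sketch below assumes a hypothetical `/ready` endpoint on port 8080. While this probe fails, the pod is removed from the Service's endpoints, but the container keeps running.

```yaml
# Fragment of a container spec (hypothetical path and port)
readinessProbe:
  httpGet:
    path: /ready          # hypothetical readiness endpoint
    port: 8080
  periodSeconds: 5        # check readiness every 5 seconds
  successThreshold: 1     # one successful check marks the pod ready again (default: 1)
  failureThreshold: 3     # 3 consecutive failures mark the pod not ready (default: 3)
```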

✅ Startup Probe

This probe is used for slow-starting applications, such as legacy apps, to make sure the application has enough time to start up before the liveness probe begins checking its health. Liveness and readiness checks are disabled until the startup probe succeeds; once the startup probe confirms the application has started, the liveness probe takes over to ensure the application stays live and healthy.
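A sketch of a common pattern, assuming the same hypothetical `/healthz` endpoint on port 8080: the startup probe and the liveness probe share an endpoint, and the startup probe gives the application up to `failureThreshold × periodSeconds` = 30 × 10 = 300 seconds to start. If it never succeeds within that window, the kubelet kills the container.

```yaml
# Fragment of a container spec (hypothetical path and port)
startupProbe:
  httpGet:
    path: /healthz
    port: 8080
  failureThreshold: 30    # allow up to 30 failed checks...
  periodSeconds: 10       # ...10 seconds apart: a 300-second startup window
livenessProbe:
  httpGet:
    path: /healthz
    port: 8080
  periodSeconds: 10       # runs only after the startup probe has succeeded
  failureThreshold: 1     # after startup, restart on the first failed check
```

This keeps the liveness probe strict once the application is up, without having to inflate `initialDelaySeconds` to cover the worst-case startup time.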

✳ Health Check Methods

All three health probes can perform health checks using:

- HTTP: the probe periodically sends an HTTP GET request to the configured path and port; any response status code from 200 to 399 counts as a successful check.
- TCP: the probe tries to open a TCP connection to the specified port on the container; if the connection can be established, the check is successful.
- Command: the probe executes a command inside the container; the check is successful if the command exits with code 0.
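For reference, here is a sketch of how each method is written in a probe. Each probe uses exactly one method; here the three methods are spread across the three probe types of a single container. The command, path and port are placeholders.

```yaml
# Fragment of a container spec; command, path and port are placeholders
livenessProbe:
  exec:                   # Command: succeeds if the command exits with code 0
    command:
    - cat
    - /tmp/healthy
readinessProbe:
  httpGet:                # HTTP: succeeds on a status code from 200 to 399
    path: /ready
    port: 8080
startupProbe:
  tcpSocket:              # TCP: succeeds if a connection to the port can be opened
    port: 8080
```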

In practice, these probes are usually combined, most often liveness and readiness probes together.

✳ Examples: Configuring Liveness, Readiness and Startup Probes

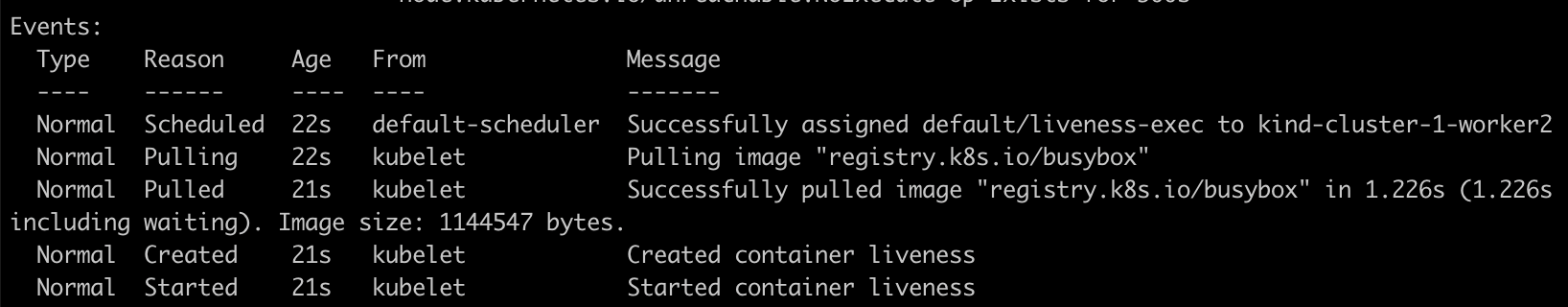

Command Health Check

Create a Pod that runs a container based on the `registry.k8s.io/busybox` image.

```yaml
# The liveness probe runs a command inside the container;
# if the command returns exit code 0, the check is successful.
apiVersion: v1
kind: Pod
metadata:
  labels:
    test: liveness
  name: liveness-exec
spec:
  containers:
  - name: liveness
    image: registry.k8s.io/busybox
    args:
    - /bin/sh
    - -c
    - touch /tmp/healthy; sleep 30; rm -f /tmp/healthy; sleep 600
    livenessProbe:
      exec:
        command:
        - cat
        - /tmp/healthy
      initialDelaySeconds: 5 # wait 5 seconds before performing the first health check
      periodSeconds: 5       # perform the health check every 5 seconds
```

Create the pod and view the pod events:

```bash
kubectl describe pod liveness-exec
```

The output indicates that no liveness probes have failed yet.

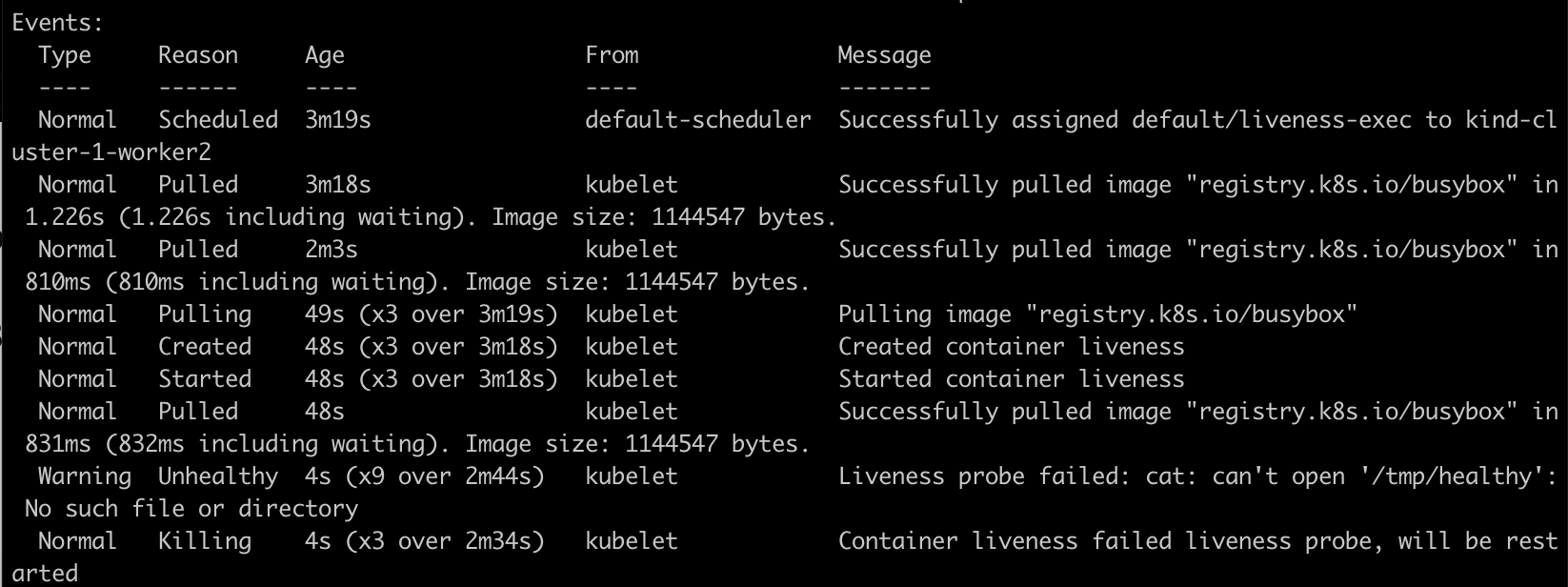

Wait 35 seconds and view the Pod events again:

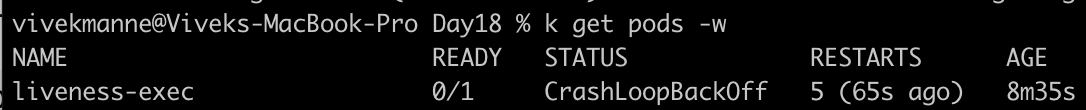

This shows that the liveness probe has failed, causing the container to be killed and restarted. The cycle then repeats: each time the container restarts, it creates `/tmp/healthy`, deletes it after 30 seconds, and the liveness probe starts failing again. After multiple restarts, the pod enters the `CrashLoopBackOff` state.

HTTP Health check

Create a pod using this YAML file:

```yaml
apiVersion: v1
kind: Pod
metadata:
  labels:
    test: liveness
  name: liveness-http
spec:
  containers:
  - name: liveness
    image: registry.k8s.io/e2e-test-images/agnhost:2.40
    args:
    - liveness
    livenessProbe:
      httpGet:
        path: /healthz
        port: 8080
        httpHeaders:
        - name: Custom-Header
          value: Awesome
      initialDelaySeconds: 3 # wait 3 seconds before performing the first health check
      periodSeconds: 3       # perform the HTTP health check against the container every 3 seconds
```

Here the liveness probe performs the health check by sending an HTTP GET request, including the custom header, to the container on the specified path and port.

View the pod events

```bash
kubectl describe pod liveness-http
```

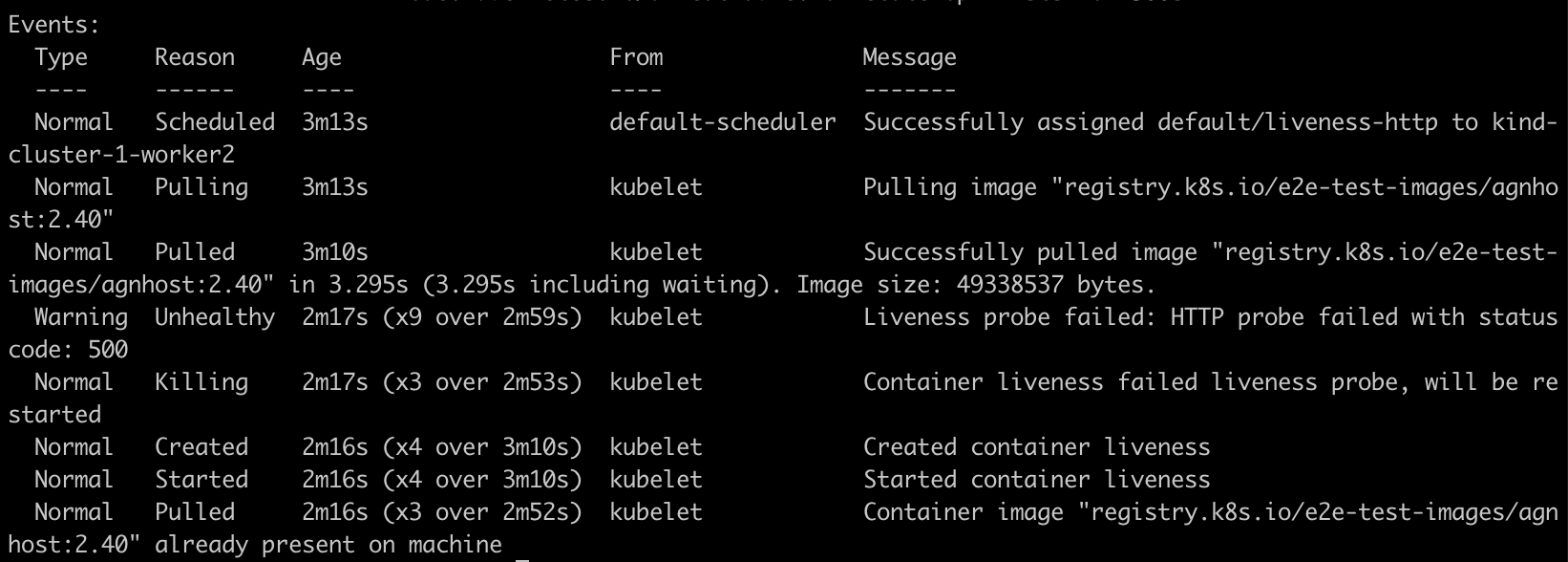

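The Pod events show that the liveness probe has failed and the container has been restarted. In this test image, the `/healthz` handler returns a status of 200 for the first 10 seconds after the container starts and a status of 500 after that, so the probe starts failing and the kubelet restarts the container.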

TCP Health check

Use this config file to create a pod:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: goproxy
  labels:
    app: goproxy
spec:
  containers:
  - name: goproxy
    image: registry.k8s.io/goproxy:0.1
    ports:
    - containerPort: 8080
    readinessProbe:
      # Readiness probe checks readiness by opening a TCP connection to port 8080 on the container
      tcpSocket:
        port: 8080
      initialDelaySeconds: 10
      periodSeconds: 5
    livenessProbe:
      # Liveness probe checks health by opening a TCP connection to port 8080 on the container
      tcpSocket:
        port: 8080
      initialDelaySeconds: 10 # wait 10 seconds before performing the first health check
      periodSeconds: 5        # perform the health check every 5 seconds
      # failureThreshold (not set here, so the default of 3 applies): the number of consecutive
      # failed liveness probes before Kubernetes considers the container unhealthy and restarts it.
```

This example uses both readiness and liveness probes; both perform their checks by opening a TCP connection to port 8080 on the container. View the pod events:

```bash
kubectl describe pod goproxy
```

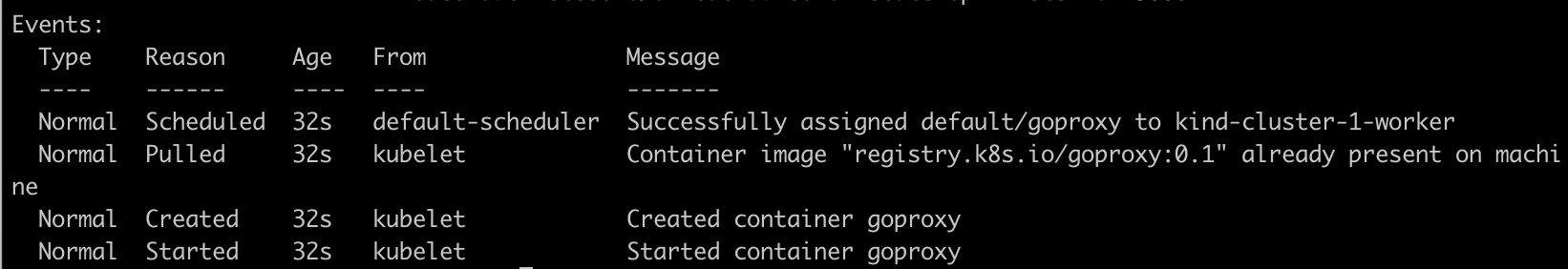

Since the container is running on port

8080, both health checks are successful and pod is in running state.Let's change the Liveness probe port to

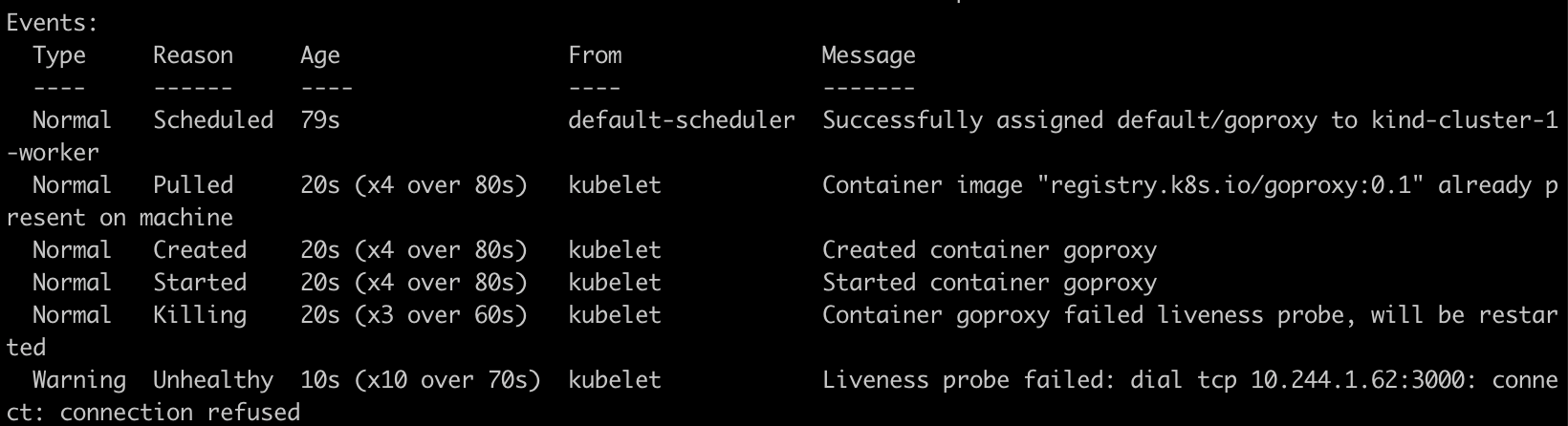

3000now, and either forcefully recreate the pod using--forcein the command or redeploy the pod again after removing the existing pod.kubectl apply -f liveness-tcp.yaml --force pod/goproxy configured kubectl describe pod goproxy

As you can see, the liveness probe failed with the error `connection refused`: it tried to open port 3000 on the container, but the container is listening on port 8080.
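One way to avoid this kind of mismatch is to give the container port a name and reference that name from the probes, so the probes always follow the container's port. A sketch of the relevant part of the goproxy container, assuming a port name of `proxy-port` (a name chosen here for illustration):

```yaml
# Fragment of the goproxy container spec; "proxy-port" is a hypothetical port name
ports:
- name: proxy-port
  containerPort: 8080
readinessProbe:
  tcpSocket:
    port: proxy-port      # resolves to containerPort 8080
  initialDelaySeconds: 10
  periodSeconds: 5
livenessProbe:
  tcpSocket:
    port: proxy-port      # resolves to containerPort 8080
  initialDelaySeconds: 10
  periodSeconds: 5
```

If the container port ever changes, only `containerPort` needs to be updated.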

NOTE: The kubelet runs readiness and liveness probes independently of each other, throughout the lifetime of the container.

#Kubernetes #ReadinessProbe #LivenessProbe #StartupProbe #40DaysofKubernetes #CKASeries

Written by

Gopi Vivek Manne

I'm Gopi Vivek Manne, a passionate DevOps Cloud Engineer with a strong focus on AWS cloud migrations. I have expertise in a range of technologies, including AWS, Linux, Jenkins, Bitbucket, GitHub Actions, Terraform, Docker, Kubernetes, Ansible, SonarQube, JUnit, AppScan, Prometheus, Grafana, Zabbix, and container orchestration. I'm constantly learning and exploring new ways to optimize and automate workflows, and I enjoy sharing my experiences and knowledge with others in the tech community. Follow me for insights, tips, and best practices on all things DevOps and cloud engineering!